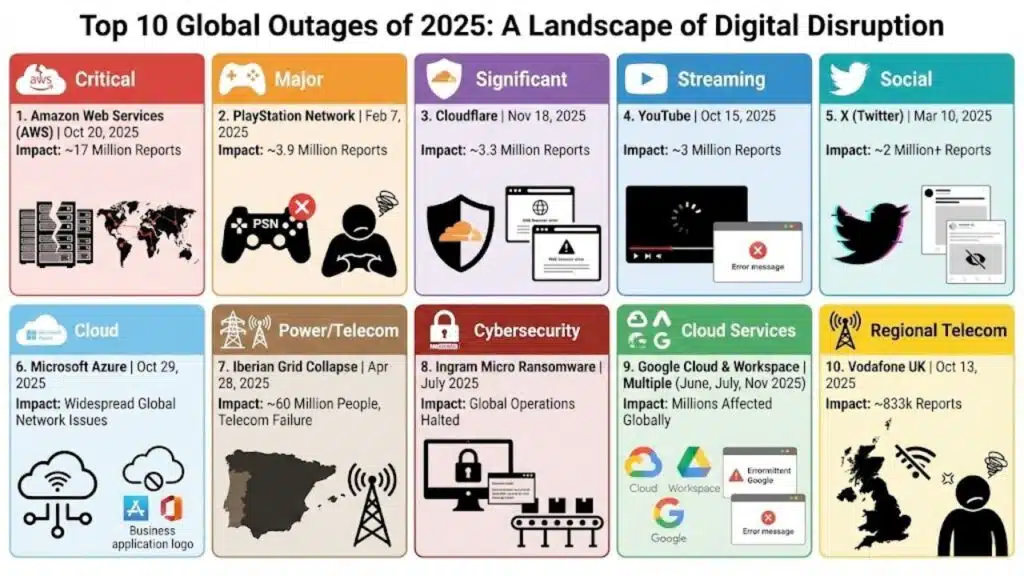

In 2025, big online services did not fail in quiet ways. They failed in public. People could not pay, message, join calls, log in, or reach apps they use every day. What most readers want is clarity—outages with root causes—so they can understand what really happened and what to do next.

This article ranks ten major outages of 2025. For each one, you will see what broke, how long it lasted, who felt it, and the confir med trigger behind it. I also explain the patterns that keep showing up across cloud outages, DNS failures, configuration mistakes, and cyber incidents.

Outages with Root Causes: The Fast Guide to 2025’s Biggest Breakdowns

Use this section to skim. Then jump to the outage you care about.

Quick Summary (Top Outages at a Glance)

| Rank | Date (2025) | Service / Platform | What broke (simple) | Duration (approx.) | Root cause category |

|---|---|---|---|---|---|

| 1 | Oct 19–20 | AWS (US-EAST-1) | DynamoDB DNS resolution failed and spread | ~15+ hours | DNS automation / race condition |

| 2 | Nov 18 | Cloudflare | A generated bot file grew too large and crashed systems | ~3–6 hours | Config pipeline / data permissions |

| 3 | Jun 12 | Google Cloud | Service Control crashed after a global policy change | ~7+ hours | Control plane bug / policy data |

| 4 | Oct 29 | Azure Front Door | Bad metadata and async processing led to crashes | Multi-hour | Config/metadata / latent bug |

| 5 | Mar 1–2 | Microsoft 365 (Outlook) | Access and login issues; rollback applied | ~hours | Problematic code change |

| 6 | Feb 26 | Slack | Messaging and login degraded during incident | ~9.5 hours | DB maintenance + caching defect |

| 7 | Apr 16 | Zoom | zoom.us stopped resolving due to registry block | ~2 hours | Domain/DNS control failure |

| 8 | May 29 | SentinelOne | Platform connectivity lost after route removal | ~hours | Automation / routing flaw |

| 9 | Jul 3 onward | Ingram Micro | Business systems disrupted during ransomware response | Multi-day | Ransomware / ops shutdown |

| 10 | Jan | Conduent | State payment and support systems disrupted | Days (varied) | Cyber incident |

Notes: durations vary by region and product. Some incidents recover core functions first, then clear backlogs later.

What Counts as a “Major Outage” in This List?

To make this list useful, each outage meets at least one of these rules:

- It disrupted a very large number of users, based on verified reporting or large-scale public signals.

- It hit a major platform layer like cloud, edge/CDN, domain/DNS, or core work tools.

- It affected critical services like payments, public benefits, or security operations.

- There is a credible explanation from an official postmortem, a status page, a company statement, or high-quality reporting.

This also means I do not include rumors. If the root cause was not confirmed, it did not make the list.

This is why the article highlights outages with root causes instead of “it was down and nobody knows why.”

Top 10 Outages of 2025 (Ranked)

Below, each outage includes: a plain timeline, what users saw (think API timeouts, login failures, “SOS only”), the likely root cause pattern, and the practical fix categories (change management, circuit breakers, multi-region).

1. AWS Outage (US-EAST-1) — October 19–20, 2025

What happened

AWS had a major disruption tied to Amazon DynamoDB in the US-EAST-1 region (Northern Virginia). Many customer apps saw errors because key AWS services could not reach DynamoDB endpoints reliably. AWS later said the larger event came from DNS resolution issues tied to DynamoDB endpoints.

Timeline (high level)

AWS said the disruption started on October 19–20 and that by 12:26 AM PDT on Oct 20 it had identified the event as DNS resolution issues for DynamoDB endpoints, with mitigation of that DNS issue by 2:24 AM PDT, followed by slower recovery for other subsystems and backlogs.

Ars Technica reported the incident lasted 15 hours and 32 minutes, citing AWS engineers.

Who was affected

When AWS US-EAST-1 has a deep issue, the impact can spread fast. Many companies run critical workloads there. That can include consumer apps, business dashboards, and behind-the-scenes services that power logins and payments. Reuters described broad disruption across many services that rely on AWS.

Root cause (confirmed trigger)

AWS and multiple reports explained a fault in DynamoDB’s automated DNS management. AWS described DNS resolution issues for DynamoDB service endpoints. Reporting on the post-event details said a race condition left an empty DNS record for a regional endpoint.

Why it spread

- DNS is a “front door” for service discovery. If DNS points nowhere, systems cannot connect.

- DynamoDB is a core dependency for many other services. So one DNS error can become a chain reaction.

- After the initial fix, internal backlogs and impaired subsystems can slow full recovery. AWS said some internal subsystems stayed impaired and it throttled some operations to facilitate recovery.

What changed after

Reporting said AWS temporarily disabled the affected DynamoDB DNS automation globally while it added safeguards.

Key lesson

Automated DNS management needs strong brakes. Test the edge cases. Make rollback easy. Keep a safe manual path.

2. Cloudflare Global Outage — November 18, 2025

What happened

Cloudflare suffered a global outage. Many websites behind Cloudflare showed error pages. Media outlets reported that large services were affected because Cloudflare sits between users and many sites.

Timeline (high level)

Cloudflare said the network began failing to deliver core traffic at 11:20 UTC and core recovery progressed after it deployed a fix and rolled back a bad artifact.

Who was affected

Cloudflare protects and accelerates a huge portion of the web. So its failures can look like “many sites are down,” even though the origin servers may be fine. The Verge listed a wide set of affected services during the incident.

Root cause (confirmed trigger)

Cloudflare’s postmortem said the outage was not caused by an attack. It was triggered by a database permissions change. That change made a query output multiple entries into a Bot Management “feature file.” The file doubled in size, exceeded a hard limit, and caused failures in systems that process traffic.

Why it spread

- The feature file was generated and distributed as part of normal operations.

- The failure mode was harsh: once the file exceeded the limit, the software could crash instead of degrading gently.

- A single bad artifact spread fast across a global network.

What changed after

Cloudflare described changes to reduce risk in generation logic, validation, and rollout safety for critical artifacts like this file.

Key lesson

Treat config pipelines as production systems. Add strong validation. Limit blast radius. Build safer failure modes.

3. Google Cloud Outage — June 12, 2025

What happened

Google Cloud had a major disruption. Many Google Cloud products and external services saw elevated errors. Reuters reported that services like Spotify and Discord saw major spikes in outage reports at the same time.

Timeline (high level)

Google’s official incident page lists the incident from 2025-06-12 10:51 to 18:18 (US/Pacific).

Reuters described large user-report spikes during that window.

Who was affected

This was not just “a Google problem.” It spilled into apps and services that use Google Cloud. Reuters cited large numbers of user reports, such as tens of thousands for Spotify and thousands for Google Cloud and Discord.

Root cause (confirmed trigger)

Google’s incident report, summarized by The Register, said a new feature was added to Service Control to support extra quota checks. The failing code path was not exercised during rollout because it needed a specific policy change to trigger it. Then, on June 12, a policy change with unintended blank fields replicated globally and triggered a crash loop in Service Control.

Why it spread

- Service Control sits in the request path for many APIs. If it crashes, many products fail together.

- The policy data replicated fast across regions, so the trigger became global quickly.

What changed after

Google described mitigation using a “red button” approach and changes to prevent similar crash loops from taking down large parts of the platform.

Key lesson

Control planes must fail safely. A bad policy field should not crash the gatekeeper for a whole cloud.

4. Microsoft Azure Front Door Outage — October 29, 2025

What happened

Azure Front Door (AFD), a global edge delivery service, had a major incident that caused service degradation and customer impact. Third-party monitoring teams also observed global issues.

Timeline (high level)

Microsoft described two October incidents (Oct 9 and Oct 29) and the lessons learned. The Oct 29 incident was customer-impacting and involved broad AFD degradation.

Who was affected

When an edge front door breaks, many apps fail in similar ways. Users see timeouts, broken sign-in flows, and failed connections. AFD also serves both Microsoft services and many customer apps.

Root cause (confirmed trigger)

Microsoft’s Azure Networking Blog said incompatible customer configuration metadata progressed through protection systems. Then a delayed async processing task resulted in a crash due to another latent defect, which impacted connectivity and DNS resolutions for applications onboarded to AFD.

Why it spread

- Edge services concentrate traffic, so a data-plane crash hits a lot of users fast.

- Bad metadata can slip through if validation is incomplete.

- “Last known good” snapshots can be risky if they accidentally capture a bad state.

What changed after

Microsoft described work to strengthen protections and validate metadata earlier, with changes aimed at reducing the chance of bad states spreading globally.

Key lesson

Validate config and metadata early. Use strict canaries. Keep a true safe rollback point.

5. Microsoft 365 Outage (Outlook) — March 1–2, 2025

What happened

Microsoft 365 users reported trouble logging in and using Outlook services. Microsoft said it identified a likely cause and reverted code to reduce impact. theregister.com+1

Timeline (high level)

The Register reported issues started around 2100 UTC on a Saturday and that Microsoft blamed a code change and reverted it.

Who was affected

Outlook downtime hits both personal users and businesses. Even a few hours can break customer support, internal comms, and login flows.

Root cause (confirmed trigger)

Microsoft attributed it to a “problematic code change.” Reports said Microsoft reverted the suspected code to alleviate the impact.

Key lesson

Release safety is reliability. Canary rollout plus fast rollback often matters more than fancy architecture.

6. Slack Outage — February 26, 2025

What happened

Slack users could not send or receive messages reliably, load channels, use workflows, or even log in for parts of the day.

Timeline (high level)

Slack’s status page lists the incident from 6:45 AM PST to 4:13 PM PST.

Who was affected

Slack is a work backbone for many teams. When Slack degrades, incident response can slow down, support teams lose coordination, and work stalls.

Root cause [confirmed trigger]

Slack said the incident was caused by a maintenance action in a database system, combined with a latency defect in the caching system. That mix overloaded the database and caused about 50% of instances relying on it to become unavailable.

Key lesson

Test the “boring stuff.” Routine maintenance can expose hidden defects and cause big outages.

7. Zoom Outage — April 16, 2025

What happened

Zoom meetings and related services failed for many users because the zoom.us domain did not resolve reliably.

Timeline (high level)

GoDaddy Registry stated that on April 16, between 2:25 PM ET and 4:12 PM ET, zoom.us was not available due to a server block.

Who was affected

Zoom is used for work, school, and support. A domain failure blocks all of it at once.

Root cause (confirmed trigger)

GoDaddy Registry said the domain was blocked due to a server block. It stated that Zoom, Markmonitor (Zoom’s registrar), and GoDaddy worked quickly to remove it, and that there was no product, security, or network failure during the incident.

Additional reporting described a communication mishap between the registrar and the registry.

Key lesson

Your domain is critical infrastructure. Use registry lock, tight controls, and monitoring for DNS and domain status.

8. SentinelOne Global Service Disruption — May 29, 2025

What happened

SentinelOne customers lost access to key platform services and the management console. SentinelOne said this was not a security incident, but it still disrupted visibility for security teams.

Timeline (high level)

Coverage described an hours-long global disruption, with recovery and backlog processing after services returned. SentinelOne published an official RCA.

Who was affected

Even if endpoints still protect devices, losing console access hurts. Security teams need logs, alerts, and control during real incidents.

Root cause (confirmed trigger)

SentinelOne’s RCA said a software flaw in an infrastructure control system removed critical network routes, causing a widespread loss of connectivity within the platform. SentinelOne said it was not security-related.

Key lesson

Automation that changes routes must be limited and reversible. High-risk actions need approval gates and safer defaults.

9. Ingram Micro Outage — July 2025 [Ransomware]

What happened

Ingram Micro, a large IT distributor, suffered a multi-day disruption tied to ransomware. That disrupted ordering and other operations for many customers.

Timeline (high level)

Ingram Micro issued a statement on July 5, 2025 saying it identified ransomware on some internal systems and took certain systems offline to secure the environment.

Reuters reported similar details and noted the company notified law enforcement and began an investigation with cybersecurity experts.

Who was affected

Distributors sit in the supply chain. So downtime can slow ordering, licensing, and shipping for many resellers and businesses.

Root cause (confirmed trigger)

The company confirmed ransomware was identified on internal systems, and it took systems offline as part of the response.

Key lesson

Ransomware response often requires shutdowns. Businesses need backup ordering paths, clean backups, and tested restore plans.

10. Conduent Disruption — January 2025 [Cyber Incident]

What happened

Conduent, a government and business services contractor, confirmed an outage tied to a cybersecurity incident. The disruption affected state systems, including payment processing in some cases.

Who was affected

These were not “nice-to-have” services. They included payment processing and social support systems in some states. Cybersecurity Dive reported that Wisconsin was one of several states affected by delays linked to the incident.

Root cause (confirmed trigger)

Conduent confirmed the outage was due to a cyber incident. Public reporting did not always include deep technical details about the initial entry point, but the company confirmed the incident itself.

Impact example (with numbers)

GovTech reported a case where a cyber incident temporarily halted child support payments, preventing an estimated 121,000 families from receiving around $27 million in collective payments before resolution.

Key lesson

Critical public services need a fallback plan. Vendor risk must be treated like infrastructure risk.

Root Causes Explained [Why Outages Keep Happening]

When you step back, these outages look different. But the causes often repeat.

1. Unsafe change and weak validation

A permissions update broke Cloudflare’s file generation.

A metadata protection gap allowed bad config states in Azure Front Door.

A code change disrupted Microsoft 365.

This is why teams should study outages with root causes. They show that change control is not paperwork. It is a safety system.

2. DNS and domain control failures

DNS is simple in theory. In real life, DNS is fragile because it connects everything.

- AWS described DNS resolution issues tied to DynamoDB endpoints.

- Zoom’s domain was blocked at the registry level, which broke resolution for zoom.us.

If your name does not resolve, your service can disappear.

3. Control plane chokepoints

Google Cloud showed how a control plane crash can take down many APIs. It began when a globally replicated policy change triggered an untested code path and crash loop.

A healthy platform needs a control plane that degrades safely.

4. Cyber incidents and forced shutdowns

Ingram Micro confirmed it took systems offline after ransomware was identified.

Conduent confirmed a cybersecurity incident behind its outage.

Sometimes the outage is not the attack. It is the containment.

Patterns We Saw in 2025

Cascading failures are common

Modern services depend on shared layers. A DNS issue can become a database issue, which becomes an auth issue, which becomes a user login issue. AWS and Google Cloud are strong examples of cascade behavior.

Fast rollback reduces downtime

When teams can roll back quickly, user harm shrinks.

- Cloudflare replaced the bad file and restored traffic.

- Microsoft reverted a problematic code change.

- Google used a high-impact mitigation step to stop the failing path and begin recovery.

“Small” events can be huge at scale

A permission change. A policy edit. A database maintenance action. These are everyday tasks. At scale, they can take down the world if safety checks are weak.

How to Reduce Downtime [Practical Checklist]

You cannot prevent every failure. But you can reduce how often it happens and how long it lasts. This checklist is made for real teams, not perfect teams.

Architecture

- Avoid single-region critical paths for core features when you can.

- Use isolation. Cells, zones, and strict limits reduce blast radius.

- Use multi-provider DNS for critical services, and test failover.

Change and release safety

- Roll out changes in stages. Use canary releases.

- Treat config like code. Validate inputs and outputs.

- Keep rollback fast. Practice it often.

Monitoring and incident response

- Monitor the user path. Login, checkout, send message, join meeting.

- Monitor dependencies like DNS, edge gateways, and databases.

- Keep clear incident roles. Keep updates short and frequent.

Resilience testing

- Run “game days.” Break things on purpose in a safe way.

- Test boring events like maintenance and permission changes under load.

- Test what happens if one key dependency is gone.

If you want fewer repeat incidents, use this checklist to turn outages with root causes into action items in your own system.

Final Words

The biggest outages of 2025 were not random. Many started with normal actions: a config change, a policy update, a maintenance job, or an automated system doing its job the wrong way. Then they spread across shared layers.

The most useful habit you can build is to read and learn from outages with root causes. They show where systems are fragile. They show where rollback is slow. They show where “small” changes can cause big harm. If you take those lessons seriously, you can reduce downtime, limit blast radius, and recover faster the next time something breaks.