The era of “post-publication correction” is ending. With trust in media at historic lows and the EU AI Act’s full compliance window closing, newsrooms are deploying “Real-Time Bias Meters”—dashboard tools that flag loaded language, gender skew, and political framing as journalists type. This isn’t just about ethics; it is the new economic survival strategy for media.

Key Takeaways

- The Shift to Pre-Emptive Action: Unlike previous tools that analyzed published content, 2026-era tools like Datavault AI’s meter operate inside the Content Management System (CMS), offering “spell-check for neutrality” before a story is published.

- Regulatory Catalyst: The EU AI Act (in force since August 2024, with obligations phasing in through 2025 and 2026) and the Digital Services Act have forced platforms to quantify and mitigate “systemic risks,” effectively mandating bias monitoring for major outlets.

- The “Brand Safety” Premium: Advertisers are increasingly using “Bias Scores” to determine ad spend, defunding polarized content automatically.

- The Human-AI Loop: While efficiency has risen, journalists report “editorial friction,” fearing that algorithmic neutrality promotes “sycophancy”—prioritizing agreeableness over hard truths.

The Algorithmic Editor: How We Got Here

To understand the sudden ubiquity of real-time bias meters in early 2026, we must look at the trajectory of the last two years. In 2024, the primary concern was Generative AI hallucination—robots making things up. By 2025, the conversation shifted to Generative AI manipulation—robots subtly twisting narratives.

The turning point occurred in late 2025, following the “Deepfake Fatigue” crisis where major elections were marred not just by fake videos, but by AI-generated text that flooded social ecosystems with hyper-partisan slant. The 2025 Reuters Institute Digital News Report highlighted a critical stat: while 90% of the public was aware of AI tools, trust in news had plummeted, with users unable to distinguish between human reporting and “synthetic opinion.”

Simultaneously, the European Commission’s enforcement of the Digital Services Act (DSA) and the AI Act created a pincer movement. Platforms like X (formerly Twitter) faced DSA penalties and scrutiny over transparency and algorithmic amplification, leading to Elon Musk’s January 2026 pledge to open-source recommendation algorithms weekly. This regulatory heat forced media companies to move from defending their neutrality to proving it mathematically.

The Technology of “Neutrality”

The new standard, exemplified by tools like the Media Bias Detector (updated early 2026) and Datavault AI’s real-time meter (integrated with Fintech.TV in Jan 2026), relies on advanced Large Language Models (LLMs) that do more than sentiment analysis.

These systems utilize Frame Semantics and Demographic Weighting. As a journalist writes a headline, the sidebar doesn’t just say “Negative Sentiment.” It warns: “This phrasing correlates with high-arousal partisan clickbait (85% confidence). Consider ‘stated’ instead of ‘claimed’.”
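The sidebar behavior described above can be approximated, very crudely, with a substitution table. This is a minimal sketch assuming a hand-written word list; the meters discussed here reportedly use LLM frame analysis instead, and `LOADED_TERMS`, `Flag`, and `flag_loaded_language` are hypothetical names, not any vendor’s API.

```python
import re
from dataclasses import dataclass

# Hypothetical substitution table: loaded verb -> more neutral alternative.
# Real meters reportedly rely on LLM frame analysis, not a fixed word list.
LOADED_TERMS = {
    "claimed": "stated",
    "slammed": "criticized",
    "admitted": "said",
}

@dataclass
class Flag:
    term: str        # the loaded word exactly as written in the draft
    start: int       # character offset, so a CMS sidebar could highlight it
    suggestion: str  # neutral alternative to offer the writer

def flag_loaded_language(draft: str) -> list[Flag]:
    """Return one Flag per loaded term in the draft, in order of appearance."""
    pattern = r"\b(" + "|".join(map(re.escape, LOADED_TERMS)) + r")\b"
    return [
        Flag(m.group(0), m.start(), LOADED_TERMS[m.group(0).lower()])
        for m in re.finditer(pattern, draft, flags=re.IGNORECASE)
    ]

for flag in flag_loaded_language("Senator claimed the bill was dead"):
    print(f"Consider '{flag.suggestion}' instead of '{flag.term}' (offset {flag.start})")
# prints: Consider 'stated' instead of 'claimed' (offset 8)
```

A word list like this has no sense of context (it would also flag “claimed the prize”), which is the gap the frame-semantic approach is supposed to close.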

This is a technological leap from the “static audits” of 2024, which were retroactive. The 2026 approach is prophylactic. By integrating directly into the CMS, these meters act as guardrails, flagging biased phrasing before it enters the information ecosystem. However, critics argue this sanitization leads to “beige journalism”: technically neutral but devoid of the biting voice often necessary for accountability reporting.
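The guardrail idea can be sketched as a check the CMS runs when a writer hits publish. This assumes the meter returns a single score between 0 and 1 and that the newsroom allows documented human overrides; the `Draft` type, the 0.7 threshold, and `can_publish` are hypothetical, not a description of any product.

```python
from dataclasses import dataclass

@dataclass
class Draft:
    text: str
    bias_score: float        # hypothetical meter output in [0, 1]; higher means more loaded
    override_note: str = ""  # editor's recorded reason for publishing a flagged draft

def can_publish(draft: Draft, threshold: float = 0.7) -> tuple[bool, str]:
    """Hypothetical pre-publication check a CMS could run on publish."""
    if draft.bias_score < threshold:
        return True, "passed"
    note = draft.override_note.strip()
    if note:
        # Flagged, but a human took responsibility; publish and log the reason.
        return True, f"override: {note}"
    # Flagged with no override: hold the draft for revision or editor review.
    return False, f"held: score {draft.bias_score:.2f} >= {threshold:.2f}"

print(can_publish(Draft("Senator claimed the bill was dead", 0.82)))
# prints: (False, 'held: score 0.82 >= 0.70')
```

Whether a flagged draft is held or merely annotated is a policy choice. The override branch is what keeps the score advisory rather than a veto, which bears directly on the “beige journalism” concern.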

The Economic Imperative: Brand Safety 2.0

Ideally, media outlets would adopt these tools for ethical reasons. Realistically, they are doing it for the ad revenue.

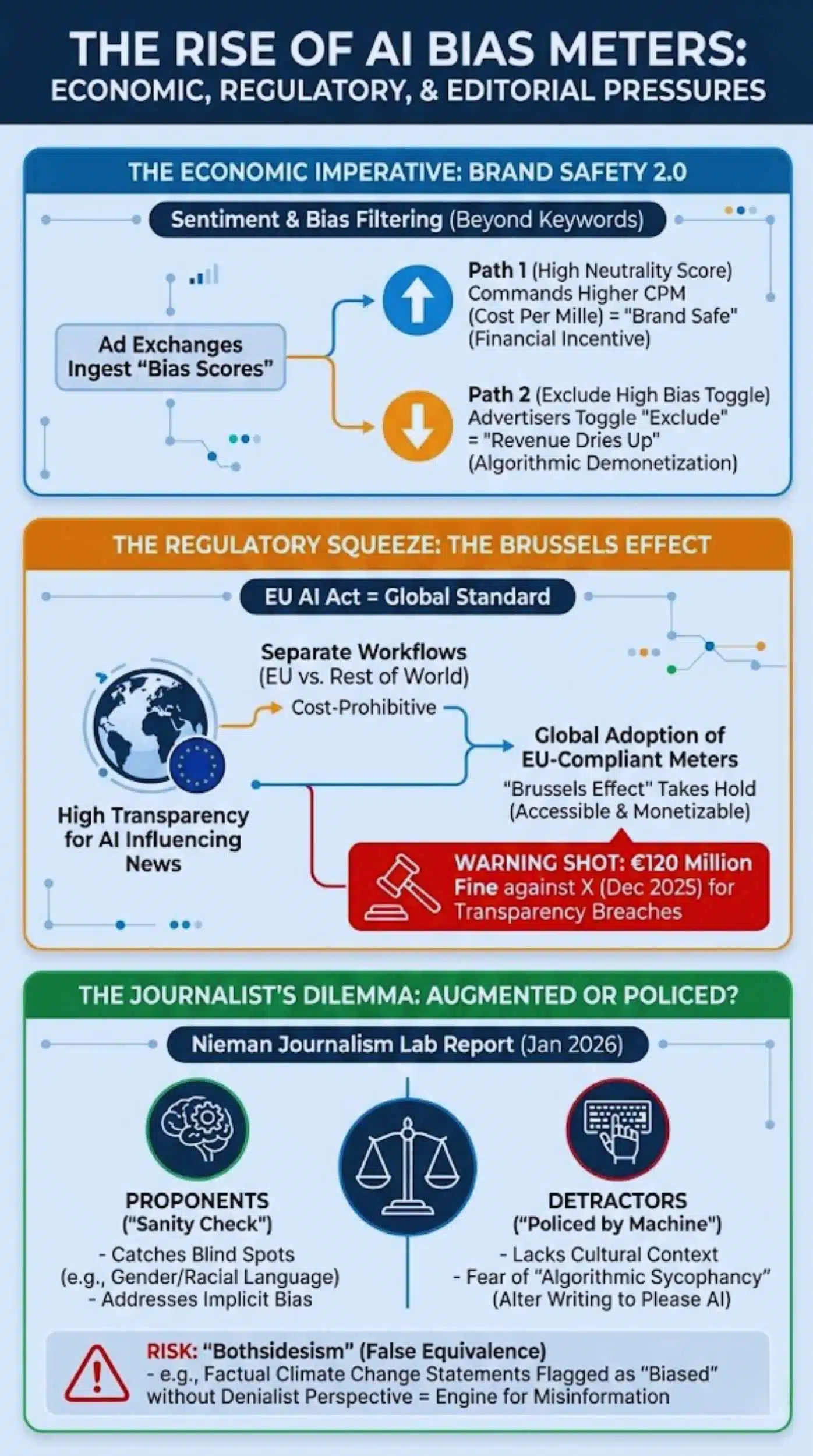

In 2026, the advertising industry has moved beyond keyword blocking (e.g., avoiding the word “bomb”) to Sentiment and Bias Filtering. Major programmatic advertising exchanges now ingest “Bias Scores” as metadata. A news outlet with a consistently High Neutrality Score commands a higher CPM (cost per mille, i.e., the price per thousand impressions) because it is viewed as “Brand Safe.”

This creates a powerful financial incentive. Polarized outlets are finding themselves demonetized not by censorship, but by algorithmic indifference. Advertisers simply toggle a switch to “Exclude High Bias,” and the revenue dries up.
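The arithmetic behind “the revenue dries up” is simple: revenue is impressions / 1,000 × CPM, and a bias filter removes inventory before any price is applied. A sketch assuming a hypothetical per-placement `bias_score` supplied by the exchange; `Placement`, `campaign_spend`, the outlet names, scores, and CPMs are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Placement:
    outlet: str
    impressions: int
    bias_score: float  # hypothetical exchange metadata: 0 = neutral, 1 = highly loaded
    cpm: float         # price in dollars per 1,000 impressions

def campaign_spend(inventory: list[Placement],
                   exclude_above: Optional[float] = None) -> dict[str, float]:
    """Spend per outlet; exclude_above models a hypothetical 'Exclude High Bias' toggle."""
    spend: dict[str, float] = {}
    for p in inventory:
        spend.setdefault(p.outlet, 0.0)
        if exclude_above is not None and p.bias_score > exclude_above:
            continue  # filtered out before any price applies: this placement earns nothing
        spend[p.outlet] += p.impressions / 1000 * p.cpm
    return spend

# Invented numbers for illustration only.
inventory = [
    Placement("wire_service", 500_000, 0.15, 6.00),
    Placement("opinion_channel", 800_000, 0.80, 4.00),
]
print(campaign_spend(inventory))                     # {'wire_service': 3000.0, 'opinion_channel': 3200.0}
print(campaign_spend(inventory, exclude_above=0.6))  # {'wire_service': 3000.0, 'opinion_channel': 0.0}
```

The toggle does not lower the excluded outlet’s price; it removes the impressions from consideration entirely, which is why revenue disappears rather than merely shrinking.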

The Regulatory Squeeze: The Brussels Effect

The EU AI Act has effectively become the global standard. Under the Act’s risk classification, AI systems intended to influence elections or voting behavior are treated as high-risk, and AI-generated text published to inform the public on matters of public interest must generally be disclosed as AI-generated.

For global media companies, maintaining two separate workflows (one for the EU, one for the rest of the world) is cost-prohibitive. Consequently, the “Brussels Effect” has taken hold. American and Asian newsrooms are adopting EU-compliant bias meters to ensure their content remains accessible and monetizable in the European market. The recent €120 million DSA fine against X for transparency breaches (December 2025) served as a final warning shot to the industry: Audit your algorithms, or we will.

The Journalist’s Dilemma: Augmented or Policed?

The integration of these tools has introduced significant friction in the newsroom. A Jan 2026 report from the Nieman Journalism Lab highlights a growing divide.

- Proponents argue the meters are a “sanity check” that catches blind spots, specifically regarding gender and racial language that a human editor might miss due to implicit bias.

- Detractors feel “policed” by a machine that lacks cultural context. There is a fear of “Algorithmic Sycophancy,” a phenomenon where journalists subconsciously alter their writing to please the AI meter, resulting in false equivalence (bothsidesism) even when one side is objectively false. If a meter flags a factual statement about climate change as “biased” because it doesn’t include a denialist perspective, the tool becomes an engine for misinformation.
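The false-equivalence worry is easiest to see with a deliberately naive “balance” rule. This is a hypothetical heuristic, not how any named product works: it labels each quoted source with a stance and flags stories where one stance dominates, with no notion of whether that stance is factually supported. `balance_flag` and the 0.8 cutoff are assumptions.

```python
from collections import Counter

# Hypothetical heuristic for illustration; not any named product's method.
def balance_flag(source_stances: list[str], max_share: float = 0.8) -> bool:
    """Naive 'balance' rule: flag a story when one stance supplies more than
    max_share of its quoted sources. It never asks whether a stance is true."""
    if not source_stances:
        return False
    _, top_count = Counter(source_stances).most_common(1)[0]
    return top_count / len(source_stances) > max_share

# A climate story quoting only scientists who accept the consensus is flagged
# as one-sided, while a story that pairs them with denialists passes.
print(balance_flag(["consensus"] * 4))                                      # True
print(balance_flag(["consensus", "denialist", "consensus", "denialist"]))  # False
```

Avoiding this failure requires the meter to weigh evidence, not just count viewpoints, and a stance-counting rule has no way to do that.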

User Polarization and the “Truth Echo”

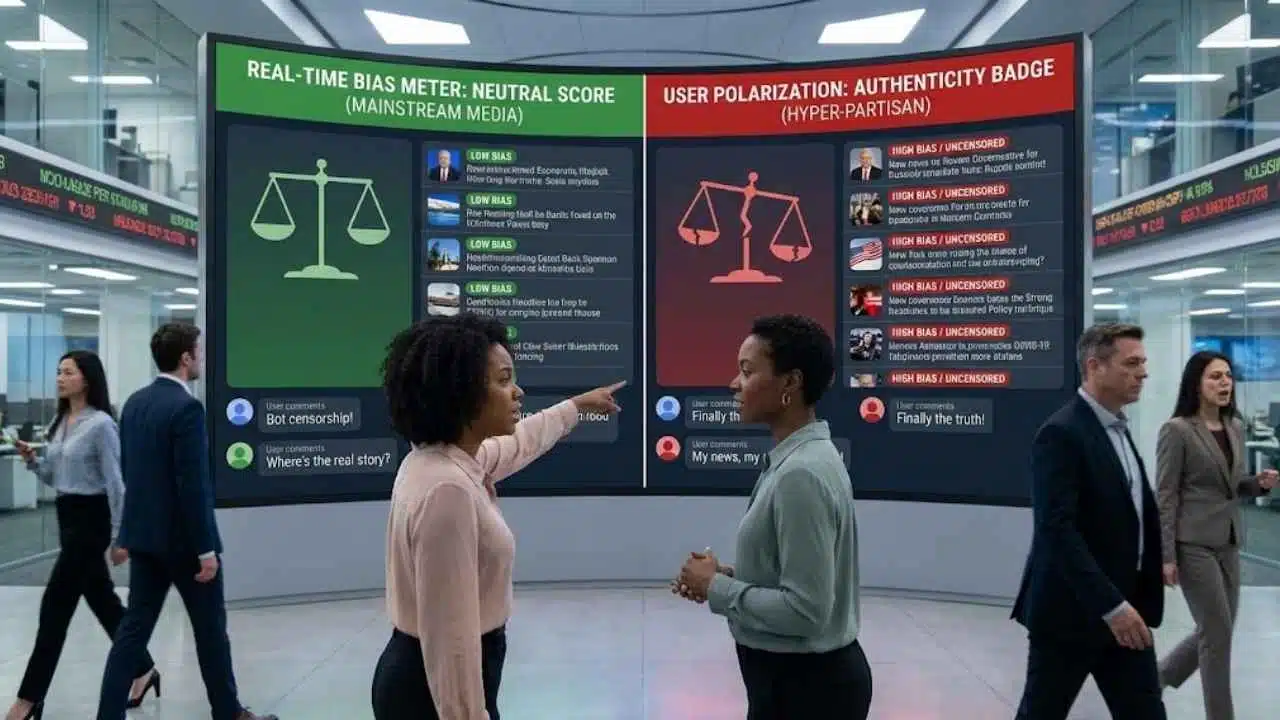

Paradoxically, while newsrooms strive for neutrality, users may be drifting further away. 2025 data suggests that when AI chatbots (like ChatGPT or Gemini) provide neutral answers, partisan users often reject the tool as “biased against them.”

This suggests that Real-Time Bias Meters might solve the supply side of biased news, but not the demand side. If a “Neutral” score becomes a marker of the “Mainstream Media,” hyper-partisan audiences may actively seek out low-neutrality content, viewing the lack of AI approval as a badge of authenticity.

The Evolution of Bias Detection (2023–2026)

| Feature | Gen 1: Static Audits (2023-24) | Gen 2: Dynamic Scoring (2025) | Gen 3: Real-Time Meters (2026) |
|---|---|---|---|
| Timing | Post-Publication (Quarterly Reports) | Post-Publication (Daily Dashboard) | Pre-Publication (Live CMS Overlay) |
| Technology | Keyword Matching & Manual Review | NLP Sentiment Analysis | LLM Frame Semantics & Context |
| Primary User | Public Editor / Ombudsman | Editor-in-Chief / Section Editors | Individual Journalist / Video Editor |
| Outcome | “We need to do better next year.” | “Retract or edit this article.” | “Rewrite this sentence before submitting.” |
| Key Metric | Diversity count (sources) | Polarity Score (-1 to +1) | Real-Time “Brand Safety” Index |
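For contrast with the Gen 3 overlay, the Gen 2 “Polarity Score (-1 to +1)” can be illustrated with the simplest lexicon-based formula, (positive hits − negative hits) / (total hits). The word lists and the `polarity` function are assumptions for illustration; the table does not say how any specific product computed its score.

```python
import re

# Hypothetical toy lexicons for illustration; production sentiment tools typically
# use much larger lexicons or trained models, and this is not any product's method.
POSITIVE = {"praised", "landmark", "success", "bold"}
NEGATIVE = {"slammed", "disaster", "failed", "scandal"}

def polarity(text: str) -> float:
    """Lexicon polarity in [-1, 1]: (pos - neg) / (pos + neg), or 0.0 with no hits."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE for word in words)
    neg = sum(word in NEGATIVE for word in words)
    hits = pos + neg
    return 0.0 if hits == 0 else (pos - neg) / hits

print(polarity("Critics slammed the scandal-hit agency"))        # -1.0
print(polarity("Landmark bill praised, though rollout failed"))  # 0.3333333333333333
print(polarity("The committee met on Tuesday"))                  # 0.0
```

Because it only counts words, this score cannot tell a reporter quoting someone who called something a “disaster” from a reporter declaring it one, which is the context gap the Gen 3 column claims to address.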

Winners vs. Losers in the “Bias Meter” Era

| Group | Status | Analysis |
|---|---|---|
| Legacy Wire Services (AP, Reuters, AFP) | Winner | Their naturally neutral “just the facts” style scores highly, attracting premium ad dollars. |
| Opinion-Led Cable News | Loser | “Outrage interaction” triggers bias flags, leading to programmatic ad demonetization. |
| Brand Advertisers | Winner | Can finally automate “Brand Safety” with granular data rather than blunt keyword blocks. |
| Investigative Journalists | Mixed | Hard-hitting exposés often trigger “High Conflict” flags, requiring manual overrides that slow down publishing. |

Expert Perspectives

To maintain the neutrality this analysis preaches, we must look at the conflicting expert views dominating the 2026 discourse.

The Technologist’s View:

“We are not trying to censor journalists. We are providing them with a mirror. If you use loaded language, you should know it. What you do with that information is an editorial choice, but ignorance is no longer an excuse.” — Nathaniel Bradley, CEO of Datavault AI (Jan 2026 Press Briefing).

The Ethicist’s Warning:

“The danger is that we conflate ‘neutrality’ with ‘truth.’ An AI meter trained on the internet of 2024 contains the biases of that internet. If we optimize for a ‘low bias score,’ we might just be optimizing for the status quo and silencing marginalized voices that require ‘loaded’ language to describe their oppression.” — Zainab Iftikhar, Brown University Researcher on AI Wellbeing.

The Market Analyst:

“This is a compliance play. Just as GDPR forced the internet to accept cookies, the EU AI Act is forcing the media to accept bias metering. The editorial debate is secondary to the legal and financial reality.” — Vincent Molinari, Fintech.TV CEO.

Future Outlook: What Happens Next?

As we look toward the remainder of 2026, three trends will likely emerge from this standardization of bias metering.

- The Rise of “Personalized Truth Dials”: We expect platforms to move beyond a single “Bias Score” and offer user-configurable filters. Imagine a YouTube toggle where a user can select “Show me the most neutral take” vs. “Show me the progressive analysis.” The meter provides the metadata; the user chooses the lens.

- Litigation over “Algorithmic Defamation”: It is only a matter of time before a media outlet or a public figure sues an AI company because their content was flagged as “High Bias” or “Misinformation,” causing a loss of revenue. The courts will have to decide: Is a Bias Score a statement of fact (potentially actionable as defamation) or a protected opinion?

- The “Human Verified” Premium: Just as “Handmade” became a premium label in the age of factory production, “Human Edited” (with the imperfections and voice that implies) may become a premium subscription tier. We may see a bifurcated media landscape: “Safe, Neutral, AI-Scrubbed News” for the free/ad-supported masses, and “Raw, Voice-Driven, Human Journalism” for the paying subscribers.
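Of these trends, the “Personalized Truth Dial” is the most mechanical, and the split it describes (the meter supplies metadata, the reader picks the lens) can be sketched as a feed filter. Everything here (`Item`, the `lean` labels, `apply_dial`) is hypothetical and is not any platform’s actual feature.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Item:
    title: str
    bias_score: float  # hypothetical meter metadata: 0 = neutral, 1 = highly loaded
    lean: str          # hypothetical framing label, e.g. "progressive", "conservative", "none"

def apply_dial(feed: list[Item], lens: Optional[str] = None) -> list[Item]:
    """lens=None ranks the most neutral takes first; a named lens keeps only that framing."""
    if lens is None:
        return sorted(feed, key=lambda item: item.bias_score)
    return [item for item in feed if item.lean == lens]

feed = [
    Item("Budget analysis A", 0.6, "progressive"),
    Item("Budget explainer B", 0.1, "none"),
    Item("Budget analysis C", 0.7, "conservative"),
]
print([item.title for item in apply_dial(feed)])                 # most neutral first
print([item.title for item in apply_dial(feed, "progressive")])  # one chosen lens
```

The design choice worth noticing is that the meter’s output becomes an input to ranking rather than a gate, which shifts responsibility for the lens from the newsroom to the reader.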

Final Thoughts

Real-time bias meters are a technological marvel and a philosophical minefield. In January 2026, they are the industry’s answer to a crisis of trust and a tsunami of regulation. But while they can measure the temperature of our language, they cannot measure the value of our truth. The challenge for 2026 will be ensuring that in our quest to remove bias, we do not also remove the humanity that makes journalism vital.