Aravind Srinivas, the 31-year-old CEO of the fast-growing AI search engine Perplexity, recently said he was “horrified” after seeing a student openly boast about using his platform to cheat. His candid reaction has spotlighted a deepening crisis at the intersection of artificial intelligence and academic integrity, prompting a global conversation about the responsibilities of AI companies and the future of learning itself.

Key Facts & Quick Take

- The Incident: Perplexity AI CEO Aravind Srinivas publicly rebuked a student’s claim of using the tool for cheating, stating, “Absolutely don’t do this.” His strong stance has been praised by educators but highlights the ethical tightrope AI companies must walk.

- Widespread AI Use: Recent data show a sharp rise in AI tool adoption among students. A mid-2025 survey found that over 70% of U.S. undergraduates use generative AI for their coursework, often in ways that blur the line between assistance and academic dishonesty.

- Educators’ Struggle: Institutional readiness lags well behind student adoption. Fewer than 40% of countries’ education ministries have issued formal guidance on generative AI use, leaving students and faculty alike unsure of what is allowed.

- The Tech Dilemma: While companies like Perplexity and OpenAI publicly advocate for ethical use, their business models are predicated on widespread adoption. This creates a fundamental tension between being a powerful tool and preventing its misuse for plagiarism and cheating.

- Market Context: Perplexity AI, valued at over $3 billion after its latest funding round, is a major challenger to Google. Srinivas’s comments carry significant weight as the company’s influence grows and it becomes a key player in how information is accessed and synthesized globally.

The Spark: A Student’s Post and a CEO’s Public Rebuke

The controversy began with a seemingly innocuous post on social media. A university student, whose identity has been kept private, detailed how they used Perplexity AI—a tool marketed as a “conversational answer engine”—to complete a complex assignment, effectively bypassing the research and critical thinking process.

The student’s post was not a confession but a boast, framing the AI as a clever shortcut. It was this casual disregard for academic principles that drew the ire of Aravind Srinivas. In a widely circulated interview and subsequent social media follow-up, Srinivas did not mince words.

“I was horrified when I saw that,” Srinivas stated in an interview with Fortune. He later emphasized his stance on X (formerly Twitter), telling his followers, “We are pro-student, pro-learning. We are not pro-cheating. Absolutely don’t do this.”

This direct, unambiguous condemnation from the leader of a major AI firm is a departure from the often neutral, “we’re just a tool” stance adopted by many in Silicon Valley. It has thrown into sharp relief the ethical responsibilities that accompany the creation of technologies with the power to fundamentally alter societal norms.

A Tidal Wave of AI in Academia: The Latest Data

Srinivas’s alarm is not unfounded. The use of generative AI in educational settings has exploded far faster than institutional policies can adapt. The tools are no longer a niche technology for early adopters but a mainstream feature of student life.

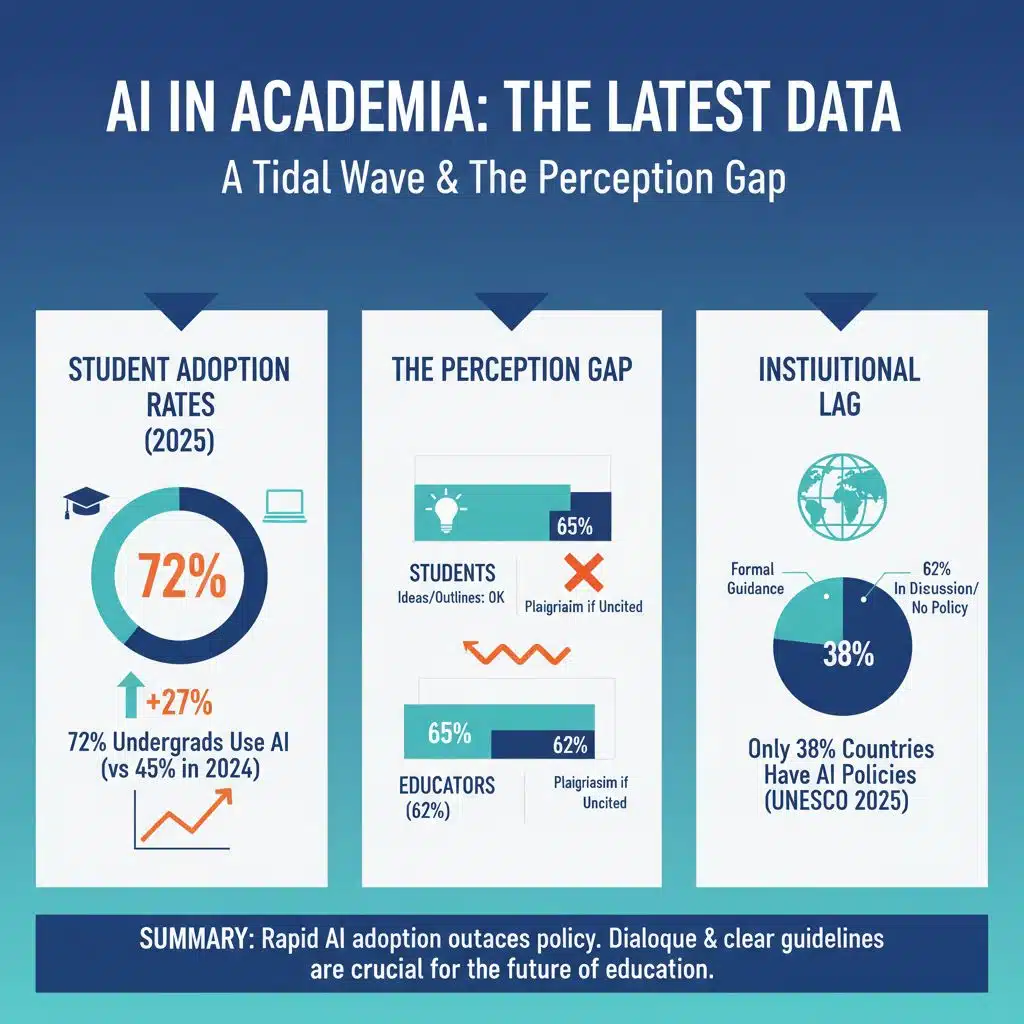

- Student Adoption Rates (2025): A survey conducted by BestColleges in July 2025 found that 72% of undergraduate students in the United States reported using generative AI tools such as ChatGPT, Gemini, or Perplexity to help with their assignments. That is up from 45% in its August 2024 survey, a jump of 27 percentage points in under a year.

- The Perception Gap: The same report highlighted a critical disconnect. While a majority of students (65%) believe using AI to generate ideas or outlines is acceptable, a nearly equal percentage of educators (62%) view such practices as a form of plagiarism or academic misconduct if not properly cited.

- Institutional Lag: Globally, education authorities are scrambling to catch up. A September 2025 report from the UNESCO Institute for Information Technologies in Education (IITE) found that only 38% of member countries’ higher education ministries had issued formal guidance on AI use. The remaining 62% are either “in discussion” or have no policy at all, creating a chaotic and inequitable landscape for students and teachers.

Official Responses and Expert Analysis

Srinivas’s comments have drawn a range of reactions, from applause among beleaguered educators to skepticism from tech ethicists.

Dr. Tricia Bertram Gallant, a globally recognized expert on academic integrity and a board member of the International Center for Academic Integrity (ICAI), commented on the situation.

However, some critics argue that a CEO’s public plea is insufficient. They contend that the very design of “answer engines”—which synthesize information and present it as a clean, finished product—inherently encourages intellectual shortcuts.

Impact on the Ground: A Student’s Perspective

The pressure to succeed, coupled with the easy availability of these tools, creates a perfect storm. We spoke with a third-year computer science student from a prominent university in Dhaka, who asked to remain anonymous.

“It’s not always about cheating,” they explained. “Sometimes you have three major assignments due in the same week, and the pressure is immense. Using AI to draft an introduction or summarize a dense research paper feels less like cheating and more like survival. The university tells us ‘don’t use it,’ but they don’t teach us how to use it ethically. It’s a gray area we are all navigating alone.”

This sentiment reflects a widespread student reality: the issue is not purely one of malicious intent but also of overwhelming academic loads, a lack of clear guidelines, and the seductive efficiency of AI.

What to Watch Next

The fallout from this incident will likely accelerate several key trends:

- Policy Formulation: Expect a rush of universities and school districts to finalize and implement clear, enforceable AI usage policies before the 2026 academic year.

- Technological Arms Race: AI detection software, such as Turnitin’s, is likely to become more sophisticated. In response, AI models may evolve to produce text that is even harder to distinguish from human writing.

- Feature Integration: In response to criticism, AI companies like Perplexity may build more robust “academic integrity” features directly into their products, such as enhanced, unmissable citations and warnings about improper use for academic assignments.

Aravind Srinivas’s visceral reaction to a single student’s boast has inadvertently done the academic world a service. It has ripped the cover off a simmering crisis, forcing a confrontation that was long overdue. The conversation is no longer about whether AI belongs in education, but about how it can coexist with education without eroding the fundamental principles of learning, critical thought, and intellectual honesty. The path forward requires a three-way alliance: tech companies must accept their role as ethical actors, educators must innovate their teaching and assessment methods, and students must be taught to wield these powerful new tools with wisdom and integrity. The future of education may depend on it.

The information in this article was collected from MSN and Yahoo.