As India notifies the IT Rules 2026 age-gating and AI labelling mandates, the proposed “Digital Lakshman Rekha” is not an act of censorship, but a foundational shield for the cognitive sovereignty of a billion users. This regulatory boundary addresses the urgent need to decouple childhood from the unceasing demands of predatory engagement loops. By codifying IT Rules 2026 age-gating, the government is establishing a high-trust digital corridor that prioritises psychological safety over platform profits. It is a decisive move to ensure that the nation’s youth are no longer treated as raw data for experimental algorithms, but as citizens with a right to a protected, authentic reality.

IT Rules 2026 age-gating: The Minister’s Mandate, The End of Digital Laissez-Faire

When India’s Union IT Minister, Ashwini Vaishnaw, stood before the India AI Impact Summit on 17 February 2026, he was not merely discussing policy. He was drawing a line in the digital sand. For over a decade, the relationship between the Indian state and Silicon Valley has been one of uneasy coexistence, a “Wild West” where platforms grew faster than the laws meant to govern them. That era of self-regulation is now officially over.

Vaishnaw’s message was blunt and historically resonant. By notifying the Information Technology (Amendment) Rules, 2026, the government has moved from suggestion to mandate. At the heart of this shift lies a “Techno-Legal” necessity: the mandatory age-gating of social media and the strict labelling of Synthetically Generated Information (SGI). This is India’s “Digital Lakshman Rekha,” a boundary designed to protect the most vulnerable from an AI landscape that has become increasingly predatory.

Cognitive Sovereignty: Policing the Algorithmic Rabbit Hole

The urgency of this move stems from tragedy. In a recent incident in suburban Ghaziabad, the silence of a family home was shattered when three sisters took their own lives. Investigators pointed not to a single “task” but to prolonged algorithmic isolation: the sisters’ reality, they found, had narrowed into intense, compulsive engagement with niche task-based apps and opaque online subcultures. The girls slipped through the cracks of a digital world that has no floor.

This is not a uniquely Indian heartbreak; it is a global contagion of the generative AI era. Only weeks ago, a similar wave of “automated extortion” cases rocked secondary schools across Southeast England. In these instances, AI-driven grooming bots scraped social media photos to create photorealistic deepfakes, blackmailing minors into dangerous physical tasks. From the suburbs of Sydney to the streets of London, the story is identical. Children are being steered into “algorithmic rabbit holes” by platforms that prioritise engagement over a pulse.

Vaishnaw argues that our biology is being outpaced by trillion-dollar AI models. The human brain, and particularly the developing prefrontal cortex, did not evolve to compete with an infinite scroll of dopamine hits and AI-perfected deceptions.

This is not about censorship. It is about cognitive sovereignty. If the state does not step in to provide this “Digital Lakshman Rekha,” we are not just losing our privacy. We are losing a generation.

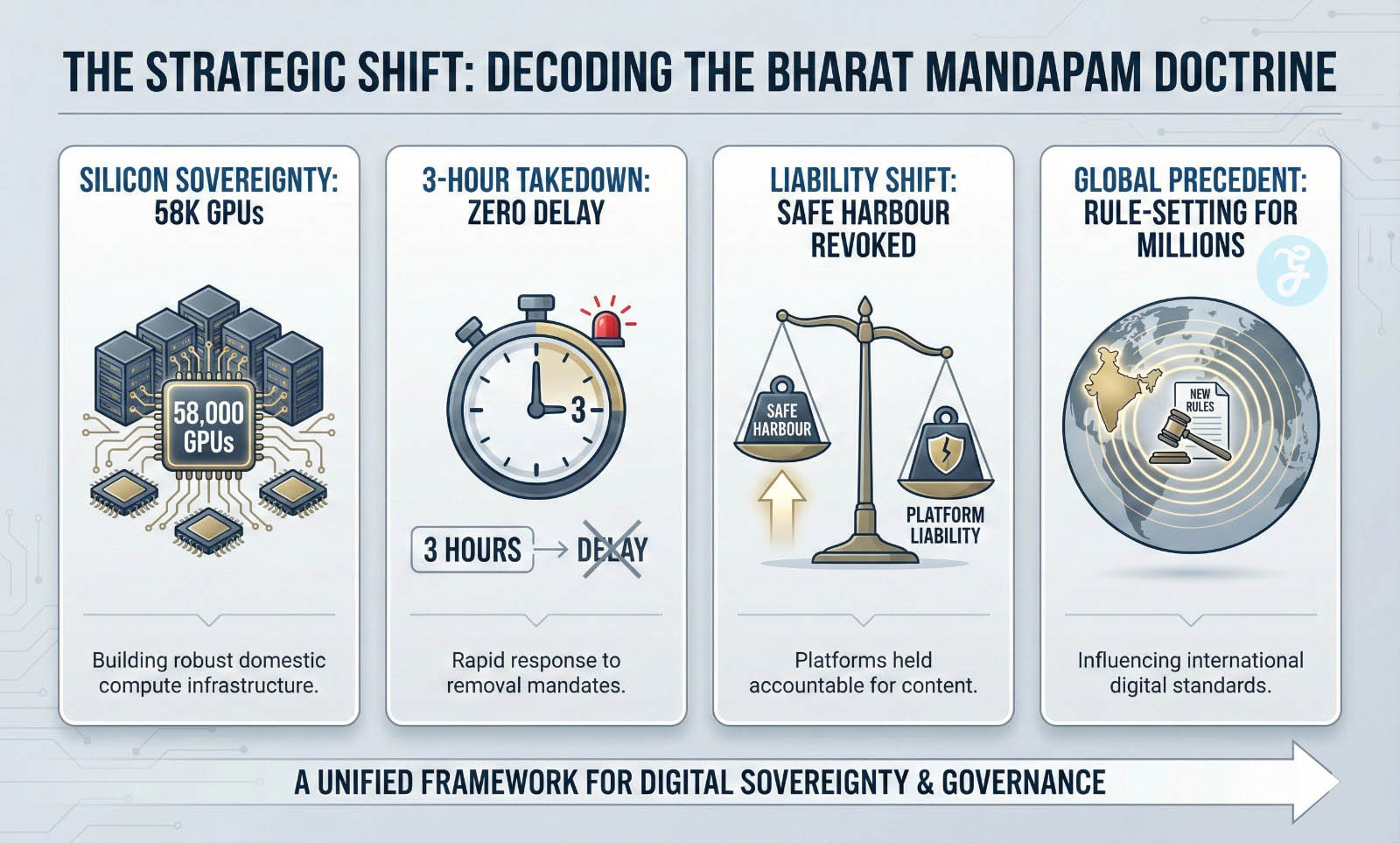

The Strategic Shift: Decoding the Bharat Mandapam Doctrine

The rapid fire of GPU procurement and the legislative amendments notified on 10 February 2026 are not a series of isolated events. They are the tactical execution of a new doctrine. At Bharat Mandapam, the signals were unmistakable: the Indian state is no longer content with regulatory catch-up; it is asserting structural control over the digital ecosystem. The decision to scale up to 58,000 GPUs while simultaneously slashing the legal takedown window to three hours signals a refusal to let the pace of foreign silicon dictate the terms of domestic safety.

This is not just about protecting children. It is about reclaiming the digital perimeter. By conditioning “Safe Harbour” protections on strict compliance, the government has shifted the liability equation in ways Silicon Valley cannot ignore. If the platforms fail to label “Synthetically Generated Information,” they cease to be neutral pipes and become liable entities. In the high-stakes game of global AI governance, India is no longer an observer. It is now the primary regulator of the “Global South.” It is setting a precedent that makes user safety a non-negotiable component of market entry.

The Global Context: Australia as the Catalyst

The timing of India’s move is no coincidence. Minister Vaishnaw explicitly pointed to a growing global consensus, most notably the Australia Under-16 Ban, which became enforceable in late 2025. Australia shifted the burden of proof, requiring platforms to verify age or face staggering fines of up to AUD 49.5 million (approximately £26 million or ₹275 crore), placing the cost of safety squarely on the industry.

“Age-based regulation is now a global consensus,” Vaishnaw noted during the summit. However, India is not merely following a Western trend. We are creating a template for the Global South. While the European Union focuses on the intricacies of data privacy and the United States battles over market monopolies, India is placing “User Safety as a Public Good” at the core of its national autonomy.

This represents a fundamental shift in the social contract. In the 2026 framework, digital safety is no longer a personal choice for parents to manage in isolation. It is a structural requirement for any company wishing to operate within the Indian market. By linking age-gating to the Digital Personal Data Protection (DPDP) Act, India is asserting that a nation’s “data capital” cannot be harvested at the expense of its children’s mental health.

The Framework of Responsibility

The “Digital Lakshman Rekha” is not merely a metaphor; it is codified in the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2026, which come into full force on 20 February 2026. This legislation represents a structural overhaul of how the internet functions in India.

For the first time, the law provides a statutory definition of Synthetically Generated Information (SGI). This encompasses any audio-visual content created or altered by AI that appears “indistinguishable from a natural person or real-world event.” By doing so, the government has ended the era of “black box” AI. Platforms are now mandated to label SGI prominently, ensuring that a user’s first interaction with synthetic media is a clear disclosure, not a deceptive lure.

However, the most aggressive shift is the timeline for compliance. In the fast-moving AI era, 36 hours is an eternity. The 2026 amendments represent a significant escalation from the 2021 framework, sharply compressing response times: platforms must now remove content flagged by a court or government order within a strict 3-hour window (a twelve-fold reduction from the previous 36-hour limit). For the most egregious violations, such as non-consensual deepfake nudity, the window has been slashed to just 2 hours, a move intended to halt viral trauma before it becomes irreversible.
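To make the compressed timelines concrete, here is a minimal sketch of how a compliance team might compute removal deadlines from the 3-hour and 2-hour windows described above. The category labels and function names are hypothetical, chosen only for illustration; they are not terms from the Rules.

```python
from datetime import datetime, timedelta, timezone

# Windows as described in the article; the category labels are hypothetical.
TAKEDOWN_WINDOWS = {
    "court_or_government_order": timedelta(hours=3),
    "non_consensual_deepfake_nudity": timedelta(hours=2),
}


def takedown_deadline(flagged_at: datetime, category: str) -> datetime:
    """Return the latest moment by which flagged content must be removed."""
    return flagged_at + TAKEDOWN_WINDOWS[category]


def is_overdue(flagged_at: datetime, category: str, now: datetime) -> bool:
    """True once the removal window for this category has elapsed."""
    return now > takedown_deadline(flagged_at, category)


flagged = datetime(2026, 2, 20, 9, 0, tzinfo=timezone.utc)
print(takedown_deadline(flagged, "court_or_government_order").isoformat())
# prints 2026-02-20T12:00:00+00:00
```

Under these assumptions, an item flagged at 09:00 must be gone by 12:00, or by 11:00 if it falls into the deepfake-nudity category.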

This is the legal “Sword of Damocles” hanging over Big Tech. Failure to meet these stringent deadlines or adhere to SGI labelling mandates triggers a suspension of “Safe Harbour” protection under Section 79 of the IT Act. In plain English: the platform is no longer treated as a neutral conduit for that specific content; it becomes liable for prosecution as if it were the original publisher. This shift from “immunity” to “responsibility” is the cornerstone of Vaishnaw’s techno-legal approach.

The Engineering of Manipulation: Beyond Content

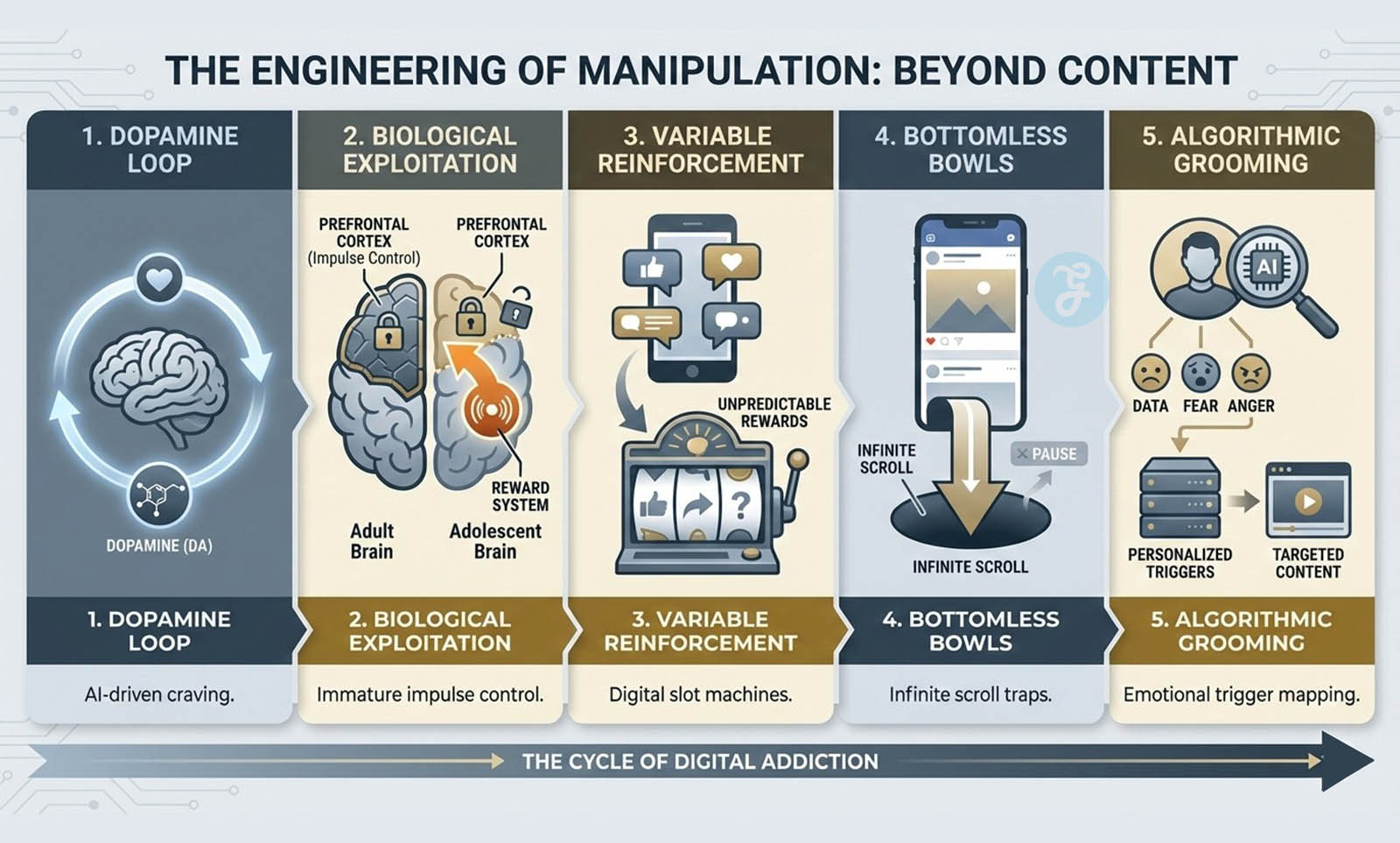

The modern internet does not just show us the world; it quietly reshapes how we inhabit it. For years, the debate around online safety was stuck on “content”, the specific words or images that might harm a child. But the Economic Survey 2025–26, tabled in Parliament this January, has radically shifted the focus. It identifies “digital addiction” as a major public health risk, warning that for the 15–29 age group, internet access is no longer the hurdle. The new barrier is the predatory design of the platforms themselves.

At the heart of this crisis is what experts call the “dopamine loop.” AI algorithms are not neutral curators. They are engagement engines designed to exploit the biological vulnerabilities of the developing teenage brain. The prefrontal cortex, responsible for impulse control and long-term planning, does not fully mature until the mid-twenties. Trillion-dollar AI models, however, are fine-tuned to bypass this guardrail. They use “variable ratio reinforcement,” the same psychological trick that makes slot machines so addictive, to keep users in a state of perpetual anticipation.
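For readers unfamiliar with the term, the toy simulation below contrasts a fixed-ratio schedule (a reward after every n-th action) with a variable-ratio schedule (each action pays off with probability 1/n). It is an illustrative sketch only, not a model of any real platform's ranking system, and the function names are invented for this example.

```python
import random


def fixed_ratio_gaps(n: int, rewards: int) -> list[int]:
    """Actions needed per reward when every n-th action is rewarded."""
    return [n] * rewards


def variable_ratio_gaps(mean_n: int, rewards: int, rng: random.Random) -> list[int]:
    """Actions needed per reward when each action pays off with probability 1/mean_n."""
    gaps = []
    for _ in range(rewards):
        actions = 1
        # Keep acting until this action happens to be rewarded.
        while rng.random() >= 1 / mean_n:
            actions += 1
        gaps.append(actions)
    return gaps


rng = random.Random(42)
print("fixed:   ", fixed_ratio_gaps(5, 10))
print("variable:", variable_ratio_gaps(5, 10, rng))
```

Both schedules pay out once every five actions on average, but only the variable one leaves the user unable to predict which scroll or swipe will deliver the next reward. That unpredictability is what sustains the state of anticipation described above.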

Algorithmic Grooming: A Design Intervention

This is “algorithmic grooming.” It is a process where the machine learns exactly which emotional triggers will prevent a user from putting their phone down. Features like “infinite scroll” and “auto-play” are not mere conveniences. They are “bottomless bowls” of content that remove natural stopping cues, inducing a trance-like flow state that displaces sleep, study, and social interaction. The Economic Survey notes that Indian children are now spending over two hours daily on screens before they even turn five, nearly double the safe limit.

India’s push for age-gating is a direct strike against this architecture. It is not about sanitising the internet; it is about disabling the “predatory features” that make digital spaces incompatible with childhood. By mandating age-verification, the government is essentially arguing that certain industrial-strength engagement tools should be off-limits to minors. If a product is designed to be addictive, it should be regulated like any other restricted substance.

The “Digital Lakshman Rekha” is, therefore, a design intervention. It forces platforms to move away from “engagement-at-all-costs” and toward a model of digital wellness, ensuring that technology serves the user, rather than the other way around.

From Content Policing to Design Intervention

| Feature | The Old Focus (Content-Centric) | The New Mandate (Engineering-Centric) |
| --- | --- | --- |
| Primary Target | Harmful words, images, or specific videos. | Predatory “engagement loops” and addictive design. |
| Psychological Trigger | Emotional reaction to specific media. | The “Dopamine Loop” and variable ratio reinforcement. |
| Platform Role | Neutral host (Safe Harbour by default). | Active “Algorithm Groomer” (Liable for design). |
| Mechanism of Harm | Direct exposure to “bad” content. | “Bottomless bowls” like infinite scroll and auto-play. |
| Regulatory Tool | Content takedown notices (post-facto). | Age-gating and design-level restrictions. |
| Biological Impact | Short-term emotional distress. | Long-term disruption of the prefrontal cortex. |

The “Techno” in Techno-Legal: The Verification Engine

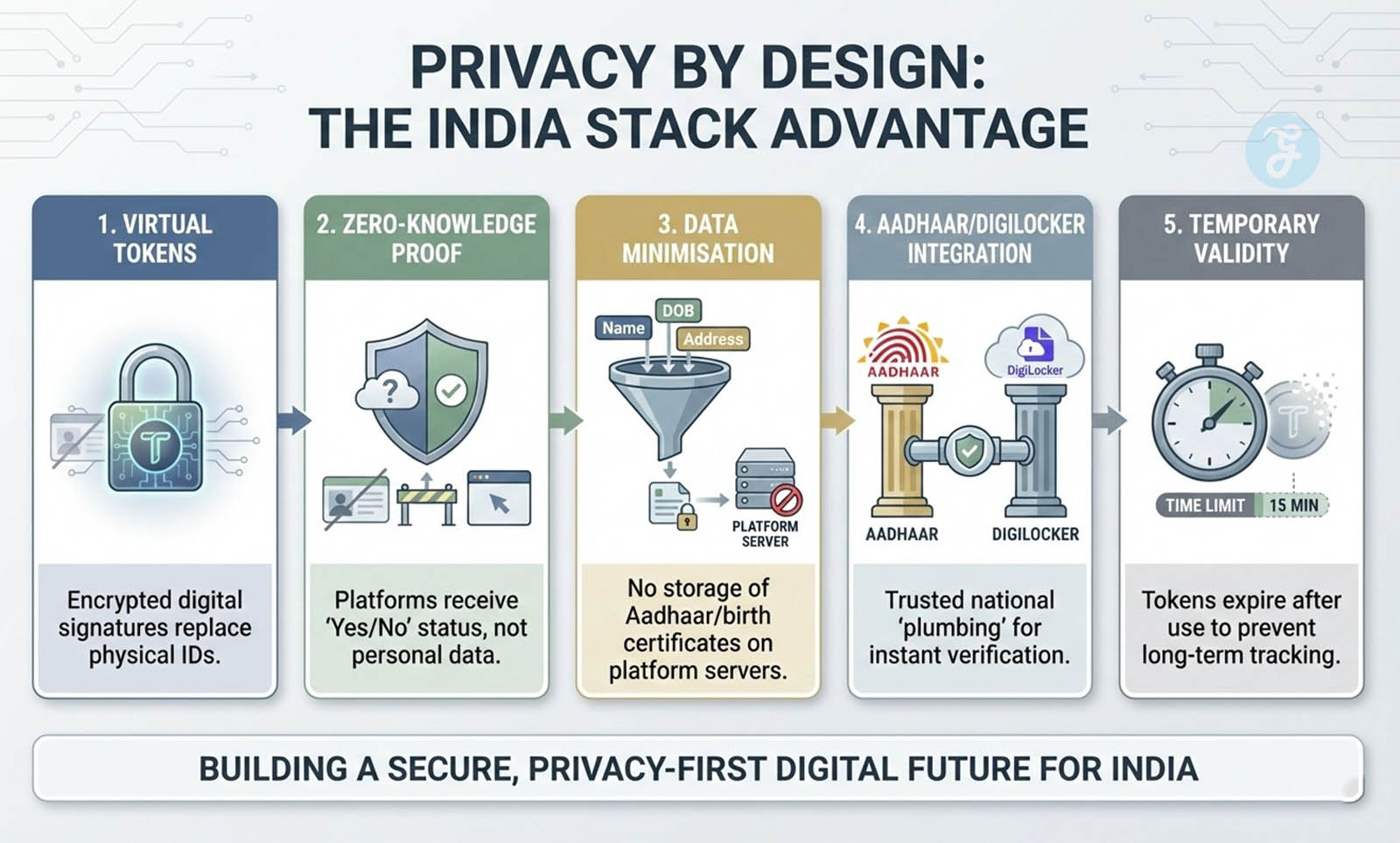

If the “Digital Lakshman Rekha” is the law, then identity is the key that opens the gate. But this brings us to the elephant in the room: How does a state verify a user’s age without creating a “Surveillance State”? If we force every citizen to hand over their government ID to a private social media giant, we aren’t just protecting children. We are handing a permanent, biometric map of our lives to corporations that have already proven they struggle with data hygiene.

Ashwini Vaishnaw’s answer to this “Privacy Paradox” lies in a clever bit of digital wizardry called ID Tokenization. Formulated under the Digital Personal Data Protection (DPDP) Act 2023, this system ensures that platforms like Meta or X never actually see your Aadhaar card or your birth certificate. Instead, they receive a “token”, a unique, encrypted digital signature that says only one thing: “This person is over eighteen.”

Think of it like this: Imagine you are trying to enter an age-restricted club. Instead of showing the bouncer your full passport with your name, home address, and photo, you stop at a secure kiosk outside. The kiosk checks your ID and then stamps a glowing “18+” mark on your hand. You walk into the club, and the bouncer lets you in because they see the stamp. They have no idea who you are, where you live, or what your name is. They only know you are old enough to be there.
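The kiosk-and-stamp idea can be sketched in a few lines. What follows is a minimal illustration under stated assumptions, not the actual Aadhaar, DigiLocker, or DPDP mechanism: the function names, claim fields, and key handling are all hypothetical. It uses an HMAC from the Python standard library to stay self-contained; a real deployment would more plausibly use an asymmetric signature so that the platform can verify tokens without being able to mint them.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional

# Hypothetical secret held by the verification service (the "kiosk").
ISSUER_KEY = secrets.token_bytes(32)


def issue_age_token(is_adult: bool, ttl_seconds: int = 300,
                    now: Optional[float] = None) -> str:
    """Issue a short-lived token carrying only an age claim, never an identity."""
    now = time.time() if now is None else now
    claim = {
        "over_18": is_adult,
        "exp": int(now) + ttl_seconds,   # expiry keeps the token temporary
        "nonce": secrets.token_hex(8),   # makes each token unique
    }
    payload = base64.urlsafe_b64encode(json.dumps(claim).encode())
    tag = hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + tag


def check_age_token(token: str, now: Optional[float] = None) -> bool:
    """Accept the token only if it is untampered, unexpired, and asserts over_18."""
    now = time.time() if now is None else now
    payload, _, tag = token.partition(".")
    expected = hmac.new(ISSUER_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(tag.encode(), expected.encode()):
        return False
    claim = json.loads(base64.urlsafe_b64decode(payload))
    return claim["over_18"] is True and now < claim["exp"]


token = issue_age_token(True)
print(check_age_token(token))  # prints True
```

The point of the design is what the token leaves out: it carries an age flag, an expiry, and a random nonce, but no name, address, or ID number, so the "bouncer" learns nothing beyond the stamp.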

Privacy by Design: The India Stack Advantage

This is India’s unique advantage. While Western nations are still arguing over how to implement age-gating without mass data leaks, India is leveraging its world-class India Stack. By integrating ID tokenization into this national plumbing, the government has ensured that the new age-verification regime functions as a privacy-first shield rather than a surveillance tool.

By using the existing infrastructure of Aadhaar and DigiLocker, the state can provide privacy-preserving verification at a scale of over a billion people. These “blind tokens” are temporary and can be deleted once they have served their purpose. It is a “privacy-by-design” approach that treats identity as a tool for empowerment rather than a target for exploitation. In the race to make the internet safe for the next generation, India has stopped waiting for a global solution and has built one of its own.

Counter-Arguments and the “Digital Divide”

No legislation is without critics. Civil society groups warn that mandatory age verification could be a “death knell” for online anonymity, potentially turning the Indian internet into a “Splinternet”, a fractured, state-monitored web. They fear the “Digital Lakshman Rekha” might unintentionally stifle the very marginalized voices that rely on digital spaces for safety and expression.

There is also a profound “patriarchal risk.” Data from the Economic Survey 2025–26 reveals a stark divide: while 57% of men have internet access, only 33% of women do. In rural households where a single smartphone is a shared family resource, strict mandates could provide a pretext for conservative heads of households to confiscate devices from daughters while allowing sons unfettered access, further widening the gender gap.

Finally, regulators face a “cat-and-mouse” game with tech-savvy teens who use VPNs and mirror apps to bypass digital walls. However, Minister Vaishnaw maintains that “perfection is the enemy of protection.” The goal of the IT Rules 2026 age-gating is not an unhackable fortress, but a “friction-filled” environment. By raising the barrier to entry, the state aims to eliminate casual, accidental exposure to predatory algorithms. In public policy, “good enough” safety is often more transformative than a perfect solution that never arrives.

The Economic and Cultural Imperative

Big Tech is not resisting these rules out of a pure love for liberty. Their pushback is rooted in a much more pragmatic reality: the loss of the “next billion users.” For platforms like Meta or YouTube, India is the crown jewel of growth. Younger users are their most active “eyeballs.” They are the ones who spend hours scrolling, creating content, and training the very AI models that drive platform revenue. By mandating age verification, India is effectively removing a significant portion of this high-engagement audience from the data-harvesting pool. To these platforms, every child protected by a digital wall is a data point lost.

But there is a deeper philosophical tension at play. Ashwini Vaishnaw has consistently argued that “innovation without trust is a liability.” At the AI Impact Summit 2026, he made a point that strikes at the heart of digital colonialism: global platforms must respect the “cultural context” of the countries in which they operate. In the digital world, where physical boundaries vanish, it is easy for a Silicon Valley firm to forget that what is considered “normal” in one society may be prohibited in another.

India is now defending its right to set its own “Digital Moral Code.” This is about sovereignty in the age of algorithms. Why should a foreign company’s community standards dictate what is safe for an Indian child? By building the “Digital Lakshman Rekha,” the government is asserting that India’s legal and constitutional framework is non-negotiable. It is a bold statement that a nation’s safety and cultural values are more important than a platform’s growth metrics. In doing so, India is providing a blueprint for any nation that refuses to let its digital future be written by someone else.

A Vision for Autonomy: Safeguarding the Digital Future

The final piece of this legislative puzzle is not about restriction, but about responsibility. Ashwini Vaishnaw’s closing remarks at the India AI Impact Summit on 17 February 2026 served as a powerful reminder that “innovation without trust is a liability.” This is the core philosophy of the IT Rules 2026. By moving beyond simple content moderation and demanding structural accountability, India is resetting the global standard for digital safety.

The “Digital Lakshman Rekha” represents more than just a barrier for minors; it is a declaration of strategic independence. It signals that India will not be a passive laboratory for unchecked algorithmic experiments. Instead, the nation is choosing to be an active architect of a “human-centric” digital ecosystem. This approach recognises that while technology can be a catalyst for progress, it must never come at the cost of social trust or the psychological well-being of the next generation.

As the 20 February 2026 enforcement date approaches, the eyes of the world are on New Delhi. India’s attempt to balance high-speed innovation with rigorous “techno-legal” guardrails is a test case for global democracy. If this model succeeds, it will prove that a nation can embrace the AI revolution without sacrificing its autonomous right to protect its citizens. The line has been drawn not to close doors, but to ensure that the ones we open lead to a future that is as safe as it is prosperous.