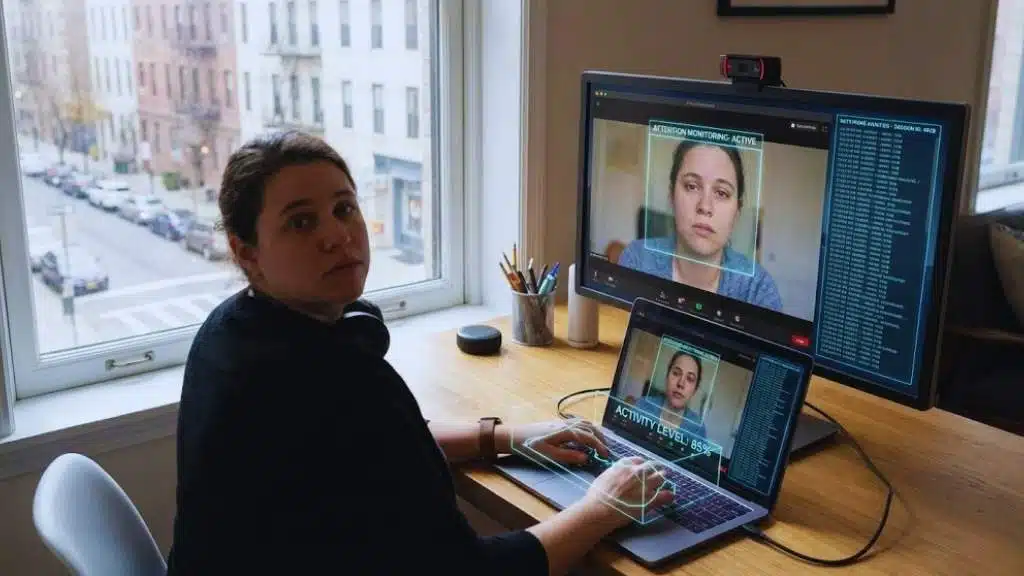

You know how it feels when you walk into a room and suspect someone has been talking about you? That uneasy sense of being watched is no longer just a feeling—it is the reality for millions of remote workers. In my 15 years as a lifestyle strategist helping executives in Dubai and the US build sustainable careers, I have seen this shift from trust to tracking completely reshape company cultures.

This is not just about checking in. It is about a fundamental change in how we define work. According to a February 2025 survey by ResumeBuilder, 74% of US employers now use online tracking tools to monitor their teams. That means if you are working remotely, the odds are high that an algorithm is watching your progress.

From what I have seen, the data suggests a clear divide. Companies that use these tools to support their teams thrive, while those that use them to police every keystroke often face high turnover and low morale. The ethics of AI surveillance has become a hot topic because this kind of digital monitoring can erode employees’ privacy and autonomy.

So, let’s break down exactly what these tools are doing, the specific legal lines they might be crossing, and the practical steps you can take to protect your privacy and your peace of mind.

What Is AI Surveillance in Remote Work?

AI surveillance in remote work uses artificial intelligence to monitor employees while they work from home. This technology goes far beyond a simple green dot next to your name. Modern tools use sophisticated algorithms to analyze behavior, flag “suspicious” gaps in activity, and even predict future performance.

To understand the scope, you need to look at the specific tools dominating the market. Hubstaff, for example, is widely used for its activity levels feature, which calculates an activity percentage from keyboard and mouse usage. It can also take random screenshots of your desktop to show whether you are working on the right task.
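To see why a percentage score built on input events is so blunt, here is a minimal sketch. It assumes the score is simply the share of seconds in a time slot that had at least one keyboard or mouse event. This is a hypothetical simplification for illustration, not Hubstaff’s actual formula. The `InputEvent` type, the `activity_percentage` function, and the 10-minute slot are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class InputEvent:
    """One keyboard or mouse event, timestamped in seconds from the start of the slot."""
    second: int
    source: str  # "keyboard" or "mouse"


def activity_percentage(events: list[InputEvent], slot_seconds: int = 600) -> float:
    """Hypothetical activity score: share of seconds in the slot with at least one input event.

    Assumes a 10-minute (600-second) slot. This is an illustration, not any vendor's formula.
    """
    # Several events in the same second count once.
    active_seconds = {e.second for e in events if 0 <= e.second < slot_seconds}
    return 100.0 * len(active_seconds) / slot_seconds


# Someone types steadily for 3 minutes, then reads a document for 7 minutes.
events = [InputEvent(second=s, source="keyboard") for s in range(180)]
print(f"{activity_percentage(events):.0f}%")  # 30%
```

Under this assumption, seven minutes of careful reading count as zero activity. A metric built purely on input events cannot tell thinking apart from absence.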

On the more intense end of the spectrum is Teramind. This software focuses heavily on security and “insider threat detection.” Its features include optical character recognition (OCR), which allows the system to read and record text on your screen in real time, and keystroke logging, which captures everything you type. For a manager, this offers granular control. For an employee, it can feel like having a boss breathing down your neck every second of the day.

Employers say this helps boost productivity and keeps data secure, but the practice raises big questions about privacy and ethics. Workers often do not give full consent for how their data is used. These systems can collect more than needed and may record personal details without telling employees.

Why Is AI Surveillance Growing in Remote Work Environments?

Remote work has changed how managers oversee employees. Without the ability to see someone physically at their desk, many leaders have turned to digital substitutes to regain a sense of control.

The numbers show just how deep this trend runs. Data from 2025 reveals that 90% of US firms now utilize some form of algorithmic management to assign, track, or evaluate work. This explosion in adoption is not accidental. It is driven by the promise of “guaranteed” ROI and the fear of time theft.

Employers want more control since they cannot see staff at their desks each day. AI technology offers instant reports on everything from screen time to keystrokes and video calls. Platforms like Insightful market themselves on the ability to identify “bottlenecks” and “overworked” employees, framing surveillance as a tool for operational efficiency rather than just discipline.

Still, these tools can put worker privacy, autonomy, and trust at risk, and they raise questions about consent for the use of personal data at work. When a piece of software flags a dedicated employee as “idle” simply because they were brainstorming on paper, the technology fails to capture the nuance of human work.

Key Ethical Issues in AI Surveillance

AI surveillance in remote work sparks big questions about privacy, fairness, and trust. When algorithms replace human judgment, the results can be messy and, in some cases, legally actionable.

Privacy and Consent Violations

Digital surveillance in work-from-home jobs can capture workers’ screens, keystrokes, and even conversations. The most significant issue here is the “blurring of lines.” A tool installed on a personal device might inadvertently capture private banking data or personal messages during a lunch break.

Legal standards are trying to catch up. In New York, Section 52-c of the Civil Rights Law requires private employers that monitor employees’ phone, email, or internet usage to give new hires written notice of that monitoring. This law forces a baseline of transparency, ensuring workers at least know they are being watched.

Workers may feel they have lost control of their own digital lives. This constant monitoring can erode job autonomy and make it hard to trust the organization’s intentions. Studies show that 1 in 3 remote employees worry about how much employers watch them.

Algorithmic Bias and Discrimination

AI surveillance tools can make unfair choices if the data used to train them is not diverse or balanced. A “productivity score” might penalize a worker with a disability who uses dictation software instead of a keyboard, flagging them as “inactive” due to a lack of keystrokes.
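The dictation example can be made concrete with a toy idle detector. The sketch below assumes a rule that flags any gap of more than five minutes between input events. It compares what happens when only keystrokes count as input with what happens when dictation also counts. The threshold, the `idle_gaps` function, and the event lists are hypothetical and are not taken from any real product.

```python
from datetime import datetime, timedelta

IDLE_THRESHOLD = timedelta(minutes=5)  # hypothetical cutoff


def idle_gaps(event_times: list[datetime], start: datetime,
              end: datetime) -> list[tuple[datetime, datetime]]:
    """Return every gap longer than IDLE_THRESHOLD between consecutive events in [start, end]."""
    points = [start] + sorted(t for t in event_times if start <= t <= end) + [end]
    return [(a, b) for a, b in zip(points, points[1:]) if b - a > IDLE_THRESHOLD]


start = datetime(2025, 3, 3, 9, 0)
end = start + timedelta(minutes=30)

# A worker dictating a report: speech-to-text produces output, but no keystrokes.
keystrokes: list[datetime] = []
dictation_segments = [start + timedelta(minutes=m) for m in range(0, 30, 2)]

# Keystroke-only rule: the whole half hour is one long "idle" gap.
print(len(idle_gaps(keystrokes, start, end)))  # 1
# Counting dictation as input: no idle gaps at all.
print(len(idle_gaps(keystrokes + dictation_segments, start, end)))  # 0
```

The same thirty minutes of work look either fully idle or fully active, depending only on which input channels the designer chose to count.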

We have already seen major legal consequences for this type of algorithmic failure. In a landmark case, iTutorGroup agreed in 2023 to pay $365,000 to settle a lawsuit brought by the US Equal Employment Opportunity Commission (EEOC). The lawsuit alleged that the company’s hiring software automatically rejected female applicants aged 55 or older and male applicants aged 60 or older. This was not a human judgment made case by case; the age cutoffs were programmed into the software.

Ethical problems increase when these AI systems are hard for employees to understand or question. Trust between employers and employees can decline if people see that these monitoring tools treat some groups unfairly. Ethical frameworks must address this issue so workplace surveillance does not harm worker rights or create inequality in remote workforce settings.

Lack of Transparency (The “Black Box” Problem)

Many remote workers cannot see how AI monitoring tools make decisions. They receive a performance rating at the end of the week, but the criteria used to generate it remain hidden inside a “black box.”

This leaves them unsure if the technology is fair, safe, or unbiased. Employers might use complex software that even experts find hard to explain. For instance, if an AI tool flags you as a “flight risk” because you updated your LinkedIn profile, you might be passed over for a promotion without ever knowing why.

Some companies collect large amounts of employee data without clear consent or open communication. Tools can record every keypress, website visit, or screen capture while hiding how these details shape evaluations and reports. Without clear rules about transparency, worker anxiety over privacy and ethical risks grows.

Function Creep and Data Misuse

AI monitoring tools in remote work often start by tracking simple things, like logins and app usage. Over time, these tools may begin to collect extra data outside their original purpose. This is called function creep.

A disturbing example of this is the rise of “emotion detection” features in modern bossware. Some tools now claim to analyze an employee’s facial expressions via webcam to determine if they are “engaged” or “stressed.” What starts as time tracking morphs into a pseudo-psychological assessment that employees never agreed to.

Employers might use this information in ways that harm privacy or workplace wellbeing. Data collected for checking attendance could later be used to judge someone’s behavior or job security unfairly. These risks show how easy it is for basic AI employee monitoring to turn into a larger threat to trust and autonomy at work.

Power Imbalances Between Employers and Employees

Employers hold much more control than workers in remote jobs with AI surveillance. They can track every mouse click, document opened, and break taken. This imbalance creates a culture of fear rather than accountability.

A 2024 study from Cornell University highlighted the fallout of this dynamic. The researchers found that people monitored by AI, rather than by humans, felt they had less autonomy and showed more “resistance behaviors.” Instead of working harder, they were more likely to criticize the monitoring, perform worse, and say they wanted to quit.

Productivity tracking tools aim to increase efficiency but often weaken workplace privacy and well-being. As a result, fear of constant monitoring affects morale and makes speaking up harder for many people at home. These problems show the need for strong ethical guardrails around digital monitoring at work.

Legal and Ethical Frameworks for AI Surveillance

Laws and clear ethical rules shape how companies use AI surveillance. While technology moves fast, the legal landscape in the US is beginning to erect significant barriers against unchecked monitoring.

“The differentiator is not adoption. The differentiator is restraint, transparency, and how leaders use the information.” — High5Test 2025 Report

Transparency and Explainability Requirements

Clear rules must guide how companies use AI monitoring tools in remote work. The gold standard for this is explainability—the idea that a human should be able to understand how an AI reached a decision.

This honesty helps build trust and addresses fears about privacy violations or hidden motives. If an employee is flagged for low productivity by a machine, they deserve to know how the algorithm judged them. Was it low keystrokes? Time spent on a specific website? Without this clarity, doubts grow over fairness and ethical considerations.
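One way to give employees that answer is to use a score whose parts can be itemized. The sketch below assumes a simple weighted sum. The feature names and weights are invented for illustration, and real products may rely on models that cannot be decomposed this neatly.

```python
# Hypothetical weights for a transparent, additive productivity score.
WEIGHTS = {
    "tasks_completed": 10.0,
    "keystrokes_per_hour": 0.01,
    "minutes_on_flagged_sites": -0.5,
}


def explain_score(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the total score and each feature's contribution, largest impact first."""
    contributions = [(name, WEIGHTS[name] * features.get(name, 0.0)) for name in WEIGHTS]
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    total = sum(value for _, value in contributions)
    return total, contributions


total, parts = explain_score(
    {"tasks_completed": 4, "keystrokes_per_hour": 1200, "minutes_on_flagged_sites": 30}
)
print(f"score = {total:.1f}")
for name, value in parts:
    print(f"  {name}: {value:+.1f}")
```

Here the employee can see that 30 minutes on flagged sites cost them 15 points. They can then dispute that specific input instead of arguing with an unexplained number.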

Accountability and Oversight Mechanisms

Clear rules and strong oversight help prevent the misuse of AI surveillance in remote work. Employers must put checks in place to watch how monitoring tools collect, store, and use data.

The National Labor Relations Board (NLRB) has been a key player here. Shifts at the agency in 2025 have created uncertainty around the “Abruzzo Memo” (GC 23-02), which addressed intrusive electronic monitoring. One point has not gone away: using surveillance to interfere with workers’ rights to organize or discuss wages is a major legal risk.

Rules should demand clear record-keeping around employee monitoring decisions. Anonymous reporting channels let staff point out mistakes or unfair treatment without fear of losing their jobs. Strong oversight builds trust within organizations and makes sure workplace surveillance follows strict legal standards.

Data Minimization and Protection Standards

Data minimization limits the amount of information collected about workers during AI surveillance. The rule of thumb I always recommend is simple: if you don’t need the data to measure a specific outcome, don’t collect it.

For example, if a company installs monitoring tools on devices employees use at home, those tools must not collect private photos or unrelated browser history. Protection standards require strong security to keep employee data safe from leaks and attackers, including strong passwords and access controls, encryption, and regular audits of these systems.
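The “if you don’t need it, don’t collect it” rule can be enforced in software. One approach is to declare, for each monitoring purpose, which fields are allowed, and to drop everything else before storage. This is a minimal sketch; the purposes, field names, and the `minimize` function are hypothetical.

```python
# Hypothetical allowlist: each monitoring purpose may keep only these fields.
ALLOWED_FIELDS = {
    "attendance": {"employee_id", "login_time", "logout_time"},
    "project_tracking": {"employee_id", "task_id", "hours_logged"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields allowed for the stated purpose; unknown purposes keep nothing."""
    allowed = ALLOWED_FIELDS.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "employee_id": "e-102",
    "login_time": "08:58",
    "logout_time": "17:05",
    "browser_history": ["bank.example", "news.example"],
    "screenshot_path": "/tmp/cap_0915.png",
}
print(minimize(raw, "attendance"))
# {'employee_id': 'e-102', 'login_time': '08:58', 'logout_time': '17:05'}
```

Failing closed on unknown purposes is a deliberate choice. If someone later tries to reuse attendance data for a new purpose such as “emotion_detection,” the filter returns nothing until that purpose is explicitly added. This is a small guard against function creep.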

Inclusivity to Address Algorithmic Bias

Companies need to design AI surveillance systems that include all types of employees. If the data used for monitoring only reflects certain groups, workers outside those groups may face unfair treatment or discrimination.

For instance, biased algorithms can flag certain behaviors as unproductive just because they differ from the majority. This kind of bias hurts workplace equity, trust in organizations, and employee rights. Ethical considerations push for diverse input when creating and testing these tools.

Balancing Productivity and Employee Rights

Finding the right balance between workplace monitoring and individual privacy stirs debate among many remote workers. Companies must weigh how much surveillance supports productivity without harming trust or personal freedom—an ongoing challenge in today’s digital workplaces.

| Surveillance Approach | Employee Impact | Better Alternative |
|---|---|---|
| Keystroke Logging | High stress, privacy invasion, fear of making mistakes. | Outcome-Based Goals (Measure completed projects, not typing). |
| Screen Recording | Feeling of “Big Brother,” performance anxiety. | Regular Check-ins (Weekly video calls to discuss blockers). |
| “Idle Time” Tracking | Prevents deep thinking, encourages “mouse jiggling.” | Results-Only Work Environment (ROWE) models. |

The Role of Consent in AI Monitoring

Consent protects worker privacy and autonomy during AI surveillance. Unfortunately, “consent” is often buried in a dense employment contract that no one reads. Explicit consent means workers know what data is collected, how it will be used, and who can access it.

Workers should have a choice about joining these systems unless there is a legal reason for mandatory monitoring. Without true consent, AI surveillance can quickly erode trust between staff and management. Companies must explain AI monitoring rules in simple terms so everyone understands the risks and benefits.
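The three elements of explicit consent described above (what is collected, how it is used, and who can access it) map naturally onto a record that a system could check before collecting anything. This is a hypothetical data structure meant to illustrate the idea, not a legal template. The `ConsentRecord` class and the `may_collect` function are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsentRecord:
    """What the worker was told and explicitly agreed to (hypothetical structure)."""
    employee_id: str
    data_collected: frozenset[str]  # what is collected, e.g. {"app_usage"}
    purpose: str                    # how the data will be used
    accessible_by: frozenset[str]   # who can see it
    granted: bool = False


def may_collect(consent: ConsentRecord, data_type: str, purpose: str, viewer: str) -> bool:
    """Allow collection only if every element matches what the worker agreed to."""
    return (
        consent.granted
        and data_type in consent.data_collected
        and purpose == consent.purpose
        and viewer in consent.accessible_by
    )


consent = ConsentRecord(
    employee_id="e-102",
    data_collected=frozenset({"app_usage"}),
    purpose="project_billing",
    accessible_by=frozenset({"team_lead"}),
    granted=True,
)
print(may_collect(consent, "app_usage", "project_billing", "team_lead"))  # True
print(may_collect(consent, "webcam", "project_billing", "team_lead"))     # False
```

Each check corresponds to one element of explicit consent. A new data type, a new use, or a new viewer therefore requires going back to the worker rather than quietly expanding the monitoring.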

Encouraging Collaboration Over Surveillance

Many remote workers feel uneasy about AI monitoring. Tracking every move can reduce trust and harm workplace wellbeing. Instead, companies should focus on teamwork and open feedback to support both productivity and employee rights.

Using digital tools for collaboration can replace invasive surveillance technology. Platforms like Slack or Microsoft Teams allow people to share ideas in real time without pressure from constant monitoring. This approach protects worker rights, strengthens workplace privacy, and builds genuine trust in organizations.

The Future of AI Surveillance in Remote Work

AI tools will keep changing how companies watch and help remote teams. New rules and smarter systems could shift what workers expect from their jobs…and how much privacy they get.

Emerging Technologies and Ethical Safeguards

New surveillance technology uses artificial intelligence to track employee productivity, gather work data, and even monitor behavior. We are moving toward “Wellness Ware”—tools that claim to monitor for burnout but rely on the same invasive data collection methods.

Lack of consent or privacy rules can harm workplace wellbeing and trust among staff. To protect workers’ rights, ethical safeguards must keep pace with new monitoring tools. Clear consent rules set boundaries for what data employers collect and how they use it.

Global Regulations and Their Impact

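Regulators around the world are setting stricter rules for AI surveillance in remote work. The European Union’s GDPR, in force since 2018, requires companies to have a lawful basis for processing employee data, to be transparent about monitoring, and to collect no more data than they need. Because of the power imbalance between employers and staff, consent alone is rarely treated as a valid basis at work. In the United States, California has taken a notable step with the California Delete Act (SB 362).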

Starting in January 2026, the act’s Delete Request and Opt-out Platform lets Californians ask every registered data broker to delete their personal data through a single request. This is a meaningful step for digital privacy. For third-party firms that qualify as data brokers, the data they hold about workers is no longer theirs to keep forever. Data that a monitoring vendor processes on an employer’s behalf, however, may fall outside the act.

Strict global standards push firms to limit the amount of data they collect and use advanced security steps. These regulations try to prevent privacy breaches, algorithmic bias, and unethical data misuse that can erode trust between workers and leaders.

Final Thoughts

AI surveillance in remote work affects privacy, trust, and employee rights at every step. Clear rules, honest communication, and smart use of data can help keep workplaces safer and fairer for everyone.

Tools that respect people’s consent and are transparent about what they collect can support both productivity and well-being without crossing the line. If you want to learn more or improve your own work environment, guides on digital privacy are a good place to start.

Every small step toward ethical monitoring builds stronger teams where respect leads the way. Your choices today shape a healthier future for all remote workers.