Neuromorphic computing, brain-inspired hardware designed to run AI with less energy, is moving from lab prototypes to large deployed systems. Researchers and national labs are now testing whether spiking, event-driven designs can cut costs and ease power limits in real-world computing.

What neuromorphic computing is, and why it matters now

Neuromorphic computing is a style of computing that takes cues from how biological brains process information. Instead of pushing every part of a chip forward on a rigid clock cycle, neuromorphic systems often work in an event-driven way: they do meaningful computation mainly when something changes—like a new signal arriving from a sensor or a “spike” traveling across a network.
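To make the clocked-versus-event-driven distinction concrete, here is a minimal Python sketch of a single leaky integrate-and-fire (LIF) neuron, a common spiking-neuron model. It is an illustration under simplifying assumptions: the function names, the leak constant `tau`, and the threshold are hypothetical choices, not the behavior of any particular chip. The clocked version updates the neuron on every tick, while the event-driven version does work only when an input spike arrives and applies the leak for the whole quiet gap in one step.

```python
import math

def clocked_lif(inputs, n_steps, tau=20.0, threshold=1.0):
    """Clock-driven LIF: update the membrane potential on every time step.

    inputs: dict mapping time step -> input weight arriving at that step.
    Returns (spike_times, number_of_updates).
    """
    v, spikes, updates = 0.0, [], 0
    decay = math.exp(-1.0 / tau)  # leak factor applied once per tick
    for t in range(n_steps):
        v = v * decay + inputs.get(t, 0.0)  # work happens on every tick
        updates += 1
        if v >= threshold:
            spikes.append(t)
            v = 0.0  # reset after firing
    return spikes, updates

def event_driven_lif(inputs, tau=20.0, threshold=1.0):
    """Event-driven LIF: update only when an input event arrives."""
    v, last_t, spikes, updates = 0.0, 0, [], 0
    for t in sorted(inputs):
        v *= math.exp(-(t - last_t) / tau)  # leak for the entire gap at once
        v += inputs[t]
        last_t = t
        updates += 1
        if v >= threshold:
            spikes.append(t)
            v = 0.0  # reset after firing
    return spikes, updates

events = {5: 0.6, 8: 0.6, 500: 0.3}  # sparse input: 3 events over 1,000 ticks
print(clocked_lif(events, n_steps=1000))  # ([8], 1000)
print(event_driven_lif(events))           # ([8], 3)
```

Both versions fire at the same time step on this input, but the event-driven one performs 3 updates instead of 1,000. That gap is the intuition behind the energy argument; actual hardware savings depend on much more than update counts.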

That matters because many of today’s AI systems are expensive to run. Large models require high-performance chips, large memory bandwidth, and massive data movement between memory and processors. That translates into rising electricity use, rising cooling needs, and rising cost—especially in data centers. At the same time, more AI is being pushed out of the cloud and into the real world: phones, cameras, robots, industrial sensors, vehicles, and medical devices that must react instantly without sending every decision to the internet.

Neuromorphic computing aims to meet that moment by improving efficiency in a different way than the usual “make the GPU bigger” approach. The most common promises are:

- Lower power for always-on intelligence. Many real devices need to listen, watch, and react 24/7. Event-driven chips can potentially avoid doing constant work when inputs are quiet.

- Fast response with less overhead. If computation triggers only when needed, latency can drop for specific tasks like detection and control.

- Better fit for time-based signals. Real-world signals are continuous and unfold over time. Spiking approaches encode timing naturally, which may help with streaming sensor data.

- New paths to “learning on device.” Some neuromorphic research focuses on whether systems can adapt locally without retraining giant models in the cloud.
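The point about time-based signals can be illustrated with send-on-delta encoding, one common way to turn a sampled sensor stream into sparse events. It is similar in spirit to how event cameras report per-pixel brightness changes, although real sensors do this in circuitry rather than software. The helper below is a hypothetical Python sketch: it emits an "up" or "down" event each time the signal moves by at least a fixed `threshold` since the last event, and stays silent otherwise.

```python
def delta_encode(samples, threshold):
    """Convert a sampled signal into (index, polarity) events.

    An event fires whenever the signal has moved at least `threshold`
    away from the reference level set by the previous event.
    """
    events = []
    ref = samples[0]
    for i, x in enumerate(samples[1:], start=1):
        # A large jump can produce several events at the same index.
        while x - ref >= threshold:
            ref += threshold
            events.append((i, +1))
        while ref - x >= threshold:
            ref -= threshold
            events.append((i, -1))
    return events

# A flat signal with one step change: only the change produces events.
signal = [0.0] * 50 + [1.0] * 50
print(delta_encode(signal, threshold=0.25))
```

Here 100 samples collapse to four "up" events at index 50; an event-driven processor downstream would do work only for those four.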

It is important to be clear about what neuromorphic computing is not, at least for now. It is not a full replacement for conventional CPUs. It is not a guaranteed replacement for GPUs in training the largest modern deep-learning models. Instead, it is best understood as a new class of compute being tested for the parts of AI where energy, latency, and real-time interaction are the biggest constraints.

Major deployments: Hala Point and NERL Braunfels signal a shift to scale

For years, neuromorphic computing was often discussed in terms of small chips, demos, and research papers. What has changed is that the field is now showing larger deployments intended to support serious experimentation at scale—especially around energy efficiency and real workloads.

Two widely discussed systems illustrate this shift: Hala Point (built around Intel’s Loihi 2 neuromorphic processors) and NERL Braunfels (built around SpiNNaker2). These platforms are not identical in design philosophy, but they share a common goal: make brain-inspired computing large enough that researchers can test real applications and measure results under practical constraints.

Key milestones and claims at a glance

| System | Organization / Site | Public timing | Scale (reported) | Notable power / efficiency claims (reported) | Main purpose |
|---|---|---|---|---|---|
| Hala Point (Loihi 2) | Installed at Sandia National Laboratories | Announced April 2024 | 1,152 Loihi 2 processors; ~1.15B “neurons” and ~128B “synapses” | Up to ~2,600 W max power; high TOPS/W figures reported for certain workloads | Research platform for scalable neuromorphic AI |
| NERL Braunfels (SpiNNaker2) | Sandia + SpiNNcloud collaboration | Publicly detailed June 2025 (arrived March) | ~175M “neurons” | Reported ~18× more efficient than GPUs for specific target workloads | Research platform for efficiency-focused brain-style computing |

These numbers are reported using “neurons” and “synapses” as convenient scaling terms. They do not mean the systems replicate the human brain, nor do they guarantee performance on every AI task. What they do show is that neuromorphic hardware is now being built and deployed in sizes large enough to matter for experiments beyond toy workloads.
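A quick back-of-the-envelope check helps put the Hala Point figures in proportion. The Python snippet below simply divides the reported system totals from the table by the reported processor count. The results are averages derived from those totals, not per-chip specifications. The power figure is a system-level maximum, so the per-processor value should not be read as the measured draw of a single chip.

```python
# Reported Hala Point totals (from the table above).
chips = 1_152
neurons = 1.15e9
synapses = 128e9
max_power_w = 2_600

# Simple averages per Loihi 2 processor (derived, not official specs).
print(f"neurons per chip:  {neurons / chips:,.0f}")
print(f"synapses per chip: {synapses / chips:,.0f}")
print(f"max system watts per chip: {max_power_w / chips:.2f}")
```

This works out to roughly one million “neurons” and about 111 million “synapses” per processor, with a system-level maximum power budget averaging about 2.3 W per processor.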

Why scale changes the conversation

Scale brings three practical benefits:

- Realistic stress testing. Many AI and simulation problems do not reveal their true costs at small sizes. Larger systems reveal bottlenecks in communication, memory access, scheduling, and software tooling.

- More credible measurement. Efficiency claims are easier to validate when systems run sustained workloads under controlled conditions, not just short demos.

- Ecosystem pressure. When hardware becomes big and expensive enough to be treated as infrastructure, it forces the field to improve software, standards, and benchmarks.

What these systems are trying to prove

Both Hala Point and NERL Braunfels are part of a broader attempt to answer a few hard questions:

- Can event-driven hardware deliver repeatable energy savings on important workloads?

- Can neuromorphic platforms be programmed reliably by non-specialists, not just by expert labs?

- Can they scale without communication overhead wiping out the expected efficiency gains?

- Can they integrate into real computing environments—alongside CPUs, GPUs, and conventional accelerators?

If the answer is “yes” for even a subset of workloads, neuromorphic computing could become a practical addition to future computing stacks—especially in power-limited environments.
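The communication question can be framed with a deliberately simple toy model. Assume, hypothetically, that chips sit on a k × k two-dimensional mesh, that each spike travels between two independently chosen random chips, and that every spike costs a fixed compute energy plus a fixed energy per mesh hop. For that setup the average Manhattan distance is exactly 2(k^2 - 1)/(3k) hops. The Python sketch below uses it to show how the routing share of energy grows with mesh size. The energy constants are placeholders, not measurements from Hala Point, NERL Braunfels, or any other system.

```python
def avg_hops(k):
    """Exact mean Manhattan distance between two independent, uniformly
    random nodes on a k x k mesh."""
    return 2 * (k * k - 1) / (3 * k)

def comm_share(k, e_compute=1.0, e_hop=0.2):
    """Fraction of per-spike energy spent on routing in this toy model.

    e_compute and e_hop are arbitrary placeholder units, not measured values.
    """
    e_comm = avg_hops(k) * e_hop
    return e_comm / (e_compute + e_comm)

for k in (2, 8, 32):
    print(f"{k * k:5d} chips: avg hops {avg_hops(k):5.2f}, "
          f"routing share {comm_share(k):.0%}")
```

Under the uniform-traffic assumption, routing goes from a small fraction of spike energy on a 4-chip mesh to the dominant cost on a 1,024-chip mesh. Real workloads are rarely uniform, which is why mapping strongly connected neurons onto nearby cores tends to matter at scale.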

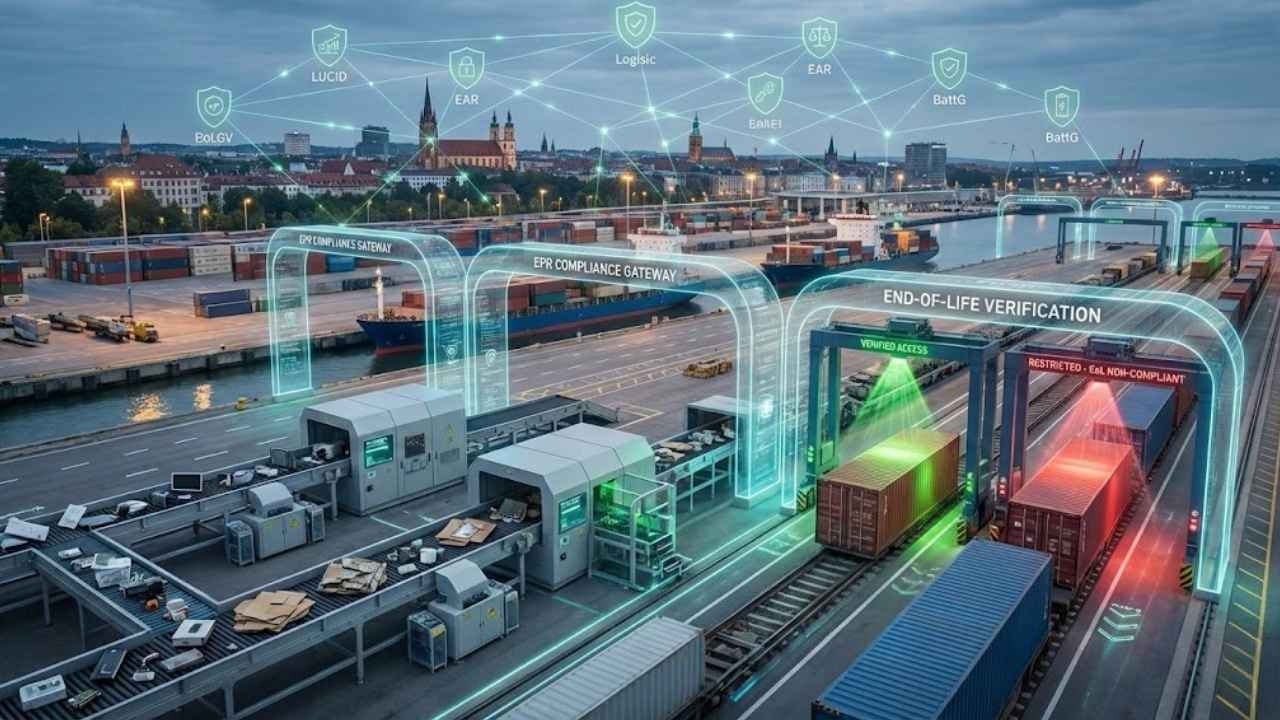

The software, standards, and benchmarking push behind the hardware

Hardware alone rarely changes an industry. The technology becomes useful only when developers can build applications without reinventing everything for each new chip. Neuromorphic computing has long struggled with fragmentation: different platforms, different programming models, and different performance metrics that make it hard to compare results fairly.

That is why the software layer is now becoming a central part of the story. Three connected efforts matter most: developer frameworks, interoperability standards, and benchmarks.

Frameworks that aim to make neuromorphic usable

Modern AI grew fast partly because tools became accessible: shared libraries, model repositories, and workflows that let developers iterate quickly. Neuromorphic computing is trying to build a similar foundation.

Open frameworks in the ecosystem, such as Intel’s open-source Lava framework, emphasize ideas like:

- Modular building blocks so networks, sensors, and learning rules can be reused.

- Backends that allow experimentation on conventional hardware before deployment on neuromorphic chips.

- Event-based messaging so systems can represent sparse activity efficiently.

The goal is not to force everyone to write low-level code. It is to make neuromorphic development feel closer to modern AI development: define a model, test it, measure it, and deploy it.
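The "define, test, measure, deploy" workflow can be sketched in a few lines. The Python below is a hypothetical mini-framework, not the API of Lava or any other real toolkit. A network is described once as reusable blocks (here, non-leaky integrate-and-fire layers), spikes travel as sparse lists of active neuron indices (event-based messaging), and a pluggable backend object decides where the network runs. Only a reference CPU backend is implemented; a hardware backend would implement the same `run` method.

```python
from dataclasses import dataclass, field
from typing import Protocol

@dataclass
class SpikingLayer:
    """Reusable building block: integrate-and-fire neurons (no leak)."""
    weights: dict[int, dict[int, float]]  # weights[src][dst] = synaptic weight
    threshold: float = 1.0
    potential: dict[int, float] = field(default_factory=dict)

    def step(self, active: list[int]) -> list[int]:
        """Consume a sparse list of input spikes and return output spikes."""
        touched = set()
        for src in active:  # work scales with active inputs, not layer size
            for dst, w in self.weights.get(src, {}).items():
                self.potential[dst] = self.potential.get(dst, 0.0) + w
                touched.add(dst)
        fired = sorted(d for d in touched if self.potential[d] >= self.threshold)
        for d in fired:
            self.potential[d] = 0.0  # reset neurons that fired
        return fired

class Backend(Protocol):
    def run(self, layers: list[SpikingLayer],
            inputs: list[list[int]]) -> list[list[int]]: ...

class CpuReferenceBackend:
    """Runs the network in plain Python for testing before deployment."""
    def run(self, layers, inputs):
        outputs = []
        for active in inputs:  # one list of spiking indices per time step
            for layer in layers:
                active = layer.step(active)
            outputs.append(active)
        return outputs

net = [SpikingLayer(weights={0: {0: 0.6}, 1: {0: 0.6}})]
backend: Backend = CpuReferenceBackend()
print(backend.run(net, inputs=[[0], [1], []]))  # [[], [0], []]
```

Because the network description never refers to the backend, the same `net` could in principle be handed to a different backend once the model behaves as expected on the CPU.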

Interoperability: a common “model language” for neuromorphic systems

Another barrier is portability. A spiking model that runs on one simulator may not translate cleanly to another hardware platform. To fix that, researchers are proposing intermediate representations—formats that describe the essential computation in a platform-independent way.

This approach mirrors what happened in other fields: when shared representations emerge, ecosystems grow faster because tools can interconnect. Interoperability also reduces vendor lock-in. For institutions investing in neuromorphic hardware, that matters: they want the freedom to move workloads as platforms evolve.
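A minimal sketch of the intermediate-representation idea, in Python: the model is stored as plain data (node types, parameters, and edges) that any tool can serialize, and each platform supplies its own function to "lower" that description into its native form. The schema and both lowering functions are hypothetical illustrations. Real proposals, such as the Neuromorphic Intermediate Representation (NIR), define their own node types and file formats.

```python
import json

# Platform-independent description of a tiny network (hypothetical schema).
graph = {
    "nodes": {
        "input": {"type": "Input", "shape": 2},
        "fc": {"type": "Linear", "weights": [[0.5, 0.5]]},
        "lif": {"type": "LIF", "tau": 20.0, "threshold": 1.0},
        "output": {"type": "Output", "shape": 1},
    },
    "edges": [["input", "fc"], ["fc", "lif"], ["lif", "output"]],
}

def to_simulator(g):
    """Hypothetical simulator lowering: an ordered list of (name, op type).
    Assumes the edges form a single chain."""
    order = [src for src, _ in g["edges"]] + [g["edges"][-1][1]]
    return [(name, g["nodes"][name]["type"]) for name in order]

def to_chip_config(g, time_step_ms=1.0):
    """Hypothetical chip lowering: turn each LIF time constant into a
    per-step decay factor (first-order approximation of exp(-dt/tau))."""
    cfg = []
    for name, node in g["nodes"].items():
        if node["type"] == "LIF":
            decay = round(1 - time_step_ms / node["tau"], 3)
            cfg.append({"core": name, "decay": decay,
                        "threshold": node["threshold"]})
    return cfg

text = json.dumps(graph)      # the shared, portable artifact
restored = json.loads(text)   # any tool can read it back
print(to_simulator(restored))
print(to_chip_config(restored))
```

The design choice is that neither target owns the model: both read the same serialized file, so moving a workload means writing a new lowering function rather than rewriting the network.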

Benchmarks: the key to separating promise from performance

Neuromorphic computing has often been criticized for unclear comparisons. Some claims look strong in narrow conditions but do not translate broadly. That is why benchmarking frameworks are becoming a big deal.

A useful benchmark effort does three things:

- Defines tasks clearly. What is being measured? Detection? Classification? Control? Streaming inference?

- Separates algorithm vs. system. A brilliant spiking model is different from a fast chip. Benchmarks should show both.

- Uses realistic metrics. Not just accuracy, but energy, latency, throughput, and stability under real inputs.
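In code, these three principles amount to recording more than one number per run. The Python sketch below is a hypothetical result record; the field names are illustrative and not taken from any published benchmark suite. It keeps the task, the algorithm, and the hardware system as separate fields, and derives energy per inference, throughput, and median and tail latency from raw measurements instead of reporting accuracy alone.

```python
import statistics
from dataclasses import dataclass

@dataclass
class BenchmarkRun:
    task: str                  # what is measured, e.g. streaming keyword spotting
    algorithm: str             # the model, reported separately from...
    system: str                # ...the platform it ran on
    correct: int
    total: int
    energy_joules: float       # measured over the whole run
    wall_time_s: float
    latencies_ms: list[float]  # one latency per inference

    def summary(self) -> dict:
        cuts = statistics.quantiles(self.latencies_ms, n=100)  # 99 cut points
        return {
            "accuracy": self.correct / self.total,
            "energy_per_inference_mJ": 1e3 * self.energy_joules / self.total,
            "throughput_per_s": self.total / self.wall_time_s,
            "p50_latency_ms": statistics.median(self.latencies_ms),
            "p99_latency_ms": cuts[98],
        }

# Made-up numbers for illustration only.
run = BenchmarkRun(task="streaming keyword spotting", algorithm="snn-example",
                   system="hypothetical-chip", correct=940, total=1000,
                   energy_joules=0.5, wall_time_s=2.0,
                   latencies_ms=[1.8, 2.0, 2.1, 2.4, 5.0] * 200)
print(run.summary())
```

Reporting p99 next to the median matters for real-time workloads: a system with a good average but occasional long stalls can still miss control deadlines.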

Why this matters for adoption

If neuromorphic computing is to move into products and infrastructure, buyers will demand the same things they demand from other compute platforms:

- Clear programming interfaces

- Reliable toolchains

- Debugging support

- Performance predictability

- Transparent evaluation

In many ways, the current moment in neuromorphic computing resembles early-stage GPU computing: powerful ideas existed, but broad adoption required a software revolution.

Where neuromorphic computing could win first, and what it likely won’t replace yet

The most important practical question is simple: What is neuromorphic computing for? The most credible near-term answers focus on situations where traditional architectures pay a steep price.

Likely early wins

Neuromorphic approaches are most promising in environments with one or more of the following conditions:

- Always-on sensing: Cameras, microphones, radar, and industrial sensors that must monitor continuously. If the input is sparse or changes only occasionally, event-driven computation may save energy.

- Edge devices with tight power budgets: Drones, wearables, mobile robots, and remote monitoring systems where every watt matters and cloud connectivity may be unreliable.

- Real-time control and robotics: Systems that must react quickly to changing conditions, often using streaming data. Timing is central, and spiking approaches naturally represent time.

- Scientific and national-lab experimentation: Institutions testing alternative computing for simulations, optimization, and domain-specific workloads under power and scaling constraints.
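A rough way to reason about "sparse or changes only occasionally" is a break-even calculation. Suppose, hypothetically, that a dense pipeline spends a fixed energy per input on every frame, while an event-driven pipeline spends more per event (routing and bookkeeping are not free) plus a fixed idle cost. The event-driven design then wins only while the fraction of active inputs stays below a break-even point. The Python below computes that point; all energy constants are placeholder assumptions, not figures for any real chip.

```python
def dense_energy(n_inputs, e_dense):
    """Energy per frame when every input is processed on every frame."""
    return n_inputs * e_dense

def event_energy(n_inputs, activity, e_event, e_idle):
    """Energy per frame when only the active fraction of inputs is
    processed, plus a fixed idle/overhead cost."""
    return activity * n_inputs * e_event + e_idle

def break_even_activity(n_inputs, e_dense, e_event, e_idle):
    """Activity fraction at which both designs use the same energy."""
    return (n_inputs * e_dense - e_idle) / (n_inputs * e_event)

# Placeholder constants (arbitrary units): an event costs 4x a dense op.
n, e_d, e_e, e_i = 10_000, 1.0, 4.0, 1_000.0
a_star = break_even_activity(n, e_d, e_e, e_i)
print(f"event-driven uses less energy below {a_star:.1%} activity")
for a in (0.01, 0.10, 0.50):
    print(f"activity {a:.0%}: dense {dense_energy(n, e_d):,.0f}, "
          f"event-driven {event_energy(n, a, e_e, e_i):,.0f}")
```

With these placeholder constants the break-even point is 22.5% activity. A mostly quiet sensor stays well below it, while a busy scene can push an event-driven design past it; the per-event premium and idle cost are exactly the quantities that benchmarks need to measure.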

Where GPUs still dominate

Neuromorphic computing does not automatically outperform GPUs across the board. GPUs remain extremely strong in:

- Large-scale training of deep learning models with dense matrix operations

- General-purpose high-throughput inference at scale in data centers

- Mature developer ecosystems with proven reliability and wide tool support

Neuromorphic platforms may complement GPUs rather than replace them. In a realistic future, a system might use:

- GPUs for training and heavy batch inference

- Neuromorphic accelerators for always-on, low-power, real-time tasks

- CPUs for orchestration, control flow, and general computing
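This division of labor can be expressed as a simple routing policy. The Python sketch below is hypothetical: the task attributes (`is_training`, `batch_size`, `always_on`, `power_budget_w`, `is_control_flow`) and the thresholds are illustrative assumptions, and a real heterogeneous system would base placement on profiling data rather than hand-written rules.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Device(Enum):
    GPU = "gpu"
    NEUROMORPHIC = "neuromorphic"
    CPU = "cpu"

@dataclass
class Task:
    name: str
    is_training: bool = False
    batch_size: int = 1
    always_on: bool = False
    power_budget_w: Optional[float] = None  # None means no hard limit
    is_control_flow: bool = False

def route(task: Task) -> Device:
    """Illustrative placement rules mirroring the GPU/neuromorphic/CPU split."""
    if task.is_control_flow:
        return Device.CPU           # orchestration and general computing
    if task.is_training or task.batch_size >= 32:
        return Device.GPU           # training and heavy batch inference
    if task.always_on or (task.power_budget_w is not None
                          and task.power_budget_w < 1.0):
        return Device.NEUROMORPHIC  # always-on, low-power, real-time work
    return Device.GPU               # default: general-purpose inference

tasks = [Task("train detector", is_training=True),
         Task("wake-word listener", always_on=True, power_budget_w=0.05),
         Task("pipeline scheduler", is_control_flow=True)]
for t in tasks:
    print(t.name, "->", route(t).value)
```

Checking control flow first keeps orchestration on the CPU even for tasks that also touch accelerators; the order of the rules is itself a design decision that a real scheduler would need to justify.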

A practical comparison for decision-makers

| Dimension | Conventional AI stack (CPU/GPU) | Neuromorphic computing (typical goal) |
|---|---|---|
| Strength | High throughput, mature tools | Power efficiency for event-driven tasks |
| Best fit | Dense compute, big training jobs | Sparse, streaming, time-based workloads |
| Developer ecosystem | Very mature | Still emerging |
| Key risk | Power and cost scaling | Fragmentation, limited portability, unclear workload fit |
| Near-term role | Backbone of AI today | Specialized accelerator + research platform |

This is why the current phase of neuromorphic computing is heavily focused on finding the right workloads and proving value with repeatable, benchmarked results.

What to watch next

Neuromorphic computing is entering a more serious phase. The conversation is shifting from “interesting research idea” to “installed systems with measurable performance.” Large deployments at major labs indicate growing confidence that brain-inspired computing is worth testing as an efficiency strategy, especially as AI pushes power limits both in the cloud and at the edge.

Here is what to watch over the next 12–24 months:

- Benchmark results that hold up across platforms. If multiple systems show consistent efficiency and latency advantages on the same tasks, adoption becomes much easier to justify.

- Tooling improvements that reduce complexity. Better compilers, debugging tools, and model portability will matter as much as hardware innovation.

- Clear workload success stories. The field needs practical examples—edge sensing, robotics, optimization, or simulation—where neuromorphic systems beat conventional stacks in total cost or energy.

- Hybrid computing architectures. The most realistic future may be heterogeneous: neuromorphic accelerators working alongside CPUs and GPUs, each handling the tasks they do best.

Neuromorphic computing may not be the headline replacement for today’s AI hardware, but it is increasingly positioned as a serious contender for the parts of AI where efficiency and real-time behavior matter most.