Just two months after its quiet launch, AI hardware startup Unconventional AI has closed one of the largest seed rounds on record, raising $475 million at a $4.5 billion valuation. The deal, announced by founder Naveen Rao on Sunday, signals strong investor appetite for new answers to the soaring energy costs of AI computing. Today’s AI systems, especially those built on GPUs, draw enormous amounts of electricity, and Unconventional AI promises far more efficient hardware designed from the ground up for AI workloads.

The funding round came together at breakneck speed, co-led by venture firms Andreessen Horowitz (a16z) and Lightspeed Venture Partners. It also drew in Lux Capital, DCVC, Databricks (the company Rao left to start this venture), and Amazon founder Jeff Bezos. Rao himself put in $10 million on the same terms as the other investors, underscoring his belief in the mission. The round may not be finished either: Rao hinted that this is the opening tranche of a potential $1 billion total raise, with the team still assessing how much more capital it will take on as it ramps up. Reports in October had pointed to a target of $1 billion at a $5 billion valuation, so the announced round lands close to that valuation with roughly half of the capital committed so far.

Rounding out the founding team are co-founders Michael Carbin of MIT, Sara Achour, and MeeLan Lee, who bring a mix of academic rigor and practical tech experience to this ambitious redesign. Rao first teased the company on X (formerly Twitter) in September 2025, right after departing Databricks, framing it as a rethink of computer architecture to create a “new substrate for intelligence” that is as power-efficient as biological systems.

Pioneering Energy-Efficient AI Hardware from Scratch

At its core, Unconventional AI is engineering a fundamentally new type of computer optimized for AI applications, prioritizing energy efficiency over the brute-force power of current setups. Rao, armed with a PhD in neuroscience and electrical engineering degrees from Stanford and Brown, draws inspiration from biology’s efficiency: the human brain handles complex tasks on roughly 20 watts, a tiny fraction of what a data center draws. The approach centers on analog computing chips, which exploit the inherent nonlinear behavior of electronic circuits rather than relying solely on rigid digital logic, which delivers precision but spends a lot of energy on AI’s probabilistic workloads.

This isn’t about mimicking the brain neuron-for-neuron in a neuromorphic style. Instead, the team is selectively borrowing nature’s tricks to boost efficiency for specific classes of AI models, such as diffusion models, flow matching, and energy-based models, which are well suited to these circuit dynamics. Rao emphasizes that biology sets an aspirational bar, not a strict blueprint, freeing the team to innovate without nature’s constraints. The company isn’t planning years of stealth development either: it expects to publish research papers and findings in the coming months to foster collaboration. Longer term, the plan is to build complete systems, pairing custom silicon with tailored server infrastructure to deliver end-to-end AI acceleration.

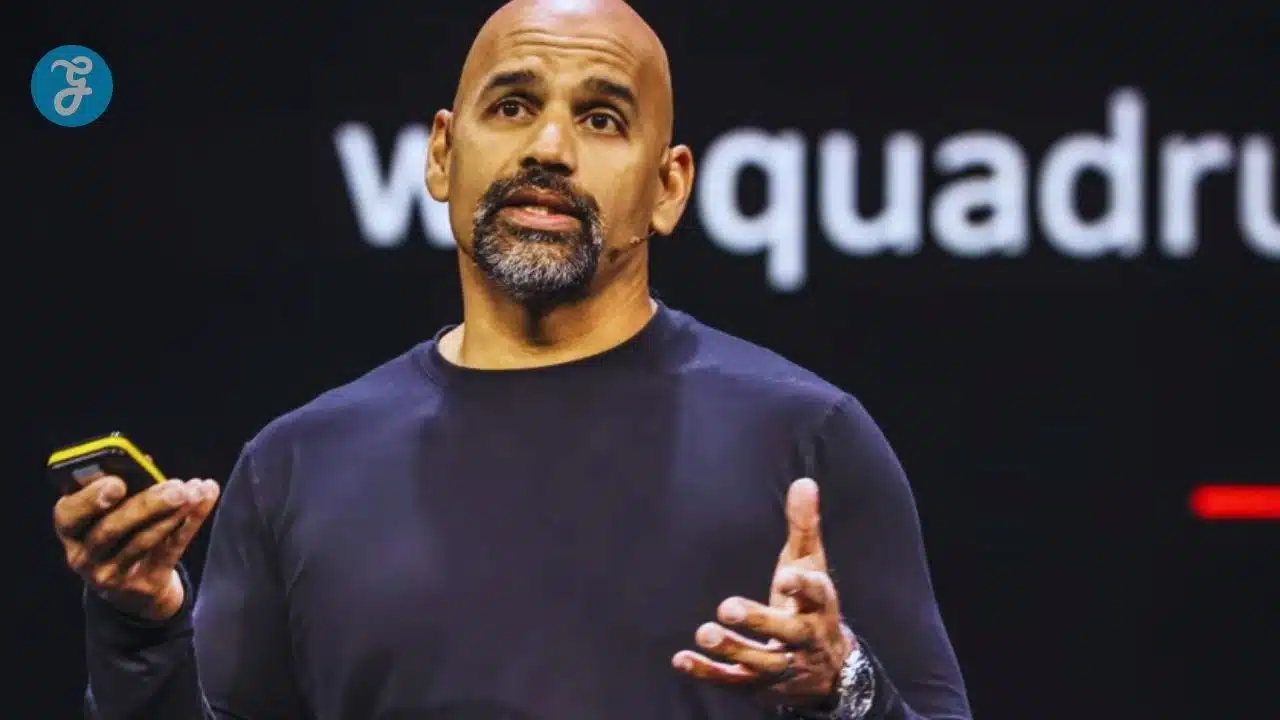

Naveen Rao: A Serial AI Infrastructure Winner

Naveen Rao isn’t starting from zero—he’s a proven force in AI hardware with a string of high-profile exits that validate his vision. Back in 2016, Intel snapped up his Nervana Systems, a machine learning platform, for around $408 million, recognizing its potential to streamline deep learning at scale. Fast-forward to 2023: Databricks acquired his MosaicML for $1.3 billion. Founded in 2021, MosaicML cracked the code on making massive neural network training more accessible and affordable, democratizing AI development for enterprises.

These successes stem from Rao’s knack for identifying bottlenecks in AI infrastructure and building practical fixes that attract giant acquirers. His time at Databricks, leading AI efforts until September 2025, gave him frontline exposure to the exploding demands of generative AI, fueling this latest pivot. Investors aren’t betting on a rookie; they’re backing a track record that screams “next big thing in AI compute.”

AI’s Escalating Energy Crunch Demands Innovation

The timing couldn’t be better, or more urgent, as AI’s power hunger threatens to strain energy grids. In 2024 alone, U.S. data centers consumed about 200 terawatt-hours of electricity, with AI-specific servers accounting for an estimated 53 to 76 terawatt-hours of that total. Looking ahead, researchers project AI energy use could balloon to 165 to 326 terawatt-hours per year by 2028; at the upper end, that is enough electricity to power roughly 22% of all U.S. households. Rao sounds the alarm: without radical efficiency gains, energy scarcity will throttle AI’s growth long before other limits kick in.
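To put those figures in proportion, here is a quick back-of-the-envelope sketch in Python. It uses only the numbers quoted above; the variable and function names are illustrative, and the growth multiples are simply the widest spread the two quoted ranges allow, not a forecast from any of the cited researchers.

```python
# Figures quoted in the article, in terawatt-hours (TWh) per year.
US_DATACENTER_TWH_2024 = 200.0
AI_TWH_2024 = (53.0, 76.0)      # low and high estimates for 2024
AI_TWH_2028 = (165.0, 326.0)    # low and high projections for 2028


def share_of_total(part_twh, total_twh):
    """Return part/total expressed as a percentage."""
    return 100.0 * part_twh / total_twh


def growth_range(start, end):
    """Return the (smallest, largest) growth multiple between two (low, high) ranges.

    Smallest: low end of `end` over high end of `start`.
    Largest: high end of `end` over low end of `start`.
    """
    return end[0] / start[1], end[1] / start[0]


ai_share_2024 = tuple(
    share_of_total(twh, US_DATACENTER_TWH_2024) for twh in AI_TWH_2024
)
growth_2024_to_2028 = growth_range(AI_TWH_2024, AI_TWH_2028)

print(f"AI share of 2024 data center use: "
      f"{ai_share_2024[0]:.1f}% to {ai_share_2024[1]:.1f}%")
print(f"Implied 2024-2028 growth: "
      f"{growth_2024_to_2028[0]:.1f}x to {growth_2024_to_2028[1]:.1f}x")
```

On these numbers, AI already accounts for roughly a quarter to more than a third of U.S. data center electricity, and the 2028 projections imply growth of somewhere between about two and six times from 2024 levels.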

Data centers are already straining under the load, with power availability lagging far behind compute demand. Unconventional AI positions itself as a direct response, aiming to slash power draw while matching or exceeding performance on key AI tasks. The pitch rests on a physical constraint rather than hype: efficiency gains from conventional digital silicon are flattening even as Nvidia-style GPU deployments keep scaling up.

Disrupting Nvidia’s AI Chip Empire

Nvidia reigns supreme with 80-90% of the AI chip market, its GPUs the gold standard for training and inference. Yet cracks are showing: the hotly anticipated Blackwell GPUs are reportedly backordered for a full 12 months, leaving hyperscalers scrambling. This supply crunch creates an opening for challengers offering novel architectures that sidestep the power costs of digital designs. Unconventional AI enters this fray with substantial investor backing, betting on analog computing to carve out a niche in efficiency-starved segments. Big VCs like a16z see echoes of past compute shifts, where alternatives eventually chipped away at incumbents by solving unmet needs.

Even pre-product, the $4.5B valuation reflects conviction that Rao’s team can deliver, potentially reshaping AI infrastructure amid unrelenting demand surges. As AI adoption accelerates across industries, solutions like this could redefine what’s possible without bankrupting the planet’s power budget.