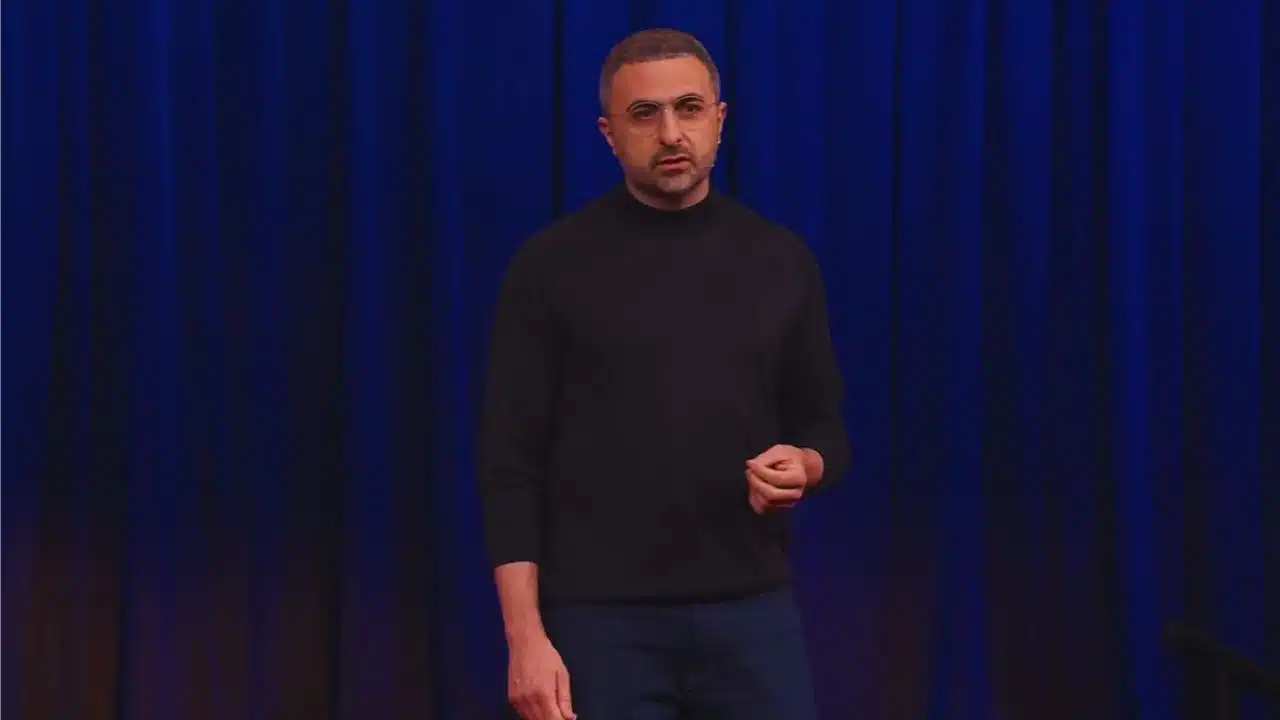

Microsoft has created a new advanced research unit inside Microsoft AI called the MAI Superintelligence Team. It will be led by Mustafa Suleyman, the CEO of Microsoft AI, who co-founded DeepMind and later co-founded and ran Inflection AI. The group's mandate is explicit: pursue highly capable AI that remains grounded, controllable, and focused on solving concrete human problems rather than chasing an open-ended, undefined "superintelligence." The announcement emphasizes boundaries and accountability, stating clearly that the aim is not superintelligence "at any cost" but systems designed from the outset to benefit people and remain under human oversight.

The move comes amid an industry-wide race to hire elite AI researchers and to scale frontier models across consumer and enterprise products. Microsoft already uses external foundation models, most prominently OpenAI's, in Bing and Copilot and runs significant AI workloads on Azure, while also strengthening in-house efforts and reducing its reliance on a single outside partner. Suleyman's background, spanning the co-founding of a research-first lab at DeepMind and product-oriented work at Inflection, signals leadership that values both scientific ambition and practical deployment, with safety controls and product discipline built in.

What the team says it will build—and why it matters now

The MAI Superintelligence Team frames its goal as “human-serving superintelligence”: powerful specialists that can be measured, audited, and directed toward tangible outcomes. Instead of seeking a single, open-domain general mind, the team prioritizes targeted domains where performance can be verified and risks can be bounded.

Initial application areas the team highlights:

- Healthcare and diagnostics: Aim for expert-level performance across a broad range of diagnostic tasks, coupled with planning and prediction in real clinical workflows. The focus is not only model accuracy but end-to-end usefulness (triage, decision support, and operational integration), while retaining strict human control.

- Education companions: Build capable assistants that elevate learning quality, personalization, and access without sacrificing safety or reliability. The intention is to create tools that guide, explain, and adapt to learners rather than replace teachers.

- Renewable energy and production efficiency: Explore narrow areas where advanced modeling, planning, and simulation can unlock improvements in clean-energy generation, storage, and grid or plant operations.

Design principles repeatedly stressed by the team:

- Controllability over open-ended autonomy: Systems should remain steerable, auditable, and interruptible. The emphasis is on tools that extend human capability rather than agents acting on their own.

- Clear problem definitions and measurable outcomes: Choose domains where success can be quantified (e.g., diagnostic accuracy, time to correct decision, energy yield), enabling rigorous evaluation instead of hype.

- Responsible scaling with constraints: Investment and compute will be applied with explicit limits. The goal is progress that stands up to safety review, reliability testing, and real-world validation, not headline-driven leaps.

Competitive context, strategic posture, and what to watch next

Across the tech sector, leading companies are assembling "superintelligence"-branded teams and making major commitments to frontier research. Microsoft's positioning is deliberately distinct: specialize first, prove value in high-impact domains, and keep humans in the loop. Strategically, this fits a multi-model, multi-partner stance while Microsoft builds stronger internal research capacity. It also responds to investor scrutiny over unchecked AI spending by committing to progress with boundaries and domain-specific milestones.

Key signals to watch:

- Clinical pilots and benchmarks: Look for evidence that diagnostic systems meet or exceed expert performance under real hospital constraints (handling data drift, fitting clinical workflows, enforcing safety guardrails) and reduce false positives and false negatives in ways that measurably improve patient outcomes.

- Education impact studies: Look for controlled trials showing learning gains, curriculum alignment, and measurable improvements in comprehension and retention, backed by robust safeguards for bias, privacy, and age-appropriate behavior.

- Energy and operations milestones: Track concrete results such as optimization gains in renewables output, reliability improvements, or cost reductions tied to model-driven planning and simulation.

- Governance artifacts: Anticipate published safety frameworks, red-team methodologies, alignment evaluations, and post-deployment monitoring protocols that demonstrate “controllability” beyond slogans.

- Tooling for oversight: Interfaces for clinicians, teachers, and operators (dashboards, explanation tools, and decision logs) will show how the team turns controllability into day-to-day practice rather than leaving it as a research promise.

Microsoft is formalizing a path toward superhuman capability in carefully chosen, high-stakes domains, starting with healthcare, under a hard constraint: usefulness, measurability, and control come before breadth. If the team delivers validated gains in diagnostics, learning outcomes, and energy efficiency without sacrificing safety, the "serve humanity" promise will read as an operating model, not a marketing line.

Information compiled from reports by CNBC and India Today.