In a groundbreaking development that could reshape how we interact with artificial intelligence, OpenAI has released GPT-5, its latest and most powerful language model to date. The company claims that GPT-5 offers PhD-level expertise across a wide range of disciplines, including software development, complex reasoning, and content generation—marking what CEO Sam Altman calls “a new era of AI.”

GPT-5 is being described as smarter, faster, and more human-like than any of its predecessors, sparking discussions across the tech industry, academia, and ethical research circles about what this leap in AI capability could mean for businesses, users, and society at large.

GPT-5: Smarter, Faster, and Designed for Deep Reasoning

At the heart of GPT-5 is what OpenAI calls a “reasoning model,” engineered to offer step-by-step logic, inference, and critical thinking—mimicking the way human experts solve problems. This represents a shift from prior models like GPT-3 and GPT-4, which were highly capable but often failed to show their thought process or relied on shallow pattern recognition.

Key improvements include:

| Capability | Claimed GPT-5 Improvement |
| --- | --- |
| Reasoning & Logic | Demonstrates step-by-step problem solving and deduction |
| Coding Proficiency | Can develop complete software solutions and troubleshoot code |
| Reduced Hallucinations | Fewer made-up or inaccurate responses, attributed to improved training |
| Emotional Intelligence | More balanced, human-like communication in emotionally sensitive situations |
| Multimodal Training | Trained on text and code, though not yet generating images or audio |
| Conversational Flow | Feels natural and responsive, with a deeper understanding of context |

According to Altman, the difference in experience is striking. He compared previous versions as follows:

- GPT-3 felt like talking to a high school student

- GPT-4 resembled a college-level assistant

- GPT-5 finally delivers the feel of talking to a subject-matter expert or PhD

OpenAI argues that this is more than marketing language: the model's new reasoning abilities allow it to justify answers, back up claims, and show its process, which would make it particularly valuable in professional, academic, and technical environments.

Applications: GPT-5 as a Developer’s AI Assistant

One of the biggest selling points of GPT-5 is its strong appeal to software developers. The model can now generate entire applications, write clean and efficient code, suggest optimizations, and even explain technical concepts as a tutor would.

This move follows a broader industry trend. Anthropic, another AI firm, recently launched Claude Code, targeting programmers with similar claims. Google and Meta are also racing to integrate AI more deeply into software development tools.

With GPT-5, OpenAI is positioning itself as a serious competitor in this high-stakes arena, hoping not only to support individual coders but also to integrate into enterprise development environments, where AI-assisted engineering is becoming the norm.

Rivalry with Elon Musk’s Grok AI Intensifies

OpenAI’s launch of GPT-5 comes just weeks after Elon Musk announced major upgrades to Grok, the AI assistant integrated into his X platform (formerly Twitter). Musk boldly claimed that Grok is “better than PhD level in everything” and referred to it as the “smartest AI on Earth.”

This claim was met with skepticism by the AI research community, given Grok’s limited test cases and closed evaluations. But the timing of both releases suggests an increasingly competitive landscape among top AI developers. While OpenAI remains the leader in commercial deployment and model refinement, challengers like Anthropic, xAI (Musk’s company), Google DeepMind, and Mistral are rapidly gaining ground.

GPT-5’s ability to show logical thought, behave ethically, and communicate like a professional may give it an edge—but the true battle lies ahead, as all models are now being judged by real-world results and user trust.

Experts Caution That Marketing Hype Can't Replace Reality

Despite the enormous claims made by OpenAI, some experts are cautious about overestimating the significance of GPT-5.

Professor Carissa Véliz, from the Institute for Ethics in AI at the University of Oxford, pointed out that GPT-5, while impressive, is still imitating human intelligence, not replicating it. She also warned that AI companies are under pressure to keep public interest high—sometimes through exaggerated marketing.

“There is a fear that we need to keep up the hype, or else the bubble might burst,” said Prof. Véliz.

She added that most of these tools are not yet profitable, and their real-world impact has yet to meet the scale promised in press releases.

Ethics and Regulation: A Growing Concern

With each new leap in AI capability, the gap between innovation and regulation grows wider. Experts warn that while models like GPT-5 can now mimic human experts, there is still little oversight of how these models are trained and used, or of who is held accountable for their output.

Gaia Marcus, Director of the Ada Lovelace Institute, called for urgent attention from lawmakers:

“As these models become more capable, the need for comprehensive regulation becomes even more urgent.”

The concern is that technological progress is now outpacing public trust and transparency.

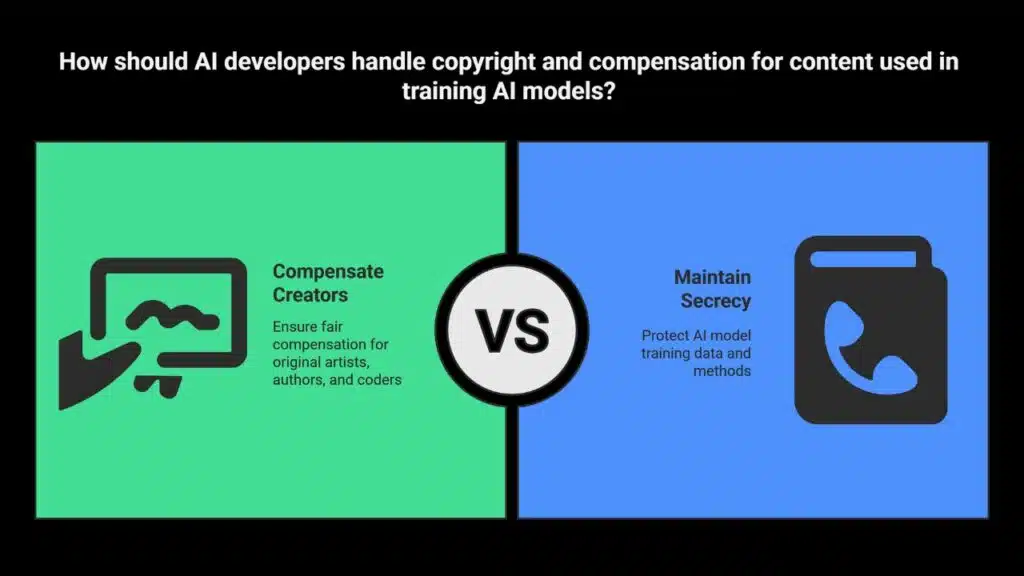

Protecting Creators: Who Owns the Data Behind GPT-5?

Another key issue raised around the GPT-5 launch concerns how the model was trained, and whether creators are compensated when their content is used.

Grant Farhall, Chief Product Officer at Getty Images, warned that as AI becomes more capable of generating photorealistic and high-quality content, it’s essential to protect the people behind the creativity.

Farhall stated:

“Authenticity matters—but it doesn’t come for free.”

OpenAI has not fully disclosed the dataset used to train GPT-5. As scrutiny over copyright, ownership, and compensation intensifies, many believe that AI developers should compensate original artists, authors, and coders whose work is scraped from the internet and fed into models like GPT-5.

Anthropic Blocks OpenAI Over API Dispute

In a surprising development, Anthropic has cut off OpenAI's access to its API, accusing OpenAI of violating its terms of service. According to Anthropic, OpenAI used Anthropic's API without proper authorization to test GPT-5's performance before launch.

An OpenAI spokesperson responded:

“Evaluating other models is an industry-standard practice for safety and benchmarking.”

They added that their own API remains open to Anthropic, implying a double standard. This API conflict reveals the fragile alliances and deep competition among AI labs—even those previously seen as collaborators in research.

GPT-5 for Everyone: Free Access and ChatGPT Updates

OpenAI confirmed that GPT-5 will be available to all ChatGPT users, including those on the free tier, starting Thursday. The move follows a broader push to democratize access to advanced AI, with the aim of building wider adoption and user familiarity.

Additionally, OpenAI is making important changes to ChatGPT’s tone and behavior, especially when addressing emotionally sensitive issues.

For example:

- The model will no longer give definitive personal advice such as “Yes, you should break up with your boyfriend.”

- Instead, it will guide the user to think critically, listing pros and cons or asking questions to help them decide.

These changes are meant to prevent dependency, especially among users who might form emotional attachments to AI.

Altman acknowledged this on OpenAI’s podcast:

“People will develop parasocial relationships with AI. Society needs to create new guardrails.”

Scarlett Johansson Controversy Still Lingers

This cautious approach follows a controversial incident in May 2024, when OpenAI launched a voice assistant that many said sounded like Scarlett Johansson, who voiced the AI character in the film Her.

Johansson accused OpenAI of using her voice without permission, calling the similarity “shocking” and “deeply upsetting.” OpenAI later pulled the voice option but denied copying her voice intentionally.

This incident raised urgent questions about voice cloning, identity rights, and consent in the age of hyperrealistic AI.

GPT-5 is Powerful, But Caution Is Key

The launch of GPT-5 is undeniably a technological milestone. With its enhanced reasoning, expert-level responses, and improved safety, it shows that AI is entering a more mature phase—one where the line between machine and expert grows thinner.

However, the road ahead is still filled with challenges:

- Ensuring ethical AI usage

- Protecting creators

- Regulating tech giants

- Preventing misinformation

- Managing user dependency

In the days and weeks ahead, the world will be watching GPT-5 closely to see if it lives up to the hype—and how society responds to its capabilities.

The information in this article is drawn from BBC and Sky News reporting.