Apple is holding early discussions with Google about using Gemini, Google's flagship AI model, to power a completely reworked version of Siri. The iPhone maker has been criticized for falling behind rivals in the generative AI race, particularly in voice assistants. Siri, which helped define voice interaction on smartphones when it launched in 2011, has struggled to keep pace with conversational AI assistants such as ChatGPT and Gemini.

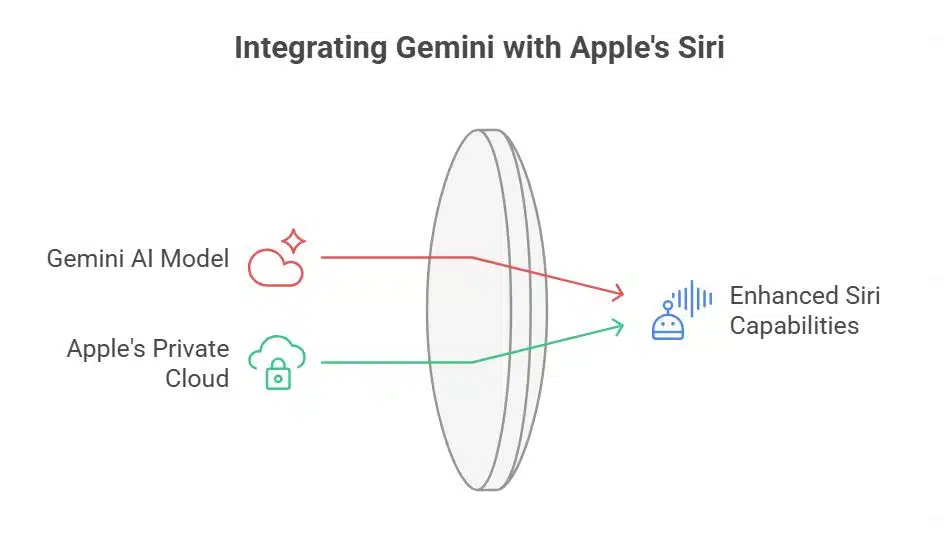

The plan under consideration would see Google train a custom Gemini model that could run on Apple’s private servers. This would represent a significant shift in Apple’s strategy, as the company has historically insisted on developing and controlling most of its AI systems internally.

Weighing Partners: Google, Anthropic, and OpenAI

Apple has been actively exploring multiple options for enhancing Siri. Earlier this year, it approached both Anthropic, the maker of Claude, and OpenAI, the developer of ChatGPT, as potential partners. The company evaluated whether these models could serve as the foundation of a smarter, more capable assistant.

Although Anthropic was once considered the frontrunner, the financial terms of a deal raised concerns within Apple’s leadership. That prompted executives to expand the search and bring Google into the conversation. By mid-summer, Apple had narrowed the possibilities to three main approaches: continue developing Siri with its own Apple Foundation Models, outsource to a partner such as Google, or pursue a hybrid approach that combines both.

Internal “Bake-Off”: Linwood vs. Glenwood

Apple has been testing two parallel versions of the next Siri. One, code-named Linwood, runs on models built by Apple's own Foundation Models team. This group already created the AI engines behind Apple Intelligence features such as text summarization, Writing Tools, and custom emoji. The other, code-named Glenwood, runs on third-party AI technology such as Gemini.

This internal competition is designed to measure which system delivers the best accuracy, reliability, and user experience. Apple executives, including Craig Federighi and Mike Rockwell, are closely evaluating the results. The final decision on which direction to pursue is expected within weeks.

Siri Upgrade Delay and Leadership Changes

Apple’s struggle to modernize Siri has been highlighted by repeated delays. A major overhaul was initially scheduled for release in spring 2025. The update was supposed to allow Siri to carry out complex commands using personal data and enable users to control their devices almost entirely by voice. However, engineering setbacks forced Apple to postpone the launch by a full year.

The delays led to leadership changes inside the company. John Giannandrea, Apple's AI chief, was removed from overseeing Siri, and responsibility shifted to senior software executive Craig Federighi and Vision Pro creator Mike Rockwell. The reshuffle underscored how critical the project has become to Apple's broader AI strategy.

Financial Market Reaction to the Talks

When Bloomberg reported on the exploratory conversations between Apple and Google, both companies' shares jumped to session highs. Alphabet stock rose close to 3%, while Apple shares gained about 1.5%. Investors read the talks as a sign that Apple is moving aggressively to close its AI gap, and as a potential win for Google, which could secure another major deployment for its AI.

Internal Turmoil at Apple’s AI Teams

Behind the scenes, Apple’s internal AI unit has faced instability. In July, Ruoming Pang, the chief architect of Apple’s Foundation Models group, departed for Meta Platforms. He was reportedly offered a $200 million compensation package and a senior role at Meta’s new Superintelligence Labs. His departure was followed by several other team members leaving or interviewing with competitors.

The uncertainty over whether Apple will adopt external models has fueled dissatisfaction inside the company. Some engineers worry that years of internal research could be sidelined in favor of outsourcing. Others see the changes as inevitable, given the scale of resources companies like Google and OpenAI are pouring into AI research.

How Gemini Could Change Siri

Google’s Gemini is a multimodal AI model capable of handling text, images, video, audio, and code. It is widely considered one of the most advanced systems available, ranking highly in benchmarks for reasoning, problem solving, and programming. By adopting Gemini, Apple could give Siri far more flexibility—allowing it to summarize documents, generate creative responses, handle complex follow-up questions, and manage tasks across apps.

The model could also run within Apple's Private Cloud Compute system, where sensitive data is processed on servers built with Apple silicon. In this setup, the third-party model would not run directly on user devices. Instead, it would operate on servers that Apple controls, in an environment consistent with Apple's privacy standards.

Balancing Privacy and Outsourcing

Apple has long emphasized privacy as a core value, often keeping as much AI processing on the device as possible. Outsourcing Siri's intelligence to an external provider like Google would represent a significant departure from that philosophy. Even if processing stayed within Apple's own cloud servers, relying on an outside model would introduce new risks around intellectual property, licensing, and long-term independence.

Some Apple executives argue that without a partnership, the company risks falling irreversibly behind competitors. Others remain cautious, emphasizing that reliance on outside models could reduce Apple’s control over the user experience and expose it to regulatory or antitrust challenges.

Wider Industry Context

If Apple proceeds with a Gemini deal, it would add a second major partnership between the two companies. Google already pays Apple billions of dollars a year to remain the default search engine on iPhones and Macs. That arrangement is under antitrust scrutiny in the United States, and a new AI deal could invite further regulatory attention.

Meanwhile, competitors are moving fast. Google has embedded Gemini deeply into its Pixel phones and Android ecosystem. Samsung already relies on Google’s AI for many of its Galaxy features. Microsoft has partnered with OpenAI to integrate GPT models into Windows, Office, and its Azure cloud. Apple, by contrast, has yet to deliver generative AI features at the same scale.

Apple’s Broader AI Efforts

Beyond Siri, Apple has been experimenting with other ways to bring AI into its ecosystem. It offers optional ChatGPT integration for generating text and images, which it expanded in iOS 26. The company also canceled a project to build its own AI-based coding assistant, choosing instead to rely on tools like ChatGPT and Claude.

At the same time, Apple continues to research its own next-generation models. Engineers recently began testing a trillion-parameter model, roughly 6.7 times the size of the 150-billion-parameter models currently running in Apple's AI data centers. However, this model remains in the research phase and is not expected to reach consumers anytime soon.