In 2026, AI stops getting credit for “potential” and starts getting graded on receipts. With compliance deadlines nearing, lawsuits maturing, and infrastructure costs squeezing margins, the AI boom is entering a “show me” phase where revenue must be defensible, auditable, and sustainable—not just impressive.

Beyond The Hype: AI Accountability 2026 And The “Show Me” Revenue Year

AI’s first act was wonder. Its second was adoption. The third, now unfolding, is accountability.

That word can sound moralistic, but in 2026 it is mostly financial. Accountability is the mechanism that turns AI from a breathtaking demo into a repeatable business outcome that a CFO can sign, a regulator can inspect, and a court can evaluate. The theme of this year is not “Can it do the task?” It is “Can you prove it did the task safely, legally, and cost-effectively, and can you keep doing that at scale?”

Two forces make 2026 feel like a turning point.

One is economics. The AI infrastructure build-out is enormous, and investors are increasingly asking whether returns can keep pace if power, chips, and construction stay expensive. Some market watchers now frame “AI-driven inflation” as an underpriced risk—one that could complicate rate cuts, keep cost of capital higher, and compress tech multiples just as companies try to lock in long-term AI revenue.

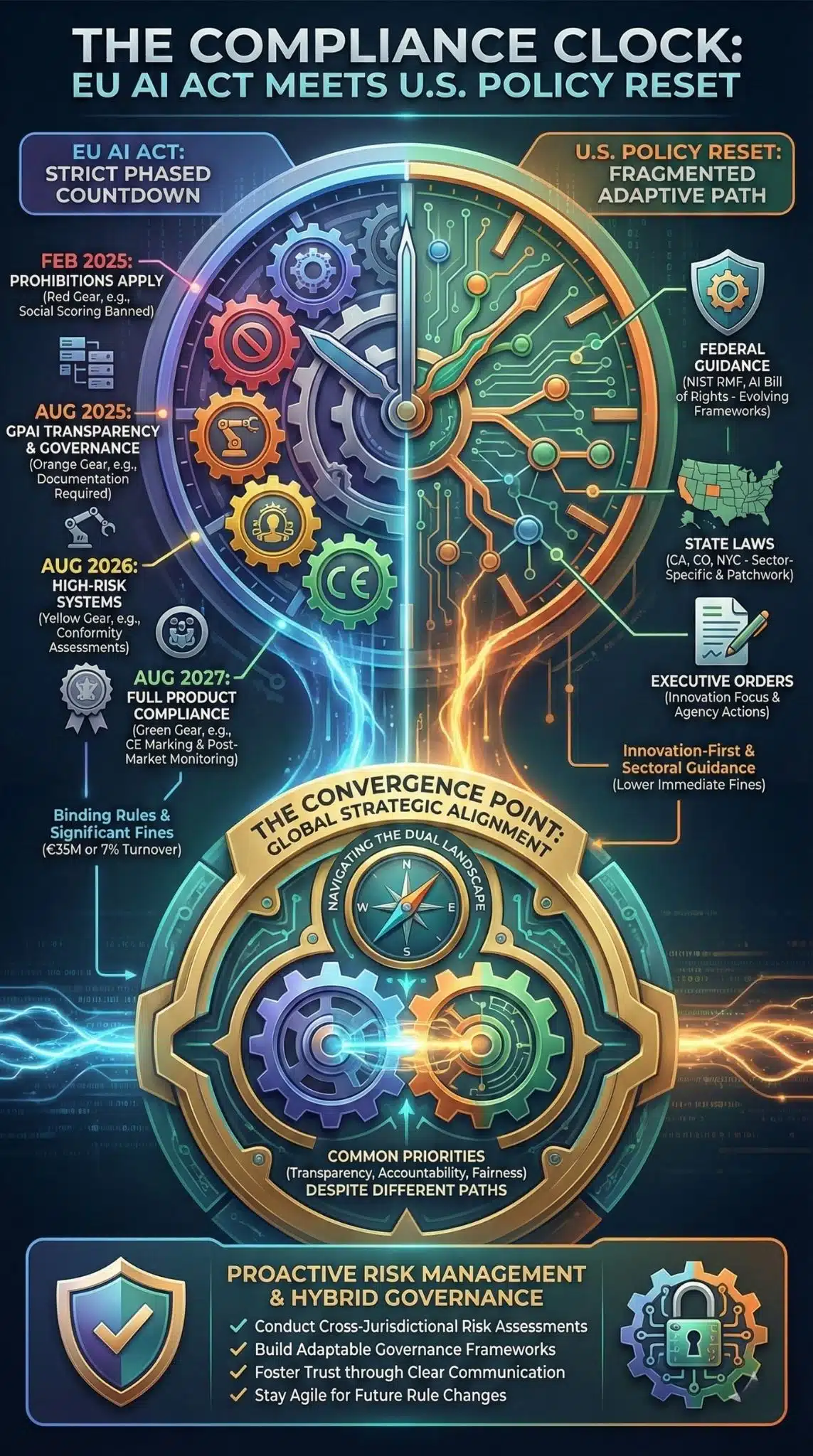

The other is governance. The European Union’s AI Act is moving from text to timetable, with major provisions converging through 2025–2026 and broad application expected in August 2026. In parallel, U.S. federal posture has shifted toward fewer central guardrails and more emphasis on deployment speed and a single federal approach rather than a patchwork of state rules.

Put differently: 2026 is when AI’s revenue story collides with its accountability story. For companies building AI, buying AI, regulating AI, or litigating AI, that collision determines who grows, who gets fined, and who gets left behind.

Key Statistics That Define The “Show Me” Year

- Global AI spending is forecast to exceed $2.0 trillion in 2026, driven heavily by AI embedded in consumer devices and sustained hyperscaler infrastructure investment (Gartner forecast, reported widely in 2025 coverage).

- Inference is getting cheaper fast: the Stanford AI Index reports the cost to query an AI model at GPT-3.5 level fell more than 280× between November 2022 and October 2024, while hardware costs dropped ~30% annually and energy efficiency improved ~40% annually.

- AI incidents are rising: the AI Index also reports AI-related incidents increased 56.4% from 2023 to 2024, tightening the link between safety claims and real-world harm.

- Data center investment needs are measured in trillions: multiple industry estimates suggest multi-trillion-dollar capex is required by 2030 to meet AI compute demand.

- Memory and other AI components are in tight supply: widely reported pricing signals (e.g., sharp swings in DDR5 and HBM prices) underline how the AI boom propagates into the broader semiconductor supply chain.

These numbers point to the same conclusion: the market is scaling, but the cost, risk, and scrutiny scale too.
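To put the inference figure on a yearly footing, the quoted 280× decline can be converted into an implied per-year factor. This is a minimal sketch: treating the November 2022 to October 2024 window as 23 months is an assumption, and the function name `annualized_factor` is illustrative.

```python
def annualized_factor(total_factor: float, months: float) -> float:
    """Convert a total decline factor over `months` into a per-year factor."""
    return total_factor ** (12.0 / months)


# Figure quoted above: a decline of more than 280x between
# November 2022 and October 2024.
TOTAL_DECLINE = 280.0
# Assumption: the window is counted as 23 months.
WINDOW_MONTHS = 23

per_year = annualized_factor(TOTAL_DECLINE, WINDOW_MONTHS)
print(f"Implied decline per year: about {per_year:.1f}x")
```

Under that assumption the implied decline is on the order of 19× per year, far faster than the roughly 30% annual drop in hardware costs cited alongside it, which suggests much of the gain comes from sources other than hardware price alone.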

How We Got Here: From “Move Fast” To “Prove It”

The past three years rewarded speed. First came the breakthrough moment, then a scramble to integrate copilots, chatbots, and generation tools into every workflow. The early winners were those who shipped.

But the “ship first” era created three debts that come due in 2026.

The cost debt: Training and serving frontier models demanded capital expenditures on GPUs, networking, storage, and power at a pace most industries are not used to. Falling inference costs help, but those savings are often eaten by usage growth and more ambitious models. As AI becomes embedded everywhere, “cheaper per request” can still mean “more expensive overall” if total volume explodes.

The legal debt: Companies trained on internet-scale data while courts and legislatures were still deciding what permissions were required. By 2025, dozens of copyright disputes were active across jurisdictions. By 2026, more of these disputes are reaching meaningful procedural stages, settlements, or early rulings—enough to shape what “lawful training” looks like in practice, and how licensing markets will form.

The trust debt: AI moved into customer support, hiring, lending, content, and education before organizations had reliable ways to measure hallucination risk, bias, or provenance. Incident counts are climbing; the political and consumer appetite for “just trust us” is shrinking.

Accountability is how the industry services those debts without killing growth.

The New Unit Economics: When Inference Meets CFO Scrutiny

If 2024 was the year of pilots and 2025 was the year of scaling, 2026 is the year those deployments get priced properly.

On the supply side, providers are racing to monetize usage while controlling inference costs. The AI Index’s 280× inference cost decline is real, but it does not guarantee margin relief. Lower costs often expand the addressable market, pushing usage up until power, cooling, and chip availability become the constraint. That’s why “cost per token” is less important than “cost per outcome.”
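The gap between “cost per token” and “cost per outcome” can be made concrete with a little arithmetic. The sketch below is illustrative only: every number is hypothetical, and it assumes each attempt succeeds independently and that every attempt, successful or not, incurs both compute and human-review cost.

```python
def total_spend(price_per_request: float, requests: int) -> float:
    """Total serving bill: unit price times volume."""
    return price_per_request * requests


def cost_per_outcome(compute_cost: float, review_cost: float,
                     success_rate: float) -> float:
    """Expected cost of one accepted result.

    Assumes each attempt succeeds independently with probability
    `success_rate`, so 1 / success_rate attempts are needed on average,
    and every attempt incurs both compute and review cost.
    """
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    return (compute_cost + review_cost) / success_rate


# All figures below are hypothetical, chosen only to show the arithmetic.
before = total_spend(price_per_request=0.010, requests=1_000_000)
after = total_spend(price_per_request=0.002, requests=20_000_000)
print(f"Bill before: ${before:,.0f}; after a 5x price cut and 20x usage: ${after:,.0f}")

cheap = cost_per_outcome(compute_cost=0.002, review_cost=0.05, success_rate=0.60)
strong = cost_per_outcome(compute_cost=0.010, review_cost=0.05, success_rate=0.90)
print(f"Cheaper model: ${cheap:.4f} per outcome; pricier model: ${strong:.4f} per outcome")
```

In this made-up scenario the bill quadruples despite the price cut, and the model that costs five times more per request is still cheaper per accepted result because fewer attempts need review and retry.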

On the demand side, buyers increasingly separate “AI spend” into three buckets:

- Substitution: using AI to replace existing spend (support tickets, basic content, internal search).

- Expansion: using AI to do more work than before (personalization at scale, synthetic data, new product features).

- Speculation: investing in capabilities whose payoff is uncertain (agents, autonomous workflows).

Only the first two survive the 2026 budget cycle reliably when CFOs ask for proof. Accountability is the bridge between pilots and procurement-grade deployment: it’s the audits, logs, evaluation reports, and governance controls that make spend defensible.

Comparative Snapshot Of Global AI Spending (Forecast)

| Market Segment (Worldwide) | 2024 (USD millions) | 2025 (USD millions) | 2026 (USD millions) |
| --- | ---: | ---: | ---: |
| Total AI Spending | 987,904 | 1,478,634 | 2,022,642 |
| GenAI Smartphones | 244,735 | 298,189 | 393,297 |
| AI-Optimized Servers | 140,107 | 267,534 | 329,528 |
| AI Processing Semiconductors | 138,813 | 209,192 | 267,934 |

These forecasts are a reminder that 2026 is a “revenue year” because the big lines aren’t abstract research—they’re devices, servers, and semiconductors. Accountability becomes about supply chains, depreciation, utilization, and performance per watt, not only model cleverness.
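The year-over-year growth implied by the forecast table can be checked directly. The sketch below uses only the figures from the table above (USD millions); the helper name `yoy_growth` is illustrative.

```python
# Forecast figures from the table above, in USD millions: (2024, 2025, 2026).
SPENDING = {
    "Total AI Spending": (987_904, 1_478_634, 2_022_642),
    "GenAI Smartphones": (244_735, 298_189, 393_297),
    "AI-Optimized Servers": (140_107, 267_534, 329_528),
    "AI Processing Semiconductors": (138_813, 209_192, 267_934),
}


def yoy_growth(previous: float, current: float) -> float:
    """Year-over-year growth as a percentage."""
    return (current - previous) / previous * 100.0


for segment, (y2024, y2025, y2026) in SPENDING.items():
    g2025 = yoy_growth(y2024, y2025)
    g2026 = yoy_growth(y2025, y2026)
    print(f"{segment:<30} 2025: {g2025:5.1f}%   2026: {g2026:5.1f}%")
```

On these figures, total spending growth slows from about 50% in 2025 to about 37% in 2026, and AI-optimized servers slow from about 91% to about 23%. The market keeps expanding, but the forecast itself shows the growth rate decelerating.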

Infrastructure Becomes The Macro Risk: Power, Chips, And Inflation

The most underappreciated 2026 story is that AI is no longer only a tech cycle. It is a physical economy cycle.

Energy agencies and market analysts have been warning that data centers and AI are reshaping electricity demand. The bottleneck in many regions is grid connection and permitting, not just GPU procurement. Power is not merely an operating expense; it is a hard constraint on growth.

Meanwhile, component tightness shows up in pricing and competitive dynamics. Memory matters more than most consumers realize: AI performance depends on moving and storing huge volumes of data efficiently, which elevates the importance of advanced memory supply. When memory prices spike or supply is constrained, the economics of AI serving change.

This is where accountability becomes macroeconomic. If AI infrastructure drives persistent cost pressure in energy and chips, central banks and investors respond. That changes the cost of capital for the whole AI ecosystem, making “show me the revenue” inseparable from “show me the efficiency.”

The Compliance Clock: The EU AI Act Meets A U.S. Policy Reset

Regulation is often described as a brake, but in 2026 it is also a market design tool. It determines which features become mandatory and which liabilities shift from users to vendors.

Europe: From Principles To Enforcement

Europe is building a compliance regime that shifts AI procurement toward auditable products. With the AI Act moving into application in 2026, compliance becomes schedulable work with real procurement consequences. Buyers will increasingly demand documentation, testing results, and audit trails because they need those artifacts to defend their own deployments.

For revenue, the implication is simple: compliance-readiness becomes a sales feature. Products that can’t produce documentation and risk evidence will be filtered out—not because they don’t work, but because they can’t be defended.

The United States: Fewer Central Guardrails, More Federal Preemption

In the U.S., the governance narrative has shifted. Federal direction has emphasized removing barriers to AI adoption and pushing toward a single national approach that reduces state-by-state fragmentation. Whether one views that as pro-innovation or pro-incumbent, it has a clear market effect: faster deployment pressure paired with a messy liability landscape, especially where consumer harms, discrimination claims, and copyright conflicts are concerned.

The divergence matters for global companies. Europe is exporting documentation requirements through supply chains; the U.S. is signaling faster deployment with fewer centralized rules. Multinationals may default to the strictest regime for operational simplicity, effectively turning EU-style accountability into a de facto global standard for enterprise procurement.

Timeline Of Accountability Pressure Points

| Milestone | What It Changes | Why It Matters For Revenue |
| --- | --- | --- |
| 2025: EU guidance and definitions expand | Clarifies what is off limits and what counts as AI | Reduces ambiguity for go-to-market choices |
| 2025–2026: Enterprise procurement hardens | RFPs begin requiring audits, logs, evaluation reports | “Compliance-ready” vendors win cycles faster |
| Aug 2026: Broad EU AI Act application | Compliance becomes enforceable at scale | Vendor shortlists shift toward auditable systems |

Liability Moves From Theory To Balance Sheets

If you want to understand why 2026 feels different, follow the lawyers.

Copyright and data rights disputes are no longer edge cases. They are defining what kinds of training data are safe to use and what licensing markets will emerge. In some cases, large rights holders are choosing settlement and licensing over long litigation, signaling a possible “licensing economy” around training data. In other cases, courts are issuing rulings that vary by jurisdiction, underscoring that “legal certainty” may remain fragmented for years.

For revenue, the key point is simple: uncertain liability raises the cost of goods sold. It can do so directly through licensing and indirectly by forcing vendors to build data provenance systems, filters, or model behavior constraints that reduce performance or increase compute.

That tradeoff becomes central in a revenue year. When margins are thin, a model that is slightly more capable but legally riskier becomes harder to sell to enterprises that need predictable outcomes.

Trust Becomes A Product Feature: Testing, Provenance, And Content Credentials

The accountability phase is not only about laws. It is about trust systems.

In practice, organizations are building a new stack of proof:

- Proof the model was evaluated (safety, bias, hallucination controls).

- Proof the output is attributable (logs, traceability, citations/grounding workflows where appropriate).

- Proof the media is authentic (provenance and credentialing).

A concrete example is the growing focus on content provenance standards, often discussed under the umbrella of “Content Credentials.” The principle is straightforward: if synthetic media is everywhere, you need robust ways to distinguish authentic from generated, and to track editing histories. The limitation is also clear: metadata can be stripped, so provenance must be durable and layered with policy and platform support.
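The idea of a tamper-evident editing history can be sketched with a simple hash chain. This is a deliberately simplified illustration, not the Content Credentials format or any standard's API: the function names and record fields are hypothetical, and a production system would also need cryptographic signatures, since an unsigned chain can be recomputed wholesale by whoever controls the file.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """Hash a record body using a canonical JSON encoding."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_entry(history: list, action: str, content: bytes) -> list:
    """Return a new history with one more entry linked to the previous one."""
    body = {
        "action": action,
        "content_hash": hashlib.sha256(content).hexdigest(),
        "prev_hash": history[-1]["entry_hash"] if history else "",
    }
    return history + [{**body, "entry_hash": _digest(body)}]


def verify(history: list, final_content: bytes) -> bool:
    """Check every link in the chain and that it ends at the given content."""
    prev = ""
    for entry in history:
        body = {k: entry[k] for k in ("action", "content_hash", "prev_hash")}
        if entry["prev_hash"] != prev or entry["entry_hash"] != _digest(body):
            return False
        prev = entry["entry_hash"]
    expected = hashlib.sha256(final_content).hexdigest()
    return bool(history) and history[-1]["content_hash"] == expected


history = append_entry([], "captured", b"original pixels")
history = append_entry(history, "cropped", b"cropped pixels")
print(verify(history, b"cropped pixels"))   # intact history

tampered = [dict(history[0], action="generated")] + history[1:]
print(verify(tampered, b"cropped pixels"))  # rewritten first entry

print(verify([], b"cropped pixels"))        # metadata stripped entirely
```

The last call mirrors the limitation noted above: once the history is stripped there is nothing left to verify, which is why provenance has to be layered with policy and platform support rather than relying on metadata alone.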

The business consequence is direct. Media, advertising, and platform companies cannot industrialize generative content without credible provenance. Provenance becomes the digital equivalent of a supply chain label—and in 2026, labels sell.

Governed Deployment Replaces “Shadow AI”: Standards Move Into Procurement

Accountability becomes real when it becomes purchasable.

That is why frameworks and standards are gaining traction as procurement language, not just governance talk.

- NIST’s AI Risk Management Framework has become a common reference for organizations building responsible AI programs, complemented by a Generative AI profile designed to help address genAI-specific risks.

- ISO/IEC 42001 reframes AI governance as a management system, giving organizations a structure for roles, controls, documentation, and continuous improvement.

- Large organizations—especially governments and regulated industries—have moved toward formal oversight expectations for AI use, which typically translate into documentation demands that vendors must satisfy.

The Accountability Artifact Stack

| Accountability Need | What Buyers Ask For | Typical Anchor |
| --- | --- | --- |
| Risk governance | Policies, roles, escalation paths | AI management system approaches |
| Model risk management | Testing, monitoring, genAI risk controls | NIST-style RMF programs |
| Deployment defensibility | Logs, auditing, incident response | Internal controls + monitoring |
| Media authenticity | Provenance metadata, durable credentials | Content credentialing standards |

The interpretation is simple: standards are becoming revenue multipliers. Vendors who can hand buyers a compliance-ready packet shorten sales cycles. Vendors who cannot are pushed into low-trust, low-margin segments.
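One way to picture a “compliance-ready packet” is as a checklist a buyer could run against a vendor's submission. The sketch below encodes the artifact stack from the table above; the structure, the function name `missing_artifacts`, and the sample vendor packet are all hypothetical, not drawn from any standard or procurement template.

```python
# Artifacts buyers ask for, taken from the table above.
REQUIRED_ARTIFACTS = {
    "Risk governance": {"policies", "roles", "escalation paths"},
    "Model risk management": {"testing", "monitoring", "genAI risk controls"},
    "Deployment defensibility": {"logs", "auditing", "incident response"},
    "Media authenticity": {"provenance metadata", "durable credentials"},
}


def missing_artifacts(provided: dict) -> dict:
    """Return, per accountability need, the artifacts a packet lacks."""
    gaps = {}
    for need, required in REQUIRED_ARTIFACTS.items():
        missing = required - set(provided.get(need, ()))
        if missing:
            gaps[need] = sorted(missing)
    return gaps


# Hypothetical vendor packet, for illustration only.
vendor_packet = {
    "Risk governance": ["policies", "roles", "escalation paths"],
    "Model risk management": ["testing", "monitoring"],
    "Deployment defensibility": ["logs", "auditing", "incident response"],
}

for need, gaps in missing_artifacts(vendor_packet).items():
    print(f"{need}: missing {', '.join(gaps)}")
```

A real review would weigh the quality of each artifact rather than its mere presence, but even a presence check like this shows how quickly a gap becomes visible to a procurement team.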

Expert Perspectives: Two Theories Of The Accountability Era

The accountability phase has two credible narratives.

Narrative A: Accountability unlocks mass adoption.

Under this view, regulation and standards reduce uncertainty and create predictable requirements. Enterprises invest confidently when they can measure and defend AI systems. Accountability becomes the bridge between innovation and mainstream infrastructure—similar to how security and compliance standards accelerated cloud adoption in regulated sectors.

Narrative B: Accountability slows innovation and entrenches incumbents.

Here, compliance becomes a fixed cost that favors hyperscalers and the biggest model labs. Smaller firms struggle to fund audits, documentation, and legal defenses. Meanwhile, policy directions that prioritize fast deployment and reduced fragmentation reflect a belief that excessive regulation can shift advantage to competitors.

Both narratives can be true at different layers. Accountability can expand the total market while concentrating the most profitable tiers among firms that can bear the cost of proof.

Winners And Losers In The 2026 Accountability Phase

| Likely Winners | Why | Likely Losers | Why |
| --- | --- | --- | --- |
| Auditable AI vendors | Faster enterprise procurement and renewals | “Black box” tool providers | Harder to clear compliance reviews |
| Semiconductor and AI server ecosystem | Spending surge through 2026 forecasts | Low margin hardware segments | Component inflation pressures squeeze profits |
| Governance and security platforms | Demand for monitoring and controls rises | Teams relying on “shadow AI” | Governance clampdowns force restrictions |
| Provenance tooling | Trust requirements for media workflows grow | Content firms without rights strategy | Legal exposure and licensing costs increase |

This is less about virtue and more about market structure: accountability rewards the ability to document, test, and defend.

What Comes Next: The 2026 Milestones To Watch

A forward-looking view of 2026 has three checkpoints.

Watch procurement language. When RFPs start requiring evaluation reports, monitoring, logging, and governance alignment, accountability becomes an operating requirement, not a PR claim.

Watch Europe’s enforcement reality. As 2026 progresses, enforcement clarity and implementation guidance will shape vendor shortlists across industries that sell into Europe or to European multinationals.

Watch infrastructure constraints. If power and chip tightness persist, CFOs will force a clearer separation between AI that replaces cost and AI that adds cost. That will favor workflows where ROI can be measured quickly and reliably.

Prediction, Clearly Labeled

Market indicators suggest that by late 2026, the most valuable AI products will be those that combine capability with proof: measurable outcomes, traceable behavior, and defensible compliance. The “best model” will matter less than the “most adoptable model,” and adoptability will be a function of accountability.

That does not mean innovation slows. It means innovation becomes legible to outsiders—regulators, auditors, and judges.

Final Thoughts

The “Show Me” revenue year is not a backlash against AI. It is the moment the world tries to price AI correctly.

In the first phase, AI was sold on possibility. In the accountability phase, AI is sold on evidence: evidence of ROI, evidence of safety controls, evidence of lawful data practices, evidence of authenticity. Companies that treat accountability as a product—not paperwork—will convert 2026’s spending wave into durable revenue. Those that treat it as an afterthought will learn that trust, once lost, is more expensive than GPUs.