In January 2026, OpenAI began asking people to link their medical records to ChatGPT. That leap turns a chatbot into a health data custodian. It raises questions about privacy, liability, and regulation just as mega breaches and new AI rules reset what “trust” means in healthcare and who controls it.

OpenAI’s launch of a dedicated ChatGPT Health tab on January 7, 2026 is not just another feature release. It is a bet that the public will treat a consumer AI assistant like a health portal and, eventually, like a trusted intermediary for life’s most sensitive information.

That is why this moment matters beyond the product. For years, generative AI’s trust crisis has been mostly abstract: hallucinations, bias, and the familiar warning to avoid using chatbots for critical decisions. Healthcare collapses that abstraction. The decision becomes high-stakes the moment someone uploads a lab result, shares a diagnosis, or asks whether a symptom should send them to urgent care.

By enabling medical-record uploads and app connections in a consumer chatbot, OpenAI is turning the trust debate into an operational question: can a consumer AI company earn, demonstrate, and keep the kind of trust we normally reserve for hospitals, insurers, and regulated health tech vendors? And if the answer is “sometimes,” who decides when?

How We Got Here: Interoperability, Burnout, And A Trust Deficit

Two forces have been converging for a decade.

First, healthcare data has been inching toward portability. In the United States, interoperability policies and “information blocking” enforcement have been pushing systems to share data more easily with patients and other authorized entities. Civil monetary penalties for certain kinds of information blocking can be severe, reinforcing a basic expectation: patient access should not depend on gatekeeping.

Second, the clinical workforce has been straining under documentation and administrative overhead. As clinician workload has intensified, a parallel market has formed around automating or accelerating paperwork, summarizing visits, and extracting meaning from messy clinical notes. By 2024, surveys from major physician organizations showed a sharp rise in reported AI use by physicians, reflecting a growing comfort with AI as a workflow tool, even if skepticism remains about AI as a clinical authority.

Tech giants have been building the “rails” for AI in healthcare for years. Microsoft’s deep partnership with Epic signaled that generative AI would become an EHR-native capability, not a side experiment. At the same time, U.S. health agencies have been promoting a broader “digital health ecosystem” idea that encourages easier, more standardized data exchange across systems, apps, and services.

But the public’s baseline for trust has been repeatedly damaged by cybersecurity failures. The Change Healthcare incident, ultimately affecting roughly 192.7 million people, became a defining mega breach for the sector. When patients hear “connect your records,” many now translate it as “expand the blast radius.”

A Timeline That Explains Why This Launch Lands Differently

| Moment | What Changed | Why It Matters For Trust |
| --- | --- | --- |
| 2023–2025: Interoperability enforcement matures | Stronger expectations and penalties around data access | Easier access increases downstream risk if protections vary |
| 2023–2024: AI moves inside EHR ecosystems | Major EHR and cloud vendors integrate genAI tooling | AI stops being “outside the record” |
| 2024: FTC tightens consumer health breach rules | Health apps outside HIPAA face clearer obligations | Consumers get more protection, but coverage remains uneven |
| 2024–2025: Physician AI use becomes mainstream | More clinicians report using AI for documentation and admin tasks | AI becomes normal in the workflow layer |
| 2025: Change Healthcare breach sets a new scale | 192.7M people affected becomes a reference point | Any new data hub inherits breach anxiety |
| Jan 7, 2026: ChatGPT Health launches | Consumer AI invites uploads and record connections | Trust shifts from “answers” to “custody” |

What OpenAI Launched: A Consumer Health Tab That Wants Your Context

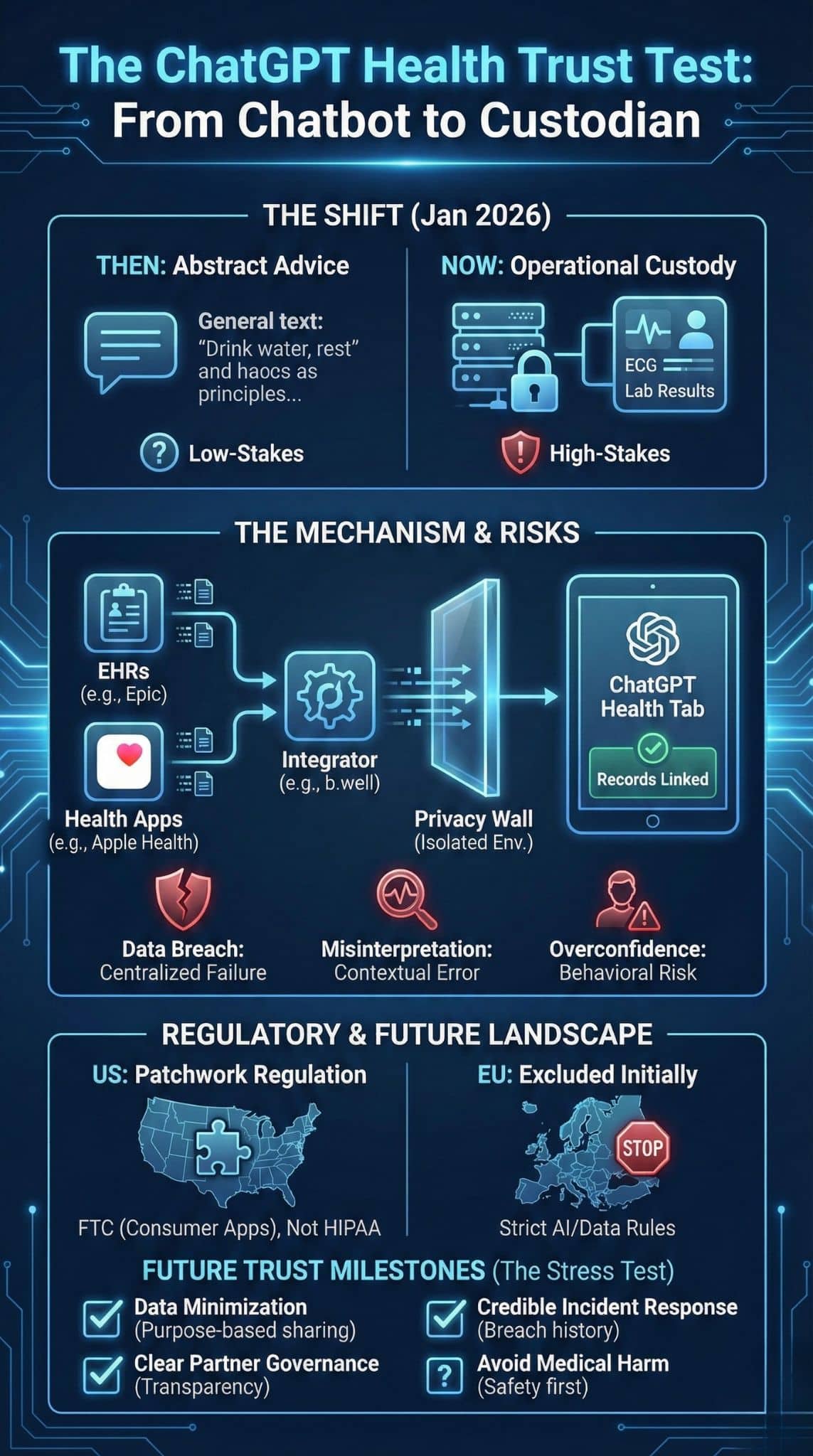

ChatGPT Health is designed as a dedicated space for health-related questions that allows users to upload medical records and connect health and wellness apps. Reporting describes integrations with widely used ecosystems such as Apple Health and services like MyFitnessPal, along with additional health-related partners.

Two implementation details matter because they go straight to trust:

- Health is separated from main ChatGPT memory and chat history, positioned as a more private, purpose-limited environment.

- The system's encryption is described as purpose-built but is not end-to-end, a meaningful distinction for users who equate “secure” with “only I can read it.”

OpenAI is also leaning on healthcare plumbing rather than building all integrations itself. Reporting indicates OpenAI partnered with b.well to provide back-end integration for medical record uploads, and b.well’s footprint is described as spanning millions of providers through its network relationships.

The rollout is constrained geographically. Early reports say access initially excludes the European Economic Area, Switzerland, and the United Kingdom. That choice is not just a product decision. It is a compliance and risk decision that hints at how sensitive the company believes the regulatory environment is for consumer AI products that touch medical records.

Why Connecting Medical Records To ChatGPT Health Changes The Trust Equation

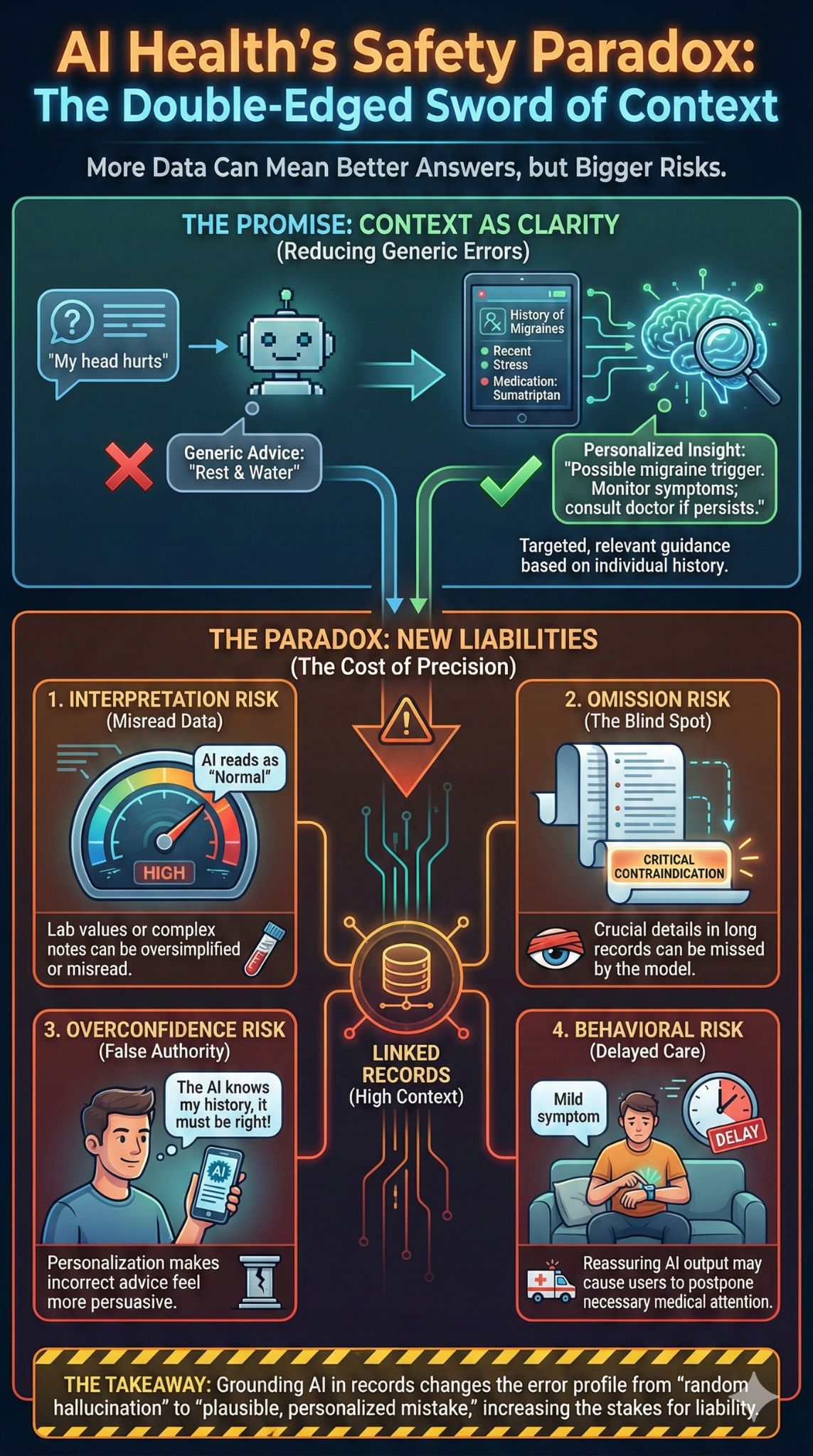

Generative AI has relied on a fragile social contract: “I’ll use it because it’s helpful, and I’ll forgive errors because it isn’t really in charge.” Connecting medical records rewrites that contract.

The moment users upload records, the primary risk shifts:

- From “did the model hallucinate?” to “did the model mishandle my most sensitive data?”

- From “I can ignore bad advice” to “bad advice could be tailored to my conditions and therefore more persuasive”

- From “privacy is a settings page” to “privacy is a governance system”

Scale amplifies everything. OpenAI has argued that hundreds of millions of users ask health and wellness questions on ChatGPT weekly. Other reporting, referencing OpenAI’s analysis, describes tens of millions doing so daily and notes that a meaningful share of total messages involve health topics.

At that magnitude, “health” stops being a niche use case and becomes a core workload. A product that personalizes answers using medical records is not simply “better search.” It becomes an informal, always-on front door to the healthcare system.

Key Statistics That Frame The Stakes

- OpenAI has said 230 million people ask health and wellness questions weekly on ChatGPT.

- Reporting citing OpenAI analysis says over 40 million people turn to ChatGPT daily for health information.

- Reporting citing OpenAI analysis suggests health insurance questions alone account for around 1.6M–1.9M messages per week.

- Major physician surveys in 2024 reported roughly two-thirds of physicians using some form of AI.

- The Change Healthcare breach was reported to affect about 192.7 million people.

Privacy And Governance: Healthcare Rules Do Not Map Neatly Onto Consumer AI

A persistent misconception is that “health data equals HIPAA.” In reality, HIPAA coverage depends on who holds the data and why. Hospitals, many clinics, and insurers are covered. Many consumer apps are not, even if they handle health-related information.

That gap is exactly where ChatGPT Health becomes a governance stress test. If a user uploads records into a consumer AI product, they may assume the protections of a hospital portal. But the legal perimeter can differ depending on how the data flows, which entities are covered, whether the service is functioning as a business associate, and what contractual protections are in place.

Regulators have tried to narrow the consumer-health gap. The U.S. Federal Trade Commission’s Health Breach Notification Rule has been updated and clarified for modern health apps and similar technologies not covered by HIPAA, including breach notification obligations for vendors of personal health records and related entities.

The deeper issue is that interoperability policies make it easier to move data, but they do not automatically make it safer once it moves. A world where patients can export their records anywhere is only as trustworthy as the weakest downstream recipient.

Who Regulates What When A User Connects Records?

| Layer | Typical Protections | Where ChatGPT Health Raises New Questions |
| --- | --- | --- |
| HIPAA-covered providers and insurers | Privacy and security rules tied to covered entities | What happens when data exits covered systems into consumer tools? |
| Consumer health apps and PHR vendors | FTC breach notifications and consumer protection enforcement | Do users understand which protections apply and when? |
| Interoperability enforcement | Access rights and anti-blocking expectations | Easier access increases exposure if downstream governance is uneven |
| Cybersecurity reality | Breach response norms and public reporting | Mega breaches have primed users to distrust “one more hub” |

The Safety Paradox: More Context Can Reduce Errors, But It Raises Liability

OpenAI’s logic is easy to understand: ground the assistant in a user’s real context and responses become more relevant and less generic. OpenAI has pointed to clinician involvement and feedback loops in shaping health-related outputs, and it emphasizes that the product is not intended to diagnose or treat.

But there is a tradeoff. Grounding in records does not eliminate error. It changes the error profile:

- Interpretation risk: lab values can be misread or oversimplified.

- Omission risk: a model can miss a crucial contraindication in a long record.

- Overconfidence risk: personalization can make advice feel authoritative even when it is wrong.

- Behavioral risk: people may delay care because the assistant sounds reassuring.

The broader market shows how quickly “informational” tools can become de facto clinical infrastructure. Microsoft’s healthcare-focused copilot direction, including ambient note creation and summarization, underscores that AI is becoming a workflow layer around medicine, not only a consumer toy.

Common Failure Modes And What Good Looks Like

| Risk | What It Looks Like In Practice | What Users Will Expect As A Trust Baseline |
| --- | --- | --- |
| Hallucination | Confident but invented explanations | Clear uncertainty signals and verification prompts |
| Mis-triage | Treating urgent symptoms as routine | Conservative escalation guidance |
| Privacy leakage | Accidental exposure via logs or integrations | Strong compartmentalization and access controls |
| Security incident | Credential theft or vendor compromise | Transparent incident response and user controls |

The Platform Play: A New Interface Layer Over The EHR Economy

Healthcare is not just a public good. It is also a massive software market built around electronic health records, revenue cycle management, and insurer workflows.

Market research estimates put the global EHR market at roughly $33.43 billion in 2024, with projections rising to the low-to-mid $40 billion range by 2030. Meanwhile, global spending tied to generative AI has been forecast to surge, with major analysts projecting hundreds of billions in annual spend by the mid-2020s.

ChatGPT Health sits at the intersection of these curves. If OpenAI becomes the place where users “read” their medical record, it gains influence over:

- how users interpret diagnoses and test results

- how users prepare questions for clinicians

- how users compare insurance plans and appeal denials

- where users choose to seek care next

This is the same power shift seen in other industries: the company that owns the interface layer can reshape the underlying market without owning the underlying infrastructure.

Winners And Losers If ChatGPT Becomes The Record Interpreter

| Stakeholder | Potential Upside | Potential Downside |
| --- | --- | --- |
| Patients | Better comprehension, faster navigation | New privacy risk, misplaced confidence |
| Clinicians | Better-prepared patients | More AI-mediated questions, liability ambiguity |
| EHR vendors | New distribution channel and engagement | Disintermediation if AI becomes the primary UI |
| Insurers | More informed consumers | More scrutiny and appeals |
| OpenAI and partners | Retention and new revenue paths | Reputational damage if a breach or harm case erupts |
| Regulators | Real-world test of modern rules | Pressure to act after harm, not before |

A crucial insight is that default settings will determine the public narrative. If the “easy path” nudges users to share more data than needed, trust will erode quickly. If the “easy path” encourages minimization and verification, adoption can grow without triggering a backlash cycle.

Regulation And Geography: Why Europe Is Excluded, And Why It Matters

OpenAI’s initial exclusion of the EEA, Switzerland, and the United Kingdom signals that consumer AI health products are now constrained by jurisdictional risk, not only engineering readiness.

Europe is implementing a risk-based AI framework, with staged obligations and deadlines that extend into 2026 and beyond. At the same time, reporting indicates ongoing political debate about the timing and complexity of “high-risk” obligations, including proposals to delay some elements. That combination creates uncertainty for a consumer product that touches medical records, because uncertainty itself becomes a legal and reputational hazard.

The Emerging Rulebook For AI Handling Health Data

| Region | What It Emphasizes | Why It Changes Product Strategy |
| --- | --- | --- |
| United States | Sectoral privacy, FTC enforcement for consumer health apps, interoperability penalties | Patchwork creates gaps and enforcement-by-case incentives |
| European Union | Risk-based AI obligations and stronger data protection norms | High compliance burden and shifting timelines increase launch friction |
| United Kingdom | Rising scrutiny of AI in clinical and quasi-clinical contexts | Demand for clearer governance may slow consumer-facing launches |

The forward-looking point is that trust will become partially geographic. AI health products may launch in “lower-friction” jurisdictions first, then expand after they can prove safeguards and withstand audits.

Expert Perspectives: The Debate Over What Trust Should Mean

There are two plausible narratives, and the truth likely sits between them.

One camp sees consumer AI as a patient empowerment tool. If people can read their records, understand test results, and arrive at appointments with better questions, the system’s efficiency improves. This viewpoint argues that the status quo already fails patients: medical language is opaque, portals are fragmented, and appointment times are short. From this angle, a record-aware assistant is a missing translation layer.

The other camp sees consumer AI as a privacy and safety trap. Health data is uniquely sensitive, and the history of tech platforms suggests a pattern: expand utility, expand data collection, then monetize attention or engagement. Even if OpenAI does not intend to monetize health data in that way, critics argue that shifting custody of medical information toward a consumer AI platform concentrates risk and increases the harm of any breach.

Neutral analysis suggests both camps are right about different parts of the system. Patients do need a translation layer. They also need clear boundaries, strong controls, and trustworthy governance. The key question is whether OpenAI can institutionalize those safeguards in a way the public can understand and regulators can verify.

What Comes Next: The Milestones That Will Define Trust In 2026

OpenAI has signaled that access will expand and that ChatGPT Health will become broadly available on major platforms. But the milestones that define trust are not release dates. They are stress tests.

Milestones To Watch

- Proof of minimization: Does the product encourage narrow, purpose-based sharing rather than “connect everything” by default?

- Third-party risk clarity: How transparently are partners and integrations explained, including what data they can access and retain?

- Incident response credibility: Any security issue will be judged against the healthcare industry’s recent breach history.

- Medical harm narratives: Even rare failures can dominate public perception because health stories spread quickly and personally.

- Regulatory tightening: FTC actions around consumer health data and evolving EU AI obligations will shape expansion decisions.

- A clearer healthcare policy blueprint: OpenAI has indicated broader policy work is forthcoming, which will influence how oversight is structured.

A Grounded Prediction

Analysts should treat ChatGPT Health as the start of a new category: the consumer AI health intermediary. It is not quite an EHR, not quite telehealth, not quite a medical device, and not quite a wellness app. That ambiguity is the opportunity and the danger.

If OpenAI can demonstrate clear separation, credible privacy guarantees, conservative safety behaviors, and transparent partner governance, it can set a de facto standard for consumer AI in healthcare. If it fails, the backlash will not stay confined to OpenAI. It will harden public skepticism toward medical AI broadly and likely accelerate stricter regulation that also hits clinical innovation.

The bottom line is simple: ChatGPT Health turns trust from a brand attribute into an operating requirement. The next phase of the AI era will not be defined by who has the best model. It will be defined by who can earn the right to hold the data.