A fresh wave of controversy surrounds Perplexity AI, an AI-powered search and chatbot platform, after a detailed report from Cloudflare claimed the company is bypassing website restrictions to retrieve and repurpose content without permission. Despite previous accusations and public denials, the new evidence suggests Perplexity may be intensifying these practices, raising ethical, technical, and legal questions across the AI industry.

Background: Perplexity’s History With Scraping Allegations

Concerns around Perplexity’s web crawling methods began surfacing in mid-2024, when media organizations such as Wired, The New York Times, and BBC accused the company of violating standard internet protocols by scraping content from their sites without permission.

The issue revolves around robots.txt, the file that implements the Robots Exclusion Protocol (standardized as RFC 9309). It lets websites set rules for automated bots, essentially telling them which paths they may and may not access. Though not a law, the protocol is widely respected by responsible companies, especially those building crawlers for indexing or AI training.
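To make the mechanism concrete, here is a minimal sketch of how a well-behaved crawler might consult robots.txt before fetching a page, using Python's standard `urllib.robotparser`. The rules, the `example.com` URLs, and the `SomeOtherBot` name are illustrative assumptions, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site (illustrative only).
ROBOTS_TXT = """\
User-agent: PerplexityBot
Disallow: /

User-agent: *
Disallow: /private/
"""

# Parse the rules from memory instead of fetching them over the network.
parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the parsed rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("PerplexityBot", "SomeOtherBot"):
        for url in ("https://example.com/article",
                    "https://example.com/private/x"):
            print(agent, url, is_allowed(agent, url))
```

The check depends entirely on the user-agent name the crawler reports. A request that identifies itself as an ordinary browser matches only the wildcard rule, which is why the protocol relies on crawlers identifying themselves honestly.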

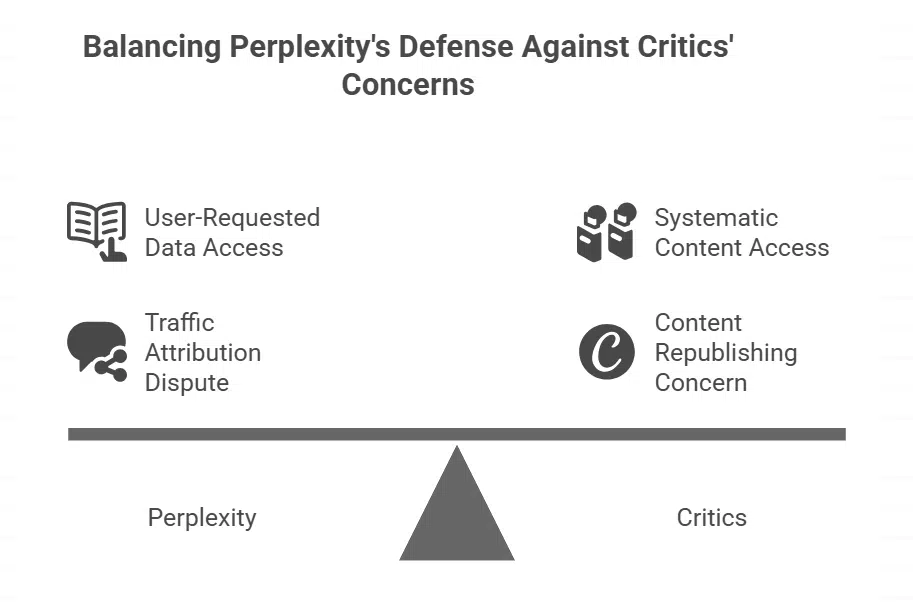

At the time, Perplexity tried to distance itself from the accusations by blaming the behavior on unnamed third-party vendors. The company also attempted to clarify that when a user manually provides a web URL, its system fetches the content only in response to that request. However, media outlets, legal experts, and technical watchdogs argued that pulling content from a blocked site and republishing summaries—especially at scale—still constitutes scraping, regardless of whether a user initiated it.

Over time, major media companies issued legal threats, accusing Perplexity of plagiarism, unfair use of intellectual property, and acting in bad faith. While Perplexity dismissed these claims, the debate continued to grow within tech circles.

What Cloudflare Discovered: Stealth Tactics and IP Rotation

The controversy has now escalated due to a new investigation by Cloudflare, a major internet infrastructure and security firm that protects websites from harmful traffic. In August 2025, Cloudflare published a technical report exposing what it describes as coordinated, stealthy crawling activity tied to Perplexity.

Cloudflare created controlled test environments using websites with strict robots.txt files, disallowing any access by bots—including those using Perplexity’s declared user-agent names (such as “PerplexityBot” and “Perplexity-User”). Even so, Perplexity’s systems still accessed these pages.

Here’s what Cloudflare uncovered:

- Perplexity first attempted access using its known user-agents and IP addresses.
- After being blocked, the platform switched tactics, disguising its identity by posing as a Google Chrome browser running on a macOS system, using a generic user-agent string.
- These requests came from rotating IP addresses that were not registered under Perplexity’s known infrastructure, making the traffic hard to attribute.
- The crawler rotated through different Autonomous System Numbers (ASNs) to evade IP-based firewall blocks.
- This activity was seen across tens of thousands of domains, generating millions of unauthorized content requests per day.

Using machine learning tools and network fingerprinting, Cloudflare matched these patterns to Perplexity’s infrastructure—concluding that the company had created a system specifically designed to bypass anti-bot protections and extract content from blocked websites.
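The pattern described above (a declared crawler is blocked, then a generic browser request for the same page arrives from unrelated addresses) can be illustrated with a simple time-window correlation heuristic. This is only a toy sketch and not Cloudflare's method, which the report attributes to machine learning and network fingerprinting. The `Request` type, the 403 status convention, the five-minute window, and the address range (taken from reserved documentation space) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Declared crawler names mentioned in the article.
DECLARED_AGENTS = ("PerplexityBot", "Perplexity-User")
# Hypothetical "published" crawler range (reserved documentation addresses).
DECLARED_NETWORKS = [ip_network("192.0.2.0/24")]
# Assumed correlation window in seconds.
WINDOW_SECONDS = 300


@dataclass(frozen=True)
class Request:
    timestamp: float  # seconds since some reference point
    ip: str
    user_agent: str
    path: str
    status: int       # HTTP status returned to the client


def is_declared(req: Request) -> bool:
    """True if the user-agent contains one of the declared crawler names."""
    ua = req.user_agent.lower()
    return any(name.lower() in ua for name in DECLARED_AGENTS)


def in_declared_network(ip: str) -> bool:
    """True if the address falls inside a declared crawler range."""
    addr = ip_address(ip)
    return any(addr in net for net in DECLARED_NETWORKS)


def flag_follow_ups(log: list[Request]) -> list[Request]:
    """Flag undeclared requests that hit a path shortly after a declared
    crawler was blocked (status 403) on that same path."""
    blocked_at: dict[str, float] = {}  # path -> time of latest blocked attempt
    flagged = []
    for req in sorted(log, key=lambda r: r.timestamp):
        if is_declared(req):
            if req.status == 403:
                blocked_at[req.path] = req.timestamp
            continue
        last = blocked_at.get(req.path)
        if (last is not None
                and req.timestamp - last <= WINDOW_SECONDS
                and not in_declared_network(req.ip)):
            flagged.append(req)
    return flagged
```

A heuristic this simple would also flag real visitors who happen to load the page inside the window, so it serves only to show the shape of the correlation, not as a usable detector.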

Not Just a Technical Issue — Ethical and Legal Dimensions

While robots.txt is not a legally binding regulation, it plays a critical role in maintaining internet ethics and fair digital conduct. Companies like Google, Microsoft, and OpenAI have historically respected it, even when not legally obligated to do so.

Cloudflare noted that OpenAI’s crawlers stopped when robots.txt directives disallowed access, while Perplexity allegedly continued by using deceptive methods.

This distinction is important. When bots ignore robots.txt—or worse, disguise their identity—it erodes the trust between website owners and the broader internet ecosystem. Content creators, especially news publishers and educators, rely on tools like robots.txt to control where and how their content is used.

Perplexity’s alleged evasion methods undermine that balance. If true, they suggest the company was intentionally circumventing standard safeguards, rather than operating in good faith.

Perplexity’s Response and Public Defense

In reaction to the Cloudflare report, Perplexity denied any wrongdoing. The company dismissed the accusations as based on misunderstandings or misinformation, calling Cloudflare’s report an attempt to gain publicity.

Perplexity maintains that its technology only fetches pages when explicitly requested by users, and claims this behavior doesn’t count as traditional crawling. The company also suggested that some of the traffic attributed to it may not have come from its own infrastructure.

However, security researchers and journalists argue that this line of reasoning doesn’t hold up when such requests are made at scale and when content is systematically accessed, summarized, and republished without consent.

Not an Isolated Case in the AI Race

To be fair, Perplexity isn’t the only AI company facing such allegations. Other players like OpenAI and Anthropic have also been accused of overreaching in their efforts to collect training data. Some critics have even described OpenAI’s crawling behavior as resembling Distributed Denial-of-Service (DDoS) attacks, due to the massive traffic spikes experienced by websites.

What makes Perplexity’s case stand out is the specificity and scale of its evasive behavior, as well as its direct conflict with major infrastructure providers like Cloudflare.

Apple’s Possible Acquisition: A Risky Move?

This controversy comes at a particularly sensitive time, as reports continue to suggest that Apple is considering acquiring Perplexity AI to strengthen its AI capabilities.

While the acquisition hasn’t been confirmed, the timing raises serious questions about Apple’s commitment to privacy, ethics, and transparency. Apple has historically branded itself as a protector of digital rights and responsible data handling.

Acquiring a company with Perplexity’s alleged record of unauthorized data extraction and defensive public relations could damage Apple’s credibility unless it takes strong steps to clean house and enforce ethical standards.

If Apple moves forward with the deal, it may hope that its own governance and policies can reform Perplexity’s operations. However, critics argue that the end does not justify the means, and that legitimizing such a company could send the wrong signal to the broader AI community.