Right now, the AI race is shifting from data centers to the physical world. Tesla’s FSD is one of the biggest real-world tests of “edge AI” at scale. Nvidia can win the GPU war and still lose the next phase if autonomy’s data flywheel and in-car compute standardize around closed, Nvidia-light stacks.

Nvidia’s modern dominance was forged in data centers, where it set the standard for training and increasingly for inference. But the next competitive frontier is not just bigger models. It is reliable intelligence running in messy environments: driving, robotics, warehouses, factories, and logistics. Nvidia has branded this transition “physical AI,” and Tesla’s Full Self-Driving (FSD) program is one of the most consequential experiments in that domain, because it tries to push autonomy into everyday consumer use and learn from it continuously.

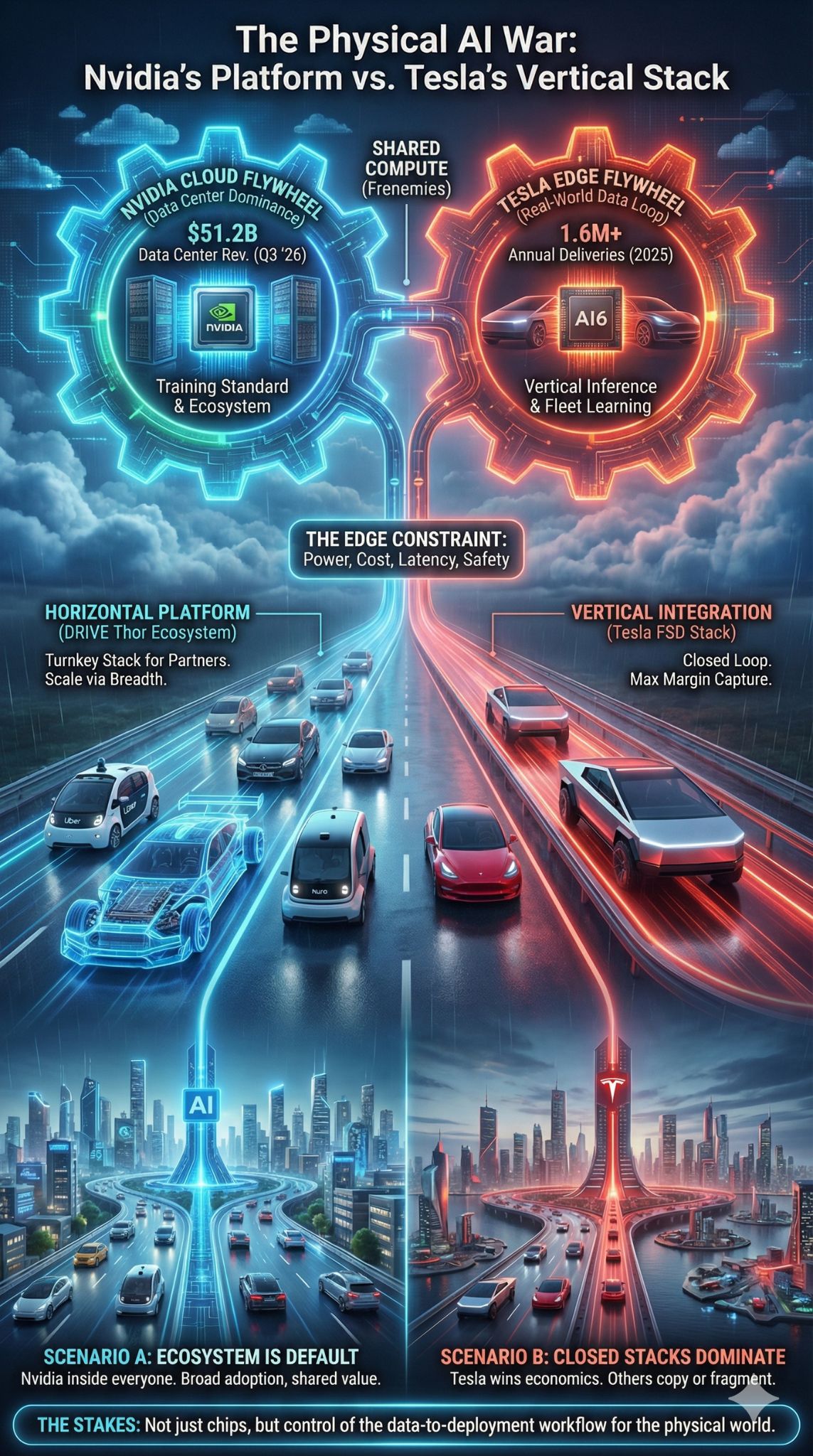

The “frenemies” framing is not drama. It is strategy. Tesla is simultaneously (1) a compute-hungry AI builder that can strengthen Nvidia’s ecosystem and (2) a vertical integrator that could prove Nvidia is optional in the most valuable edge-AI market. The stakes are not limited to whether Nvidia sells a chip into Tesla vehicles. The stakes are whether Nvidia’s platform becomes the default workflow for physical AI, or whether the future belongs to closed stacks that treat Nvidia as a temporary supplier for training, not a lasting standard.

How We Got Here: Two Flywheels Converged

Nvidia’s recent financials tell a simple story: generative AI demand is enormous and still expanding. In its fiscal Q3 2026 results (quarter ended October 26, 2025), Nvidia reported $57.0B in revenue, driven overwhelmingly by Data Center revenue of $51.2B, while Automotive revenue was $592M. This split matters. Data Center was roughly 90% of revenue and Automotive about 1%, so the “next frontier” (automotive and robotics), however strategically important, is still small in today’s income statement.
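As a quick check on that split, the segment shares can be computed directly from the three reported figures. The following is a minimal Python sketch that uses only the numbers quoted above. The dictionary and function names are illustrative, and because the headline figures are rounded as reported, the percentages are approximate.

```python
# Nvidia fiscal Q3 2026 figures as quoted in the article (USD billions).
# Totals are rounded as reported, so the derived shares are approximate.
nvidia_q3_fy2026: dict[str, float] = {
    "total": 57.0,
    "data_center": 51.2,
    "automotive": 0.592,
}


def segment_share(figures: dict[str, float], segment: str) -> float:
    """Return one segment's revenue as a fraction of total revenue."""
    return figures[segment] / figures["total"]


dc_share = segment_share(nvidia_q3_fy2026, "data_center")
auto_share = segment_share(nvidia_q3_fy2026, "automotive")
# Dollars of Data Center revenue per dollar of Automotive revenue.
dc_to_auto = nvidia_q3_fy2026["data_center"] / nvidia_q3_fy2026["automotive"]

print(f"Data Center share of revenue: {dc_share:.1%}")  # 89.8%
print(f"Automotive share of revenue: {auto_share:.1%}")  # 1.0%
print(f"Data Center / Automotive: {dc_to_auto:.0f}x")  # 86x
```

On these figures, Nvidia earned roughly 86 dollars of Data Center revenue for every dollar of Automotive revenue in the quarter, which puts the size of today’s automotive business in perspective.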

Tesla’s financial and operational story is different. It remains a car-and-energy company in revenue composition, but it increasingly communicates like an AI company in ambition. In its Q3 2025 update, Tesla emphasized autonomy expansion and scaling AI training capacity, describing its training compute as 81k “H100 equivalents.” Whether that figure represents direct Nvidia hardware, mixed vendors, or normalization, it signals the same reality: autonomy training is now a frontier-scale compute problem, not a side project.

Then came a notable pivot signal in 2025. Reuters and other outlets reported that Tesla had disbanded its Dojo supercomputer team, implying Tesla would rely more on external compute suppliers than on a single internal moonshot. Even if Dojo’s ideas persist in other forms, the market takeaway was clear: the autonomy compute bill is too large and too time-sensitive to bet on one homegrown path.

At the same time, Nvidia has been assembling an autonomy ecosystem that can scale without Tesla. The Lucid–Nuro–Uber robotaxi partnership highlighted at CES 2026 is a public proof point for Nvidia’s in-vehicle compute platform (DRIVE AGX Thor) and its strategy of selling a full stack to many partners rather than building a single robotaxi service.

That is the collision: Tesla is trying to build autonomy as a closed-loop product, while Nvidia is trying to become the platform layer for everyone else’s autonomy. Both strategies can win. They can also clash.

Key Statistics That Frame The Stakes

- Nvidia revenue (Fiscal Q3 2026): $57.0B

- Nvidia Data Center revenue (Fiscal Q3 2026): $51.2B

- Nvidia Automotive revenue (Fiscal Q3 2026): $592M

- Tesla revenue (Q3 2025): $28.095B

- Tesla operating margin (Q3 2025): 5.8%

- Tesla free cash flow (Q3 2025): $3.99B

- Tesla training compute disclosure: 81k H100 equivalents

- Tesla deliveries (Q4 2025): 418,227

- Tesla full-year deliveries (2025): 1,636,129

- Tesla energy storage deployments (Q4 2025): 14.2 GWh

These numbers highlight the asymmetry. For Nvidia, automotive is still mostly option value. For Tesla, autonomy is the route to higher-margin software economics. That difference in incentives is what creates “frenemies.”
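To make the asymmetry concrete, here is a back-of-envelope Python sketch built only from the quarterly figures listed above. The constant names are illustrative. It compares the value of one percentage point of Tesla operating margin with Nvidia’s entire quarterly Automotive revenue. That is a scale comparison, not a like-for-like one: it sets operating income against revenue, and the two companies’ fiscal quarters do not line up exactly. Because the 5.8% margin is rounded, the derived income figure is also approximate.

```python
# Quarterly figures as quoted in the article (USD billions unless noted).
TESLA_REVENUE_Q3_2025 = 28.095
TESLA_OPERATING_MARGIN_Q3_2025 = 0.058  # reported as 5.8%, so derived income is approximate
TESLA_FCF_Q3_2025 = 3.99
NVIDIA_AUTOMOTIVE_REVENUE_Q3_FY2026 = 0.592

# Operating income implied by revenue x operating margin.
implied_operating_income = TESLA_REVENUE_Q3_2025 * TESLA_OPERATING_MARGIN_Q3_2025
# Free cash flow as a fraction of revenue.
fcf_margin = TESLA_FCF_Q3_2025 / TESLA_REVENUE_Q3_2025
# Extra operating income per quarter from one more percentage point of margin.
one_margin_point = TESLA_REVENUE_Q3_2025 * 0.01
# Margin points needed to equal Nvidia's quarterly Automotive revenue (scale comparison only).
points_to_match_nvidia_auto = NVIDIA_AUTOMOTIVE_REVENUE_Q3_FY2026 / one_margin_point

print(f"Implied operating income: ${implied_operating_income:.2f}B")  # $1.63B
print(f"Free cash flow margin: {fcf_margin:.1%}")  # 14.2%
print(f"Value of +1 margin point: ${one_margin_point:.2f}B per quarter")  # $0.28B
print(f"Margin points to match Nvidia Automotive revenue: {points_to_match_nvidia_auto:.1f}")  # 2.1
```

On these numbers, roughly two extra points of Tesla operating margin would add about as much per quarter as Nvidia’s whole Automotive segment currently brings in as revenue. That is why autonomy economics weigh far more heavily on Tesla’s income statement than automotive currently does on Nvidia’s.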

Nvidia, Tesla FSD, And The Data Flywheel That Changes Everything

The most valuable asset in physical AI is not just compute. It is learning loops that convert real-world interaction into better models, faster than competitors can copy.

Driving is long-tail. The rare events are the hardest. Construction zones, poor lane markings, unusual merges, erratic pedestrians, emergency vehicles, mixed signage, rain glare, fog, and region-specific driving culture create a combinatorial explosion of edge cases. This is why autonomy has advanced more slowly than many early forecasts predicted.

Tesla’s bet is that a large deployed fleet plus continuous iteration can turn this long-tail problem into an advantage. In Tesla’s own communications, it repeatedly positions its approach as a “universal model” that improves and scales across cities, rather than bespoke city-by-city mapping as the primary constraint. Even if that vision is only partially realized, the strategic direction is unmistakable: the fleet becomes a training asset.

For Nvidia, this is the crux of why Tesla matters. Nvidia can keep winning the cloud training race and still face an uncomfortable outcome: the biggest real-world autonomy learning loop could standardize around a stack that does not meaningfully expand Nvidia’s influence at the edge. If Tesla proves that vertical integration is the best path to autonomy economics, other companies may respond by copying the vertical pattern where possible, or by tightening their dependence on a single platform supplier.

Nvidia’s most durable advantage has been its ecosystem: CUDA, developer tooling, libraries, platform integrations, and the maturity of its optimization stack. In physical AI, the ecosystem prize is bigger: it is the workflow from data capture to simulation, training, validation, and deployment on constrained edge hardware. Tesla’s FSD is an extreme stress test of that workflow.

If Nvidia is not part of the autonomy learning loop, then the “AI war” gradually shifts from being about GPUs to being about closed-loop deployment systems where hardware is replaceable and the learning loop is not.

Compute Arms Race: Training Is Still Nvidia’s Home Turf

Tesla’s “H100 equivalents” disclosure is important because it places autonomy training closer to frontier AI labs than to traditional automotive R&D. It also clarifies why this story is bigger than “does Tesla buy Nvidia chips.”

Training autonomy models is expensive for three reasons:

- Data volume and refresh rate: driving generates enormous volumes of video, and the labels are complex.

- Iteration speed: edge-case learning requires constant retraining and evaluation.

- Simulation and validation: safety requires testing across huge scenario spaces.

Nvidia benefits when autonomy developers scale training. It benefits even more if autonomy developers standardize on Nvidia tooling and model deployment paths.

But the competitive context is tightening. Hyperscalers and large AI buyers increasingly pursue custom silicon for cost control and supply independence. AWS’s push to scale Trainium-based clusters (including Project Rainier) reflects a broader trend: major buyers do not want to be structurally dependent on a single vendor forever. Tesla has the same impulse, which is why it has invested in in-house chips and manufacturing partnerships for AI silicon.

So Nvidia’s strategic question becomes: Can Nvidia turn training dominance into end-to-end physical AI dominance, or does training become a commoditized phase where customers diversify away over time?

A Snapshot Of Where The Money Is Today

| Company | Segment Most Relevant To AI War | Latest Disclosed Figure | What It Suggests |
| --- | --- | --- | --- |
| Nvidia | Data Center | $51.2B (Fiscal Q3 2026) | Cloud AI demand remains the engine |
| Nvidia | Automotive | $592M (Fiscal Q3 2026) | Physical AI is early-stage in revenue |
| Tesla | Total revenue | $28.095B (Q3 2025) | Tesla remains hardware-heavy today |
| Tesla | Free cash flow | $3.99B (Q3 2025) | Autonomy compute investment is fundable |
| Tesla | Training compute | 81k H100 equivalents (Q3 2025) | Autonomy is a frontier-scale compute buyer |

This table also highlights why Nvidia may “need” Tesla in a narrative sense. Tesla is among the few consumer-facing companies that can make physical AI feel inevitable, not theoretical. That perception matters for capital allocation across the industry.

Edge Inference: The Next AI War Is Inside A Power Budget

If training made Nvidia the king, inference at the edge is where power dynamics change.

Data centers let you solve problems by spending more watts and more servers. Cars cannot. In-vehicle compute must hit cost targets, survive temperature swings, meet reliability constraints, and pass safety and compliance requirements. That reality favors specialized silicon and vertical integration.

Tesla has historically been strong here. It has a track record of custom vehicle compute and a preference for controlling the software-to-silicon interface. That approach can yield better efficiency and a tighter optimization loop. It is also a direct threat to Nvidia’s ambitions to sell in-car autonomy compute broadly.

Nvidia’s response is not to sell a chip alone. It sells a platform: DRIVE hardware, software, simulation, developer tooling, safety concepts, and integration support. Nvidia’s pitch is that the platform reduces time-to-market and de-risks autonomy development for companies that cannot build Tesla-style vertical stacks.

The Lucid–Nuro–Uber robotaxi partnership is a symbolic win for Nvidia’s platform play. It demonstrates a world where autonomy is assembled as a stack: a vehicle partner, an autonomy system builder, a fleet and distribution partner, and Nvidia as the compute and platform layer.

Tesla is the counterfactual. Tesla is the world where the stack is internalized.

The strategic tension is this: Tesla’s success expands the autonomy market, but it may also convince the best-positioned players to internalize more of the stack, limiting Nvidia’s share of edge inference.

Safety, Regulation, And The Level-2 Ceiling That Shapes Everything

Autonomy is not only an engineering problem. It is also a governance and liability problem.

The SAE J3016 taxonomy has become a global reference language for “levels” of driving automation. The difference between Level 2 (driver assistance) and higher levels is not just capability. It is responsibility. Tesla’s own investor materials emphasize that current systems labeled “supervised” still require active driver supervision and do not make the vehicle autonomous.

Regulators care about that boundary because it controls consumer expectations and crash accountability. In the United States, NHTSA scrutiny around Autopilot and driver engagement has shaped how driver-assistance systems are messaged and updated. These investigations and recalls are not mere headlines. They are signals about how quickly autonomy can scale and how strict the compliance environment will remain.

For Nvidia, regulation can either unlock or delay its automotive opportunity:

- If regulation remains tight and consumer deployments remain supervised for years, the market for high-end autonomy compute grows slower.

- If supervised systems transition into broadly accepted commercial robotaxi deployments, demand for edge inference, validation tooling, and simulation platforms can accelerate sharply.

Tesla is pivotal here because it is the most visible autonomy brand in consumer vehicles. Its safety record, messaging, and regulatory posture shape public trust for the category.

This is another reason Nvidia “needs” Tesla’s FSD to succeed, at least at the level of category momentum. Nvidia’s platform story becomes easier when the public believes physical AI is ready for mainstream deployment.

Platform Wars: Vertical Integration Versus A Horizontal Stack

The future of autonomy could look like one of three archetypes:

- Vertical integration: one company owns vehicles, autonomy software, fleet operations, and economics.

- Horizontal platform ecosystem: OEMs and autonomy developers assemble a stack with platform suppliers.

- Operator-centric robotaxi: a dedicated operator controls vehicles, sensors, software, and service delivery.

Tesla is closest to the vertical model. Nvidia is building for the horizontal model. Waymo and similar efforts resemble the operator model.

These archetypes matter because they determine who captures value:

- In vertical integration, value accrues to the owner of the loop, and hardware becomes a tool.

- In horizontal ecosystems, value is shared, and platform suppliers can take meaningful share.

- In operator models, value accrues to the operator, and suppliers compete for slots.

Nvidia’s best-case scenario is a horizontal market, because it can scale across many partners. Tesla’s best-case scenario is vertical dominance, because it captures most of the economics of autonomy.

Comparing The Three Models

| Dimension | Tesla-Style Vertical Model | Nvidia-Style Platform Model | Waymo-Style Operator Model |
| --- | --- | --- | --- |
| Control | Centralized | Distributed across partners | Centralized |
| Scaling lever | Fleet learning loop | Many OEMs and developers | City-by-city operations |
| Hardware role | Often internal and custom | Platform slot for suppliers | Dedicated system design |
| Key risk | Regulatory and liability concentration | Fragmentation and partner execution | Operational cost and expansion pace |
| What “winning” looks like | Highest autonomy margin capture | Widest platform adoption | Highest service reliability and scale |

The “frenemies” insight is that Nvidia benefits if Tesla proves autonomy is commercially real, but it also benefits if Tesla’s vertical model does not become a template that everyone else can replicate.

Geopolitics And Supply Chains: The AI War Is Also An Export-Control Story

Nvidia’s growth is sensitive to export controls and licensing regimes. Any uncertainty in its highest-margin data center business increases the strategic appeal of diversifying into automotive, robotics, and industrial AI. Even if automotive revenue is small today, it is a path to reducing reliance on any single region’s demand or any single policy environment.

Tesla’s supply chain strategy also reflects hedging. Tesla’s willingness to partner on manufacturing advanced AI chips (including large semiconductor manufacturing deals reported in 2025) signals a long-term plan to keep autonomy compute under its control and reduce bottlenecks.

This matters because “physical AI” is not only about algorithms. It is about secure, scalable production of high-performance compute. If the AI race becomes more fragmented geopolitically, companies will value supply assurance as much as raw performance.

That reality cuts both ways for Nvidia:

- It creates demand for Nvidia’s proven platform and supply chain coordination.

- It encourages customers like Tesla to diversify suppliers and increase internal capability.

Winners And Losers: What Different Outcomes Mean

The relationship between Nvidia and Tesla does not have a single end state. Several plausible futures exist, and each reshapes the AI landscape.

| Scenario | What Happens | Likely Winners | Likely Losers |
| --- | --- | --- | --- |
| Tesla stays supervised for longer | FSD improves but remains Level 2 supervised in practice | Suppliers of driver-assist components, insurers with clear liability rules | Robotaxi timelines, platform suppliers banking on rapid autonomy scale |
| Tesla achieves scalable robotaxi economics | Tesla proves autonomy can generate durable high-margin revenue | Tesla, autonomy compute ecosystem, cities that attract deployment | Traditional ride-hailing margins, OEMs without autonomy differentiation |
| Nvidia becomes the default “physical AI” platform | Many OEMs and AV builders standardize on Nvidia end-to-end tooling | Nvidia, partner ecosystems like Nuro-style builders | Fragmented smaller stacks without integration capacity |
| Vertical integration spreads | More players attempt Tesla-like in-house stacks | Large tech and OEM giants with capital | Pure-play platform suppliers, smaller OEMs |
| Regulation tightens after high-profile events | Deployment slows, compliance costs rise | Incumbents with strongest safety validation | Aggressive deployment strategies, smaller AV startups |

This table makes the key point: Nvidia’s upside is not strictly dependent on selling chips into Tesla vehicles. Nvidia’s bigger upside depends on whether the world chooses the platform model at scale, and Tesla’s success influences that choice.

Expert Perspectives And The Best Counterarguments

A neutral analysis must take seriously the strongest objections.

Nvidia does not need Tesla because automotive is tiny in revenue.

True in today’s numbers. Nvidia’s data center business dwarfs automotive. But revenue mix can change quickly when a category becomes credible. Autonomy, robotics, and industrial AI are credibility-driven markets. Once deployment proves profitable, the infrastructure buildout can accelerate.

Tesla will keep the core stack closed, so Nvidia cannot “win” through Tesla.

Also plausible. But Nvidia can still benefit from Tesla as a category catalyst. Tesla’s achievements can force competitors to spend, and most competitors will not build Tesla-style vertical stacks. They will buy platforms, and Nvidia wants to be the best platform.

Custom silicon will erode Nvidia’s dominance over time.

A real risk. Custom chips can lower costs and reduce dependence on Nvidia. Yet custom silicon does not automatically replace a mature software ecosystem. Nvidia’s moat is tooling, optimization, and integration velocity. The question is whether Nvidia can make that moat portable to the edge, not just the cloud.

What Comes Next: The 2026 Milestones That Will Reveal The Direction

Predictions should remain conditional. Autonomy timelines can slip, and regulatory shifts can change the pace. Still, several near-term milestones can clarify whether the Nvidia and Tesla FSD relationship becomes a deeper strategic story or remains a loose coexistence.

Milestones To Watch

| Milestone | Why It Matters | What A “Signal” Looks Like |
| --- | --- | --- |
| Tesla autonomy disclosures in 2026 earnings updates | Indicates whether compute investment is accelerating | Rising AI capex language, clearer robotaxi rollout targets |
| Expansion of supervised FSD availability across regions | Tests whether Tesla can globalize its learning loop | New country approvals and faster iteration cadence |
| More platform-based robotaxi announcements | Shows Nvidia can scale without Tesla | Additional OEM and AV partnerships using Nvidia DRIVE |
| Regulatory actions and rulemaking updates | Sets the ceiling for consumer deployment speed | Clearer definitions, stricter driver monitoring expectations |
| Supply chain and export-control stability | Impacts Nvidia’s urgency to diversify growth | Fewer licensing shocks, steadier order visibility |

A Reasoned 2026 Outlook

Market indicators point to a two-track autonomy world in the near term.

- Track one: supervised consumer deployments keep improving but remain constrained by liability and driver behavior, which limits the pace of “true autonomy” claims.

- Track two: robotaxi pilots expand through partnerships and controlled operating domains, where responsibility can be centralized and validated more rigorously.

In that world, Nvidia’s best path is to become the platform for track two and the tooling backbone for track one. Tesla’s best path is to prove a closed-loop consumer fleet can be the fastest improvement engine, then convert that advantage into service economics.

This is why the “frenemies” angle is so revealing. Tesla’s progress validates the category that Nvidia wants to platformize. Tesla’s vertical ambition also threatens Nvidia’s edge footprint. Nvidia does not need Tesla to survive. Nvidia benefits materially if Tesla accelerates physical AI adoption, because the rest of the market will need a turnkey stack. But Nvidia should also assume the most capable players will try to internalize more of the value chain over time.

Final Thoughts

The Nvidia and Tesla FSD story is about more than two famous companies. It is a preview of the next AI era, where value shifts from training benchmarks to deployed intelligence in the real world. The winners will be the companies that control the workflow from data to deployment and can prove safety, efficiency, and reliability at scale.

If Tesla turns autonomy into a profitable, repeatable service, it will pull the entire industry forward. Nvidia’s opportunity is to make that pull translate into platform dominance across the broader ecosystem. Nvidia’s risk is that Tesla proves the most valuable physical AI loops are closed, vertically integrated systems where hardware vendors are interchangeable.

The next twelve months will not settle the autonomy question, but they will reveal whether the market is standardizing around platforms or fragmenting into closed stacks. That is the real “AI war” that comes after the GPU boom.