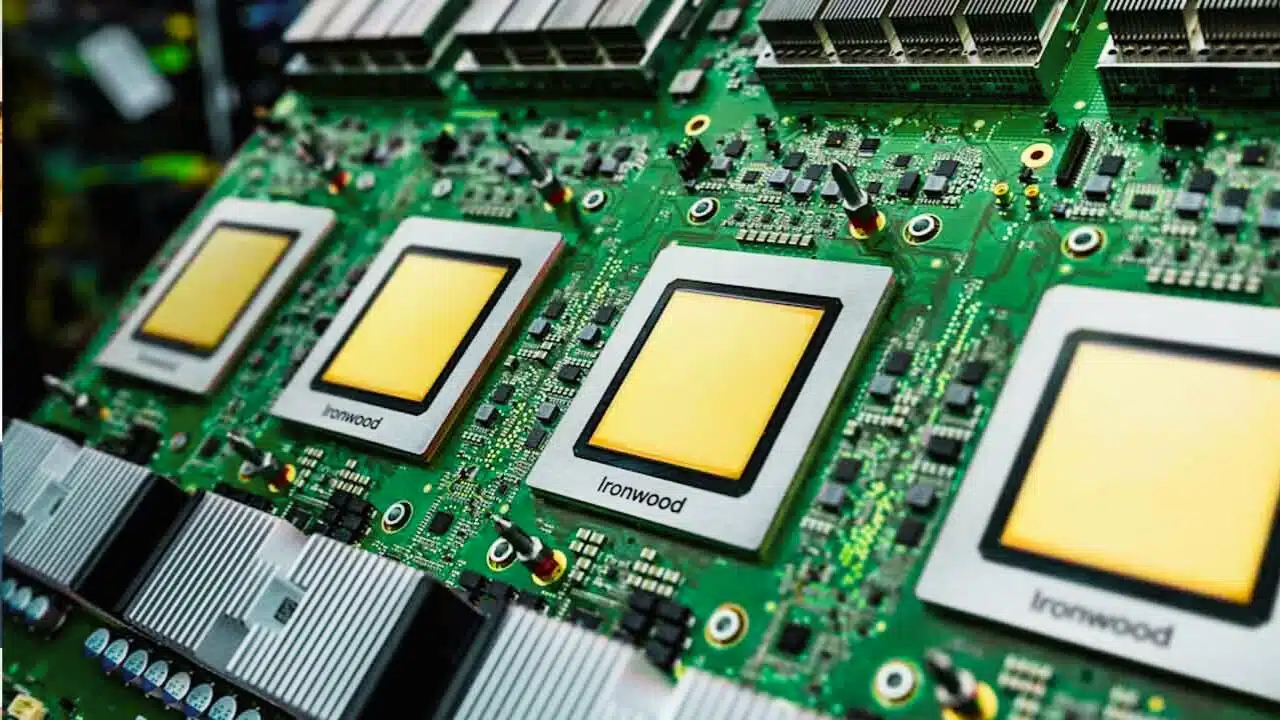

On November 6, 2025, Google announced that Ironwood, the seventh-generation Tensor Processing Unit (TPU) in its custom silicon lineup, would become generally available to Google Cloud customers. Ironwood was first unveiled at Google Cloud Next in April 2025. The chip is engineered for the escalating demands of artificial intelligence workloads, from massive model training to real-time inference.

This launch represents a pivotal escalation in Google’s strategy to erode Nvidia’s stronghold in the AI hardware sector, where Nvidia’s GPUs have long been the default choice for data centers and cloud providers thanks to their versatility and ecosystem support. Building on a decade of in-house TPU development, Ironwood emphasizes not just raw computational power but also tight integration with Google Cloud’s infrastructure. That integration lets developers scale AI operations without many of the bottlenecks that general-purpose hardware introduces.

The rollout coincides with surging global AI adoption, as enterprises seek alternatives to Nvidia’s supply-constrained and energy-intensive chips. Ironwood is positioned as a cornerstone of Google’s AI Hypercomputer platform, which unifies compute, storage, and networking under a single management layer. Early adopters have already signaled large commitments. Anthropic plans to use up to one million TPUs to power its Claude models, with well over a gigawatt of capacity expected online in 2026, underscoring the chip’s potential to reshape enterprise AI economics.

Detailed Specifications and Architecture

At its core, each Ironwood TPU adopts a dual-die design that delivers 4,614 TFLOPs of peak compute in FP8. FP8 is a low-precision number format well suited to the dense matrix multiplications at the heart of neural networks. Each chip carries 192 GB of HBM3e memory with 7.3 TB/s of bandwidth, so data reaches the compute units without stalling them. The memory subsystem is a significant upgrade: eight HBM3e stacks address the memory hunger of large language models (LLMs) and mixture-of-experts (MoE) architectures, and a full pod exposes 1.77 PB of directly addressable shared memory.
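The pod-level totals follow from simple multiplication of the per-chip figures. The short Python sketch below assumes the 9,216-chip pod size quoted later in this article and decimal units (1 PB = 10^6 GB, 1 EFLOP = 10^6 TFLOPs). The constant and function names are illustrative only.

```python
# Per-chip figures as quoted in this article; the pod size is its full-pod figure.
CHIP_FP8_TFLOPS = 4_614   # peak FP8 TFLOPs per chip
CHIP_HBM_GB = 192         # GB of HBM3e per chip
POD_CHIPS = 9_216         # chips in a full pod


def pod_totals(chips: int = POD_CHIPS) -> tuple[float, float]:
    """Return (peak FP8 ExaFLOPs, total HBM in PB) for a pod of `chips` chips.

    Decimal units are assumed: 1 EFLOP = 1e6 TFLOPs and 1 PB = 1e6 GB.
    """
    exaflops = chips * CHIP_FP8_TFLOPS / 1e6
    petabytes = chips * CHIP_HBM_GB / 1e6
    return exaflops, petabytes


if __name__ == "__main__":
    for n in (256, POD_CHIPS):  # smallest and largest configurations mentioned
        ef, pb = pod_totals(n)
        print(f"{n:>5} chips: {ef:.2f} EFLOPs FP8, {pb:.3f} PB HBM")
```

For the full pod this reproduces the article’s 42.5 ExaFLOPs and 1.77 PB. A 256-chip slice works out to roughly 1.18 ExaFLOPs under the same assumptions.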

Chips communicate over Google’s proprietary Inter-Chip Interconnect (ICI) network at 9.6 Tb/s, providing low-latency links between accelerators. Dynamic voltage and frequency scaling tunes energy use to varying workload intensities. Reliability is built in through features such as built-in self-test (BIST), logic repair, and real-time arithmetic verification that detects and mitigates silent data corruption. These features draw on lessons from Google’s previous TPU deployments, which achieved 99.9% uptime in liquid-cooled systems.

Ironwood also benefited from AI-assisted design. Google’s AlphaChip team helped optimize the ALU circuits and floorplan, contributing to roughly twice the performance per watt of its predecessor, Trillium (TPU v6e). Google’s third-generation liquid cooling system sustains the chip’s high-density operation. The 1.2 TB/s bandwidth figure Google quotes is the same 9.6 Tb/s ICI rate expressed in bytes rather than bits, and it serves the varied data movement of reinforcement learning and multimodal AI tasks.
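Network rates are usually quoted in bits and memory rates in bytes, so figures like 9.6 Tb/s and 1.2 TB/s are easy to mistake for two different specifications. The minimal Python check below assumes both numbers describe the same per-chip interconnect bandwidth, with 8 bits per byte. The constant names are illustrative.

```python
BITS_PER_BYTE = 8

ICI_TBIT_PER_S = 9.6   # interconnect figure quoted in the article, in Tb/s
IO_TBYTE_PER_S = 1.2   # bandwidth figure quoted in the article, in TB/s


def tbit_to_tbyte(tbit_per_s: float) -> float:
    """Convert terabits per second to terabytes per second."""
    return tbit_per_s / BITS_PER_BYTE


if __name__ == "__main__":
    converted = tbit_to_tbyte(ICI_TBIT_PER_S)
    # Compare with a tolerance rather than == to stay robust to float rounding.
    same = abs(converted - IO_TBYTE_PER_S) < 1e-9
    print(f"{ICI_TBIT_PER_S} Tb/s = {converted} TB/s; matches 1.2 TB/s: {same}")
```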

Scalability and Performance Benchmarks

Ironwood’s true prowess emerges at pod scale, where up to 9,216 chips interconnect into a single supercomputer delivering 42.5 ExaFLOPs of peak FP8 compute. Google describes this as more than 24 times the compute of the El Capitan supercomputer. However, El Capitan’s figure is an FP64 benchmark result, so the two numbers use different precisions. The pod also far outpaces the 0.36 ExaFLOPs of Nvidia’s GB300 NVL72 system in comparable FP8 metrics, though that comparison sets a 9,216-chip pod against a 72-GPU rack. This scalability rests on optical circuit switching (OCS), which forms a reconfigurable fabric. The fabric can reroute traffic around failed components within milliseconds, so workloads resume from checkpoints rather than stalling the whole pod. Configurations range from 256 chips for smaller inference setups to full-scale clusters spanning tens of pods.
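Normalizing by accelerator count makes the pod-versus-rack comparison easier to read. The sketch below takes the article’s figures at face value: 42.5 ExaFLOPs over 9,216 chips, and 0.36 ExaFLOPs over the 72 GPUs in a GB300 NVL72. It treats those figures as peak press numbers rather than measured throughput, and the dictionary layout is purely illustrative.

```python
# Peak FP8 figures as quoted in the article; not independent measurements.
IRONWOOD_POD = {"name": "Ironwood pod", "chips": 9_216, "fp8_exaflops": 42.5}
GB300_NVL72 = {"name": "GB300 NVL72", "chips": 72, "fp8_exaflops": 0.36}


def per_accelerator_pflops(system: dict) -> float:
    """Peak FP8 PFLOPs per accelerator (1 EFLOP = 1,000 PFLOPs)."""
    return system["fp8_exaflops"] * 1_000 / system["chips"]


if __name__ == "__main__":
    for s in (IRONWOOD_POD, GB300_NVL72):
        print(f"{s['name']}: {s['fp8_exaflops']} EF total, "
              f"{per_accelerator_pflops(s):.2f} PF per accelerator")
    system_ratio = IRONWOOD_POD["fp8_exaflops"] / GB300_NVL72["fp8_exaflops"]
    chip_ratio = IRONWOOD_POD["chips"] / GB300_NVL72["chips"]
    print(f"system ratio {system_ratio:.0f}x vs chip-count ratio {chip_ratio:.0f}x")
```

With these inputs, the GB300 figure works out to 5 PF per GPU versus about 4.6 PF per Ironwood chip. Most of the pod’s roughly 118x advantage therefore comes from scale-out (128 times as many accelerators) rather than per-chip throughput.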

In practical terms, a full Ironwood pod draws around 10 MW of power and provides 1.77 PB of shared high-bandwidth memory, roughly the size of 40,000 high-definition movies. That capacity eases data bottlenecks both when training the world’s largest models and when serving high-volume, low-latency applications such as AI agents and chatbots.
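Dividing the pod-level figures gives a rough sense of scale. This sketch assumes the ~10 MW figure covers the whole pod, so the per-chip value is an all-in average rather than a chip TDP. It also uses decimal units. The movie size it implies is only as precise as the article’s “about 40,000” estimate.

```python
# Pod-level figures from the article (approximate).
POD_POWER_W = 10e6        # ~10 MW for a full pod (assumed to be all-in)
POD_CHIPS = 9_216
POD_HBM_BYTES = 1.77e15   # 1.77 PB, decimal
POD_FP8_FLOPS = 42.5e18   # 42.5 ExaFLOPs peak FP8
MOVIES = 40_000           # "about 40,000 high-definition movies"


def pod_ratios() -> dict:
    """Derived per-unit figures; all are rough averages, not specifications."""
    return {
        "watts_per_chip": POD_POWER_W / POD_CHIPS,
        "peak_tflops_per_watt": POD_FP8_FLOPS / POD_POWER_W / 1e12,
        "gb_per_movie": POD_HBM_BYTES / MOVIES / 1e9,
    }


if __name__ == "__main__":
    for key, value in pod_ratios().items():
        print(f"{key}: {value:,.2f}")
```

The results are about 1.09 kW per chip on average, about 4.25 peak FP8 TFLOPs per watt, and about 44 GB per “movie”. That last figure is closer to a Blu-ray disc than to a streamed file.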

Compared to earlier generations, Ironwood delivers more than 10 times the peak performance of TPU v5p and more than four times the per-chip performance of Trillium. Google positions it for the “age of inference,” handling reasoning models, LLMs, and MoE architectures at scales previously unattainable without custom hyperscaler infrastructure. The pods integrate with Google Cloud’s Pathways software stack, which distributes models across thousands of accelerators and treats the entire system as a single machine, easing the transition from training to production serving.

Strategic Competition with Nvidia

Google’s Ironwood launch intensifies the AI hardware arms race. It directly targets Nvidia’s dominance with custom silicon that prioritizes cost savings, power efficiency, and tight ecosystem integration over the broader applicability of Nvidia’s CUDA-optimized GPUs, such as the H100 and the Blackwell-generation B200.

While Nvidia’s hardware excels in on-premises versatility and third-party cloud support, Ironwood counters with superior performance per watt for AI-specific tasks. This could reduce operational costs by up to 60% when paired with Google Cloud’s full-stack services. Google also cites an IDC study of AI Hypercomputer customers reporting a 353% three-year ROI, 28% lower IT spending, and 55% more efficient IT teams. The chip’s design philosophy, focused on massive parallelism and inference optimization, targets Nvidia’s vulnerabilities such as GPU shortages and high energy demands. It gives Google an in-house alternative for faster, cheaper AI scaling, while also tying customers more closely to Google’s ecosystem.
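The article does not spell out how IDC computes ROI. Assuming the conventional definition, ROI = (benefit − cost) / cost, a 353% three-year ROI means total benefits of about 4.53 times the spend. The sketch below shows that arithmetic. The dollar amount is hypothetical, and IDC’s actual methodology may differ.

```python
def roi_percent(benefit: float, cost: float) -> float:
    """Conventional ROI: net gain relative to cost, in percent."""
    return (benefit - cost) / cost * 100


def benefit_for_roi(cost: float, roi_pct: float) -> float:
    """Total benefit needed to reach `roi_pct` percent ROI on `cost`."""
    return cost * (1 + roi_pct / 100)


if __name__ == "__main__":
    cost = 1_000_000.0  # hypothetical three-year spend, in dollars
    benefit = benefit_for_roi(cost, 353)
    print(f"benefit ${benefit:,.0f} -> ROI {roi_percent(benefit, cost):.0f}%")
```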

This move mirrors trends among competitors such as Amazon (with Trainium) and Microsoft (with Maia). Google’s decade of TPU iteration, however, gives Ironwood an edge in maturity, and early commitments from firms like Anthropic and Lightricks validate its appeal for multimodal and reasoning AI development. As AI inference workloads proliferate (by some projections, they will consume 80% of future compute resources), Ironwood positions Google to capture a larger slice of the multibillion-dollar cloud AI market. That pressure pushes Nvidia to accelerate innovation in efficiency and supply-chain resilience.

Broader Ecosystem and Adoption Impacts

Complementing Ironwood, Google expanded Axion, its first custom Arm-based CPU for cloud servers, which was originally announced in 2024. Built on the Arm Neoverse V2 platform, Axion offers up to 50% better performance and 60% higher energy efficiency than comparable x86 processors. It features 2 MB of private L2 cache per core, 80 MB of L3 cache, DDR5-5600 support, and Uniform Memory Access for both AI and general-purpose tasks. Axion-powered instances such as C4A are generally available, offering up to 72 vCPUs, 576 GB of DDR5, 100 Gbps networking, and 6 TB of local SSD. They support Ironwood pods in hybrid workloads and further differentiate Google Cloud from AWS and Azure on price and speed.

Adoption is accelerating. Anthropic’s multibillion-dollar agreement for up to one million TPUs aims to expand Claude’s capabilities for Fortune 500 clients and startups. Meanwhile, Lightricks is deploying Ironwood for its LTX-2 multimodal system, highlighting real-world gains in training efficiency and serving latency.

This infrastructure push not only bolsters Google’s revenue amid AI-driven cloud growth but also broadens access to exascale computing. By making high-fidelity inference economically viable for smaller players, it could foster innovation in fields such as robotics, healthcare AI, and climate modeling. Overall, Ironwood’s rollout signals a maturing era in which custom ASICs challenge general-purpose GPUs, potentially lowering barriers to advanced AI while intensifying competition that benefits global technological progress.