The interview room has changed. It used to be just you, the candidate, and perhaps a nervous glass of water. Today, there is a third presence in the room: Artificial Intelligence. Whether you are hiring a marketer, a software developer, or an administrative assistant, the reality of hiring in the age of AI is unavoidable.

Candidates have powerful “copilots” like ChatGPT, Claude, and Perplexity at their fingertips. They can generate cover letters in seconds, polish their resumes to perfection, and, if you aren’t careful, script their interview answers in real time.

This shift has terrified many hiring managers. The fear is valid: How do I know if this person actually wrote this code? Is this writing sample theirs or an algorithm’s?

But there is a flip side. We shouldn’t just be trying to catch cheaters. We should be trying to find pilots. The best candidates today aren’t the ones who ignore AI; they are the ones who use it to become dramatically more productive.

This guide is your new hiring playbook. We are moving away from the “gotcha” questions of the past and toward a hybrid approach. Below, you will find 10 strategic interview questions for hiring in the age of AI, divided into two categories:

- AI-Proof Questions: designed to verify human authenticity and emotional intelligence.

- AI-Literacy Questions: designed to test how well a candidate can wield modern tools.

Let’s redefine how we hire.

Why Traditional Interviewing Is Broken

Before we dive into the questions, we need to understand why your old interview script is failing.

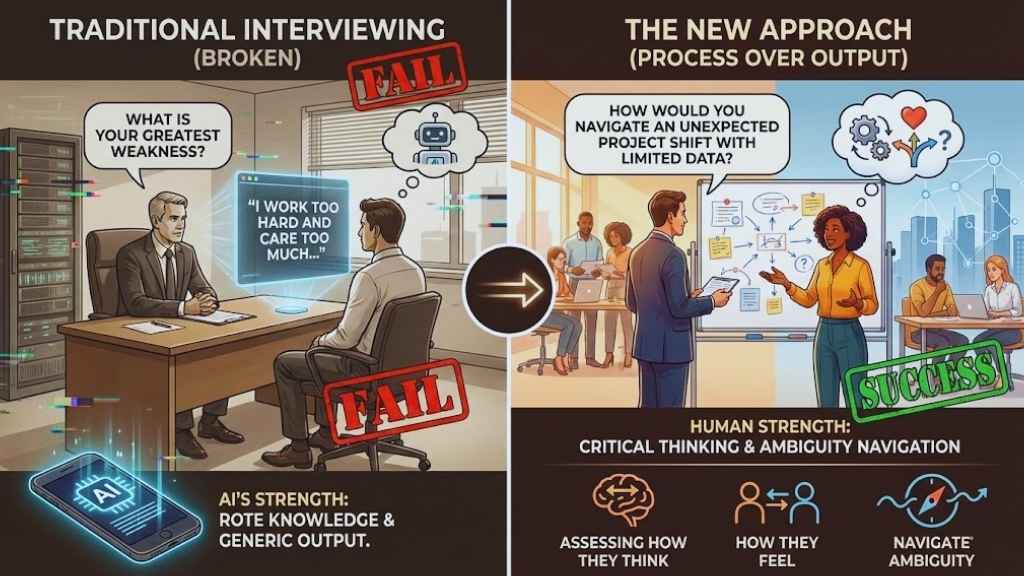

For decades, we relied on information retrieval. We asked candidates to define terms, explain standard processes, or solve generic logic puzzles.

- “What is your greatest weakness?”

- “Explain the difference between Java and JavaScript.”

- “Write a marketing email for a summer sale.”

In 2022, these were fair questions. Today, they are nearly useless. A candidate can plug these prompts into an LLM (Large Language Model) and get a “perfect” answer in seconds. If you are conducting a remote interview, the candidate might even be reading AI-generated answers off the side of their screen in real time.

When we ask questions that rely on rote knowledge or generic scenarios, we are playing to the AI’s strengths, not the human’s. To find the best talent, we must pivot to process over output. We need to assess how they think, how they feel, and how they navigate ambiguity—areas where AI still hallucinates or sounds uncomfortably robotic.

The “AI-Proof” Questions (Verifying Authenticity)

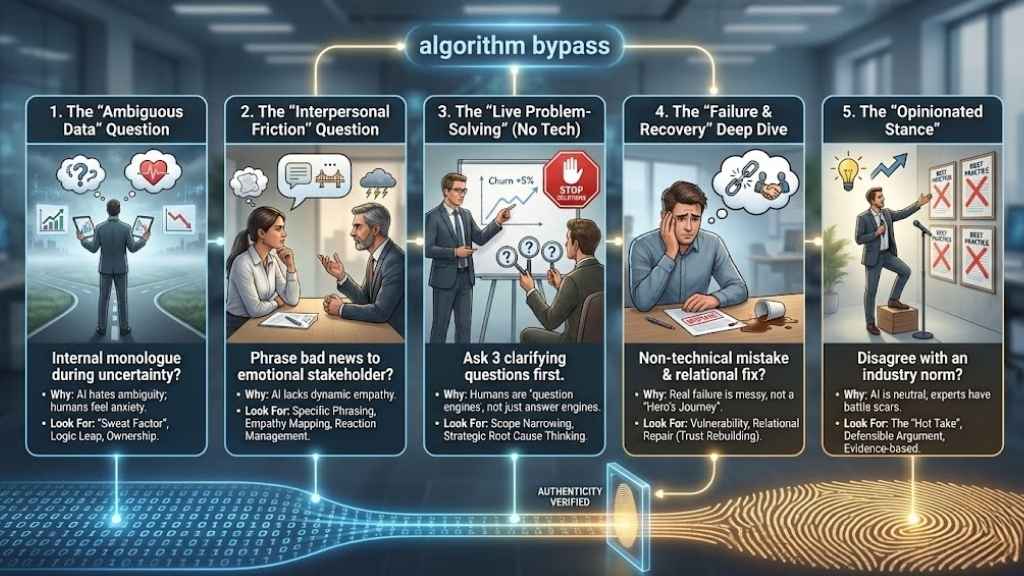

These first five questions are designed to bypass the algorithm. They rely on deep personal experience, specific emotional nuance, and “messy” human reality. An AI can fake a story, but it rarely captures the friction and anxiety of a real high-stakes moment.

1. The “Ambiguous Data” Question

The Question

“Describe a time you had to make a high-stakes decision with incomplete or conflicting data. What was your internal monologue during that uncertainty?”

Why This Works

AI models are designed to be helpful and definitive. They love patterns and logic. If you ask ChatGPT how to solve a problem, it typically gives you a clean, bulleted list of steps.

However, human leadership is rarely clean. It involves anxiety, gut feelings, and the fear of being wrong. This question forces the candidate to recall the feeling of uncertainty, not just the solution.

What to Look For

- The “Sweat” Factor: Does the candidate admit to being nervous or unsure? Authentic candidates will talk about the risk.

- The Logic Leap: Look for phrases like, “The data said X, but my experience with the client suggested Y, so I took a gamble on…”

- Ownership: They should own the decision, including the potential downsides they considered.

Red Flag Answer

“I analyzed the data, realized there was a gap, conducted a quick survey to fill the gap, and then made the correct decision.” This is too linear. It sounds like a textbook case study, not real life.

2. The “Interpersonal Friction” Question

The Question

“Tell me about a time you had to deliver bad news to a stakeholder who you knew would react emotionally. Walk me through exactly how you phrased it and why you chose those words.”

Why This Works

Assessing soft skills in the AI era is critical. While an AI can write a polite script for firing someone or delaying a project, it cannot replicate the dynamic tension of the room. It doesn’t understand why you might soften your tone for one client but be firm with another.

What to Look For

- Specific Phrasing: You want to hear the actual dialogue. “I started by saying, ‘John, I know how much you care about this feature, but…’”

- Empathy Mapping: The candidate should explain why they chose their approach based on the other person’s personality type.

- Reaction Management: How did they handle the pushback?

Red Flag Answer

“I sent them a detailed email explaining the situation clearly so there was no confusion.” This avoids the “friction” part of the question entirely.

3. The “Live Problem-Solving” (No Tech)

The Question

“I’m going to give you a vague problem: ‘Our customer churn rate increased by 5% last month.’ Before you offer any solutions, I want you to ask me 3 clarifying questions to narrow the scope.”

Why This Works

This is one of the most ChatGPT-proof interview questions you can ask. AI models are “answer engines.” When you prompt them, they tend to jump straight to solving the problem. Humans, especially senior ones, are “question engines.”

Great candidates know that you cannot solve a problem until you define it. By forcing them to ask questions first, you are testing their diagnostic skills.

What to Look For

- Scope Narrowing: “Is the churn happening in a specific region?” or “Did we change pricing recently?”

- Strategic Thinking: Questions that dig into the root cause rather than surface-level symptoms.

Red Flag Answer

The candidate immediately starts listing solutions: “We should improve onboarding and offer discounts.” This shows a lack of critical thinking and a reliance on generic “best practices.”

4. The “Failure & Recovery” Deep Dive

The Question

“Tell me about a specific mistake you made that wasn’t technical—perhaps a communication error or a bad judgment call. How did you fix the relationship, not just the output?”

Why This Works

AI-generated stories about failure often follow a “Hero’s Journey” arc: I had a problem, I worked hard, and it became a triumph. They sound sterile and overly polished.

Real human failure is often embarrassing. It involves misreading a room, forgetting a deadline, or accidental rudeness. This question tests for humility and the ability to repair trust—something an algorithm cannot do for you.

What to Look For

- Vulnerability: A genuine admission of a “cringe” moment.

- Relational Repair: Concrete steps they took to apologize or rebuild trust with a colleague.

Red Flag Answer

The “Humble Brag”: “My mistake was that I worked too hard and burned out,” or “I cared too much about the project’s perfection.”

5. The “Opinionated Stance”

The Question

“What is a ‘best practice’ in our industry that you completely disagree with, and why?”

Why This Works

Large Language Models are trained to be agreeable and neutral. They reflect the “consensus” of the internet. They rarely take a sharp, controversial stance unless explicitly prompted to play devil’s advocate.

A human expert, however, has battle scars. They have seen “best practices” fail. They have strong opinions. This question tests for critical thinking and the confidence to go against the grain.

What to Look For

- The “Hot Take”: A clear, defensible argument against a common norm. (e.g., “I think daily stand-up meetings are a waste of time for creative teams because…”)

- Evidence: Backing up their opinion with experience, not just preference.

The “AI-Literacy” Questions (Testing Modern Skills)

Now we pivot. You have verified they are human; now you need to verify they are a modern human.

If a candidate tells you they “don’t use AI,” that can be a warning sign. It may suggest a resistance to innovation. You want employees who use AI as a force multiplier. These questions assess their AI literacy.

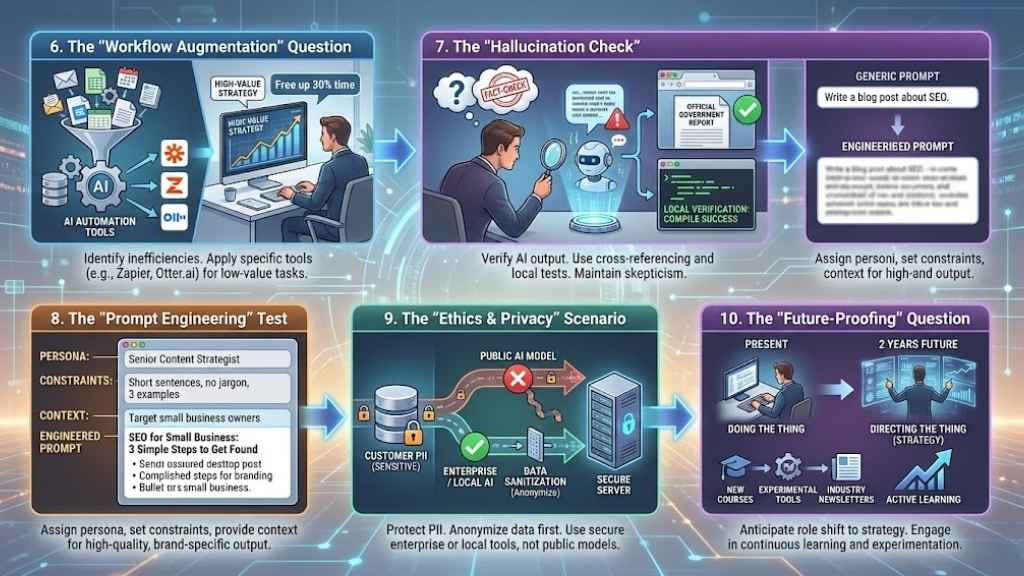

6. The “Workflow Augmentation” Question

The Question

“If you had to automate 30% of your daily repetitive tasks using AI tools today, which tasks would you pick, which tools would you use, and why?”

Why This Works

This moves beyond “Have you used ChatGPT?” to “How do you operationalize it?” It tests their ability to identify inefficiencies in their own workflow and apply the right tech stack to fix them.

What to Look For

- Tool Specificity: Mentions of specific tools (e.g., “I’d use Zapier to connect leads to the CRM,” or “I’d use Otter.ai for meeting transcripts”).

- Value Assessment: They focus on automating low-value tasks to free up time for high-value strategy.

7. The “Hallucination Check”

The Question

“Tell me about a time an AI tool gave you incorrect, biased, or low-quality information. How did you spot the error, and how did you verify the truth?”

Why This Works

Blind faith in AI is dangerous. You need candidates who possess a healthy skepticism. This question reveals whether they fact-check their “copilot.”

What to Look For

- Verification Methods: “I cross-referenced the statistic with the official government report,” or “I ran the code locally to see if it actually compiled.”

- Understanding of Limitations: An awareness that AI can make up citations or hallucinate legal precedents.

8. The “Prompt Engineering” Test

The Question

“I’m going to give you a generic prompt: ‘Write a blog post about SEO.’ How would you rewrite this prompt to get a high-quality, brand-specific output from an LLM?”

Why This Works

Prompt engineering is the new communication skill. A generic prompt gets a generic result. A skilled user knows how to assign a persona, set constraints, define the format, and provide context.

What to Look For

- Persona Assignment: “Act as a Senior Content Strategist…”

- Constraints: “Use short sentences, avoid jargon, and include 3 examples.”

- Context: “Target audience is small business owners…”

9. The “Ethics & Privacy” Scenario

The Question

“Imagine you need to analyze a large dataset containing customer PII (Personally Identifiable Information) to find trends. How would you use AI tools for this without violating privacy policies?”

Why This Works

This is a critical compliance test. Many employees inadvertently leak company secrets by pasting sensitive data into public AI models. You need to know if they understand the boundary between efficiency and security.

What to Look For

- Data Sanitization: “I would anonymize the data first by removing names and emails.”

- Tool Selection: “I would use an enterprise instance of the tool where data isn’t used for training,” or “I would use a local open-source model.”

10. The “Future-Proofing” Question

The Question

“How do you see AI changing this specific job role over the next 2 years? How are you preparing yourself for that shift?”

Why This Works

This tests their strategic foresight. Are they passive observers, or are they active learners?

What to Look For

- Shift to Strategy: A recognition that “doing the thing” is becoming less important than “directing the thing.”

- Continuous Learning: Mentions of new courses, tools they are experimenting with, or newsletters they follow.

Red Flags: How to Spot “Copy-Paste” Candidates

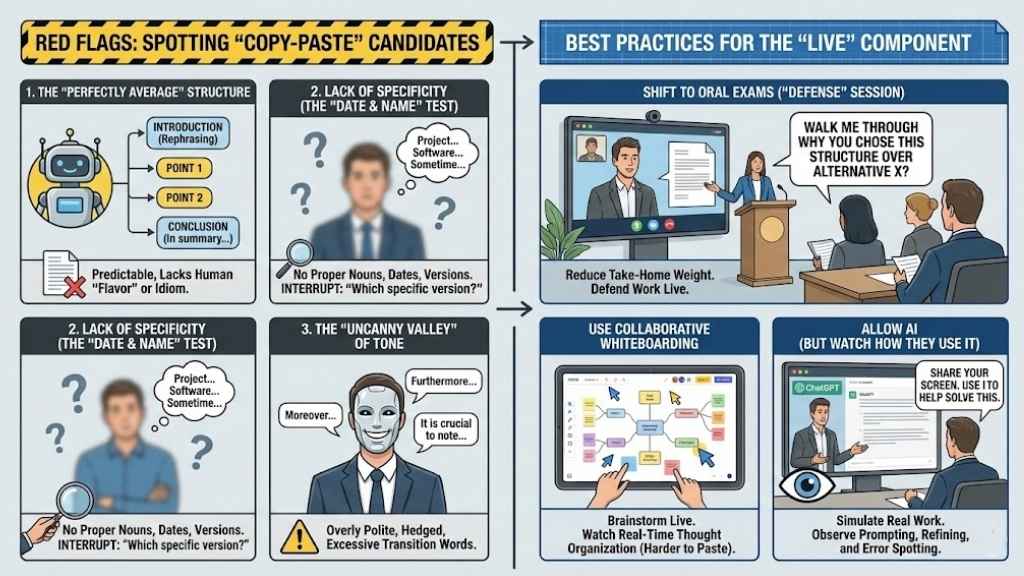

Even with these questions, you need to be observant. If you are conducting a remote interview or reviewing take-home assessments, look for these signs that the candidate is over-relying on AI without adding human value.

1. The “Perfectly Average” Structure

AI tends to structure answers in a very predictable way:

- Introduction rephrasing the question.

- Point 1.

- Point 2.

- Point 3.

- Conclusion starting with “In summary” or “Ultimately.”

If every answer sounds like a well-structured high school essay but lacks “flavor” or idiom, dig deeper.

2. Lack of Specificity (The “Date & Name” Test)

AI-generated stories tend to stay vague about proper nouns and concrete details. If a candidate tells a story about a project but never mentions the specific software version, the client’s industry, or the specific month it happened, they might be reciting an invented scenario.

- Pro Tip: Interrupt politely. “Sorry, just curious—what specific software version was that?” A human remembers or says “I forget.” A script-reader stumbles.

3. The “Uncanny Valley” of Tone

AI text is often overly polite and hedged. It uses transition words like “Furthermore,” “Moreover,” and “It is crucial to note” far more often than people speak in casual conversation.

Best Practices for the “Live” Component

To truly “AI-proof” your hiring, you must change the format, not just the questions.

Shift to Oral Exams

Reduce the weight of take-home tests. A take-home coding challenge or writing sample is now essentially an AI literacy test. Instead, invite the candidate to a “Defense” session.

- “I see you wrote this code/article. Walk me through why you chose this specific structure over [Alternative X].”

Use Collaborative Whiteboarding

Tools like Miro, FigJam, or even a shared Google Doc work well here. Ask the candidate to brainstorm live. You can watch their cursor and see how they organize their thoughts in real time. It is much harder to copy-paste into a mind map than into a text box.

Allow AI (But Watch How They Use It)

Consider a “Whiteboarding with AI” session. Tell the candidate: “For the next 15 minutes, you can use ChatGPT or Google to help you solve this problem. Share your screen.”

Watch how they prompt. Do they accept the first answer? Do they refine it? Do they spot errors? This is a simulation of real work in 2024.

Final Thoughts

The goal of the “Age of AI” interview isn’t to start a war with technology. It is to identify the humans who can lead it. We are moving away from hiring “knowledge workers” and toward hiring “wisdom workers.” We need people who have the judgment to know when the AI is right, the experience to know when it’s wrong, and the emotional intelligence to manage the people it impacts. Use the 10 questions above to filter out the robots and find the architects.