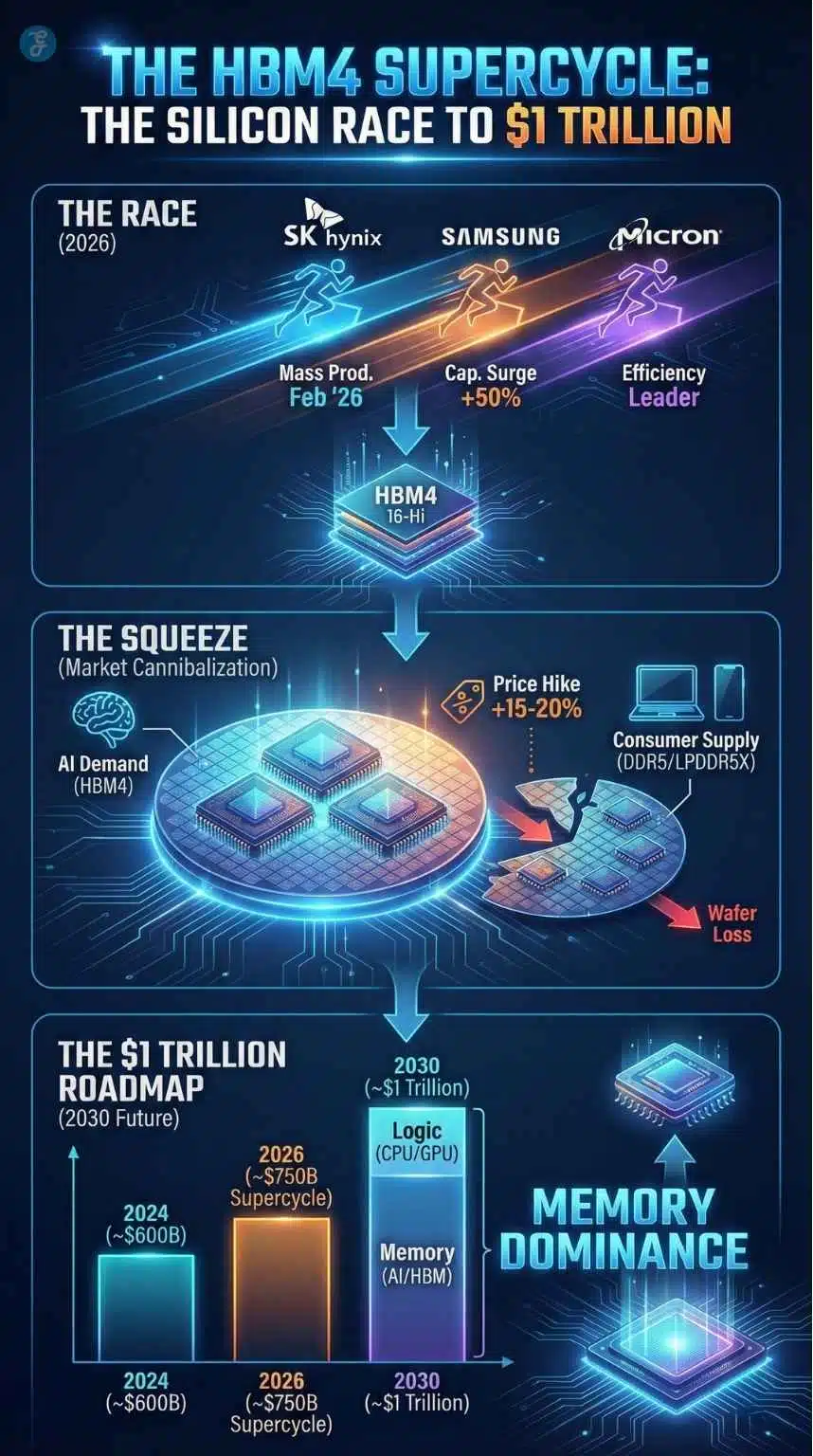

We have officially entered the era of the “AI Memory Supercycle.” For decades, the semiconductor industry followed a predictable, almost rhythmic boom-and-bust cycle. That pattern has broken. The industry is no longer just growing; it is undergoing a structural pivot. The insatiable demand for AI-grade memory, specifically the newly standardized HBM4 (High Bandwidth Memory, 4th generation), has created a market distortion so severe that analysts are calling it “The Great Silicon Squeeze.”

While the headlines celebrate the race toward a $1 trillion semiconductor market (a milestone now forecast for 2030), the reality on the factory floor is far more chaotic. Mass production of 16-Hi HBM4 chips is set to begin next month, in February 2026. To make this happen, manufacturers are cannibalizing their own production lines, sacrificing the supply of standard chips used in laptops and smartphones to feed the AI beast.

The result? A two-speed economy where AI infrastructure thrives, and the consumer electronics market faces its steepest price hikes in a decade.

Key Takeaways: The HBM4 Supercycle

- The Trigger: The era of the “AI Memory Supercycle” has begun. Mass production of HBM4 (16-Hi) starts in February 2026 to power NVIDIA’s next-gen “Rubin” platform.
- The “Squeeze”: We are facing “Wafer Cannibalization.” Producing one AI memory chip consumes the silicon capacity of 3–4 standard PC chips.
- The Cost: Consumers will pay the price. Expect a 15–20% price hike on high-end laptops and smartphones by Q3 2026 as standard memory supplies tighten.
- The Leader: SK Hynix remains the market “Anchor” with its exclusive TSMC partnership, while Samsung races to catch up via turnkey solutions.
- The Big Picture: The semiconductor market is accelerating toward $1 trillion in annual sales, driven for the first time by memory revenue rather than logic processors.

The Hardware Race: What Makes HBM4 Different?

To understand why this shortage is happening, you have to understand the engineering marvel that is HBM4. This isn’t just a “faster” version of the memory in your PC; it is a fundamental architectural shift.

In 2025, JEDEC (the Solid State Technology Association that sets memory standards) finalized the HBM4 standard that now governs this race. The leap from the previous generation (HBM3E) to HBM4 is massive:

- The 2048-Bit Interface: Imagine a highway. HBM3E had 1,024 lanes for data to travel; HBM4 doubles that to 2,048. This allows massive amounts of data to flow into the GPU without pushing each lane to ever-higher, heat-generating speeds.
- 16-Hi Stacking: Manufacturers are now vertically stacking 16 DRAM dies on top of each other. Taller stacks are central to the capacity targets of NVIDIA’s upcoming “Rubin” (R100) platform, which reportedly carries up to 288GB of memory per GPU.
- The “Smart” Base Die: This is the game-changer. Previously, the bottom layer of a memory stack was a simple interface die built on a memory process. In HBM4, that base layer is a logic die built on foundry logic nodes (like TSMC’s 12nm or 5nm), so it can host custom logic. Effectively, the memory stack is starting to take on work that once belonged to the processor.
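The highway analogy reduces to two multiplications: peak bandwidth per stack is interface width times per-pin data rate, and capacity per stack is die count times die density. The sketch below assumes JEDEC’s headline HBM4 figures (up to 8 Gb/s per pin, 32 Gb dies) and an illustrative 9.6 Gb/s HBM3E part built from 24 Gb dies; the `HbmStack` class is a hypothetical helper, not a vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HbmStack:
    """Hypothetical helper; all numbers passed in are assumptions, not vendor specs."""
    name: str
    interface_bits: int   # data lanes per stack (1024 for HBM3E, 2048 for HBM4)
    pin_rate_gbps: float  # per-lane data rate in Gb/s (assumed)
    dies: int             # DRAM dies stacked vertically
    die_gbit: int         # capacity of each die in Gb (assumed)

    def bandwidth_gb_per_s(self) -> float:
        # lanes x Gb/s per lane = Gb/s; divide by 8 to get GB/s
        return self.interface_bits * self.pin_rate_gbps / 8

    def capacity_gb(self) -> float:
        # dies x Gb per die = Gb; divide by 8 to get GB
        return self.dies * self.die_gbit / 8


hbm3e = HbmStack("HBM3E 12-Hi", interface_bits=1024, pin_rate_gbps=9.6, dies=12, die_gbit=24)
hbm4 = HbmStack("HBM4 16-Hi", interface_bits=2048, pin_rate_gbps=8.0, dies=16, die_gbit=32)

for stack in (hbm3e, hbm4):
    print(f"{stack.name}: {stack.bandwidth_gb_per_s():.0f} GB/s, {stack.capacity_gb():.0f} GB")
```

Under these assumptions the HBM4 stack delivers about 2 TB/s against about 1.2 TB/s for HBM3E even though each lane runs slower, which is exactly why the bus was widened rather than clocked higher.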

This complexity is the root of the problem. Building HBM4 is incredibly difficult, and the tallest stacks are pushing manufacturers toward a technique called hybrid bonding (connecting dies directly copper-to-copper, without solder micro-bumps), which is still hard to produce at high yields.
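Why does stacking hurt yields so badly? A stack is only sellable if every layer and every bond in it works, so per-layer yields multiply. The sketch below assumes a hypothetical 99% success rate per layer and treats each layer as failing independently; real fabs test dies before stacking and see correlated defects, so treat it only as an illustration of the compounding effect.

```python
def stack_yield(per_layer_yield: float, layers: int) -> float:
    """Probability that all `layers` in a stack are good.

    Simplifying assumption: every layer (die plus its bond) succeeds
    independently with the same probability.
    """
    if not 0.0 <= per_layer_yield <= 1.0:
        raise ValueError("per_layer_yield must be between 0 and 1")
    if layers < 1:
        raise ValueError("a stack needs at least one layer")
    return per_layer_yield ** layers


for layers in (8, 12, 16):
    print(f"{layers}-Hi: {stack_yield(0.99, layers):.1%} of stacks fully working")
```

With these hypothetical inputs, the same 99% per-layer rate leaves roughly 92% of 8-Hi stacks intact but only about 85% of 16-Hi stacks, and every failed stack scraps all the good dies bonded into it.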

The Technical Leap (HBM3E vs. HBM4)


| Feature | HBM3E (Current Standard) | HBM4 (The New Standard) | The Impact |
|---|---|---|---|
| Interface Width | 1024-bit | 2048-bit | Doubles the “lanes” for data, reducing traffic jams. |
| Stack Height | 8-Hi / 12-Hi | Up to 16-Hi | Four more dies than a 12-Hi stack (+33%), before counting denser dies. |
| Base Die | Standard interface die | Logic process (5nm/12nm) | Lets the base of the stack run custom logic next to the memory. |
| Bonding Tech | Micro-bumps | Hybrid Bonding (tallest stacks) | Replaces bumps with direct copper-to-copper connections (harder to make). |
| Target GPU | NVIDIA H200 / Blackwell (B200) | NVIDIA Rubin (R100) | Essential for next-gen AI training clusters. |

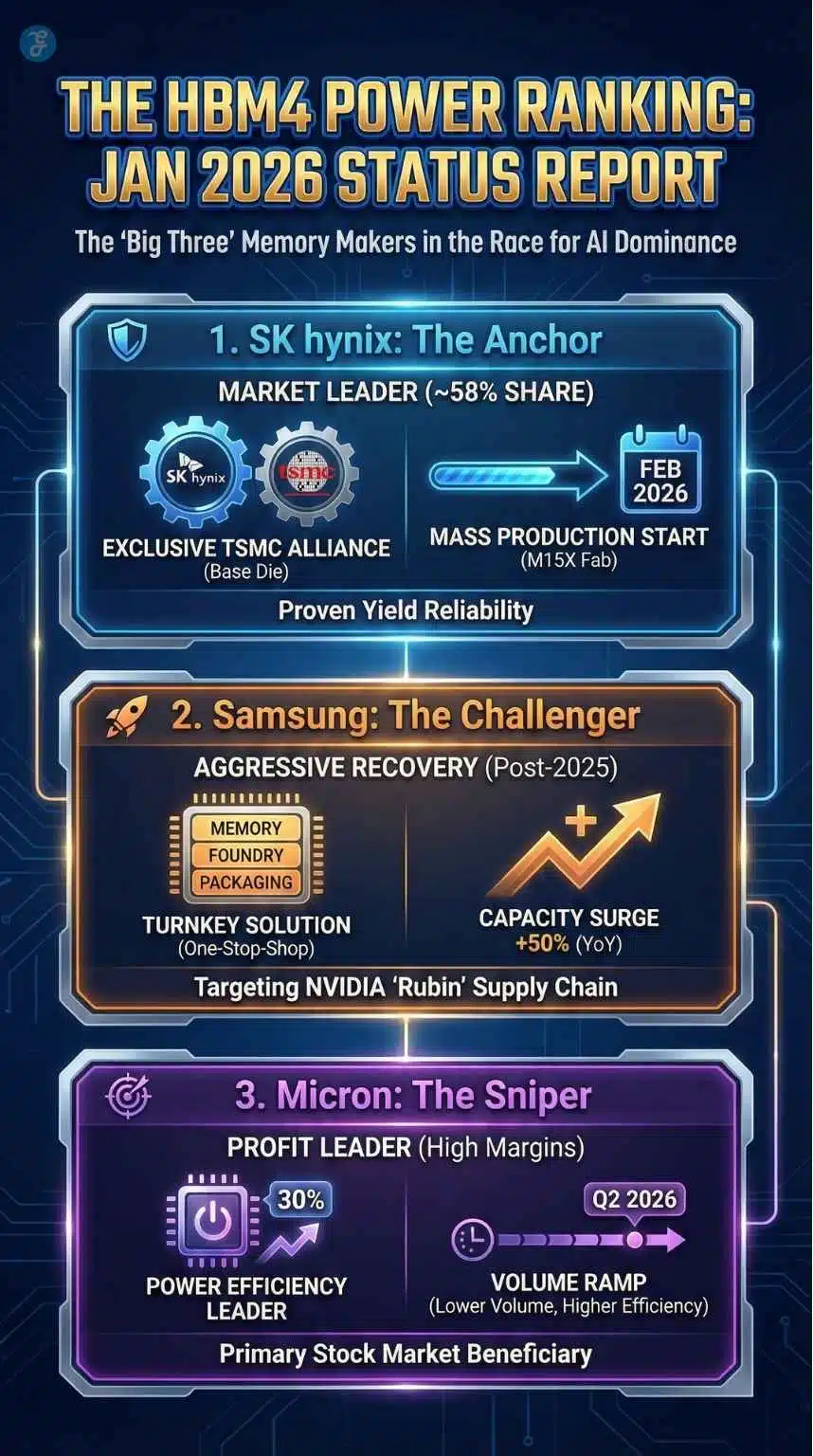

The “Big Three” Status Report: January 2026

The memory market has effectively consolidated into a high-stakes poker game between three players: SK Hynix, Samsung, and Micron. As of this week, here is where they stand in the race to supply NVIDIA and AMD.

| Company | Current Status | Key Strategy (Jan 2026) | Market Position |
|---|---|---|---|
| SK Hynix | The Anchor | Partnership: Utilizing an exclusive alliance with TSMC for the base die. Production: Mass production at the M15X fab starts Feb 2026. | Leader (~58% Share): Currently the only supplier fully trusted for the highest-end NVIDIA configurations. |
| Samsung | The Challenger | Turnkey Solution: Offering a “one-stop-shop” deal in which Samsung makes the memory and the logic die and handles the packaging. Capacity: Aggressively converting standard DRAM lines to HBM, aiming for a 50% capacity surge. | Aggressive Recovery: After yield struggles in 2025, Samsung is back in the game, heavily targeting the “Rubin” supply chain. |
| Micron | The Sniper | Efficiency: Focused on power efficiency (a claimed 30% better than peers). Financials: The primary stock market beneficiary, as its lower volume is offset by massive profit margins. | Profit Leader: Micron doesn’t have the most capacity, but it claims the most efficient chips. |

The Relief Valve: Enter CXL (Compute Express Link)

While the world fixates on the shortage of HBM, a quiet revolution is happening in the server racks to keep the AI industry from stalling. It is called CXL (Compute Express Link), and as of CES 2026 (held just this week in Las Vegas), it has shifted from an experimental standard to a critical survival tool.

With HBM4 supply functionally capped, data center architects are turning to CXL 3.0 to break the “Memory Wall.”

- The Concept: Traditionally, a CPU or GPU could only access the memory sticks physically plugged into its own motherboard. CXL changes the rules, allowing processors to access a shared “pool” of memory across the entire server rack.
- The 2026 Pivot: At CES 2026, SK Hynix and Samsung both showcased CMM-Ax (CXL Memory Module-Accelerators). These devices allow standard, cheaper DDR5 memory to be “pooled” and accessed by AI accelerators.
- Why It Matters: It acts as a pressure release valve. While CXL is not as fast as HBM4, it allows AI models to run on “tiered” memory, keeping the most critical data on the scarce HBM chips and offloading the rest to the abundant CXL pool. Without this technology, the 2026 AI expansion could hit a hard ceiling by Q3.
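The tiering idea can be sketched as a placement policy: rank data by how often it is touched per gigabyte, fill the scarce HBM with the hottest data, and spill the rest to the CXL pool. This is a hypothetical greedy heuristic with made-up buffer sizes and access rates (the `Buffer` and `place` names are illustrative, not part of any CXL API); real systems rely on hardware or OS-level page migration, and greedy filling is not guaranteed to be optimal.

```python
from dataclasses import dataclass


@dataclass
class Buffer:
    name: str
    size_gb: float            # must be > 0
    accesses_per_step: float  # hypothetical "heat" metric


def place(buffers: list[Buffer], hbm_capacity_gb: float) -> dict[str, str]:
    """Greedy two-tier placement: hottest bytes to HBM, everything else to CXL."""
    placement: dict[str, str] = {}
    free_gb = hbm_capacity_gb
    # Access density (touches per GB) decides who earns a slot in fast memory.
    ranked = sorted(buffers, key=lambda b: b.accesses_per_step / b.size_gb, reverse=True)
    for buf in ranked:
        if buf.size_gb <= free_gb:
            placement[buf.name] = "HBM"
            free_gb -= buf.size_gb
        else:
            placement[buf.name] = "CXL"  # slower, but pooled and plentiful
    return placement


model_buffers = [
    Buffer("weights", size_gb=140.0, accesses_per_step=1.0),
    Buffer("kv_cache_hot", size_gb=60.0, accesses_per_step=4.0),
    Buffer("kv_cache_cold", size_gb=200.0, accesses_per_step=0.05),
]
print(place(model_buffers, hbm_capacity_gb=288.0))
```

Here 400 GB of data cannot fit in a hypothetical 288 GB of HBM, so the rarely touched cold cache lands in the CXL pool while the weights and hot cache stay in HBM.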

The “Consumer Tax”: Why Your Next Laptop Will Cost More

This is the part of the story that impacts the average consumer. You might not be buying an HBM4 chip, but you are going to pay for it. The industry is currently suffering from “Wafer Cannibalization.”

Physical silicon wafers, the discs that chips are printed on, are a finite resource. An HBM4 die takes up roughly three times as much wafer area as a standard DDR5 die of the same capacity. Furthermore, because HBM4 is so complex to stack (16 layers high), the “yield” (the share of chips on a wafer that actually work) is much lower.

The Math of Scarcity:

- To produce 1 HBM4 chip, a manufacturer uses the same amount of silicon wafer capacity that could have produced 3 to 4 standard PC memory chips.
- Because profit margins on HBM4 are sky-high (~75%), manufacturers like Samsung and SK Hynix are diverting nearly all their new equipment to HBM.

The downstream effect is a shortage of standard LPDDR5X (smartphone RAM) and DDR5 (PC RAM).
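The 3-to-4 figure can be decomposed into an area penalty and a yield penalty. The sketch below is a back-of-the-envelope model, not industry data: it assumes an HBM die needs three times the wafer area of a standard die of equal capacity, and uses hypothetical yields of 90% for standard DRAM and 75% for HBM4. The `wafer_trade_ratio` name is illustrative.

```python
def wafer_trade_ratio(area_ratio: float, hbm_yield: float, std_yield: float) -> float:
    """Good standard dies forgone for each good HBM die (hypothetical model).

    area_ratio: wafer area of one HBM die / area of one standard die.
    hbm_yield, std_yield: fraction of dies that work, each in (0, 1].
    """
    for y in (hbm_yield, std_yield):
        if not 0.0 < y <= 1.0:
            raise ValueError("yields must be in (0, 1]")
    # Each good HBM die costs area_ratio / hbm_yield standard die sites,
    # and each of those sites would have produced std_yield good standard dies.
    return area_ratio / hbm_yield * std_yield


ratio = wafer_trade_ratio(area_ratio=3.0, hbm_yield=0.75, std_yield=0.90)
hbm_dies = 1_000_000
print(f"Trade ratio: {ratio:.1f}; forgone standard dies: {hbm_dies * ratio:,.0f}")
```

Under these assumptions each good HBM die displaces about 3.6 good standard dies, inside the 3-to-4 range cited above; a lower HBM yield pushes the ratio higher.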

Market Impact for 2026:

- PCs & Laptops: Expect the “standard” RAM configuration to stagnate. Budget laptops will likely remain stuck at 8GB or 12GB, while 16GB/32GB models will see a price premium.
- Smartphones: Flagship phones launching this year (like the iPhone 18 series or Galaxy S26) face a “margin squeeze.” Analysts predict a 15–20% retail price hike to offset the cost of memory.

The “Consumer Tax” Forecast (2025 vs. 2026)

| Item | Avg. Price (Jan 2025) | Forecast Price (Q3 2026) | Change |
|---|---|---|---|
| 16GB DDR5 RAM (PC) | $45 | $75 – $85 | ~67–89% Increase |
| Flagship Smartphone | $1,199 | $1,399 | ~17% Increase |
| Budget Laptop (8GB) | $499 | $549 | ~10% Increase |
| Wafer Availability | 100% (Balanced) | Allocated (Shortage) | Standard chips de-prioritized |
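The “Change” column is plain percentage arithmetic, (forecast - current) / current. A short check using only the prices in the table (the `pct_change` helper is illustrative):

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# (Jan 2025 price, low Q3 2026 forecast, high Q3 2026 forecast), from the table above
forecasts = {
    "16GB DDR5 RAM (PC)": (45, 75, 85),
    "Flagship Smartphone": (1199, 1399, 1399),
    "Budget Laptop (8GB)": (499, 549, 549),
}

for item, (old, low, high) in forecasts.items():
    lo_pct, hi_pct = pct_change(old, low), pct_change(old, high)
    if low == high:
        print(f"{item}: +{lo_pct:.0f}%")
    else:
        print(f"{item}: +{lo_pct:.0f}% to +{hi_pct:.0f}%")
```

The DDR5 forecast works out to roughly a 67% to 89% rise, the smartphone jump to about 17%, and the budget laptop to about 10%.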

The Geopolitical Shadow: Export Controls 2.0

Looming over the supply chain is the renewed complexity of US-China relations. Following the “thaw” negotiations of late 2025, the trade landscape for 2026 has shifted from a blanket ban to a high-stakes obstacle course.

- The “Conditional” Channel: As of January 2026, the US Department of Commerce has opened a narrow, “conditional” pathway for exporting specific high-end AI chips (like the H200) to China. However, this comes with a 25% tariff and rigorous end-user licensing reviews.
- The February Target: Industry sources indicate that the first wave of these licensed shipments is targeted for mid-February 2026. This timeline is critical. If these shipments are delayed or blocked, Chinese tech giants may panic-buy conventional memory (DDR4/DDR5) to build domestic stockpiles, further exacerbating the global consumer memory shortage.
- The Domestic Push: In response, China has accelerated its domestic “legacy” chip production, flooding the low-end market. This creates a bizarre market paradox for 2026: while high-end AI memory is critically scarce and expensive, the market for “dumb” chips used in washing machines and toys might actually see a glut.

The Road to $1 Trillion

Despite the consumer pain, the financial outlook for the semiconductor sector has never been brighter. The industry is hurtling toward $1 trillion in annual sales, a milestone long pegged for around 2030 that may now arrive sooner.

Historically, logic chips (processors from companies like Intel and Qualcomm) were the kings of revenue. In 2026, the script is flipping: memory is becoming the dominant revenue driver. Exports from South Korea, the epicenter of memory production, hit record highs in December 2025, driven largely by this supercycle.

Final Words: Data’s New Barrel

HBM4 is an engineering miracle. It is the fuel that will allow the next generation of AI models to think faster, reason better, and learn more efficiently. But miracles aren’t free.

The “Supercycle” is actually a “Squeeze” for everyone who isn’t buying an AI server. As 2026 unfolds, the divide between the AI-haves (data centers) and the AI-have-nots (consumer electronics) will widen. If data is the new oil, HBM4 is the barrel it comes in, and right now there simply aren’t enough barrels to go around.