Green cloud computing is becoming one of the most important, least visible parts of the sustainability conversation. People often think of emissions as something tied to cars, factories, or power plants. But the digital world is physical too. Every search query, video stream, AI request, payment transaction, and cloud backup touches a network of data centers, cooling systems, servers, storage arrays, and power infrastructure. If that system is inefficient or powered by high-carbon electricity, the “clean” feeling of digital life becomes misleading.

At the same time, cloud computing can reduce emissions when it is done well. Shared infrastructure can be more efficient than millions of underused private servers. Modern data centers can run at high utilization with optimized cooling and energy management. Cloud platforms can also shift workloads to cleaner regions or times when grids are greener.

This is why green cloud computing matters. It is not about feeling good. It is about real operational choices: power, cooling, hardware efficiency, workload design, and carbon accounting. This guide breaks down what a data center’s carbon footprint actually means, how cloud systems create emissions, what changes make the biggest difference, and how businesses and consumers can make smarter cloud decisions in 2026 and beyond.

Why Cloud Sustainability Is Now A Big Deal

Cloud usage is growing across every industry. Businesses are moving apps and storage to the cloud. Consumers store more photos and videos than ever. AI workloads are expanding, and they are compute-intensive. Streaming platforms push high-bandwidth content to billions of devices. All of this increases demand for data center capacity.

Two things make this a sustainability issue.

First, electricity demand rises with digital growth. Even if individual servers become more efficient, total usage can still climb.

Second, AI and high-performance computing can consume far more energy per task than traditional web services. This makes infrastructure efficiency and energy sourcing critical.

Green cloud computing is the response to this new reality. It aims to reduce the carbon footprint of digital services by making infrastructure cleaner and more efficient.

What A Data Center’s Carbon Footprint Actually Includes

A data center’s carbon footprint is not just the power it uses today. It includes multiple emissions categories.

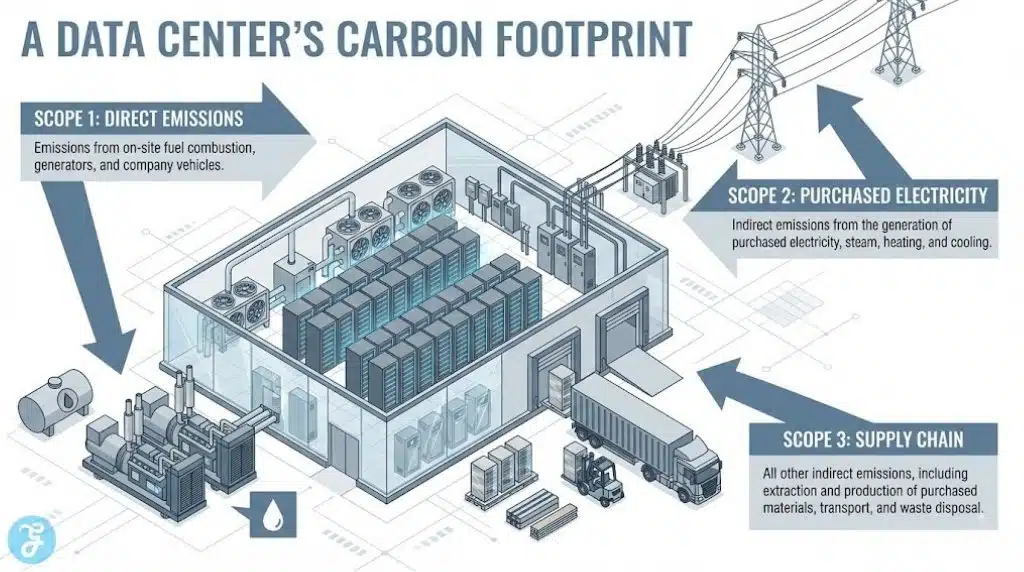

The Three Emissions Buckets

- Scope 1: direct emissions from on-site fuel use, generators, and heating systems

- Scope 2: emissions from purchased electricity powering servers and cooling

- Scope 3: emissions from the supply chain, hardware manufacturing, construction, and upstream energy production

For cloud sustainability, Scope 2 is often the most visible because it ties directly to electricity. But Scope 3 can be huge, especially when you consider server manufacturing, chip production, and data center construction.

Carbon Footprint Components Table

| Footprint Component | What It Includes | Why It Matters |
|---|---|---|
| Electricity use | Servers, cooling, networking | Usually the largest operational driver |
| Backup power | Diesel generator testing and use | Scope 1 emissions and local pollution |
| Water use | Cooling towers and water systems | Sustainability beyond carbon |
| Hardware lifecycle | Manufacturing and transport of equipment | Major Scope 3 contribution |
| Construction | Concrete, steel, building materials | Large upfront carbon cost |

Green cloud computing deals with all of these, but electricity and cooling remain the fastest levers for improvement.

The Hidden Energy Cost Of Digital Life

Digital services feel weightless. But they run on constant compute and storage. Many activities that feel “small” scale into significant loads because billions of people do them daily.

Examples include:

- Video streaming and auto-play content

- Cloud backups that keep multiple copies in multiple regions

- Constant syncing across devices

- Always-on analytics and event tracking

- AI inference requests and personalization

The sustainability question is not whether these services should exist. It is whether they can be delivered with lower energy per unit of value.

Why Cloud Can Be Greener Than On-Prem, But Not Always

One of the strongest arguments for cloud adoption is efficiency. A well-run cloud data center can maintain high utilization, while many private data centers run underutilized servers that still consume power.

Cloud can reduce waste through:

- Higher server utilization

- Modern cooling systems and energy management

- Consolidation of workloads on fewer machines

- Automated scaling that turns resources off when not needed

But cloud is not automatically greener. If cloud usage encourages overprovisioning, excessive data retention, and constant high-bandwidth workloads, emissions can rise.

Green cloud computing is about using cloud efficiency without creating new digital waste.

The Most Important Metric: PUE And Why It Still Matters

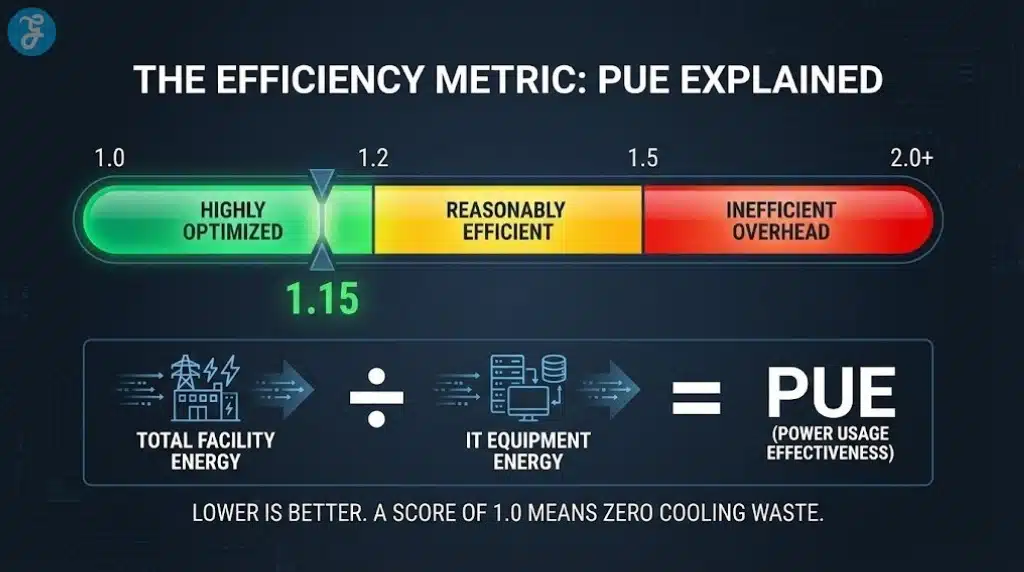

Power Usage Effectiveness, often called PUE, is a common data center efficiency metric. It is the ratio of total facility energy use to IT equipment energy use.

- PUE closer to 1.0 means less overhead for cooling and facility operations

- Higher PUE means more energy is spent on non-compute overhead

PUE is not a complete sustainability metric, but it is useful for operational efficiency. It does not capture grid emissions intensity or hardware lifecycle impact, but it does show whether a facility wastes power on overhead.
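The ratio is simple enough to compute by hand, but a small sketch makes the overhead share explicit. The meter readings below are made-up numbers for illustration, and the function names are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Fraction of facility energy that goes to non-IT overhead such as cooling."""
    if total_facility_kwh <= 0:
        raise ValueError("Total facility energy must be positive")
    return 1 - it_equipment_kwh / total_facility_kwh


# Hypothetical monthly meter readings, for illustration only.
total_kwh = 1_300_000
it_kwh = 1_000_000

print(f"PUE: {pue(total_kwh, it_kwh):.2f}")
print(f"Overhead share: {overhead_share(total_kwh, it_kwh):.1%}")
```

With these illustrative readings the PUE is 1.30, which means roughly 23% of the facility's energy is spent on overhead rather than compute.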

PUE Reality Table

| PUE Range | What It Suggests | Practical Meaning |
|---|---|---|
| 1.0–1.2 | Highly optimized | Low overhead, modern cooling |
| 1.2–1.5 | Reasonably efficient | Some overhead but manageable |
| 1.5+ | Less efficient | Cooling and facility waste likely |

Green cloud computing uses PUE as one piece of a bigger story, not the entire story.

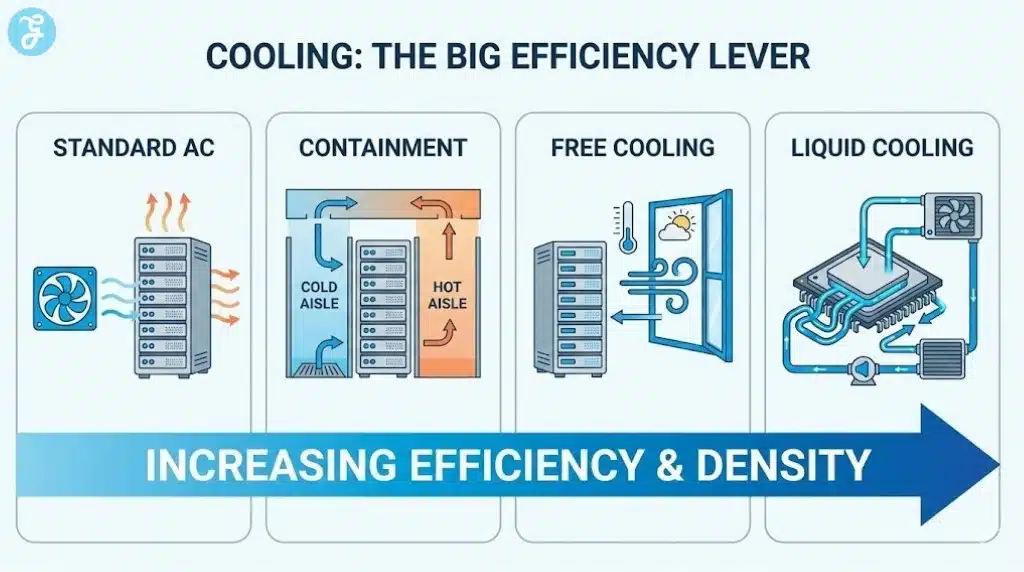

Cooling: The Big Lever That Most People Ignore

Cooling is one of the largest non-IT energy uses in data centers. Servers generate heat, and heat must be removed to keep systems stable.

Modern cooling strategies can cut overhead dramatically:

- Hot aisle and cold aisle containment

- Liquid cooling for high-density workloads

- Free cooling using outside air in suitable climates

- Advanced airflow management and monitoring

- AI-driven cooling optimization systems

Cooling is also where water use becomes a big sustainability issue. Some cooling systems consume significant water, especially evaporative methods. So green cloud computing increasingly includes water-efficient cooling strategies.

Cooling Methods Table

| Cooling Method | Strength | Tradeoff |
|---|---|---|
| Air cooling with containment | Mature and cost-effective | Less efficient at very high density |
| Free cooling | Low energy use in suitable climates | Depends on local weather |
| Evaporative cooling | Efficient in some conditions | Water consumption |
| Liquid cooling | High efficiency for dense compute | Higher infrastructure complexity |
| AI-optimized cooling | Reduces waste dynamically | Requires sensors and control systems |

As compute density increases due to AI, liquid and hybrid cooling will become more common.

Where Most Cloud Emissions Actually Come From

To reduce emissions, you need to know what drives them.

For many cloud workloads, emissions come from:

- Electricity used for compute and storage

- Cooling overhead energy and water impacts

- Idle and overprovisioned resources

- Data transfer and content delivery networks

- Hardware manufacturing and refresh cycles

The fastest savings often come from reducing waste rather than chasing exotic tech.

Green cloud computing works best when both providers and customers reduce unnecessary usage.

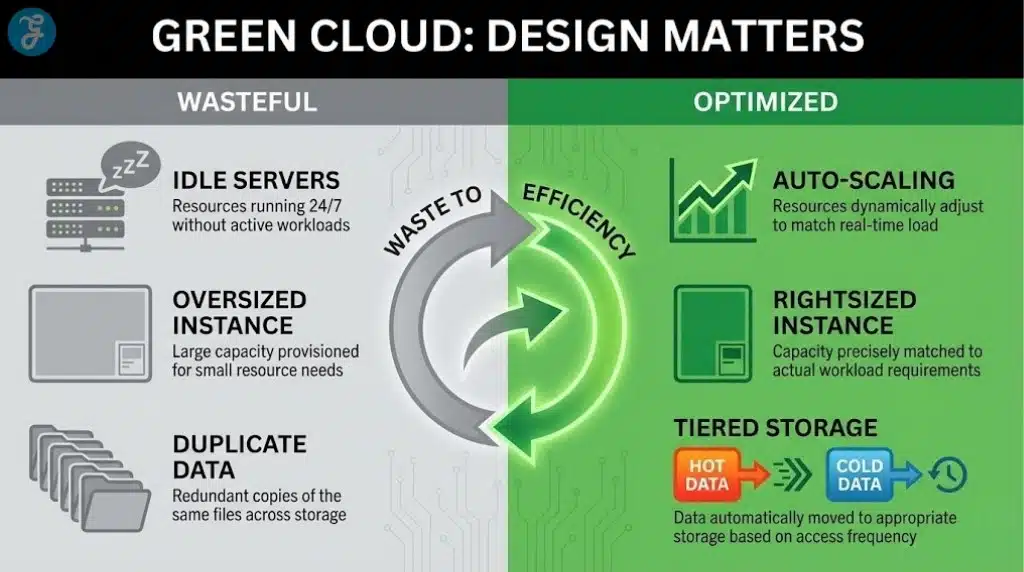

Green Cloud Computing Starts With Workload Design

Many companies talk about cloud sustainability like it is only the provider’s job. Providers matter, but customer behavior matters too. If an application is poorly designed, it will waste resources no matter where it runs.

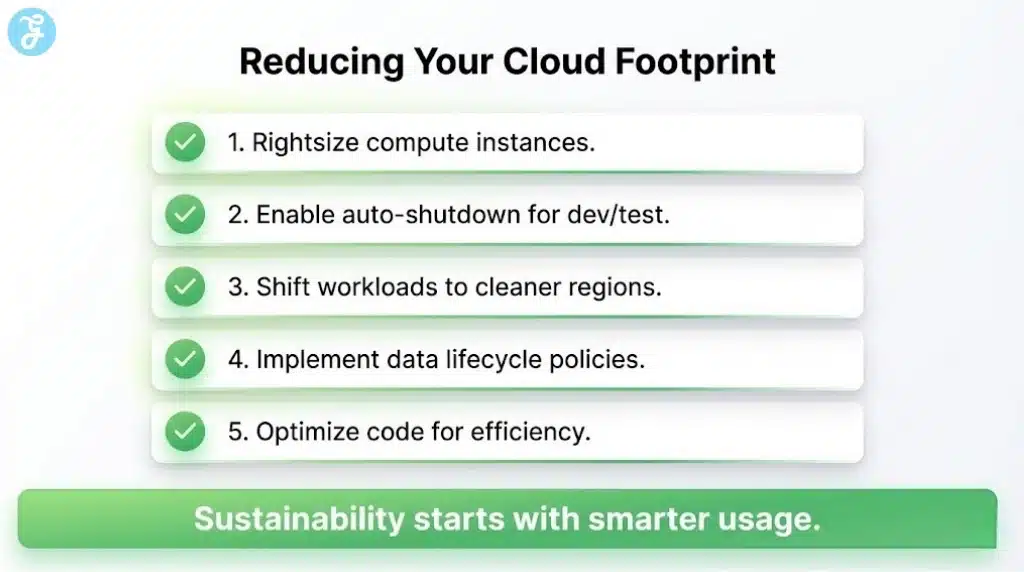

High-impact workload design changes include:

- Rightsizing compute instances to match real demand

- Autoscaling so idle resources shut down

- Using serverless or managed services where they reduce waste

- Reducing data retention and duplicate storage

- Compressing and optimizing media files and images

- Using caching to reduce repeated computation for the same outputs

Workload Waste Table

| Waste Pattern | What It Looks Like | Sustainability Fix |
|---|---|---|
| Overprovisioning | Large instances running at low utilization | Rightsize and monitor |
| Always-on staging | Test environments running 24/7 | Schedules and auto shutdown |
| Excess logs | Keeping high-volume logs forever | Retention policies |
| Duplicate backups | Multiple copies without need | Tiered storage and policies |
| Inefficient code | Heavy compute for simple tasks | Profiling and optimization |

This is where green cloud computing becomes actionable for companies. You can cut emissions without changing your provider by reducing waste in your architecture.
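As a rough illustration of the "rightsize and monitor" fix, the sketch below flags instances whose CPU stays low on average and at peak. The thresholds, the `Instance` type, and the "halve the vCPUs" suggestion are all assumptions made for the example, not a provider's recommendation logic.

```python
from dataclasses import dataclass
from statistics import mean

# Assumed thresholds for illustration; tune them to your own workloads.
LOW_AVERAGE_PCT = 20.0
PEAK_LIMIT_PCT = 50.0


@dataclass
class Instance:
    name: str
    vcpus: int
    cpu_samples: list  # CPU utilization samples, 0-100 percent


def rightsizing_candidates(instances):
    """Return (name, average CPU %, suggested vCPUs) for clearly oversized instances."""
    candidates = []
    for inst in instances:
        if not inst.cpu_samples or inst.vcpus < 2:
            continue
        average = mean(inst.cpu_samples)
        peak = max(inst.cpu_samples)
        # Only suggest halving when even the peak would fit on half the capacity.
        if average < LOW_AVERAGE_PCT and peak < PEAK_LIMIT_PCT:
            candidates.append((inst.name, round(average, 1), inst.vcpus // 2))
    return candidates


# Hypothetical fleet, for illustration only.
fleet = [
    Instance("api-1", 8, [10, 12, 15, 9]),
    Instance("db-1", 16, [60, 75, 80]),
    Instance("batch-1", 4, [5, 5, 5, 5, 5, 5, 90]),
]
print(rightsizing_candidates(fleet))
```

Checking the peak as well as the average keeps bursty workloads such as `batch-1` off the list, since shrinking them could hurt performance.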

Storage: The Quiet Carbon Cost Everyone Underestimates

Storage seems cheap, so people keep everything. But storage has ongoing energy cost, replication overhead, and hardware lifecycle impact.

Common storage sustainability issues include:

- Keeping old backups that no one will ever restore

- Storing multiple uncompressed versions of media files

- Replicating data across regions without clear reason

- Using high-performance storage for cold data

- Retaining logs far beyond compliance needs

A sustainable cloud strategy typically includes tiered storage:

- Hot storage for active data

- Warm storage for occasional access

- Cold or archival storage for rare access

Tiering reduces energy waste by matching storage type to actual usage.
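A tiering policy can be as simple as a rule based on how long ago the data was last read. The sketch below assumes 30-day and 180-day cutoffs, which are illustrative values rather than a standard; real lifecycle rules depend on access patterns and compliance needs.

```python
from datetime import date

# Assumed cutoffs for illustration only.
WARM_AFTER_DAYS = 30
COLD_AFTER_DAYS = 180


def storage_tier(last_accessed: date, today: date) -> str:
    """Pick a storage tier from the number of days since the last access."""
    age_days = (today - last_accessed).days
    if age_days >= COLD_AFTER_DAYS:
        return "cold"
    if age_days >= WARM_AFTER_DAYS:
        return "warm"
    return "hot"


today = date(2026, 1, 10)
print(storage_tier(date(2026, 1, 1), today))    # accessed 9 days ago
print(storage_tier(date(2025, 11, 1), today))   # accessed 70 days ago
print(storage_tier(date(2025, 1, 1), today))    # accessed over a year ago
```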

The Role Of Renewable Energy And Carbon-Free Power

Energy sourcing matters. A data center with excellent efficiency still emits heavily if it runs on a high-carbon grid. Conversely, a moderately efficient center can be far cleaner if it runs on low-carbon electricity.

Cloud providers increasingly use:

- Renewable energy purchasing agreements

- On-site solar in some locations

- Carbon-free energy matching strategies

- Regional load shifting where possible

But claims can be confusing. “100% renewable” sometimes means annual matching rather than hourly matching. The carbon impact depends on when and where electricity is used.

For green cloud computing, the most meaningful direction is shifting from annual renewable matching to more granular, time-based matching, so power use aligns with clean generation.
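The gap between annual and hourly matching is easy to see with a toy profile. The sketch below uses a made-up four-hour window with flat demand and clean supply concentrated in the middle hours; the function names are hypothetical.

```python
def annual_match(consumption_kwh, clean_kwh):
    """Share of total consumption covered by total clean energy, capped at 100%."""
    return min(sum(clean_kwh) / sum(consumption_kwh), 1.0)


def hourly_match(consumption_kwh, clean_kwh):
    """Share of consumption covered by clean energy generated in the same hour."""
    matched = sum(min(used, clean) for used, clean in zip(consumption_kwh, clean_kwh))
    return matched / sum(consumption_kwh)


# Hypothetical profile: flat load, clean supply only in the middle two hours.
consumption = [100, 100, 100, 100]
clean = [0, 200, 200, 0]

print(f"Period-total matching: {annual_match(consumption, clean):.0%}")
print(f"Hourly matching: {hourly_match(consumption, clean):.0%}")
```

Here the totals match exactly, so a period-total claim reads as 100%, while only 50% of the consumption coincided with clean generation hour by hour.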

Energy Claims Table

| Claim Type | What It Often Means | Why You Should Care |
|---|---|---|
| “100% renewable” | Annual matching via certificates | Does not guarantee clean power hourly |
| “Carbon neutral” | Offsets and accounting | May not reduce real emissions |
| “24/7 carbon-free” | Hourly matching goal | Stronger indicator of real decarbonization |
| “Net zero” | Scope targets with timelines | Depends on transparency and progress |

The best approach is transparency, not slogans.

Hardware Lifecycle: The Scope 3 Problem

Server manufacturing has a big footprint. Chips, boards, memory, and storage require energy-intensive production. Data center construction also carries heavy embodied carbon, especially from concrete and steel.

A key sustainability question becomes: how often do providers refresh hardware?

New hardware can be more energy efficient, but frequent replacement increases manufacturing emissions. The best strategy balances performance gains with longer hardware life and responsible reuse.
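One way to reason about that balance is a carbon payback estimate: how many years of operational savings it takes to repay the embodied carbon of the new machine. The sketch below is a simplification that ignores performance gains and resale or reuse of the old server, and every number in it is a placeholder rather than measured data.

```python
def refresh_payback_years(embodied_kg, old_kwh_per_year, new_kwh_per_year, grid_kg_per_kwh):
    """Years of operational savings needed to offset the new server's embodied carbon."""
    annual_saving_kg = (old_kwh_per_year - new_kwh_per_year) * grid_kg_per_kwh
    if annual_saving_kg <= 0:
        return float("inf")  # the replacement never pays back in carbon terms
    return embodied_kg / annual_saving_kg


# Placeholder figures for illustration only.
embodied_kg = 1000        # embodied carbon of the new server, kg CO2e
old_kwh, new_kwh = 4000, 3000

print(refresh_payback_years(embodied_kg, old_kwh, new_kwh, grid_kg_per_kwh=0.40))
print(refresh_payback_years(embodied_kg, old_kwh, new_kwh, grid_kg_per_kwh=0.05))
```

With these placeholder inputs the payback is about 2.5 years on the higher-carbon grid and about 20 years on the cleaner one, which suggests that the cleaner the electricity, the stronger the case for extending hardware life.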

A mature green cloud computing approach includes:

- Extending hardware life where possible

- Reusing equipment in lower-intensity roles

- Recycling responsibly at end-of-life

- Designing for maintainability and component replacement

AI Workloads: The New Carbon Hotspot

AI has changed the cloud sustainability conversation. Training and inference can be energy-intensive, especially when models are large and run at scale.

The sustainability approach for AI includes:

- Efficient model architectures

- Quantization and optimization for inference

- Choosing regions and times with cleaner energy

- Using specialized hardware that reduces energy per operation

- Avoiding unnecessary inference calls through caching and batching

AI does not need to be wasteful, but it often becomes wasteful when it is treated like an unlimited resource.

This is where green cloud computing will be tested most aggressively in 2026.
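Batching is one of the simpler habits from the list above. The sketch below groups requests so the model is invoked once per batch instead of once per prompt. `model_call` is a stand-in for whatever inference function you use, and whether batching actually saves energy depends on the hardware and serving stack.

```python
def batched(items, batch_size):
    """Yield consecutive lists of at most batch_size items."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def run_inference(prompts, model_call, batch_size=8):
    """Call model_call once per batch and return results in the original order."""
    results = []
    for batch in batched(prompts, batch_size):
        results.extend(model_call(batch))
    return results


# Hypothetical model: records each call and upper-cases the prompts.
call_sizes = []

def fake_model(batch):
    call_sizes.append(len(batch))
    return [prompt.upper() for prompt in batch]


outputs = run_inference([f"prompt {i}" for i in range(20)], fake_model)
print(len(outputs), "results from", len(call_sizes), "model calls")
```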

How To Measure And Manage Cloud Carbon In A Business

Businesses increasingly want to track cloud emissions. The challenge is attribution: cloud is shared infrastructure, so a customer’s emissions cannot be read off a meter. You need models that estimate emissions based on usage, region, and provider energy data.

A practical approach includes:

- Measuring usage at service level

- Mapping usage to region and grid intensity

- Tracking changes through optimization efforts

- Reporting operational improvements over time
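A minimal version of such a model multiplies estimated energy by facility overhead and by the grid intensity of the region. The sketch below covers operational electricity only, not hardware or construction. The region names, intensity values, PUE, and energy figures are all hypothetical; real inputs would come from provider reports and grid data.

```python
from dataclasses import dataclass

# Hypothetical grid intensities in kg CO2e per kWh.
GRID_INTENSITY = {"region-a": 0.05, "region-b": 0.40}


@dataclass
class UsageRecord:
    service: str
    region: str
    energy_kwh: float  # estimated IT energy attributed to this usage


def estimate_emissions_kg(records, pue=1.2, grid_intensity=GRID_INTENSITY):
    """Estimate operational emissions per service: energy x PUE x grid intensity."""
    totals = {}
    for record in records:
        kg = record.energy_kwh * pue * grid_intensity[record.region]
        totals[record.service] = totals.get(record.service, 0.0) + kg
    return totals


usage = [
    UsageRecord("compute", "region-b", 100),
    UsageRecord("storage", "region-a", 50),
    UsageRecord("compute", "region-a", 100),
]
for service, kg in estimate_emissions_kg(usage).items():
    print(f"{service}: {kg:.1f} kg CO2e")
```

In this toy data the same 100 kWh of compute produces eight times more emissions in `region-b` than in `region-a`, which is why mapping usage to region matters.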

A Practical Cloud Carbon Program

- Establish baseline usage and cost reports

- Identify top services by usage and spend

- Optimize the top 20% that drives most consumption

- Apply governance rules for shutdown and retention

- Review monthly and track impact

This turns green cloud computing into a management practice, not just a marketing statement.
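To find the services worth optimizing first, you can rank them by usage and keep adding until a chosen share of the total is covered. The 80% share and the usage figures below are assumptions for the example.

```python
def top_consumers(usage_by_service, share=0.8):
    """Return the largest services that together cover at least `share` of total usage."""
    total = sum(usage_by_service.values())
    ranked = sorted(usage_by_service.items(), key=lambda item: item[1], reverse=True)
    selected = []
    running = 0.0
    for service, usage in ranked:
        if running >= share * total:
            break
        selected.append(service)
        running += usage
    return selected


# Hypothetical monthly usage units per service.
usage = {"compute": 500, "storage": 350, "cdn": 80, "logs": 50, "misc": 20}
print(top_consumers(usage))
```

For this made-up data, two of the five services account for 85% of usage, so those two are where optimization effort goes first.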

Consumer-Level Cloud Sustainability Choices

Most people cannot choose data center designs. But consumers can reduce cloud impact by changing how they use digital services.

High-impact habits include:

- Reducing unnecessary cloud backups and duplicates

- Lowering video streaming resolution when it does not matter

- Deleting unused files stored across multiple services

- Turning off auto-upload of high-resolution media where unnecessary

- Keeping fewer devices constantly syncing in the background

These changes seem small, but they scale across billions of users.

How Green Cloud Computing Fits The Green Tech Revolution

The Green Tech Revolution is about making systems more efficient, not just swapping products. Cloud is a system. It is the backbone of modern life. If cloud becomes cleaner and more efficient, countless digital services become cleaner too.

Green cloud computing connects to eco-innovation because it:

- Reduces energy waste through better cooling and utilization

- Encourages efficient design and data minimization

- Supports renewable energy adoption at large scale

- Improves transparency through carbon accounting tools

- Pushes the tech industry to treat digital growth as a resource problem

In a world where everything becomes digital, the sustainability of digital infrastructure becomes a major climate variable.

Regional Workload Shifting: A Fast Way To Cut Cloud Emissions

Green cloud computing improves when workloads run where electricity is cleaner. Grid carbon intensity can vary widely by region and by time of day. If a cloud platform allows region choice or multi-region routing, companies can reduce emissions without changing the application’s core purpose.

Practical ways workload shifting is used:

- Running batch processing in regions with cleaner grids

- Scheduling non-urgent jobs for hours when renewable generation is high

- Keeping latency-sensitive services close to users, but moving background tasks elsewhere

- Using multi-region redundancy strategically instead of duplicating everything by default

Workload Shifting Table

| Workload Type | Best Strategy | Why It Works |
|---|---|---|
| Batch analytics | Run in cleaner regions | Low latency requirements |
| AI training jobs | Schedule for cleaner hours | Long runtimes, high energy |
| Backups and archiving | Store in low-carbon regions | Rarely accessed data |
| Real-time apps | Keep near users | Latency matters most |

This approach is especially useful because it often reduces emissions without major new spending.
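For a deferrable job, the scheduling decision can be sketched as a search over regions and start hours for the window with the lowest average grid intensity. The forecast values below are invented (in gCO2e per kWh), and the `allowed_regions` parameter stands in for constraints such as data residency; a real scheduler would pull forecasts from a grid data source.

```python
# Hypothetical hourly carbon-intensity forecast per region, in gCO2e per kWh.
FORECAST = {
    "region-a": [320, 300, 180, 150, 210, 340],
    "region-b": [90, 95, 110, 120, 100, 85],
}


def cleanest_slot(forecast, allowed_regions, duration_hours):
    """Return (region, start_hour, average_intensity) for the cleanest window."""
    best = None
    for region in allowed_regions:
        series = forecast[region]
        for start in range(len(series) - duration_hours + 1):
            window = series[start:start + duration_hours]
            average = sum(window) / duration_hours
            if best is None or average < best[2]:
                best = (region, start, average)
    return best


# A two-hour batch job that may run anywhere, then one pinned to region-a.
print(cleanest_slot(FORECAST, ["region-a", "region-b"], duration_hours=2))
print(cleanest_slot(FORECAST, ["region-a"], duration_hours=2))
```

Even when a job is pinned to one region, shifting it in time can still help: in this toy forecast the best window in `region-a` is roughly half as carbon-intensive as the worst one.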

Carbon-Aware Architecture: Designing Apps That Waste Less Compute

Many cloud applications are built for maximum convenience, not minimum resource use. Carbon-aware design is about removing unnecessary compute and data movement while keeping user experience strong.

High-impact design changes include:

- Caching repeated responses to avoid duplicate computation

- Batching background tasks instead of running them constantly

- Using event-driven processing so systems work only when needed

- Deleting unused microservices and orphaned resources

- Optimizing database queries that silently waste CPU

A key insight is that cloud waste often looks like “small inefficiencies” multiplied by millions of requests.
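Caching is the easiest of these to demonstrate. The sketch below uses Python's standard `functools.lru_cache` to avoid recomputing an identical result. `render_report` is a hypothetical stand-in for any expensive, deterministic function; caching is only appropriate when stale results are acceptable or the cache is invalidated properly.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def render_report(customer_id: str, month: str) -> str:
    """Stand-in for an expensive computation whose output depends only on its inputs."""
    # Imagine heavy database queries and aggregation happening here.
    return f"report for {customer_id} in {month}"


# The second identical request is served from memory instead of being recomputed.
render_report("acme", "2026-01")
render_report("acme", "2026-01")

info = render_report.cache_info()
print(f"cache hits: {info.hits}, computed: {info.misses}")
```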

Carbon-Aware Design Quick Table

| Design Choice | Waste Pattern It Fixes | Sustainability Benefit |
|---|---|---|
| Caching | Recomputing the same output | Lower CPU and energy use |
| Event-driven jobs | Always-on background processes | Fewer idle resources |
| Batching | Constant small compute spikes | Smoother, lower total load |
| Query optimization | Heavy database CPU usage | Less compute for same results |

Governance: The Policies That Keep Cloud Sustainability From Slipping

Most companies lose sustainability progress when governance is weak. Teams spin up resources quickly, forget to shut them down, and keep data forever “just in case.” Green cloud computing becomes sustainable only when rules prevent waste from reappearing.

Useful governance policies include:

- Automatic shutdown schedules for dev and test environments

- Mandatory tagging for cost and carbon accountability

- Storage retention rules based on actual compliance needs

- Approval steps for high-energy workloads like large AI training runs

- Monthly reviews of top services driving usage growth

Governance Controls Table

| Control | What It Prevents | Why It Matters |
|---|---|---|
| Auto shutdown | 24/7 idle environments | Saves energy instantly |
| Tagging | Unowned resources | Enables cleanup and accountability |
| Retention rules | Infinite storage growth | Cuts ongoing footprint |
| Review cycles | Invisible sprawl | Keeps optimization continuous |

These operational controls are often the difference between a “green cloud plan” and real results.
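Two of these controls, tagging and auto shutdown, are straightforward to check automatically. The sketch below audits a resource inventory represented as plain dictionaries. The required tag names, the working-hours window, and the inventory format are assumptions for the example, not any provider's API.

```python
# Assumed policy values for illustration only.
REQUIRED_TAGS = {"owner", "cost-center", "environment"}
WORK_START_HOUR, WORK_END_HOUR = 8, 20  # UTC


def audit(resources, hour_utc):
    """Return (resource id, finding) pairs for tagging and shutdown violations."""
    findings = []
    for resource in resources:
        tags = resource["tags"]
        missing = REQUIRED_TAGS - set(tags)
        if missing:
            findings.append((resource["id"], "missing tags: " + ", ".join(sorted(missing))))
        off_hours = not (WORK_START_HOUR <= hour_utc < WORK_END_HOUR)
        if tags.get("environment") in {"dev", "test"} and resource["running"] and off_hours:
            findings.append((resource["id"], "non-production resource running off-hours"))
    return findings


# Hypothetical inventory snapshot taken at 23:00 UTC.
inventory = [
    {"id": "vm-1", "running": True,
     "tags": {"owner": "team-a", "cost-center": "42", "environment": "prod"}},
    {"id": "vm-2", "running": True,
     "tags": {"owner": "team-b", "cost-center": "42", "environment": "dev"}},
    {"id": "vm-3", "running": False, "tags": {"environment": "test"}},
]
for resource_id, finding in audit(inventory, hour_utc=23):
    print(resource_id, "->", finding)
```

Running a check like this on a schedule is one way to make the monthly review concrete: the findings list becomes the cleanup backlog.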

On a Final Note

Green cloud computing matters because the digital world runs on electricity, hardware, cooling, and construction. A data center’s carbon footprint is shaped by efficiency, energy sourcing, hardware lifecycle, and workload design. Cloud can reduce emissions compared to scattered on-prem systems, but only when resources are used efficiently and powered by cleaner energy.

The biggest wins often come from simple changes: rightsizing compute, shutting down idle resources, optimizing storage, improving cooling, and choosing cleaner energy regions. As AI workloads grow, these decisions become even more important because energy demand can rise quickly.

Green cloud computing is not a buzzword. It is a practical strategy for reducing the footprint of modern digital life, one infrastructure decision at a time.