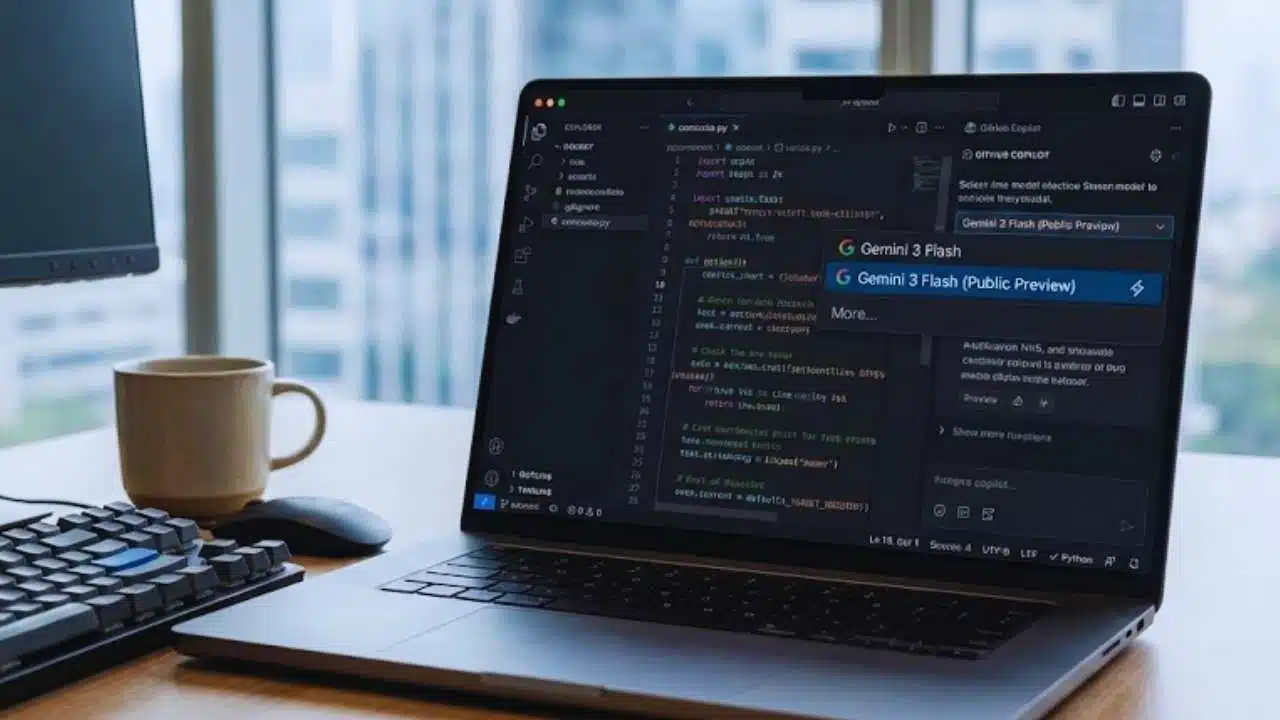

GitHub Copilot has begun rolling out Google’s Gemini 3 Flash in public preview, giving Copilot subscribers another speed-focused model option across Copilot Chat and supported clients, with access controlled by user and organization policies.

What GitHub is rolling out—and who gets it

GitHub now lists Gemini 3 Flash as a public preview model in Copilot’s supported model lineup, alongside Gemini 3 Pro and models from other providers. The model appears across Copilot’s supported plans, including Copilot Pro, Pro+, Business, and Enterprise, and on the common Copilot surfaces where users can choose a model in chat.

GitHub’s Copilot documentation indicates that model access is governed by policy. Individual subscribers may see a one-time prompt to allow or enable a new model the first time they use it, while organizations and enterprises manage which models are available to their seats through Copilot policies.

Where Gemini 3 Flash shows up inside Copilot

GitHub’s model availability tables indicate that Gemini 3 Flash is supported across the major Copilot clients. In practice, it can appear wherever Copilot Chat offers a model picker, subject to your plan and policy settings.

Model availability snapshot

| Area | What to expect with Gemini 3 Flash |
|---|---|
| Copilot plans | Available under paid tiers where chat model selection is supported (subject to plan limits and policy controls). |
| Clients | Listed as available across GitHub.com and popular IDE clients where Copilot Chat supports model selection. |
| Modes | Listed as supported in Copilot Chat modes such as agent, ask, and edit, where Copilot supports those experiences. |

Important for Business/Enterprise: Admins may need to enable model access via Copilot policies before users can select Gemini 3 Flash.

Why Gemini 3 Flash matters: speed, cost, and “good-enough” reasoning

Google positions Gemini 3 Flash as a model built for low-latency workflows, where developers want quick, frequent interactions such as:

- rapid code explanations and refactors

- short-turn debugging loops

- generating tests and small feature scaffolds

- “chatty” iteration while working in an editor

Within Copilot, a model like this is usually most attractive when response time and throughput matter more than maximum reasoning depth on long, complex tasks.

Pricing context from Google: what “Flash” costs in the Gemini API

Google’s published Gemini API pricing for Gemini 3 Flash Preview offers a clear benchmark for how the model is positioned economically, even though Copilot packages model access differently from pay-per-token API usage.

Gemini 3 Flash Preview pricing (Gemini API list rates)

| Token type | Gemini 3 Flash Preview (USD per 1M tokens) |
|---|---|
| Input (text/image/video) | $0.50 |
| Output (incl. thinking tokens) | $3.00 |
| Audio input | $1.00 |
| Context caching (text/image/video) | $0.05 |

This helps explain why “Flash” models are often used for high-frequency requests: they’re designed to keep costs and latency down while still delivering strong general capability.
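To make the list rates concrete, here is a minimal Python sketch that estimates the Gemini API list-price cost of a workload from its token counts. The rates come from the Gemini 3 Flash Preview pricing table; the category names (`input`, `output`, `audio_input`, `cached_input`), the `estimate_cost_usd` helper, and the workload figures are hypothetical illustrations. The sketch ignores any charges not listed in that table and does not reflect what Copilot subscribers pay, since Copilot packages access differently from pay-per-token API usage.

```python
# Gemini API list rates for Gemini 3 Flash Preview, in USD per 1M tokens,
# taken from the pricing table (preview pricing; may change).
# Category names are hypothetical labels chosen for this sketch.
RATES_PER_MILLION = {
    "input": 0.50,         # text/image/video input
    "output": 3.00,        # output, including thinking tokens
    "audio_input": 1.00,   # audio input
    "cached_input": 0.05,  # context caching (text/image/video)
}


def estimate_cost_usd(token_counts: dict[str, int]) -> float:
    """Return the list-price cost in USD for per-category token counts."""
    total = 0.0
    for category, tokens in token_counts.items():
        if category not in RATES_PER_MILLION:
            raise ValueError(f"unknown token category: {category!r}")
        if tokens < 0:
            raise ValueError("token counts must be non-negative")
        # Convert the raw token count to millions, then apply the rate.
        total += tokens / 1_000_000 * RATES_PER_MILLION[category]
    return total


# Hypothetical workload: 1,000 short chat turns, each with ~2,000 input
# tokens and ~500 output tokens (thinking tokens included in output).
turns = 1_000
workload = {"input": 2_000 * turns, "output": 500 * turns}
print(f"${estimate_cost_usd(workload):.2f}")  # prints "$2.50"
```

For this hypothetical workload, output tokens make up only a fifth of the token volume but $1.50 of the $2.50 total, which is why thinking-heavy responses can dominate cost even on a "Flash" model.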

A multi-model Copilot is becoming the default strategy

GitHub has steadily expanded Copilot’s model roster, leaning into a multi-model approach rather than a single default model for everyone. Throughout 2025, GitHub has added and promoted models from multiple providers and has published detailed docs on:

- which models are supported

- where they’re available (by client)

- how plans affect access

- how administrators can restrict or enable models

That strategy aims to let teams choose the “best fit” model for each task: faster models for quick iteration, and heavier models for more complex agentic work.

Data handling and enterprise considerations: where prompts go

For enterprise users, model choice is about data handling as well as quality and speed. GitHub’s Copilot model hosting documentation states that when Copilot uses Google-hosted Gemini models, prompts and metadata are sent to Google Cloud Platform. GitHub also describes measures such as content filtering and prompt caching, used to reduce latency and improve service quality.

Market scale: Copilot’s footprint keeps growing

GitHub has publicly described Copilot as exceeding 20 million users across 77,000 enterprises. At that scale, model additions and policy tooling matter: changes to default availability, model pickers, and admin controls can affect large numbers of developers and organizations at once.

Gemini 3 Flash’s arrival in Copilot as a public preview reinforces two concurrent trends: developers want faster AI in the editor, and platforms are moving toward model choice rather than one-size-fits-all. If GitHub keeps adding models at this pace, Copilot’s differentiator will increasingly be the quality of its policy controls, the UX for switching models, and reliability across IDEs, rather than which single model scores “best” on a benchmark.