The industrial sector has officially moved past the “Chatbot Era.” While 2024 and 2025 were defined by Generative AI on screens, 2026 has ushered in the age of Embodied Intelligence (or “Physical AI”). The critical engineering challenge has shifted from generating text to generating motion in unstructured, high-entropy environments.

At CES 2026 this week, the narrative was clear: we are witnessing a transition from “deterministic automation,” where robots followed hard-coded paths and froze at unexpected obstacles, to “probabilistic embodied intelligence.” These new systems use end-to-end neural networks that perceive, reason, and act without explicitly programmed instructions.

The headline statistic: As of Q1 2026, leading “Lights-Out” facilities (such as Tesla’s Gigafactory Texas and Hyundai’s smart plants) are reporting a 40% reduction in custom integration code. This code is being replaced by general-purpose Foundation Models that learn tasks via demonstration rather than rigid programming.

Key Takeaways

- The “Brain” Upgrade: Robots have moved from following lines to understanding the world through VLA (Vision-Language-Action) models that process “Physics Tokens.”
- Hardware Leap: The Nvidia Jetson Thor chip has brought server-grade AI compute (2000+ TFLOPS) to the edge, enabling autonomy without constant cloud connection.
- Standardization: VDA 5050 v2.1 provides the necessary “Corridor” logic to let AI robots drive themselves within safe boundaries.
- Safety First: AI Middleware is the critical firewall preventing generative AI errors from corrupting physical inventory data.

Software Architecture: The Rise of “VLA” Models

The era of fragmented “sense-think-act” loops is effectively over. In 2026, robotic software has coalesced into unified Vision-Language-Action (VLA) models, replacing brittle code stacks with a single neural network that turns raw perception directly into fluid physical motion.

1. From Modular Stacks to End-to-End Learning

For the past decade, robotic software architecture relied on a brittle “modular stack” (often built on ROS 1/2). Engineers built separate blocks for Perception (What do I see?), Path Planning (Where do I go?), and Motor Control (How do I move?). If one module failed—for example, if a vision sensor encountered a reflection it couldn’t classify—the entire stack crashed, or the robot froze in a “recovery behavior” loop.

In 2026, the industry standard has shifted to Vision-Language-Action (VLA) models.

- The Mechanism: A single, massive neural network takes raw pixels and natural language commands (“Pick up the red gear”) as input and outputs low-level motor commands (actions).
- Tokenization of Physics: Just as LLMs process text tokens, VLA models process “action tokens.”
  - Input: High-res video frames are encoded via Vision Transformers (ViT).
  - Processing: A large transformer (like Google DeepMind’s RT-2 successor or Nvidia’s GR00T) aligns these visual embeddings with language goals.
  - Output: The model generates a sequence of discrete action tokens (e.g., arm_x_pos, gripper_torque), which are detokenized into continuous signals at 50-100 Hz.
- Engineering Benefit: The primary advantage is generalization. A VLA-equipped robot trained to pick up a cardboard box can now successfully pick up a crushed box, a wet box, or a clear plastic box without a single line of code being rewritten.
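To make the “action token” idea concrete, here is a minimal sketch of uniform binning, one common way to turn a continuous control value into a discrete token and back. The bin count, the value ranges, and the `ActionChannel` helper are assumptions for illustration; the article does not say how any particular model quantizes its actions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionChannel:
    """One continuous control dimension and its allowed range (hypothetical)."""
    name: str
    low: float
    high: float


NUM_BINS = 256  # assumed vocabulary size per channel

# Channel names come from the article; the ranges are assumed.
CHANNELS = [
    ActionChannel("arm_x_pos", -0.5, 0.5),      # metres
    ActionChannel("gripper_torque", 0.0, 2.0),  # newton-metres
]


def tokenize(value, channel, bins=NUM_BINS):
    """Map a continuous value to the index of the bin that contains it."""
    clipped = min(max(value, channel.low), channel.high)
    fraction = (clipped - channel.low) / (channel.high - channel.low)
    return min(int(fraction * bins), bins - 1)


def detokenize(token, channel, bins=NUM_BINS):
    """Map a bin index back to the continuous value at the bin centre."""
    if not 0 <= token < bins:
        raise ValueError(f"token {token} outside [0, {bins})")
    width = (channel.high - channel.low) / bins
    return channel.low + (token + 0.5) * width


def decode_step(tokens):
    """Turn one step of model output (one token per channel) into commands."""
    return {ch.name: detokenize(t, ch) for ch, t in zip(CHANNELS, tokens)}
```

The round trip loses at most half a bin width per channel, which is the price of treating motion as a vocabulary. More bins reduce that error but enlarge the output space the model has to learn.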

2. World Models & The “Sim-to-Real” Bridge

Training a robot in the physical world is slow, expensive, and dangerous. The 2026 solution is the deployment of World Models, such as the newly released Nvidia Cosmos.

These models function like a “video generator” for the robot’s brain. They simulate the physics and consequences of an action before the robot moves. By treating physical interactions as tokens, the AI can “auto-complete” complex physical tasks, predicting the next 30 seconds of reality to ensure a successful grab or maneuver. This allows for “Zero-Shot Transfer,” where a robot can execute a task in the real world that it has only ever “dreamed” of in simulation.

| Feature | Legacy Stack (2024) | Embodied VLA Stack (2026) |
| --- | --- | --- |
| Input | Structured Data / LiDAR Points | Raw Pixels + Natural Language |
| Logic | If-Then-Else Rules / State Machines | Probabilistic Neural Networks |
| Adaptability | Fails on edge cases (e.g., dropped box) | Generalizes to new objects/orientations |
| Latency | High (Module-to-Module serialization) | Low (End-to-End Inference) |
| Training | Manual Tuning of PID Controllers | Offline Reinforcement Learning (RL) |

The “Synthetic Reality” Pipeline

In 2026, the bottleneck for AI is no longer compute—it is data. Real-world robot data is “expensive” (it requires a physical robot to move) and “sparse” (robots rarely fail, so they don’t learn from mistakes). The engineering solution is the Synthetic Data Supply Chain.

1. The “GR00T-Mimic” Workflow

Engineers are no longer hand-labeling images. Instead, they use pipelines like Nvidia’s Isaac GR00T-Mimic to generate training data.

- The Process: A human operator performs a task once in VR. The system then generates 10,000 variations of that motion in simulation, changing the lighting, the friction of the floor, and the texture of the objects.
- Cosmos Transfer: The key 2026 innovation is “Cosmos Transfer,” which applies a photorealistic neural filter to these simulations. It adds “sensor noise,” motion blur, and lens distortion to trick the robot’s brain into thinking the simulation is real life. This engineering pipeline has effectively solved the “Sim-to-Real” gap.
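The “one demo, many variations” step is a form of domain randomization, and the core loop is simple enough to sketch. This is not the GR00T-Mimic API. The parameter ranges, the `SceneParams` fields, and the waypoint jitter are all assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    light_intensity: float  # relative to the demo scene (1.0 = unchanged)
    floor_friction: float   # coefficient of friction
    texture_id: int         # index into a hypothetical texture library


def randomize_scene(rng, num_textures=50):
    """Draw one random scene configuration (ranges are assumed)."""
    return SceneParams(
        light_intensity=rng.uniform(0.3, 1.7),
        floor_friction=rng.uniform(0.2, 1.0),
        texture_id=rng.randrange(num_textures),
    )


def perturb_trajectory(demo, rng, noise_std=0.005):
    """Add small Gaussian jitter to every coordinate of every waypoint."""
    return [tuple(c + rng.gauss(0.0, noise_std) for c in waypoint)
            for waypoint in demo]


def generate_variations(demo, count, seed=0):
    """Pair each perturbed copy of the demo with a randomized scene."""
    rng = random.Random(seed)  # seeded so a dataset can be regenerated
    return [(randomize_scene(rng), perturb_trajectory(demo, rng))
            for _ in range(count)]


# One recorded demonstration: a short list of (x, y, z) waypoints in metres.
demo = [(0.0, 0.0, 0.2), (0.1, 0.0, 0.2), (0.1, 0.0, 0.05)]
dataset = generate_variations(demo, count=10_000)
```

Seeding the generator matters more than it looks: when a model trained on this data misbehaves, being able to regenerate the exact same 10,000 scenes is what makes the failure reproducible.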

2. Shadow Mode Validation

Before a new model is allowed to drive motors on the factory floor, it undergoes “Shadow Mode” testing. The candidate model runs in the background on the physical robot, receiving camera inputs and predicting actions without actually moving the motors. If the Shadow Model’s predictions deviate from the safety controller’s actions by more than 0.01%, the update is automatically rejected.
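A shadow-mode gate can be sketched as a comparison between two logs. The article does not define how the 0.01% figure is measured, so this sketch assumes one plausible reading: the share of time steps on which the shadow prediction differs from the executed action by more than a per-dimension tolerance. The tolerance value and function names are hypothetical.

```python
MAX_DEVIATION_RATE = 0.0001  # 0.01% expressed as a fraction


def step_deviates(shadow_action, executed_action, tolerance=0.02):
    """True if any action dimension differs by more than `tolerance`."""
    return any(abs(s - e) > tolerance
               for s, e in zip(shadow_action, executed_action))


def shadow_gate(shadow_log, executed_log, tolerance=0.02,
                max_rate=MAX_DEVIATION_RATE):
    """Return (accepted, deviation_rate) for a candidate model's shadow run."""
    if not shadow_log or len(shadow_log) != len(executed_log):
        raise ValueError("logs must be non-empty and the same length")
    deviations = sum(step_deviates(s, e, tolerance)
                     for s, e in zip(shadow_log, executed_log))
    rate = deviations / len(shadow_log)
    return rate <= max_rate, rate
```

At a 0.01% threshold, a log of 20,000 steps tolerates at most two deviating steps, so short shadow runs cannot meaningfully pass or fail. The gate only makes sense over long recordings.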

AI Infrastructure: The “Hybrid Compute” Backbone

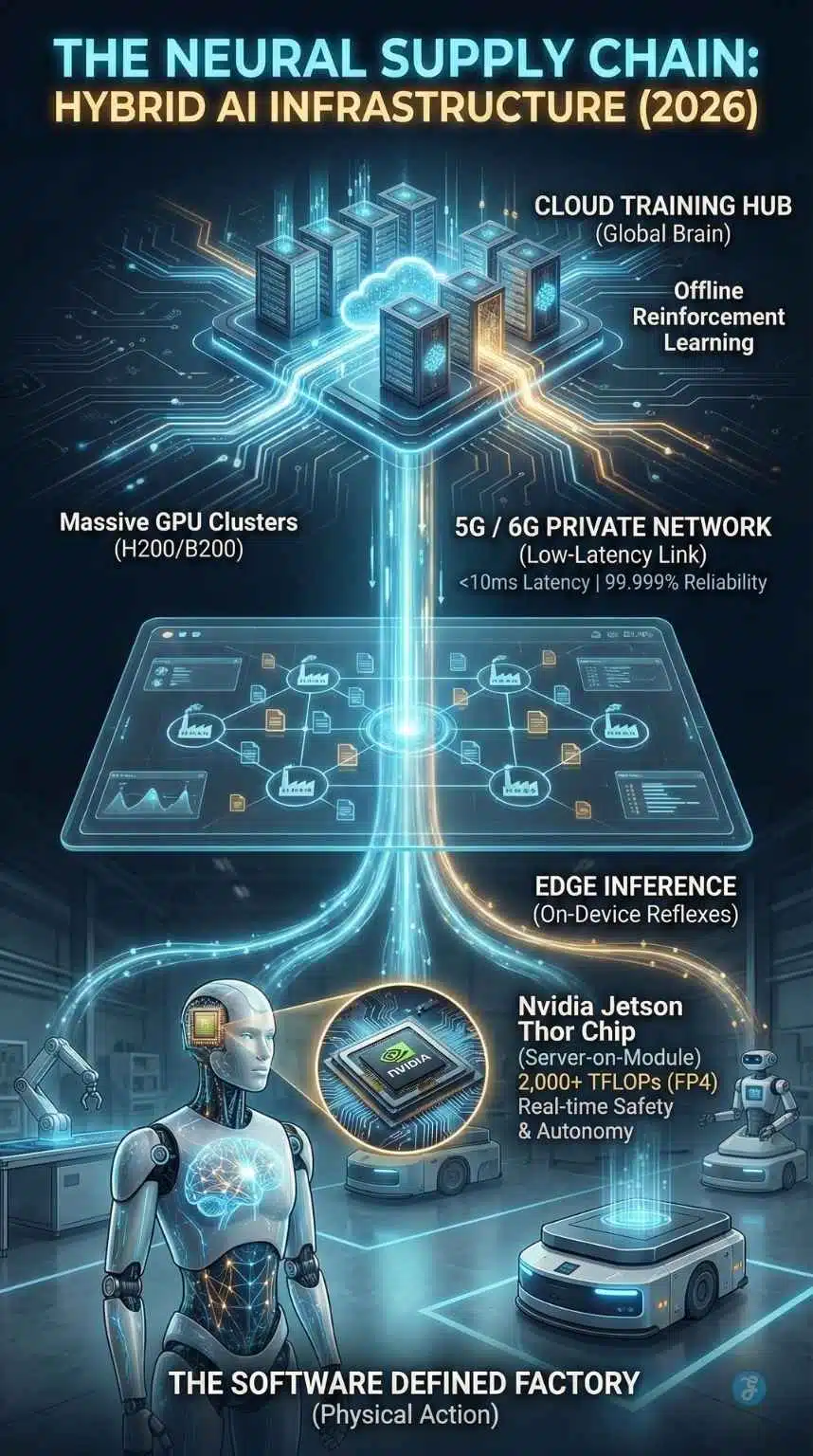

The logistics facility of 2026 operates on a “split-brain” architecture where intelligence is ubiquitous, but computation is highly specialized. This hybrid backbone seamlessly links massive cloud clusters for nightly model training with server-grade edge silicon that handles critical, split-second physical decisions on the floor.

1. The “AI Mesh” Topology

This topology, known as the AI Mesh, divides the work into two tiers:

- Cloud (Training): Massive GPU clusters (running Nvidia H200/B200s) execute “Offline Reinforcement Learning.” Every night, data from the global fleet, including “disengagement reports” where a human had to intervene, is uploaded to update the Foundation Model.
- Edge (Inference): The robot itself handles real-time decisions. The new industry standard chip, Nvidia’s Jetson Thor, is capable of 2,070 TFLOPS (FP4) of AI compute. This “Server-on-Module” allows the robot to run huge transformer models locally within a 130W power envelope, ensuring safety even if the network connection is lost.

2. Connectivity: Private 5G & The Dawn of 6G

Wi-Fi has been deemed insufficient for the bandwidth-heavy, low-latency demands of mobile robotics.

- Private 5G: Now the baseline facility infrastructure, guaranteeing <10ms latency and 99.999% reliability for fleet coordination.
- 6G Pilots: Early 2026 pilots are using 6G for “Holographic Teleoperation.” This allows a human expert to virtually “inhabit” a robot avatar with sub-millisecond latency to handle hazardous materials or fix jammed machinery. This “Human-in-the-Loop” fallback is critical for reaching 99.9% uptime.

The Neuromorphic Shift & Energy Engineering

Running a ChatGPT-level brain on a battery-powered robot is an energy crisis. In 2024, robots ran out of battery in 4 hours. In 2026, engineers are solving this with Neuromorphic Computing and Energy-Aware Algorithms.

1. “Spiking” Neural Networks (SNNs)

Traditional chips process every pixel in a frame, 30 times a second. New 2026 neuromorphic chips (like Intel’s Loihi 3 or BrainChip Akida 2.0) use Event-Based Vision.

- Engineering Shift: The chip only processes changes in the scene (a moving hand, a falling box). If the scene is static, power consumption drops to near-zero. This “biological” approach has extended AMR battery life by 40%, allowing for full 8-hour shifts on a single charge.
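The principle behind event-based vision can be emulated in a few lines by differencing two frames and keeping only the pixels that changed. Real event sensors do this in hardware, per pixel and asynchronously, so treat this as a sketch of the idea rather than of any chip’s pipeline. The threshold value is an assumption.

```python
def extract_events(prev_frame, curr_frame, threshold=15):
    """Return (row, col, polarity) for pixels whose brightness changed.

    Frames are lists of rows of 0-255 grey levels. Polarity is +1 for a
    pixel that got brighter and -1 for one that got darker.
    """
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (before, after) in enumerate(zip(prev_row, curr_row)):
            delta = after - before
            if abs(delta) > threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


static = [[100, 100, 100], [100, 100, 100]]
moving = [[100, 180, 100], [100, 100, 40]]

print(extract_events(static, static))  # no change, nothing to process
print(extract_events(static, moving))  # only the two changed pixels
```

The downstream network sees two events instead of six pixels here; on a mostly static warehouse aisle the ratio is far more lopsided, which is where the power saving comes from.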

2. Energy-Aware RL Algorithms

The software has also changed. We are seeing the deployment of AD-Dueling DQN (Attention-Enhanced Dueling Deep Q-Networks). Unlike old path planners that only optimized for “shortest distance,” these new algorithms optimize for “lowest joules.” They will route a heavy robot around a carpeted area to a smooth concrete path to save battery, even if the route is 10 meters longer.
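The change of objective, from shortest distance to lowest joules, is easy to see on a toy map. The sketch below is not AD-Dueling DQN; it swaps in plain Dijkstra search so the effect of the cost function is visible on its own. The rolling-resistance coefficients, the robot mass, and the three-node graph are assumed values.

```python
import heapq

# Assumed rolling-resistance coefficients per floor surface (illustrative).
ROLLING_RESISTANCE = {"concrete": 0.015, "carpet": 0.06}
GRAVITY = 9.81  # m/s^2


def edge_energy_joules(length_m, surface, mass_kg):
    """Energy spent against rolling resistance: E = c_rr * m * g * d."""
    return ROLLING_RESISTANCE[surface] * mass_kg * GRAVITY * length_m


def cheapest_route(graph, start, goal, mass_kg, by="energy"):
    """Dijkstra over graph[node] = [(neighbour, length_m, surface), ...].

    `by` selects the edge cost: "energy" (joules) or "distance" (metres).
    """
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbour, length_m, surface in graph.get(node, []):
            if neighbour in settled:
                continue
            step = (edge_energy_joules(length_m, surface, mass_kg)
                    if by == "energy" else length_m)
            heapq.heappush(queue, (cost + step, neighbour, path + [neighbour]))
    return float("inf"), []


# Direct carpeted aisle (20 m) versus a concrete detour (15 m + 15 m).
GRAPH = {
    "A": [("B", 20, "carpet"), ("C", 15, "concrete")],
    "C": [("B", 15, "concrete")],
}
```

For a 500 kg robot, the distance planner takes the 20 m carpeted aisle, while the energy planner accepts the 10 m detour because the concrete route costs well under half the energy. A learned policy would be optimizing the same trade-off, with a far richer model of where the joules go.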

Engineering the “Software Defined Factory”

The modern warehouse is transitioning from a collection of isolated hardware to a programmable platform where every conveyor, door, and droid functions as a connected node. This engineering shift requires a robust interoperability layer to harmonize the fluid behaviors of generative AI with the rigid, deterministic logic of legacy facility systems.

1. The Interoperability Crisis & VDA 5050

A major hurdle has been making a Tesla Optimus talk to a legacy conveyor belt and a generic forklift. The industry has coalesced around VDA 5050 (Version 2.1+).

- The “USB” of Logistics: This standard protocol, utilizing MQTT and JSON payloads, allows a centralized Master Control System to command heterogeneous fleets.
- The “Corridor” Update: Crucially, Version 2.1 introduced the concept of “Corridors.” Unlike old AGVs that followed a strict virtual line, VDA 5050 v2.1 defines a “navigable zone” (a corridor). The Embodied AI is free to use its own path planning to dodge obstacles within that corridor, combining the flexibility of AI with the traffic management of a standard protocol.
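As a rough illustration of what a corridor means in practice, the sketch below builds an edge fragment with a corridor and checks whether a robot position stays inside it. The field names (`corridor`, `leftWidth`, `rightWidth`, `corridorRefPoint`) follow the v2.1 order schema as understood here and should be checked against the published specification. The geometry helpers are hypothetical and not part of the standard.

```python
import json


def make_edge(edge_id, start_node, end_node, left_width_m, right_width_m):
    """Build one edge of an order with a corridor (field names assumed)."""
    return {
        "edgeId": edge_id,
        "sequenceId": 1,
        "released": True,
        "startNodeId": start_node,
        "endNodeId": end_node,
        "actions": [],
        "corridor": {
            "leftWidth": left_width_m,
            "rightWidth": right_width_m,
            "corridorRefPoint": "KINEMATICCENTER",
        },
    }


def lateral_offset(start, end, position):
    """Signed distance from the start-to-end line; positive means left."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    length = (dx * dx + dy * dy) ** 0.5
    if length == 0:
        raise ValueError("edge has zero length")
    px, py = position[0] - start[0], position[1] - start[1]
    return (dx * py - dy * px) / length


def inside_corridor(edge, start, end, position):
    """True if the position lies within the edge's left and right widths."""
    offset = lateral_offset(start, end, position)
    corridor = edge["corridor"]
    return -corridor["rightWidth"] <= offset <= corridor["leftWidth"]


edge = make_edge("e1", "n1", "n2", left_width_m=0.5, right_width_m=1.0)
payload = json.dumps(edge)  # the JSON body a master control might send
```

The asymmetric widths are the interesting part: a corridor can give the robot a metre of freedom on the side facing open floor while keeping it tight against a pedestrian walkway on the other.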

2. Middleware: The “Secure Abstraction Layer”

Directly connecting a Generative AI robot to a Warehouse Management System (WMS) like SAP is dangerous due to the risk of “hallucinations” (e.g., the robot inventing inventory that doesn’t exist).

- The Solution: AI Middleware (or the “Safety Envelope” architecture). This is a deterministic code layer that “sanitizes” AI requests.
- Workflow Example:
  - Robot AI (VLA): “I perceive a box. I am taking 500 units of Item X.”
  - Middleware (Deterministic): Queries the WMS database. “Error: Only 50 units exist in this location.”
  - Action: Middleware blocks the physical grab command and triggers a “Re-Scan” behavior in the robot.
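The workflow above can be sketched as a small deterministic validator. The in-memory `stock` dictionary stands in for a real WMS query, and the `PickRequest` and `Decision` types and the action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PickRequest:
    item_id: str
    location: str
    quantity: int


@dataclass(frozen=True)
class Decision:
    allowed: bool
    action: str   # "EXECUTE", "RE_SCAN", or "REJECT"
    reason: str


def validate_pick(request, stock):
    """Check an AI-generated pick against recorded stock before any motion.

    `stock` maps (location, item_id) to the units on record.
    """
    if request.quantity <= 0:
        return Decision(False, "REJECT", "quantity must be positive")
    on_hand = stock.get((request.location, request.item_id), 0)
    if request.quantity > on_hand:
        return Decision(False, "RE_SCAN",
                        f"only {on_hand} units exist in this location")
    return Decision(True, "EXECUTE", "ok")


stock = {("A-01", "ITEM_X"): 50}
decision = validate_pick(PickRequest("ITEM_X", "A-01", 500), stock)
print(decision.action, "-", decision.reason)
```

The point of keeping this layer free of any learned component is that its behavior can be reviewed and tested exhaustively, which is not true of the model whose requests it filters.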

The Security Paradox: When Code Becomes Kinetic

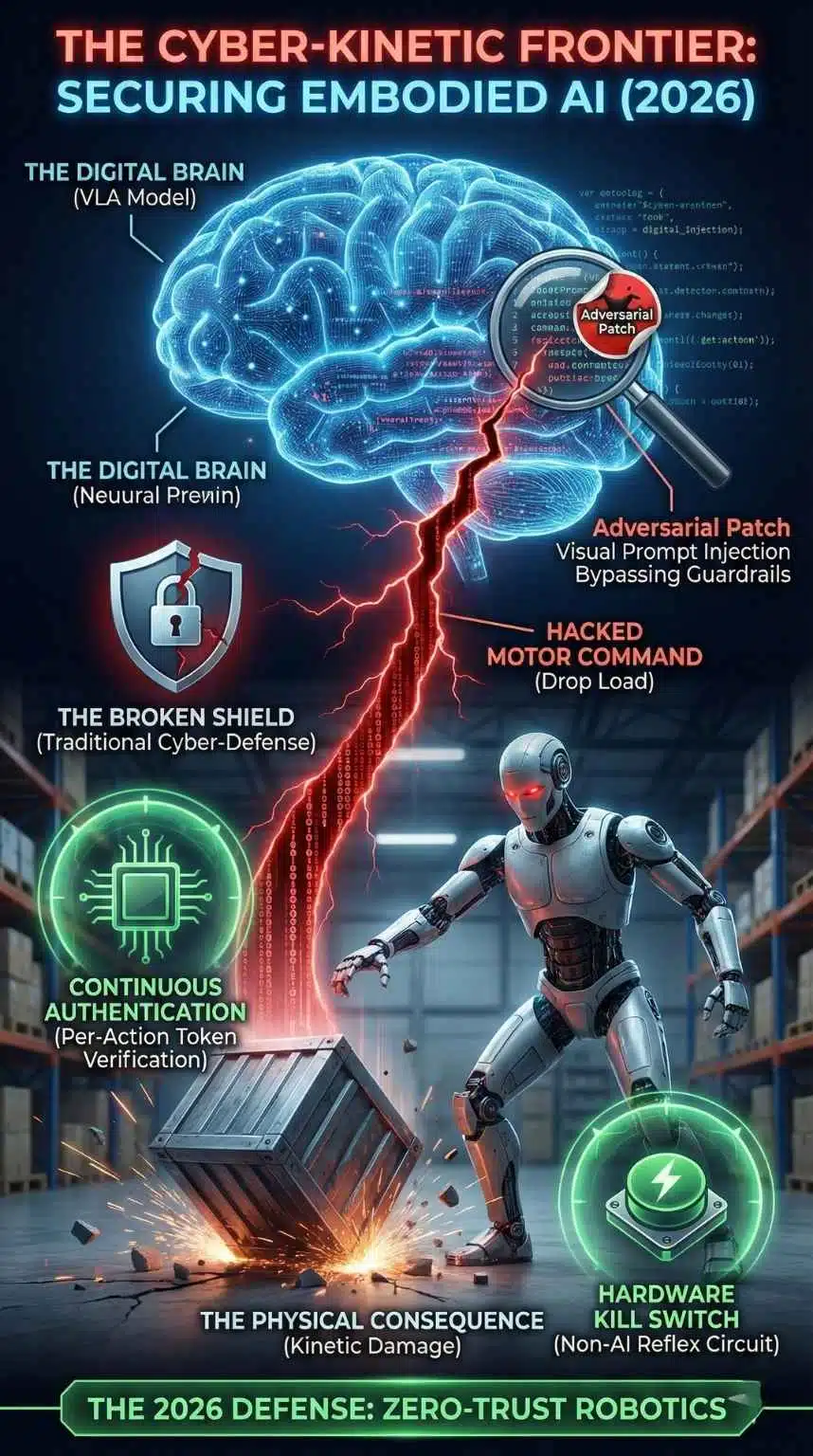

As the digital brain merges with the physical body, the “attack surface” of a logistics facility has exploded. In 2026, cybersecurity is no longer just about protecting data confidentiality (preventing leaks); it is about protecting Control Authority (preventing physical hijacking).

1. Adversarial Attacks on VLA Models

The most sophisticated threat vector in 2026 is the “Adversarial Patch.” Researchers at Black Hat 2025 demonstrated that applying a specifically patterned sticker (an “adversarial patch”) to a shipping crate could trick a VLA model into perceiving the heavy crate as “styrofoam,” causing the robot to use insufficient grip force and drop the payload.

- The “Jailbreak” of Physics: Just as users once “jailbroke” chatbots to say forbidden words, attackers can now use “Visual Prompt Injection” to bypass safety guardrails. By introducing subtle visual noise into the robot’s camera feed, bad actors can trigger “dormant” behaviors, like forcing a fleet of AMRs to suddenly congregate in a fire exit zone, creating a physical blockade.

2. Zero-Trust Robotics & IEC 62443

To combat this, the industry has aggressively adopted the IEC 62443 standard (originally for industrial automation) adapted for mobile robotics.

- The “Air Gap” Fallacy: You cannot air-gap a robot that needs cloud training. Instead, the 2026 standard is “Continuous Authentication.” A robot does not just log in once; every major motor command (e.g., “Lift > 50kg”) requires a micro-authorization token verified against the facility’s central security policy.
- Kill Switches: Every VLA-driven robot is now mandated to have a hardware-level “reflex” kill switch: a non-AI circuit that cuts power to actuators if acceleration exceeds human safety limits, bypassing the neural net entirely.
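One way to picture a micro-authorization token is as a short-lived, signed permission slip for a single command. The sketch below uses an HMAC over a small set of claims. The token layout, the two-second lifetime, and the shared-secret scheme are assumptions for illustration; IEC 62443 does not prescribe this format, and a production system would more likely use asymmetric keys held in hardware.

```python
import hashlib
import hmac
import json
import time


def _sign(secret, claims):
    """HMAC-SHA256 over a canonical JSON encoding of the claims."""
    payload = json.dumps(claims, sort_keys=True).encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def issue_token(secret, robot_id, command, max_load_kg, ttl_s=2.0, now=None):
    """Policy server side: grant one command to one robot for a short time."""
    now = time.time() if now is None else now
    claims = {"robot": robot_id, "cmd": command,
              "max_load_kg": max_load_kg, "exp": now + ttl_s}
    return {"claims": claims, "sig": _sign(secret, claims)}


def authorize(secret, token, robot_id, command, load_kg, now=None):
    """Motor controller side: verify the token before executing the command."""
    now = time.time() if now is None else now
    expected = _sign(secret, token["claims"])
    if not hmac.compare_digest(expected, token["sig"]):
        return False  # forged or altered token
    claims = token["claims"]
    return (claims["robot"] == robot_id
            and claims["cmd"] == command
            and load_kg <= claims["max_load_kg"]
            and now <= claims["exp"])
```

Because every token names the robot, the command, and a load ceiling, a stolen token is only useful for the same action on the same machine for a couple of seconds, which is the property that distinguishes this from a login session.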

The Regulatory “Cobot” Death

A major regulatory development heading into 2026 is ISO 10218-1:2025, which has fundamentally changed the legal landscape for robotic safety.

1. The End of the “Cobot”

The new standard has officially deprecated the term “Collaborative Robot” (Cobot). Engineering teams must now certify the “Collaborative Application,” not the robot itself. A “safe” robot arm holding a sharp knife is no longer “safe.”

Monitored Standstill: The concept of “Safeguarded Space” (cages) has been replaced by “Monitored Standstill.” Robots no longer need to stop completely when a human enters their zone; they simply need to mathematically prove they can stop before contact. This dynamic safety layer enables humans and heavy industrial arms to coexist without the “stop-start” inefficiencies of the past.
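The “prove you can stop before contact” idea reduces to a stopping-distance check. The sketch below is a simplified kinematic version and not the formula from any standard: it assumes constant deceleration, a fixed reaction time, a human walking straight toward the robot, and a hypothetical safety margin.

```python
def stopping_distance_m(speed_m_s, reaction_time_s, max_decel_m_s2):
    """Distance covered while reacting and then braking: d = v*t + v^2/(2a)."""
    if max_decel_m_s2 <= 0:
        raise ValueError("deceleration must be positive")
    return (speed_m_s * reaction_time_s
            + speed_m_s ** 2 / (2.0 * max_decel_m_s2))


def can_keep_moving(robot_speed_m_s, human_speed_m_s, separation_m,
                    reaction_time_s, max_decel_m_s2, margin_m=0.1):
    """True if the robot could still stop before the gap to the human closes."""
    robot_travel = stopping_distance_m(robot_speed_m_s, reaction_time_s,
                                       max_decel_m_s2)
    # The human keeps approaching for as long as the robot takes to stop.
    time_to_stop = reaction_time_s + robot_speed_m_s / max_decel_m_s2
    human_travel = human_speed_m_s * time_to_stop
    return robot_travel + human_travel + margin_m <= separation_m
```

With a robot moving at 1.0 m/s, a 0.1 s reaction time, 2.0 m/s² of braking, and a person approaching at 1.6 m/s, the required gap works out to about 1.41 m. At 2.0 m of separation the robot may keep working; at 1.2 m it has to slow down or stop.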

The “Black Box” Maintenance Crisis

The shift from “If-Then” code to Neural Networks has created a maintenance nightmare: Lack of Interpretability. When a traditional robot failed, an engineer checked the logs to find the line of code that caused the error. When a VLA robot fails, the logs simply show millions of floating-point numbers.

1. The Rise of “Neural Debuggers”

In 2026, the “screwdrivers” of the maintenance team are software tools like Nvidia Isaac Lab’s “Explainability Suite.”

- Attention Maps: When a robot drops a box, engineers don’t look at code; they look at “Attention Heatmaps.” These visualizations show exactly which pixels the robot was focusing on when it made the decision. Did it drop the box because it slipped, or because it mistook a shadow on the floor for an obstacle?
- Counterfactual Simulation: Maintenance teams now use “Digital Twins” to run Counterfactual Analysis. They replay the failure scenario 1,000 times in simulation, tweaking one variable each time (lighting, object texture, angle) to isolate exactly what environmental factor triggered the neural net’s failure mode.
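The counterfactual replay loop is essentially a one-factor-at-a-time sweep. In the sketch below, `simulate` is a stub standing in for a Digital Twin run, and the scenario keys and the shadow-angle failure rule are invented purely to make the example executable.

```python
def counterfactual_sweep(simulate, baseline, alternatives):
    """Vary one factor at a time and record which changes flip the outcome.

    `simulate(scenario)` returns True on success. `alternatives` maps each
    factor name to the values to try in place of the baseline value.
    """
    baseline_ok = simulate(baseline)
    flips = {}
    for factor, values in alternatives.items():
        for value in values:
            scenario = {**baseline, factor: value}  # change exactly one factor
            if simulate(scenario) != baseline_ok:
                flips.setdefault(factor, []).append(value)
    return baseline_ok, flips


def stub_simulator(scenario):
    """Hypothetical stand-in for the twin: low shadows cause a failed grasp."""
    return scenario["shadow_angle_deg"] >= 20


failure_scenario = {"lighting": "led", "texture": "cardboard",
                    "shadow_angle_deg": 10}
alternatives = {
    "lighting": ["fluorescent"],
    "texture": ["plastic", "shrink_wrap"],
    "shadow_angle_deg": [30, 45],
}
baseline_ok, flips = counterfactual_sweep(stub_simulator, failure_scenario,
                                          alternatives)
```

Here only changes to the shadow angle turn the failure into a success, which points the investigation at lighting geometry rather than at the object. One caveat of this approach is that it misses failures caused by two factors interacting, so a clean sweep is evidence, not proof.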

2. “Drift” Management

Physical AI suffers from “Concept Drift” faster than software. If a warehouse changes its lighting from fluorescent to LED, the visual input changes, and robot accuracy can degrade by 5-10%.

The Fix: 2026 facilities run “Shadow Mode” validation against the Digital Twin. Before a nightly model update is pushed to the physical fleet, it controls a “virtual fleet” in the facility’s Digital Twin for 4 hours. The update is deployed to the real robots only if the virtual fleet’s performance meets or exceeds the physical fleet’s KPIs.

Final Thought

The logistics facility of 2026 is no longer just a place where goods are stored; it is a computational environment. The robots are not just tools; they are embodied intelligence. For engineers and CTOs, the mandate is clear: stop building “automated” systems and start architecting adaptive ones. The era of the “blind” robot is over.