Hey there, are you puzzled by how artificial intelligence is shaking up the news world? AI in journalism is growing fast, with tools like machine learning and natural language processing changing how stories are told.

In this blog, we’ll break down nine big challenges journalists face with AI integration, making it super easy to grasp. Stick around, it’s gonna be eye-opening!

Key Takeaways

- AI in journalism raises ethical issues like transparency and bias in tools such as GPT-4, and there are no global rules yet to keep things fair.

- Accuracy is tough: 27% of journalists say maintaining credibility is their toughest hurdle, and automated reporting with AI raises the stakes.

- About 40% of journalists rely more on data analysis than they did last year, showing AI’s growing role in newsrooms.

- Job fears rise as AI changes roles, but new tasks like managing machine learning can open opportunities.

- Small media outlets face hurdles with limited access to AI, risking a digital divide in the industry.

Ethical Challenges in AI-Driven Journalism

Hey, have you ever wondered how AI, like those fancy large language models, shapes the news we read? It’s a bit of a tightrope walk for journalists to keep things fair and honest with tools like GPT-4 churning out stories!

Balancing transparency in AI-generated content

Let’s chat about how tricky it can be to stay open with AI-generated content in journalism. You see, media outlets gotta spill the beans if they’re using artificial intelligence to whip up stories.

It’s all about keeping that public trust alive, ya know? If readers don’t know whether a human or a machine wrote something, doubts creep in fast.

Heck, think of it like a recipe. You wanna know if the chef used a shortcut mix or made it from scratch. That’s why there’s a push for a big, global agreement on AI in media. Disclosing the use of tools like large language models isn’t just nice; it’s a must to keep journalistic integrity intact.

Addressing potential biases in AI algorithms

Hey there, folks, let’s chat about a sneaky problem in AI journalism, those pesky biases in algorithms. You see, AI tools, like large language models (LLMs), can pick up unfair ideas from the data they’re trained on.

It’s like teaching a kid with a warped storybook; they might repeat stereotypes without even knowing it. This algorithmic bias is a huge ethical concern, especially since there are no global rules to keep AI neutral across the board.

Now, think of AI in newsrooms as a fast but flawed helper. If we don’t watch out, it can spread misleading information or favor certain views over others. Fixing this isn’t just about tweaking code; it’s a fight to keep journalism fair and honest.

We’ve gotta dig deep, question the data, and make sure AI doesn’t twist the truth. Stick with me as we unpack more challenges like this!

Accuracy and Reliability of AI Tools

Hey there, ever wonder if those fancy AI tools in journalism always get the facts right? Let’s chat about how tricky it can be to trust machine learning outputs, like those from large language models, for spot-on news stories.

Verifying AI-generated information for credibility

Let’s chat about a big hiccup in AI-driven journalism, folks. Verifying AI-generated content for credibility isn’t a walk in the park. Sometimes, AI tools spit out “hallucinations,” a fancy word for output that sounds confident but is made up or just plain wrong.

Imagine a robot reporter making up a wild story, and you’re stuck figuring out if it’s true. That’s the challenge media outlets face every day with artificial intelligence in newsrooms.

So, how do we tackle this mess? News teams must double-check every piece of AI content before it hits your screen. They’ve got to disclose AI use to keep public trust alive. Without that honesty, fake news can sneak in, especially on social media.

It’s a tough job, but sticking to journalistic standards keeps the media landscape real and reliable for you.
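
To make that double-check concrete, here’s a minimal Python sketch of a pre-publish gate. It’s only an illustration, not any newsroom’s actual system: the `Draft` class, its fields, and the `publish_blockers` function are made-up names, and the sketch assumes a human editor marks each claim as verified by hand.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """A story draft in a hypothetical newsroom pipeline."""
    headline: str
    ai_assisted: bool            # was any AI tool used to produce the text?
    ai_disclosed: bool = False   # does the story carry a reader-facing AI label?
    claims: list[str] = field(default_factory=list)   # factual claims in the draft
    verified: set[str] = field(default_factory=set)   # claims a human has confirmed


def publish_blockers(draft: Draft) -> list[str]:
    """Return the reasons a draft is not ready to publish (empty list means ready)."""
    blockers = []
    # Every factual claim needs a human sign-off, whoever (or whatever) wrote it.
    for claim in draft.claims:
        if claim not in draft.verified:
            blockers.append(f"unverified claim: {claim}")
    # AI-assisted stories must tell readers so.
    if draft.ai_assisted and not draft.ai_disclosed:
        blockers.append("AI use not disclosed to readers")
    return blockers


if __name__ == "__main__":
    draft = Draft(
        headline="City council approves new budget",
        ai_assisted=True,
        claims=["council voted 7-2", "budget totals $4.1M"],
    )
    draft.verified.add("council voted 7-2")
    for reason in publish_blockers(draft):
        print(reason)
```

The point of the design is that the gate never decides whether a claim is true. It only refuses to let a story through until a person has checked every claim and the AI label is in place.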

Maintaining journalistic standards in automated reporting

Hey there, readers, let’s chat about keeping high standards in automated reporting with AI in journalism. It’s a big deal, since 27% of journalists say maintaining credibility is their toughest hurdle.

AI tools, like large language models, can churn out stories fast, but we must double-check every fact. A tiny slip can spread false information, and nobody wants that mess on their hands.

Speaking of data, 40% of journalists now lean more on data analysis than they did last year. That’s huge, right? But, with AI-generated content in the mix, sticking to codes of ethics is vital.

We can’t let machines mess with the truth or skip the human touch in newsrooms. So, let’s keep our eyes sharp and hold fast to what makes journalism real.

Impact on Employment and Job Roles

Hey, have you thought about how AI is shaking up the newsroom? It’s like a storm brewing, changing journalists’ jobs in ways we’re just starting to grasp, so stick around to dig deeper into this shift!

Redefining the role of journalists in AI-integrated newsrooms

Let’s talk about how AI is transforming the role of journalists in modern newsrooms. Imagine, folks, AI tools in journalism are more than just assistants; they’re revolutionizing the field.

With artificial intelligence handling tasks like data analysis and automated transcription, journalists are no longer just crafting stories. They’re taking on new roles, such as “journalist developers,” merging tech expertise with storytelling passion.

It’s almost like turning a pen into a powerful tool, right?

Here’s the key point: editorial teams bear the ethical responsibility for AI use, not individual reporters. So, while AI in newsrooms accelerates content creation, journalists must evolve to manage these machine learning tools.

Consider it like training a new dog; you’ve got to steer it properly. This change means mastering natural language processing and large language models, while ensuring the human essence remains in every story.

How exciting is this shift in the media landscape?

Addressing fears of job displacement

Hey there, folks, it’s no secret that AI in journalism is shaking things up. Many journalists worry about job displacement, and I get it, that fear stings like a bee. With tools like generative AI taking over tasks in newsrooms, the concern about losing jobs feels real, especially when automation writes stories or handles data analysis.

Truth is, small media outlets often miss out on these AI tools due to limited access, which adds to the stress. But, hang tight, because this isn’t just a dead end. AI can also create new roles, like managing machine learning systems or crafting audience engagement strategies.

So, while the worry about jobs in the media landscape looms large, there’s a chance to adapt and grow with artificial intelligence by your side.

Accountability and Legal Concerns

Hey, who’s to blame when AI spits out false info in a news story? Let’s dig into the messy, legal side of AI in journalism, and figure out how to hold the right folks accountable!

Determining responsibility for AI-produced misinformation

Let’s talk straight, folks. Figuring out who’s to blame for AI-produced misinformation is a real puzzle in today’s media landscape. When AI tools in journalism spit out wrong info, like those sneaky AI “hallucinations” that create misleading content, it’s a mess.

Editorial teams, not lone journalists, carry the ethical responsibility for this. That’s a big deal, right? It means the whole crew has to step up and watch what artificial intelligence churns out.

Now, think of AI in newsrooms as a fast but clumsy helper. If it slips up with fake facts, who gets the flak? The editors must own it, making sure AI-generated content doesn’t trick readers.

With machine learning and large language models in play, staying sharp is key. After all, trust is the name of the game, and messing up on data privacy or truth can sink a story fast.

Navigating legal implications of AI integration

Let’s dive straight into the legal mess of AI in journalism. It’s a bit like walking a tightrope: one wrong step, and you’re in hot water. With AI-generated content, issues like copyright and plagiarism pop up fast.

Who owns the story an AI writes? That’s a big puzzle. Reporters Without Borders stepped up with the Paris Charter on AI and Journalism to tackle these headaches. Their goal is to set some ground rules for fairness.

Now, think about algorithmic authorship. If a machine spits out a piece, who gets the blame for errors? Legal risks in AI in newsrooms are real, and they tie into ethical responsibility.

A tiny glitch could lead to lawsuits over misinformation. Plus, data privacy laws, like GDPR, add another layer of worry when handling sensitive info. Stick with us to see how this unfolds!

Threats to Editorial Independence

Hey, have you ever wondered if machines could sway a newsroom’s voice? With AI tools creeping into journalism, there’s a real risk they might nudge editors off their true path, especially when algorithms start prioritizing clicks over truth.

Ensuring AI tools do not influence editorial judgment

Let’s discuss a subtle issue in newsrooms today. AI tools in journalism can be incredibly useful, but they sometimes interfere with editorial judgment. Imagine it as a quiet nudge, prompting you to favor one story over another.

Algorithmic manipulation is a genuine risk here: tools tuned for clicks can promote content that doesn’t match a journalist’s own news judgment. We must remain vigilant and prevent AI from taking control.

Think of this as a constant struggle. Journalists need to hold their ground, ensuring their decisions in the media environment stem from human understanding, not merely machine learning prompts.

It’s about preserving that natural intuition, even with advanced technology like natural language processing at work. Protecting this autonomy ensures stories remain truthful and authentic for you, the reader.

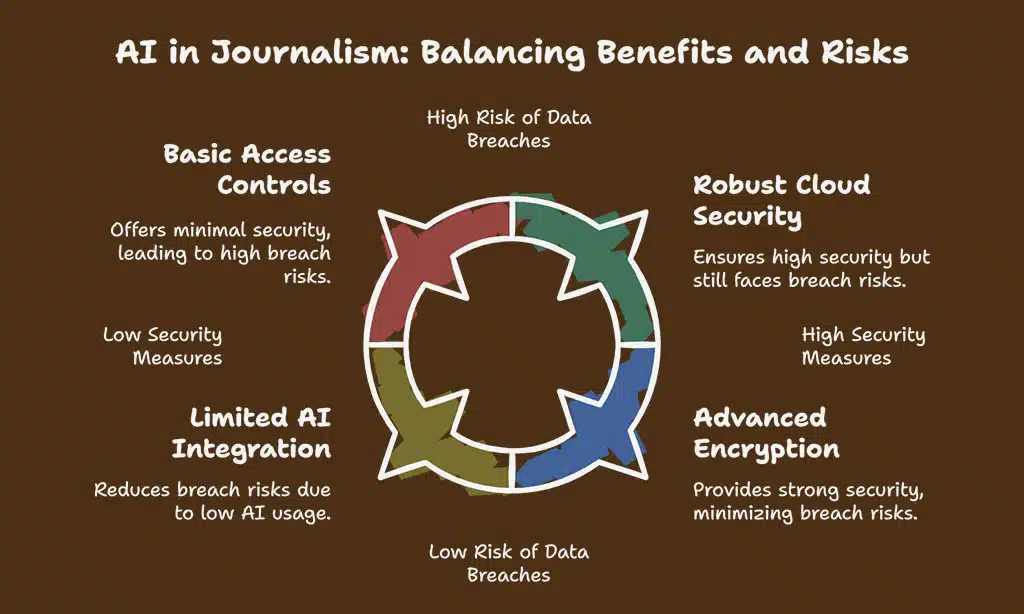

Data Privacy and Security Risks

Hey, did you know that using AI in journalism can put sensitive info at serious risk, especially when data breaches happen on cloud platforms? Stick around to dig deeper into this tricky issue!

Protecting sensitive information in AI-powered workflows

Protecting sensitive information in AI-powered workflows is a big deal for journalists. Small media outlets, especially, face huge risks with data privacy. AI in journalism must guard private details, like sources or unpublished stories, from leaks.

Think of AI as a helpful buddy, but one that might spill secrets if not watched closely.

Imagine leaving your diary on a busy street; that’s how risky it can be with AI tools in newsrooms. Data breaches can ruin trust and harm reputations fast. So, using solid cloud security and strict access rules is a must.

Let’s keep those digital doors locked tight, folks, to save our stories and our sources!
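
One way to keep that diary off the street is to scrub private details before any text leaves the newsroom for an outside AI tool. Here’s a rough Python sketch of the idea. Everything in it is an assumption for illustration: the `redact` function, the placeholder tags, and the two regex patterns are hypothetical and deliberately simple, so they will miss plenty of real-world formats and are no substitute for proper security review.

```python
import re

# Deliberately simple patterns for illustration; real data needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str, source_names: list[str]) -> str:
    """Mask emails, phone numbers, and listed source names in text."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    # Names come from a list the newsroom maintains; matching ignores case.
    for name in source_names:
        text = re.sub(re.escape(name), "[SOURCE]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    notes = "Call Jane Doe at +1 (555) 010-2233 or jane.doe@example.org."
    print(redact(notes, ["Jane Doe"]))
    # prints: Call [SOURCE] at [PHONE] or [EMAIL].
```

Redacting first means that even if the outside service gets breached, the leaked text points to placeholders instead of your sources.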

Managing risks of AI data breaches

Hey there, folks, let’s chat about a big worry in AI journalism, data breaches. With AI in newsrooms handling tons of sensitive info, keeping it safe is a real challenge. Data privacy violations can hit hard, exposing personal details or key stories.

It’s like leaving your front door wide open, inviting trouble right in.

Now, think of AI as a super-smart assistant that needs a strong lock on its toolbox. News teams must guard against leaks in AI-powered workflows to stop hackers cold. Using tight security steps for data privacy is vital in this digital game.

Stick with us as we dig into keeping that info under wraps!

Accessibility and Skill Gaps

Hey, are you curious about how some journalists struggle to keep up with fancy tech like AI tools in newsrooms? Stick around to dig deeper into this gap!

Addressing the digital divide in AI adoption

Let’s discuss the digital divide in AI adoption, folks. Not every newsroom can embrace the artificial intelligence movement. Small media outlets, in particular, face challenges with limited access to AI tools in journalism.

It’s like trying to enter a race without proper running gear. They’re left behind while larger players speed ahead with advanced tech like natural language processing and machine learning.

This disparity creates an uneven playing field, and it’s a genuine issue for fair competition in the media landscape.

Now, imagine this situation worsening without intervention. Equipping journalists with AI tool training is essential, no doubt about it. Without those skills, many reporters can’t utilize data analytics or manage cloud software for streamlined workflows.

It’s like cooking without a recipe, just estimating and hoping for a good outcome. Small outlets need support to bridge this gap, so they can compete equally with the major players.

Let’s continue advocating for equal access to innovations like generative AI and conversational chatbots.

Training journalists to effectively use AI tools

Hey there, readers, let’s chat about getting journalists up to speed with AI tools in newsrooms. It’s vital to train them well, since artificial intelligence can change how stories get told.

Many reporters need help to grasp machine learning or natural language processing, but with the right guidance, they can shine. Think of it like teaching a kid to ride a bike, wobbly at first, but soon they’re zooming along.

Smaller media outlets often miss out on this tech, sadly. Limited access to AI in journalism means not everyone gets a fair shot. So, we gotta push for training that reaches all, no matter the size of the news team.

Imagine AI as a trusty sidekick, helping with data analysis or automated transcription, making work faster and sharper for every journalist out there.

Financial and Resource Limitations

Hey, did you know that adopting AI in newsrooms can cost a pretty penny, especially for smaller outfits struggling to keep up with big players? Stick around to dig deeper into this challenge and more!

Balancing the cost of AI adoption with newsroom budgets

Small newsrooms often struggle with tight funds, and adding AI tools can feel like a punch to the gut. The price of artificial intelligence in journalism, from software to training, can drain budgets fast.

For tiny media outlets, this is a real hurdle, as they fight to keep up with bigger players who snatch up fancy tech like candy.

Think of it as trying to run a race with worn-out sneakers while others zoom by in high-tech gear. Small organizations need clever ways to balance costs, maybe by using affordable cloud computing or shared AI services.

This way, they can still play in the game of AI in newsrooms without breaking the bank.

Ensuring small organizations can compete with AI advancements

Hey there, folks, let’s chat about how tiny news outlets can keep up with the big dogs in this AI in journalism game. Financial limitations hit hard for small media groups, and grabbing hold of fancy artificial intelligence tools often feels like a wild goose chase.

But, guess what? There’s hope yet, if they play their cards right with smart, affordable options.

Think of AI as a trusty sidekick, not a budget-busting villain, for these smaller teams. Many cloud technologies and software-as-a-service solutions, like basic machine learning apps, can fit tight budgets while boosting efficiency.

Small outfits can tap into user-friendly AI for data analysis or automated transcription, leveling the playing field against giant newsrooms with deeper pockets. Let’s cheer them on as they find clever ways to shine!

The Risk of Over-Reliance on AI

Hey there, ever feel like we’re handing over the storytelling wheel to machines, especially with tools like large language models (LLMs) and generative AI taking the lead? It’s a slippery slope, folks, risking the heart of human creativity in journalism.

Stick around to dig deeper into this tricky balance!

Avoiding dependency on AI at the cost of human creativity

Let’s chat about keeping AI in check, folks. Artificial intelligence in journalism can whip up stories fast with tools like generative AI and large language models. But, here’s the rub, it can’t match human intuition or grasp the messy, beautiful layers of socio-cultural understanding.

AI lacks that emotional spark, that gut feeling we humans bring to storytelling. Overusing it risks turning news into bland, cookie-cutter content with no soul.

Think of AI as a trusty sidekick, not the star of the show. It can crunch data or draft quick reports, but the heart of a story, the raw emotion and context, that’s all you. Relying too much on AI in newsrooms might dull your creative edge.

So, strike a balance, keep your voice loud and clear, and let AI just play backup to your brilliance.

Preserving the human element in storytelling

Hey there, folks, let’s chat about something vital in journalism today. Keeping the human touch in storytelling matters a lot, especially with AI in newsrooms growing fast. Machines, like those using natural language processing, can churn out articles in a snap, but they miss the heart and soul of a story.

AI lacks emotional intelligence and can’t pick up on cultural quirks the way we do. That’s a big gap, right?

Think of it like a robot trying to tell a bedtime story. It might get the facts straight, but where’s the warmth, the little giggles, or the personal spin? Preserving the human element in storytelling means journalists must keep pouring their feelings and insights into their work.

With tools like generative AI around, it’s tempting to lean on tech, but nothing beats the real, raw connection we humans bring to the table.

Takeaways

Wrapping up, let’s think about the wild ride journalists are on with AI in newsrooms. It’s a bit like juggling flaming torches, exciting but risky. AI tools, such as machine learning and natural language processing systems, can speed up data analysis and content creation.

Yet, the human touch in storytelling must shine through all the tech dazzle. So, how do we keep that balance while tackling these nine big challenges?

FAQs on The Dark Side of AI in Journalism

1. What’s the big deal with AI in journalism today?

Hey, let’s chat about how artificial intelligence, or AI, is shaking up the media landscape. It’s like a storm brewing in newsrooms, with AI tools in journalism changing how stories get told. From generative AI to machine learning, it’s a wild ride for reporters trying to keep up.

2. How does AI mess with data privacy for journalists?

Listen up, data privacy is a hot potato when using AI in newsrooms. Those fancy systems, like natural language processing (NLP) and large language models (LLMs), can dig deep into personal info, and that’s a real worry for keeping sources safe.

3. Why are journalists sweating over ethical responsibility with AI?

Well, pal, ethical responsibility with artificial intelligence is like walking a tightrope. AI-generated content might twist facts or fuel polarization if not checked, and journalists gotta stay sharp to keep objectivity in their work. It’s a tough gig, balancing tech like conversational AI with good old-fashioned truth.

4. Can AI in journalism cause information bubbles?

You bet, my friend, information bubbles are a sneaky trap with recommendation systems and predictive analytics. These AI tricks on social platforms can lock readers into echo chambers, making it hard for journalists to reach a broad crowd for true audience engagement.

5. What’s the snag with copyright violations and AI tools?

Here’s the rub, copyright violations are a thorny issue with generative artificial intelligence. When AI, say from OpenAI’s models or Google’s tech, whips up content, it might reproduce someone else’s work without credit, landing journalists in hot water fast.

6. How does AI impact user experience in news delivery?

Yo, let’s talk user experience, or UX, in this digital economy. AI, through stuff like sentiment analysis and dynamic paywalls, can tailor news to your taste, but it might also mess with operational efficiency if the tech, maybe on software as a service (SaaS) platforms, glitches out. Journalists are stuck figuring out how to keep readers hooked without losing the plot.