The ink is barely dry on what officials now describe as a 2026 “Global AI Accord” moment, yet its aftershocks are already visible across capitals. Power is shifting from oil fields and shipping chokepoints to GPU clusters, data jurisdictions, and energy grids, turning Compute Sovereignty into the new lever of state influence.

Compute Sovereignty has moved from a technical niche to the core currency of diplomacy because AI governance is no longer mainly about ethics. It is about who controls the chips, the grid, the clouds, and the legal jurisdictions that decide who can build, run, and scale modern intelligence.

How We Got Here: From Borderless Internet To Territorial Compute

For decades, the internet trained policymakers to believe information wants to be borderless. AI is reversing that assumption in a very physical way. You can copy code instantly. You can hire talent across time zones. You can move money with a click. But you cannot conjure advanced compute out of thin air, and you cannot scale frontier-grade AI without a web of scarce, regulated inputs.

That is why the governance arc matters. Early summits pushed countries to treat frontier AI risks as shared. Major economies tried to standardize safety expectations through guiding principles and voluntary codes of conduct. The European Union moved the conversation from statements to enforcement clocks, tying market access to compliance. Meanwhile, energy regulators and national security agencies entered a policy arena once dominated by innovation ministries.

By early 2026, the diplomatic calendar added another signal. Global gatherings such as the Delhi AI Impact Summit reinforced how AI governance is being discussed alongside accountability frameworks and cross-border participation. The message was unmistakable: AI is no longer just a commercial tool. It is a geopolitical pillar.

Put these threads together, and you get what many governments now mean by a “Global AI Accord,” even without one universal treaty. It is the emerging alignment of three realities:

- Compute is strategic infrastructure.

- Data governance is geopolitical tradecraft.

- Energy capacity is AI capacity.

This is where Compute Sovereignty stops being a slogan. It becomes a survival strategy.

Silicon, Not Crude: Why Compute Sovereignty Feels Like The New Oil

The oil analogy works because oil shaped the 20th century’s growth, militaries, alliances, and coercion. But compute differs in one crucial way. Oil is fungible and tradable. Compute is conditional. It depends on hardware access, grid access, software stacks, legal permissions, and security screening.

The new chokepoints are not only straits and canals. They are advanced fabs, chip packaging capacity, subsea cables, hyperscale cloud regions, export licensing rules, and the interconnection queues that determine whether a data center can even turn on.

This is why politics is shifting away from classic border disputes toward “cluster disputes.” Governments now negotiate over where high-end GPU clusters can be deployed, which clouds can host sensitive workloads, and whose standards define what is “safe” or “trustworthy” AI.

The shift also reflects a bigger change in what states fear. In the petro era, dependence meant vulnerability to supply shocks. In the silicon era, dependence means something more intimate. It means external actors can shape your national productivity, your defense readiness, and your information ecosystem by gating access to computation and the tools built on it.

How Power Metrics Shifted In Practice

| Metric | Petro Era (Around 2022) | Silicon Era (By 2026) |
|---|---|---|
| Primary strategic asset | Crude oil and gas reserves | Compute capacity and AI infrastructure |
| Geopolitical chokepoints | Shipping lanes and pipelines | Advanced fabs, subsea cables, cloud regions |
| Core diplomatic instrument | Embargoes and sanctions | Export controls, cloud access rules |
| Sovereignty benchmark | Territorial integrity | Data and compute autonomy |
| Strategic vulnerability | Fuel supply shock | Compute denial, grid constraints |

Once this becomes the baseline, AI governance transforms into resource governance.

The Physical Substrate: Energy Is The New AI Policy

A useful way to understand 2026 AI geopolitics is to stop thinking like a software engineer and start thinking like a utilities planner. AI runs on megawatts. Training and inference require continuous power, long-term procurement, and stable grid connection.

That reality turns the “latency of the ocean” into a strategic tax and makes permitting speed feel like a national security capability.

Electricity demand from AI-driven data centers is projected to rise dramatically toward the end of this decade. That projection alone changes the power map:

- Countries that can add grid capacity faster will scale AI faster.

- Countries with cheaper, cleaner baseload can attract strategic workloads.

- Energy diplomacy starts blending into compute diplomacy.

This helps explain why Compute Sovereignty increasingly resembles industrial policy and alliance strategy rolled into one.
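To make "AI runs on megawatts" concrete, here is a back-of-envelope sketch of how a planner might size the grid connection for a cluster. The function name and all input figures are illustrative assumptions, not data from any real facility. PUE (Power Usage Effectiveness) is the standard ratio of total facility power to IT power.

```python
def facility_power_mw(gpu_count: int, watts_per_gpu: float, pue: float) -> float:
    """Estimate total facility draw in megawatts for a GPU cluster.

    watts_per_gpu: assumed draw per accelerator, including its share of the server.
    pue: Power Usage Effectiveness (total facility power / IT power), always >= 1.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_power_watts = gpu_count * watts_per_gpu
    return it_power_watts * pue / 1_000_000


# Illustrative inputs only, not measurements of any real site.
demand = facility_power_mw(gpu_count=100_000, watts_per_gpu=1_000, pue=1.3)
print(f"{demand:.0f} MW")
```

Under these assumed inputs the cluster needs on the order of a hundred megawatts of continuous supply, which is why interconnection queues and permitting timelines end up gating AI capacity.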

Subsea Cables, Latency, And The New Geography Of Influence

For years, geopolitics obsessed over chokepoints you could point to on a map. In the silicon era, some of the most strategic chokepoints are harder to visualize: subsea cables, landing stations, internet exchange points, and the physical paths that connect GPU clusters to data sources and end users. Compute Sovereignty isn’t only about owning GPUs. It’s about controlling the routes that make compute usable at scale.

Why “Latency Of The Ocean” Becomes A Strategic Tax

The farther your compute is from your users and datasets, the more you pay in:

- Speed penalties (slower inference, weaker real-time applications)

- Reliability risks (single cable disruptions can degrade entire regions)

- Security exposure (more transit points, more interception and coercion risk)

- Compliance complexity (data crossing borders triggers legal obligations)

This is why states increasingly treat connectivity like energy: not just a utility, but leverage. If oil pipelines once decided who could fuel an economy, data routes now influence who can run AI reliably and legally.
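The speed penalty can be put in rough numbers. Light in optical fiber travels at roughly 200,000 km per second, so distance alone sets a floor on round-trip time before any routing, queuing, or processing delay is added. The sketch below computes that floor; the route lengths and labels are hypothetical examples.

```python
FIBER_KM_PER_MS = 200.0  # light in fiber covers roughly 200 km per millisecond


def min_round_trip_ms(route_km: float) -> float:
    """Lower bound on round-trip time over a fiber route of the given length."""
    return 2 * route_km / FIBER_KM_PER_MS


# Hypothetical route lengths chosen for illustration.
for label, km in [("domestic region", 500), ("transoceanic region", 10_000)]:
    print(f"{label}: at least {min_round_trip_ms(km):.0f} ms per round trip")
```

Real-world latency is higher than this physical minimum, but the gap between a nearby region and one across an ocean is already built into the physics.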

Where The Pressure Builds First

Certain AI uses are especially sensitive to latency and routing control:

- Public services AI: identity, benefits systems, health triage, emergency response

- Financial AI: fraud detection, real-time risk scoring, automated compliance

- Industrial AI: smart factories, ports, grid optimization

- Defense-adjacent inference: surveillance analysis, logistics, decision support

For these workloads, states favor “trusted routes” that keep critical inference near home.

What Countries Are Quietly Doing About It

You can see a common playbook emerging:

- More domestic landing stations and stronger control over cable access

- National cloud region incentives (tax, power, permitting fast lanes)

- Regional peering mandates to keep traffic local

- Redundancy planning (multiple routes so one cut doesn’t cripple services)

Bottom line: In the AI era, geography matters again—not because land borders are expanding, but because compute, data, and connectivity must physically meet.

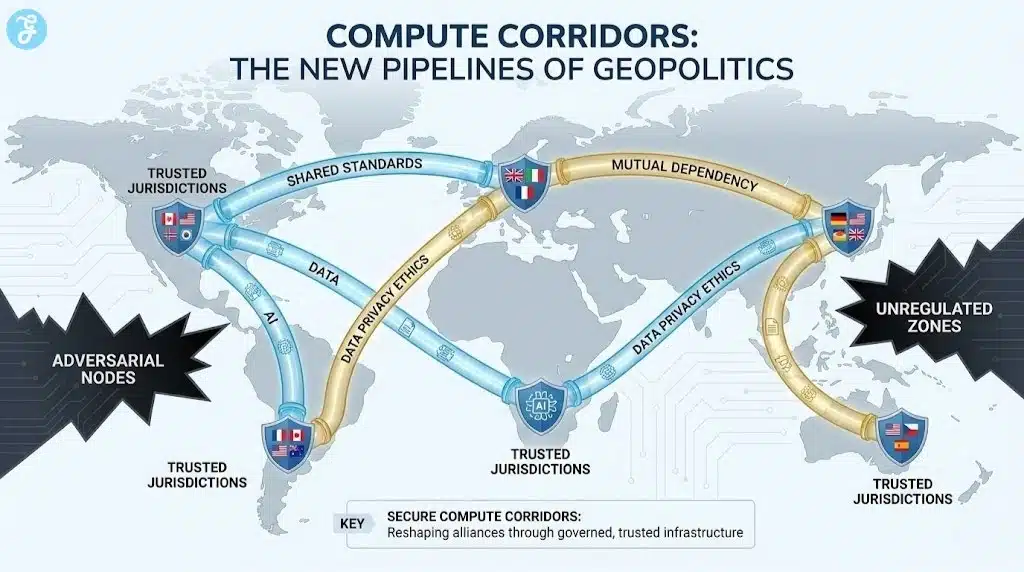

Compute Corridors: The New Pipelines

One of the most consequential outcomes of the 2026 Global AI Accord mindset is the recognition of compute corridors.

These corridors function as the 21st-century equivalent of energy pipelines. They ensure that AI training and inference workloads can move between aligned jurisdictions without transiting adversarial nodes, violating local laws, or exposing sensitive datasets.

The result is profound: digital information is no longer treated as universally borderless. It is increasingly territorial, routed through trusted infrastructure and governed spaces.

Corridors do not just reduce friction. They reshape alliances.

Countries are no longer only security partners or trade partners. They are compute partners, bound by shared standards and mutual dependency.
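In network terms, a compute corridor is a routing constraint: traffic may only transit nodes that the partners trust. A minimal sketch of that idea, assuming a simple graph of links and a set of trusted nodes, is a breadth-first search that ignores everything outside the trusted set. The node names and topology are hypothetical placeholders.

```python
from collections import deque


def corridor_path(links, trusted, source, target):
    """Breadth-first search for a route that only transits trusted nodes.

    links: dict mapping a node to the nodes it connects to directly.
    Returns the list of hops, or None if no trusted-only route exists.
    """
    if source not in trusted or target not in trusted:
        return None
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in links.get(path[-1], []):
            if nxt in trusted and nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


# Hypothetical topology: names are placeholders, not real jurisdictions.
links = {"A": ["X", "B"], "B": ["C"], "X": ["D"], "C": ["D"]}
trusted = {"A", "B", "C", "D"}
print(corridor_path(links, trusted, "A", "D"))
```

In this toy topology the shortest route runs through the untrusted node X, so the corridor forces a longer path through B and C. That extra distance is the price of routing by alignment rather than by geography.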

Decentralized GPU Clusters: Strategic Reserves In The AI Age

Centralized supercomputers made sense when the goal was a national prestige machine. In the inference-heavy AI economy, centralization becomes brittle.

Demand for AI inference has exploded across healthcare, government services, finance, logistics, and media. This pushes compute toward distributed models that can pool underutilized GPUs and route workloads dynamically.

Decentralized GPU networks illustrate how quickly this market is maturing. These systems aggregate compute across thousands of smaller nodes, creating “Uber-style” compute marketplaces.
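The pooling and dynamic routing described above can be sketched as a small placement function. This is an illustrative toy under stated assumptions, not how any particular marketplace works: the `Node` type, the jurisdiction labels, and the "most idle GPUs wins" rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    jurisdiction: str
    free_gpus: int


def place_job(nodes, gpus_needed, allowed_jurisdictions):
    """Pick the eligible node with the most idle GPUs and reserve capacity on it.

    Returns the chosen node's name, or None if nothing eligible has room.
    """
    eligible = [
        n for n in nodes
        if n.jurisdiction in allowed_jurisdictions and n.free_gpus >= gpus_needed
    ]
    if not eligible:
        return None
    best = max(eligible, key=lambda n: n.free_gpus)
    best.free_gpus -= gpus_needed  # reserve the capacity for this job
    return best.name


# Hypothetical pool: a sensitive job is restricted to domestic nodes.
pool = [
    Node("university-lab", "home", 8),
    Node("regional-operator", "home", 32),
    Node("foreign-cloud", "abroad", 512),
]
print(place_job(pool, 16, {"home"}))
```

The foreign node has far more capacity, but the jurisdiction filter keeps the sensitive job on a domestic operator. The trade-off is that domestic capacity runs out sooner.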

Why Does This Matter Geopolitically?

Because decentralized clusters can let middle powers achieve partial-stack sovereignty. They may not fabricate chips or build trillion-dollar hyperscale regions, but they can still keep sensitive workloads domestic, support public services, and reduce reliance on foreign hyperscalers.

In the 2026 policy mindset, controlling decentralized compute starts to look like defending a strategic reserve.

Not oil underground, but compute capacity that can be mobilized during crises.

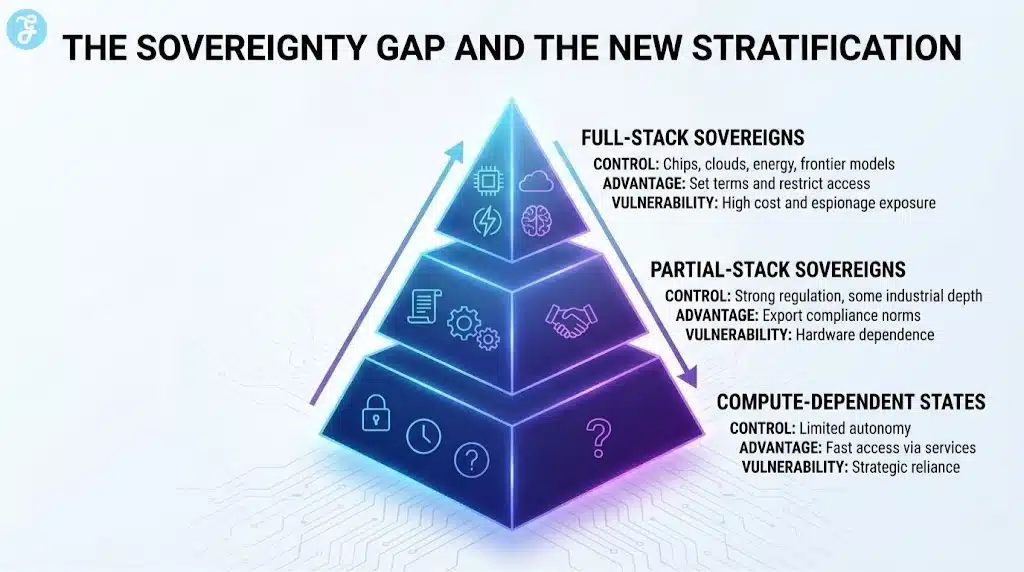

The Sovereignty Gap And The New Stratification

The most important political concept in this debate is the sovereignty gap. That gap is the distance between a nation’s legal claims over data and its material capacity to process and protect that data under its own rules.

This creates a hierarchy that will shape diplomacy, trade negotiations, and development pathways.

| Tier | What They Control | Advantage | Vulnerability |
|---|---|---|---|
| Full-stack sovereigns | Chips, clouds, energy, frontier models | Set terms and restrict access | High cost and espionage exposure |
| Partial-stack sovereigns | Strong regulation, some industrial depth | Export compliance norms | Hardware dependence |
| Compute-dependent states | Limited autonomy | Fast access via services | Strategic reliance |

The point is unavoidable: legal sovereignty without compute capacity is symbolic.

In the AI age, power flows to those who can run the models, not just regulate them.

Export Controls And The Hardening Of The Stack

Compute sovereignty becomes most visible when it turns into denial.

Export controls have tightened worldwide, restricting access to advanced chips, semiconductor equipment, and high-end AI training infrastructure.

The effect is predictable:

- The more controls are tightened, the more states invest in local capability.

- The more local capability expands, the more the world fragments into compute blocs.

- The more blocs form, the more AI governance becomes alliance governance.

Semiconductors are no longer neutral trade goods. They are strategic instruments.

Compute becomes the means through which diplomacy is enforced.

The New Bargain: “Compute-For-Data” And “Compute-For-Energy” Diplomacy

Once compute becomes scarce and strategic, diplomacy starts sounding like procurement. The emerging pattern is not “aid” in the traditional sense. It’s swaps and structured dependency: compute access in exchange for data access, energy access, or strategic alignment.

How The Bargain Works In Practice

Think of three currencies nations can trade:

- Compute (GPU capacity, cloud access, preferential pricing, dedicated clusters)

- Data (health datasets, language corpora, mobility data, public records)

- Energy & Land (cheap power, permits, water access, industrial zones)

A compute-rich state (or a compute-rich corporate ecosystem) can offer “trusted compute capacity” to a partner. In return, it may secure:

- preferential market access,

- strategic basing rights for data centers,

- influence over standards,

- or privileged access to valuable sovereign datasets.

This isn’t necessarily sinister. It can be mutually beneficial. But it creates a new risk category: policy capture through infrastructure dependence.

Where The Deals Create Hidden Vulnerabilities

Even “friendly” compute dependence can become leverage when:

- export-control rules change overnight,

- cloud compliance requirements tighten,

- or political leadership shifts.

This is why many mid-sized states are pursuing partial-stack sovereignty: enough domestic compute and governance capacity to avoid being forced into a single dependency relationship.

A Practical Framework: What To Watch In Any AI Partnership

Use this checklist to judge whether a “compute partnership” strengthens sovereignty or weakens it:

- Who controls the kill switch? (shutdown authority in crisis)

- Where do logs and telemetry live? (visibility into model behavior)

- Who owns the model weights and fine-tunes? (real control vs rented intelligence)

- What happens if sanctions expand? (continuity planning)

- Can the workloads be moved quickly? (exit option)
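One way to apply the checklist consistently across several proposed deals is to record each answer as a flag and list what remains unresolved. The sketch below is an assumption about how an analyst might encode it. The class and field names are hypothetical and simply mirror the five questions.

```python
from dataclasses import dataclass, fields


@dataclass
class PartnershipTerms:
    """One flag per checklist question; True means the host state keeps control."""
    host_controls_shutdown: bool
    logs_stored_domestically: bool
    host_owns_weights_and_finetunes: bool
    continuity_plan_for_sanctions: bool
    workloads_portable: bool


def open_risks(terms: PartnershipTerms) -> list[str]:
    """Return the names of checklist items the deal does not satisfy."""
    return [f.name for f in fields(terms) if not getattr(terms, f.name)]


# Hypothetical deal used purely as an example.
deal = PartnershipTerms(
    host_controls_shutdown=False,
    logs_stored_domestically=True,
    host_owns_weights_and_finetunes=False,
    continuity_plan_for_sanctions=True,
    workloads_portable=True,
)
print(open_risks(deal))
```

A flat list of failed items is deliberately simple. Weighting the questions would require judgments the checklist itself does not make.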

Common Partnership Models And Their Tradeoffs

| Partnership Model | What You Gain | What You Risk |
|---|---|---|
| Foreign hyperscaler region inside your country | fast deployment, mature tooling | vendor lock-in, policy leverage |
| Joint “trusted compute corridor” with allies | scale + political protection | bloc dependency, shared failure risk |
| Decentralized pooled compute via local operators | resilience, local routing | uneven performance, governance complexity |
| Sovereign cloud built fully domestic | control, compliance clarity | high cost, slower iteration |

Data Privacy Ethics As A Diplomatic Barrier

The 2026 Global AI Accord is as much about ethics as it is about hardware.

Privacy rules do not only protect individuals. They define market access and cross-border trust.

In the EU model, ethics becomes enforceable governance. In other models, sovereignty means industrial acceleration or state control.

The geopolitical effect is that ethics becomes a trade filter.

If you want to deploy AI systems into regulated markets, you must meet transparency obligations, accountability requirements, and safety expectations.

This creates compliance corridors where aligned jurisdictions can exchange services and data with less friction.

Ethics is no longer only moral. It is commercial and strategic.

How Major Governance Models Compete

| Governance style | What it prioritizes | What it exports | What it risks |
|---|---|---|---|
| EU-style compliance gravity | Rights and transparency | Market access standards | Slower innovation cycles |
| U.S.-style security-first lead | Capability dominance | Export controls and selective alliances | Fragmentation |
| China-style industrial sovereignty | State-controlled scaling | Centralized ecosystems | Trust deficits abroad |
| Global South impact-first model | Development outcomes | Legitimacy and equity framing | Resource constraints |

Winners, Losers, And The New Silicon Order

Scarce strategic resources always produce winners, losers, and brokers.

| Stakeholder | Likely position | Why it shifts |
|---|---|---|
| Advanced chip and fab players | Winners | They sit on bottlenecks |
| Countries with fast power expansion | Winners | Grid speed becomes AI speed |
| Trustworthy regulatory jurisdictions | Winners | They attract sensitive workloads |
| Middle powers with smart strategy | Brokers | They trade alignment for access |
| Compute-dependent states | Vulnerable | They risk service-based dependency |
| Small firms without data access | Squeezed | Scale and compliance costs rise |

States will increasingly measure readiness not only by GDP or defense spending, but by compute availability, deployment speed, and legal credibility.

The Counterargument: Why Interdependence Still Matters

A fair opinion piece must admit the other side.

Full self-sufficiency is brutally expensive. It may also be technologically unrealistic for most states.

Many governments want partnership structures that preserve audit access, kill switches, and exit options.

The real debate is not globalism versus nationalism. It is what form of interdependence is acceptable. The fight is over trust.

What Happens Next: The Coming Grid And Cluster Battles

If 2023 and 2024 were about waking up, and 2025 was about rule-writing, then 2026 is about infrastructure stress tests.

Three developments will define the next stage:

AI Policy Merges With Energy Policy

The countries that build generation and transmission fastest will gain a compounding advantage.

The next AI crisis may not be a chip crisis. It may be a grid crisis.

Compute Corridors Become Alliance Infrastructure

Expect more formal trusted compute arrangements between allies, built on standards, screening, and shared investment.

Decentralized Clusters Expand The Middle Power Playbook

Distributed compute and sovereign cloud models will help states reduce exposure to dependency, even if they remain hardware importers.

This is the most underrated trend because it changes who gets to matter.

The petro era favored those sitting on reserves. The compute era may favor those who can orchestrate and govern distributed capability.

What This Means For The Next 18 Months

Expect more deals that look like trade agreements but are really compute agreements:

- Compute corridors solidifying among allies

- Sovereign data zone enforcement shaping where models can operate

- Energy-backed AI zones offering “power + permits” as investment magnets

- Standard-setting battles where compliance frameworks become geopolitical exports

In the petro era, power moved through barrels and pipelines. In the silicon era, power moves through compute capacity, routing control, and enforceable data rules. The nations that understand the new bargain—and insist on real exit options—will keep their sovereignty intact while still gaining the benefits of AI.

The New Rules Of Geopolitical Influence

The Global AI Accord of 2026 matters less as a single document than as a turning point in elite consensus. States now treat compute as a strategic asset. They tie it to national security. They regulate it as infrastructure. They negotiate it as diplomacy. Oil created a world where chokepoints could be blockaded. Compute is creating a world where chokepoints can be licensed, denied, throttled, or priced out. The contest will not only be about who has the best model. It will be about who controls the stack that makes models deployable at scale. In that world, Compute Sovereignty is not a buzzword. It is the modern definition of leverage.