Artificial intelligence (AI) is no longer a futuristic concept; for many companies it is a business imperative. Companies across industries use AI to drive innovation, optimize operations, and personalize customer experiences. However, building AI applications that scale with growing data volumes, user demand, and increasingly complex use cases is no small feat. At Timspark, a leading AI solutions development company, we’ve tackled these challenges head-on in real-world projects. This article shares key lessons from that work, with actionable insights for businesses that want to use AI effectively.

Why Scalability Matters in AI Applications

Scalability is the backbone of any successful AI application. As businesses grow, their AI systems must handle increased data inputs, user interactions, and computational demands without compromising performance. A scalable AI application ensures:

- Consistent Performance: Maintains speed and reliability as usage spikes.

- Cost Efficiency: Optimizes resource usage to avoid skyrocketing infrastructure costs.

- Future-Proofing: Adapts to evolving business needs and technological advancements.

Drawing from Timspark’s experience, here are the critical lessons we’ve learned when building AI applications designed to scale.

Lesson 1: Design with Modularity in Mind

One of the biggest challenges in AI development is managing complexity. A modular architecture is essential for scalability, allowing developers to isolate components, update them independently, and integrate new features seamlessly.

Real-World Example

In a recent project for a retail client, Timspark built an AI-powered recommendation engine. By designing the system with modular components—data ingestion, model training, and inference—we enabled the client to scale the application across multiple regions. When the client expanded to new markets, we could update the data pipeline without overhauling the entire system.

Key Takeaway

Adopt a microservices-based architecture for AI applications. Break down the system into independent modules (e.g., data processing, model serving, and API layers) to ensure flexibility and scalability.
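To make the idea of independent modules concrete, here is a minimal sketch in Python. It is not the code from the retail project; the class names (`InMemoryIngestor`, `PopularityRecommender`, `RecommendationService`) and the toy popularity logic are assumptions chosen for illustration. The point is that the API layer depends only on small interfaces, so either module can be replaced without touching the others.

```python
from dataclasses import dataclass
from typing import Protocol


class Ingestor(Protocol):
    """Interface for the data ingestion module."""
    def load(self) -> list[dict]: ...


class Recommender(Protocol):
    """Interface for the model serving module."""
    def recommend(self, user_id: str, events: list[dict]) -> list[str]: ...


@dataclass
class InMemoryIngestor:
    """Hypothetical ingestion module backed by a plain list."""
    events: list[dict]

    def load(self) -> list[dict]:
        return list(self.events)


class PopularityRecommender:
    """Hypothetical model module: ranks items by overall purchase count."""

    def recommend(self, user_id: str, events: list[dict]) -> list[str]:
        counts: dict[str, int] = {}
        for event in events:
            counts[event["item"]] = counts.get(event["item"], 0) + 1
        # Skip items the user already has; break ties alphabetically.
        seen = {e["item"] for e in events if e["user"] == user_id}
        ranked = sorted(counts, key=lambda item: (-counts[item], item))
        return [item for item in ranked if item not in seen]


@dataclass
class RecommendationService:
    """API layer: depends only on the interfaces, not on concrete modules."""
    ingestor: Ingestor
    recommender: Recommender

    def handle(self, user_id: str) -> list[str]:
        return self.recommender.recommend(user_id, self.ingestor.load())


events = [
    {"user": "u1", "item": "shoes"},
    {"user": "u2", "item": "shoes"},
    {"user": "u2", "item": "hat"},
    {"user": "u3", "item": "hat"},
    {"user": "u3", "item": "scarf"},
]
service = RecommendationService(InMemoryIngestor(events), PopularityRecommender())
print(service.handle("u1"))
```

Swapping `InMemoryIngestor` for a module that reads from a regional data store would require no change to `RecommendationService`, which is the property that makes market-by-market rollouts manageable.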

Lesson 2: Prioritize Data Infrastructure

AI thrives on data, but poor data infrastructure can cripple scalability. A robust data pipeline ensures that your AI application can ingest, process, and store massive datasets efficiently.

Real-World Example

For a healthcare client, Timspark developed an AI system to analyze patient records for predictive diagnostics. Initially, the system struggled with slow data retrieval due to a monolithic database. By transitioning to a distributed data architecture using Apache Kafka for real-time streaming and Elasticsearch for search, we reduced latency by 40% and scaled the system to handle millions of records.

Key Takeaway

Invest in a scalable data infrastructure early. Use tools like Apache Spark, Apache Kafka, or cloud-native solutions (e.g., Amazon Redshift, Google BigQuery) to manage large-scale data flows.
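One pattern that streaming pipelines commonly rely on is processing records in small batches rather than loading everything at once. The sketch below illustrates that pattern in plain Python. It does not use the Kafka or Spark APIs; `record_stream` is a hypothetical stand-in for a real source such as a message broker, and the record shape is an assumption.

```python
from collections.abc import Iterable, Iterator


def micro_batches(records: Iterable[dict], batch_size: int) -> Iterator[list[dict]]:
    """Group a (possibly unbounded) stream of records into fixed-size batches."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch: list[dict] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch


def record_stream(n: int) -> Iterator[dict]:
    """Hypothetical source; a real system would read from a broker or a file."""
    for i in range(n):
        yield {"record_id": i}


batch_sizes = [len(b) for b in micro_batches(record_stream(5), batch_size=2)]
print(batch_sizes)
```

Because both functions are generators, memory use stays bounded by the batch size no matter how many records flow through.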

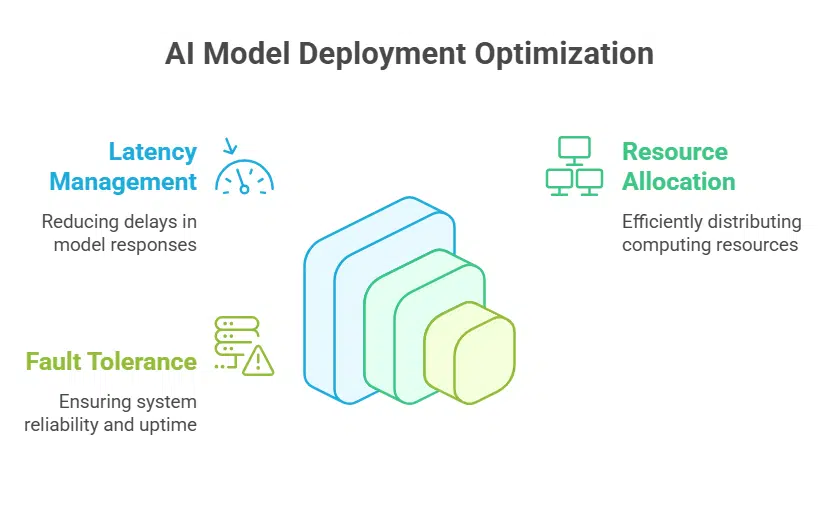

Lesson 3: Optimize Model Deployment for Scalability

Deploying AI models at scale requires careful consideration of latency, resource allocation, and fault tolerance. A poorly optimized deployment can lead to bottlenecks, especially under heavy workloads.

Real-World Example

In a financial services project, Timspark deployed an AI fraud detection system that processed thousands of transactions per second. Using Kubernetes for container orchestration and TensorFlow Serving for model deployment, we achieved sub-second inference times and automatic scaling during peak traffic.

Key Takeaway

Use containerization and orchestration (e.g., Docker for containers, Kubernetes for orchestration) together with model-serving frameworks (e.g., TensorFlow Serving, ONNX Runtime) to ensure efficient, scalable deployments. Monitor performance metrics to fine-tune resource allocation.
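It helps to understand how automatic scaling decides on a replica count. The Kubernetes Horizontal Pod Autoscaler documents its core rule as desired replicas = ceil(current replicas × current metric / target metric). The sketch below reproduces that rule in Python as a simplified model only: the function name, the replica bounds, and the example numbers are assumptions, and the real autoscaler applies additional logic (for example stabilization windows) that is omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified model of the Horizontal Pod Autoscaler scaling rule."""
    ratio = current_metric / target_metric
    # Within the tolerance band, leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU against a 60% target
print(desired_replicas(4, current_metric=90, target_metric=60))
```

Working through a few scenarios like this before load testing makes it easier to choose sensible targets and replica limits.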

Lesson 4: Embrace Cloud-Native Solutions

Cloud platforms offer unparalleled scalability for AI applications, providing on-demand resources and managed services that reduce operational overhead.

Real-World Example

For a logistics client, Timspark built an AI-driven route optimization tool. By leveraging Amazon SageMaker for model training and AWS Lambda for serverless inference, we reduced infrastructure costs by 30% while scaling the application to support a growing fleet of vehicles.

Key Takeaway

Choose cloud-native tools that align with your AI workload. Platforms like AWS, Google Cloud, and Azure offer managed services for data storage, model training, and inference, enabling seamless scaling.
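As an illustration of serverless inference, the sketch below uses the `(event, context)` handler shape that AWS Lambda expects for Python functions. Everything else is an assumption made for the example: the `MODEL` placeholder, the `estimate_minutes` helper, and the request fields are hypothetical and are not taken from the logistics project. The event is assumed to carry a JSON string in `body`, as with an HTTP proxy integration.

```python
import json

# Loaded once per execution environment, so warm invocations reuse it.
# A real deployment would load model weights here; this dict is a placeholder.
MODEL = {"avg_speed_kmh": 40.0}


def estimate_minutes(distance_km: float) -> float:
    """Hypothetical stand-in for model inference."""
    return distance_km / MODEL["avg_speed_kmh"] * 60.0


def lambda_handler(event, context):
    """Entry point in the (event, context) shape used by AWS Lambda for Python."""
    try:
        payload = json.loads(event.get("body") or "{}")
        distance_km = float(payload["distance_km"])
    except (KeyError, TypeError, ValueError):
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "distance_km is required"}),
        }
    eta = round(estimate_minutes(distance_km), 1)
    return {"statusCode": 200, "body": json.dumps({"eta_minutes": eta})}


# Local smoke test; in production the platform calls lambda_handler directly.
response = lambda_handler({"body": json.dumps({"distance_km": 20})}, None)
print(response)
```

Keeping model loading at module scope rather than inside the handler is a common way to limit the cost of cold starts.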

Lesson 5: Monitor and Iterate Continuously

Scalability isn’t a one-time achievement—it requires ongoing monitoring and optimization. AI applications must adapt to changing data patterns, user behaviors, and business goals.

Real-World Example

In an e-commerce project, Timspark implemented an AI chatbot that initially struggled with response accuracy as the volume of user queries grew. By integrating real-time monitoring with Prometheus and Grafana, we identified performance bottlenecks and retrained the model iteratively, improving accuracy by 25%.

Key Takeaway

Implement robust monitoring tools to track system performance, model accuracy, and resource usage. Use A/B testing and continuous integration/continuous deployment (CI/CD) pipelines to iterate quickly.
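Tracking model accuracy over time can start very simply. The sketch below keeps a rolling window of prediction outcomes and flags when accuracy drops below a threshold. The class name, window size, and threshold are assumptions chosen for illustration; in practice a value like this would typically be exported to a monitoring system rather than printed.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent predictions with known outcomes."""

    def __init__(self, window_size: int = 100, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.outcomes: deque[bool] = deque(maxlen=window_size)

    def record(self, predicted: str, actual: str) -> None:
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Only decide once the window is full, to avoid noisy early alerts.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold


monitor = RollingAccuracyMonitor(window_size=4, threshold=0.75)
for predicted, actual in [("refund", "refund"), ("order", "order"),
                          ("refund", "shipping"), ("order", "refund")]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_retraining())
```

A signal like `needs_retraining()` can then feed a CI/CD pipeline that retrains and redeploys the model.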

Lesson 6: Address Ethical and Regulatory Challenges

Scalable AI applications must comply with data protection regulations such as GDPR or CCPA and meet ethical standards, especially when handling sensitive data. Failing to address these can limit scalability due to legal or reputational risks.

Real-World Example

For a European client, Timspark developed an AI marketing tool that personalized campaigns based on user data. To ensure GDPR compliance, we implemented data anonymization and user consent mechanisms, enabling the client to scale the tool across multiple countries without regulatory hurdles.

Key Takeaway

Incorporate ethical AI practices, such as transparency, fairness, and data privacy, into your development process. Use tools like IBM’s AI Fairness 360 or Google’s What-If Tool to audit models for bias.
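To show what a basic bias audit measures, here is a small sketch of one common fairness metric, the demographic parity difference: the gap between groups in the rate of positive predictions. This is plain Python, not the API of AI Fairness 360 or the What-If Tool, and the function names and sample data are assumptions made for the example.

```python
def positive_rate(predictions: list[int], groups: list[str], group: str) -> float:
    """Share of positive (1) predictions among members of one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    if not selected:
        raise ValueError(f"no samples for group {group!r}")
    return sum(selected) / len(selected)


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    rates = [positive_rate(predictions, groups, g) for g in set(groups)]
    return max(rates) - min(rates)


# Hypothetical audit sample: 1 means the user was shown a premium offer.
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(predictions, groups)
print(gap)
```

A gap near zero means the groups receive positive predictions at similar rates. What counts as an acceptable gap depends on the use case and should be agreed with legal and domain experts.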

Common Pitfalls to Avoid

While building scalable AI applications, watch out for these common mistakes:

- Overlooking Latency: Failing to optimize for low-latency inference can degrade user experience.

- Underestimating Costs: Scaling without cost optimization can lead to unsustainable expenses.

- Ignoring Model Drift: Changes in data patterns can reduce model accuracy over time.

- Neglecting Security: Unsecured AI systems are vulnerable to attacks, especially at scale.

By proactively addressing these pitfalls, you can build AI applications that scale reliably and securely.

Conclusion

Building scalable AI applications requires a strategic approach that balances architecture, data, deployment, and ethics. By designing modular systems, optimizing data pipelines, leveraging cloud-native tools, and prioritizing continuous improvement, businesses can unlock the full potential of AI. At Timspark, we’ve seen firsthand how these principles drive success in real-world projects, from retail to healthcare to logistics. As AI continues to shape the future, investing in scalability today will position your business for long-term growth and innovation.

Ready to build your next scalable AI application? Contact Timspark to turn your vision into reality.
