A federal judge has granted preliminary approval for a $1.5 billion settlement between San Francisco-based artificial intelligence startup Anthropic and a coalition of authors who accused the company of amassing a vast library of pirated books to train its AI chatbot, Claude. The case represents one of the largest copyright settlements in the AI sector and is expected to shape how technology companies source and use copyrighted content in the years ahead.

Background of the Lawsuit

The class action lawsuit was filed by authors including Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson, with support from the Authors Guild, a leading advocacy organization for writers. The plaintiffs alleged that Anthropic illegally copied and stored hundreds of thousands of books, many of them pirated, in order to train Claude, its rival to OpenAI’s ChatGPT.

The lawsuit argued that this practice robbed authors of both credit and compensation. While AI developers rely on enormous text datasets to train models capable of generating human-like responses, plaintiffs claimed that creating a permanent library of pirated works went far beyond permissible use.

The Settlement Terms

The settlement agreement is expansive and addresses a range of disputed practices:

- Scope of Works Covered: The deal applies to approximately 465,000–500,000 books.

- Compensation: Eligible authors will receive around $3,000 per work, a figure that amounts to four times the $750 minimum statutory damages per work available under U.S. copyright law. This payout is considered one of the most substantial in any copyright settlement involving AI.

- Destruction of Pirated Files: Anthropic has agreed to permanently delete the pirated digital library it compiled and all derivative copies. The company will, however, retain the right to use books that it had legally purchased or licensed.

- Claims Process: A settlement website will soon list the covered works, provide claim forms, and outline deadlines. Only authors who actively file claims will be compensated. Those who take no action may not receive payment.

The $1.5 billion compensation fund will be distributed after the court approves the claims, administrative costs, and attorney fees.

Judge Alsup’s Earlier Fair Use Ruling

In June 2025, U.S. District Judge William Alsup issued a partial ruling in the case that set an important precedent for AI training. He found that the use of lawfully obtained works in AI training can qualify as transformative fair use, drawing parallels to how humans learn by reading and processing information.

However, he simultaneously ruled that the practice of downloading pirated books and maintaining them in a permanent library could not be excused under fair use. The settlement now addresses this specific piracy issue, leaving the broader fair use question largely intact for future litigation.

This nuanced distinction has major implications: while AI developers may still argue that training on licensed or purchased data qualifies as fair use, they cannot rely on pirated or unauthorized sources to shield their practices from legal liability.

Why This Case Matters

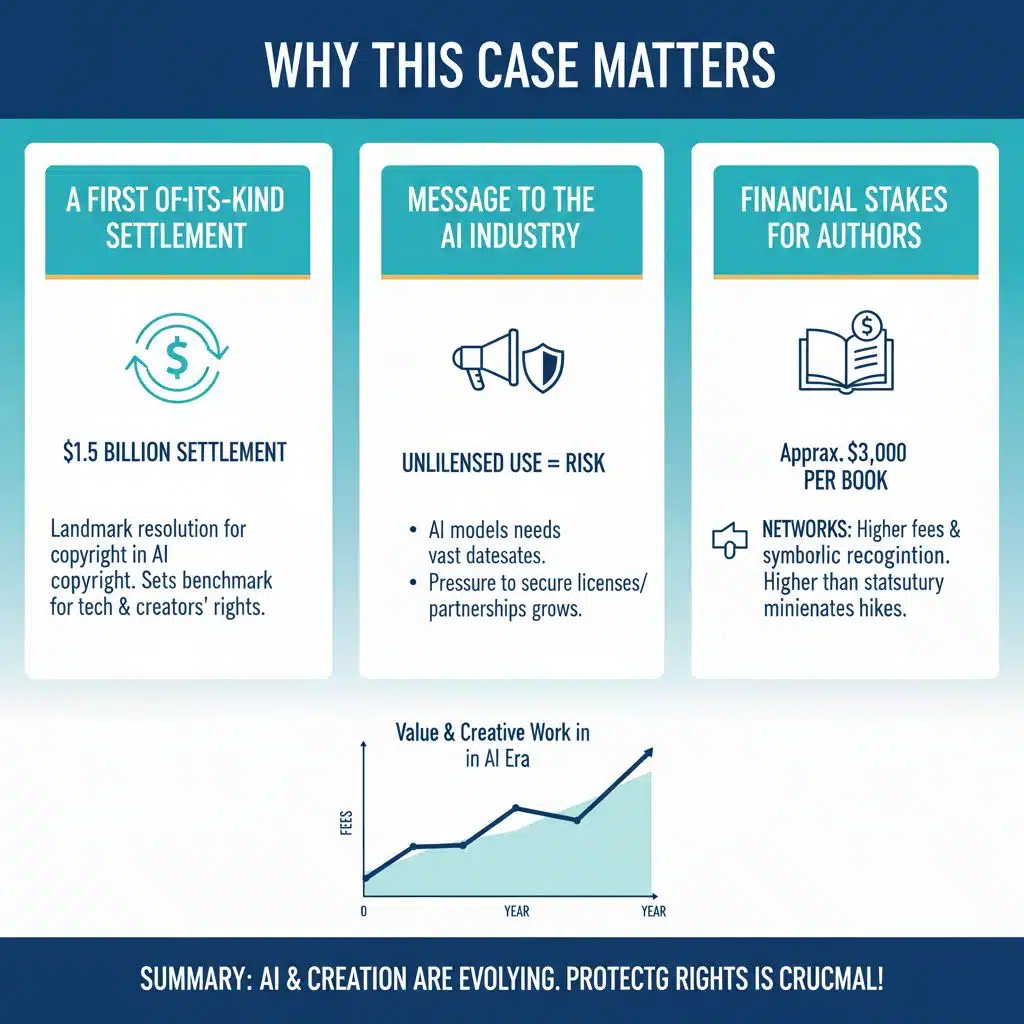

A First-of-Its-Kind Settlement

This $1.5 billion settlement is one of the first large-scale resolutions of a copyright dispute in the AI industry. It is likely to serve as a benchmark for how courts and companies navigate the balance between technological innovation and creators’ rights.

Message to the AI Industry

The agreement signals to AI firms that unlicensed use of copyrighted works carries real financial risks. With AI models depending on vast datasets to improve performance, the pressure to secure legitimate licenses or partnerships with publishers is likely to grow.

Financial Stakes for Authors

For authors, many of whom struggle financially, the compensation represents not only monetary relief but also symbolic recognition of the value of their work in the AI era. The payout, at approximately $3,000 per book, is considerably higher than statutory minimum damages and underscores the seriousness of the infringement claims.

Broader Industry Impact

The Anthropic case does not exist in isolation. Other AI companies, including OpenAI, Meta, and Google, are facing similar lawsuits over the use of copyrighted material to train large language models. Courts have not yet ruled definitively on those cases, but the Anthropic settlement will likely influence how they proceed.

Meanwhile, the AI training ecosystem is expanding rapidly. Analysts estimate that the need for massive training datasets will continue to grow, putting additional pressure on AI companies to obtain content legally.

Anthropic’s Position and Funding Context

Anthropic, founded by former OpenAI researchers and backed by investors including Amazon and Google, is one of the most highly valued startups in the AI space. In early September 2025, the company announced it had raised $13 billion in funding, pushing its valuation to $183 billion.

By settling the lawsuit, Anthropic can avoid prolonged litigation and focus on competing in the generative AI market against industry giants. The company has maintained that the settlement addresses only the narrow piracy claims, while the June ruling affirming fair use for training models remains a victory for its broader legal position.

Next Steps in the Case

The settlement has only received preliminary approval. Several key steps remain:

- Claims Process Opens: Authors must verify whether their works are listed and file claims through the settlement website.

- Objection Period: Other stakeholders will have the opportunity to submit objections or feedback to the court.

- Final Approval Hearing: A hearing will be held in the coming months where the judge will decide whether to grant final approval.

- Distribution of Funds: Once final approval is secured, payments will be distributed to claimants.

Failure to act means authors may forfeit their share of the settlement, making awareness and outreach critical.

The preliminary approval of Anthropic’s $1.5 billion settlement with authors marks a defining moment in the clash between AI innovation and copyright protection. By agreeing to compensate creators and delete its pirated library, Anthropic has acknowledged the weight of authors’ claims while avoiding the risk of even higher penalties at trial.

At the same time, the settlement leaves intact a crucial judicial finding: training AI models on legally acquired works can qualify as fair use, a principle likely to guide the industry going forward.

For the broader AI ecosystem, the case serves as both a warning and a roadmap. Developers must tread carefully in sourcing data, while authors now have a precedent showing that their works hold significant value in the age of machine learning. The final approval hearing will determine how quickly authors see compensation, but the message to the AI industry is already clear: innovation cannot come at the expense of copyright law.

The information in this article was collected from MSN and Yahoo.