Rentosertib’s move toward pivotal Phase 3 testing is a rare, measurable “moment of truth” for AI in drug development, arriving as regulators publish AI credibility frameworks and start qualifying AI tools for trials. If late-stage results hold up, AI’s promise shifts from demos to deployable industry practice.

Key Statistics Driving The “Hype vs Reality” Debate

- Average likelihood of first approval across 18 pharma companies: 14.3%

- Deloitte cohort average cost per asset (2024): $2.23B

- Deloitte projected R&D ROI (2024): 5.9%

- Rentosertib Phase 2a: 71 patients, 22 sites, +98.4 mL FVC at 60 mg QD

- Placebo comparator disclosed by Insilico: -20.3 mL mean FVC change

The Event That’s Forcing The Hype Question

For a decade, “AI will transform drug discovery” has been easy to say and hard to prove. The reason is simple: discovery is not where a medicine succeeds or fails. The clinic is. Biology is messy, endpoints are unforgiving, and Phase 3 is where good stories go to die.

That’s why rentosertib matters more than most AI headlines. Editorialge reports that rentosertib (also known as INS018_055/ISM001-055) is poised to become the first “fully AI-designed” candidate to reach pivotal Phase 3 trials, aiming at idiopathic pulmonary fibrosis (IPF). A move into Phase 3 is not a trophy. It’s a stress test: bigger populations, longer follow-up, harder comparators, more scrutiny on safety signals, and far less tolerance for optimistic interpretation.

In a Phase 2a study described by Editorialge, 71 IPF patients across 22 sites in China were randomized, and the 60 mg once-daily group saw an average +98.4 mL change in forced vital capacity (FVC), a key lung-function measure. Insilico’s announcement adds a comparison point that matters for interpretation: a -20.3 mL mean decline in the placebo group over 12 weeks.
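To read the two figures side by side, the arithmetic can be sketched in a few lines of Python. This is only an illustration: it takes the two reported mean changes at face value and subtracts them, and the function name is a hypothetical helper, not anything from the trial's analysis. It ignores sample size, variance, and the trial's actual statistical model, so it should not be read as the study's reported treatment effect.

```python
# Mean FVC changes in mL, as quoted in the article.
TREATMENT_CHANGE_ML = 98.4   # 60 mg once-daily arm
PLACEBO_CHANGE_ML = -20.3    # placebo arm


def placebo_adjusted_difference(treatment_change: float, placebo_change: float) -> float:
    """Return the raw gap between two mean changes (treatment minus placebo).

    Hypothetical helper for illustration; not the trial's statistical method.
    """
    return treatment_change - placebo_change


diff_ml = placebo_adjusted_difference(TREATMENT_CHANGE_ML, PLACEBO_CHANGE_ML)
print(f"Unadjusted difference in mean FVC change: {diff_ml:.1f} mL")
```

On these reported means, the gap between arms is about 118.7 mL. That between-arm gap, not the +98.4 mL alone, is what a larger and longer trial would have to reproduce.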

Taken together, this is the kind of signal that can justify the cost and risk of Phase 3. It is also the kind of signal that often shrinks, disappears, or complicates when tested at scale. That tension is exactly where “AI hype” either turns into reality or resets into another cycle of claims.

How We Got Here Without Noticing The Shift

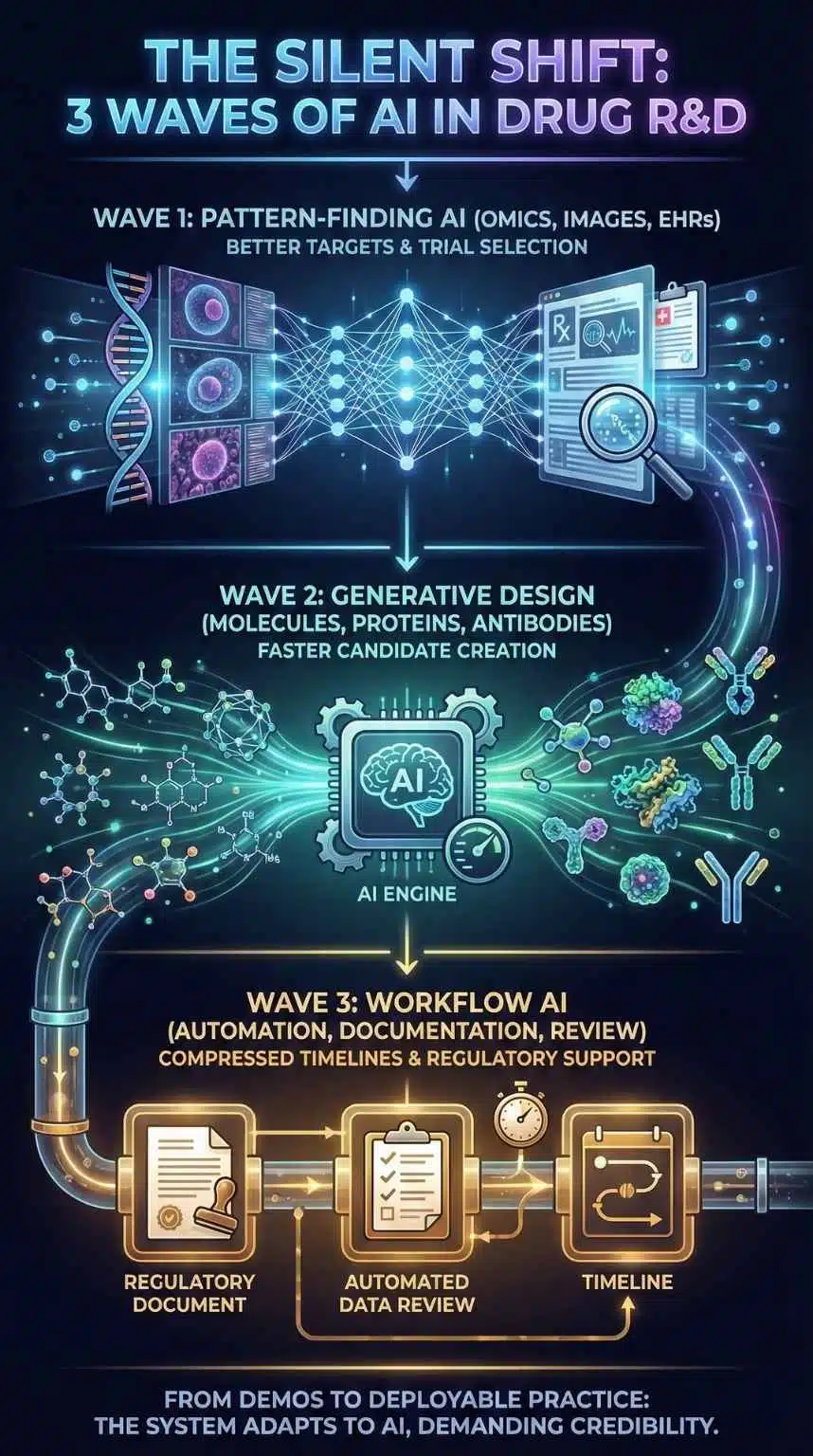

The AI story in drug development has gone through three overlapping waves:

- Pattern-finding AI (omics, images, electronic health records) that promised better targets and better trial selection.

- Generative design (molecules, proteins, antibodies) that promised faster candidate creation.

- Workflow AI (automation, documentation, review support) that promised to compress timelines across R&D and regulation.

Rentosertib sits at the junction of the first two waves, pattern-finding and generative design. But the broader “reality check” is being shaped by the third wave, workflow AI, especially in regulation and trial operations.

In January 2025, the FDA issued draft guidance on using AI to support regulatory decision-making for drugs and biologics, including a risk-based credibility assessment framework for an AI model in a defined “context of use.” In March 2025, Reuters reported that the EMA’s human medicines committee accepted evidence generated by the AIM-NASH tool as scientifically valid, emphasizing reduced variability versus traditional pathologist consensus methods. And in December 2025, the FDA qualified AIM-NASH as the first AI drug development tool for use in MASH clinical trials, explicitly framing the benefit as standardization and reduced variability while keeping humans responsible for final interpretation.

That is not just “AI adoption.” It is the system adapting its rules and workflows to accommodate AI, while also demanding testable credibility.

What Rentosertib Actually Proves And What It Does Not

Rentosertib does not prove that AI “solves” drug development. It might prove something narrower and more commercially important:

- AI can generate a credible clinical candidate fast enough to matter

- AI can help identify a target and design a molecule that survives early human testing

- AI can produce signals strong enough to justify Phase 3 investment

Editorialge positions rentosertib as an end-to-end AI story (target discovery plus generative chemistry), and points to publication in Nature Medicine as part of its legitimacy arc. Insilico’s own disclosure gives the “apples-to-apples” placebo comparison that helps analysts judge whether the signal is just stability or real improvement.

But there are limits that neither AI nor marketing can dodge:

- Phase 2 is not Phase 3.

- FVC movement over 12 weeks is not a durable benefit over a year or more.

- Safety in dozens of patients is not safety in hundreds across geographies and comorbidities.

So the correct analytical posture is not “AI did it.” It is: AI may have improved the front end of the funnel, but the back end still decides the truth.

The Bottleneck Is Still Attrition, Not Ideation

If you want to predict whether “AI hype” turns real, you have to follow the bottlenecks. A 2025 empirical analysis of clinical development success across 18 leading pharma companies reported an average likelihood of first approval (Phase 1 to FDA approval) of 14.3% (median 13.8%), with a broad range across companies. That is the baseline reality AI is trying to beat.

Meanwhile, Deloitte’s 15th annual pharma innovation analysis reported a projected R&D ROI of 5.9% in 2024 (up from 4.1% in 2023), while average cost per asset rose to $2.23 billion, and Phase 3 cycle times increased 12%.

And RAND added a critical nuance to the “it costs billions” narrative: using a different approach, it estimated a median direct R&D cost of $150M (mean $369M), and after adjustments, a median of $708M (mean $1.3B), highlighting how a few high-cost outliers can skew the public debate.
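A gap between median and mean like this is what a skewed distribution looks like. The toy numbers below are invented purely for illustration and are not RAND's data. They simply show how a single expensive outlier can pull the mean far above the median.

```python
from statistics import mean, median

# Hypothetical per-program costs in $M, invented for illustration only.
# These are NOT RAND's figures.
toy_costs_musd = [100, 120, 150, 180, 200, 2500]

toy_median = median(toy_costs_musd)  # middle of the sorted values
toy_mean = mean(toy_costs_musd)      # pulled upward by the single outlier

print(f"median: {toy_median:.0f}  mean: {toy_mean:.0f}")
```

Here the median is 165 while the mean exceeds 540, even though five of the six hypothetical programs cost 200 or less.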

Put bluntly, even if AI makes discovery cheaper, Phase 3 slowness and failure can still dominate total economics. That is why rentosertib’s Phase 3 trajectory is more important than any claim about how quickly the molecule was ideated.

Comparative Snapshot: Who Wins And Who Loses If AI In Drug Development Turns Real

| Likely Winners | Why | Likely Losers | Why |
| --- | --- | --- | --- |
| AI-native biotechs with clinical proof | A late-stage “reference case” changes fundraising and partnering terms | AI-only vendors without wet-lab or clinical hooks | “Demo value” fades if they cannot show an impact on outcomes |
| Big pharma with proprietary datasets | Data becomes a compounding advantage when paired with foundation models | Firms reliant on generic public datasets | Model performance ceilings appear quickly without differentiated data |
| CROs and trial-tech that standardize endpoints | Less variability, faster reads, fewer disputes | Manual, slow, inconsistent scoring workflows | Regulators and sponsors increasingly demand consistency |
| Patients with high-unmet-need diseases | More shots on goal, potentially faster cycles | Payers if pricing keeps rising | Innovation does not automatically translate into affordability |

The key insight: the winners are not “AI companies.” They are organizations that turn AI into a system advantage, across data, automation, validation, and regulatory-grade documentation.

The Data Arms Race Is Becoming The Real Story

The next phase of AI in drug development looks less like ChatGPT and more like industrial strategy.

Reuters reported that Eli Lilly launched an AI/ML platform (TuneLab) offering biotech partners access to models trained on Lilly research data that it estimated cost over $1 billion to compile, with partners contributing training data back into the system. This is a clear signal of where competitive advantage is moving: private experimental data plus feedback loops.

At the same time, Nature’s reporting on AI-designed antibodies underscores a different constraint: proof-of-principle designs can be impressive but still lack the potency and properties of commercial biologics, and the field is racing toward clinical trials to prove relevance. That’s the same pattern rentosertib is now testing for small molecules.

This creates a “barbell” outcome:

- A small number of players with deep data and integrated labs get compounding gains.

- A long tail of firms using similar public tools converges toward similar outputs, making differentiation harder.

If rentosertib succeeds, the market implication is not “more AI startups.” It is more data consolidation, more platform partnerships, and more integration between AI and wet-lab execution.

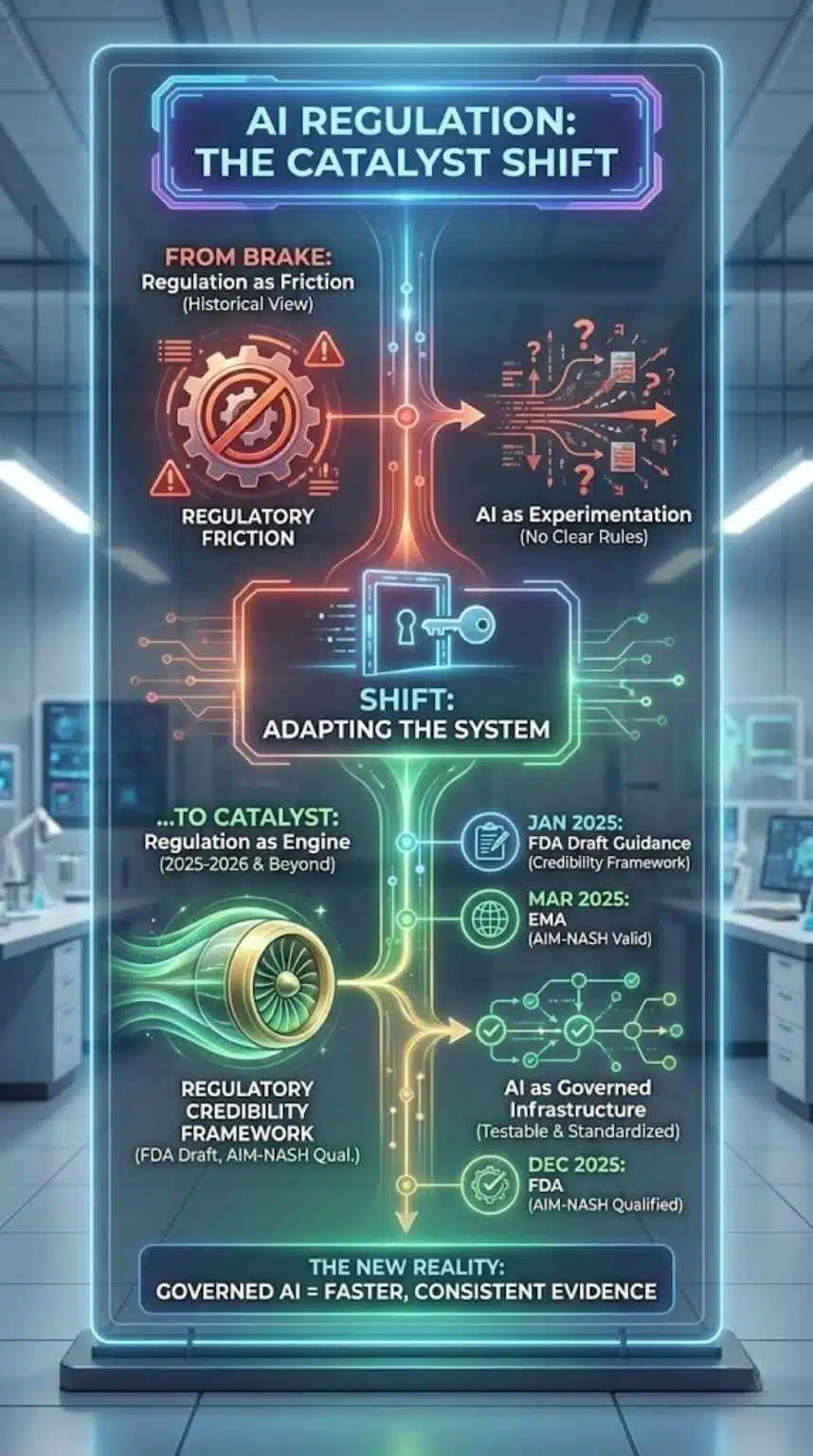

Regulation Is No Longer The Brake, It’s Becoming A Catalyst

Historically, regulation has been framed as friction. But in this cycle, it may become an adoption engine by clarifying what “acceptable AI” looks like.

- The FDA’s January 2025 draft guidance explicitly addresses using AI to generate information supporting regulatory decisions and proposes a credibility framework tied to the context of use.

- The FDA’s December 2025 qualification of AIM-NASH formalizes a pathway for AI tools that standardize trial assessments, while keeping humans accountable for final reads.

- The EMA’s acceptance of AIM-NASH evidence as scientifically valid suggests cross-jurisdiction momentum around AI-assisted endpoints.

Here is what that means in practice: regulators are not asking sponsors to stop using AI. They are asking them to prove reliability, minimize bias, and document model credibility. That moves the industry from “AI as experimentation” to “AI as governed infrastructure.”

Milestones That Mark The Regulatory Shift

| Date | Institution | Milestone | What It Signals |
| --- | --- | --- | --- |
| Jan 2025 | FDA | Draft guidance on AI for regulatory decision-making | A formal credibility framework enters sponsor planning |
| Mar 2025 | EMA (via CHMP) | Accepts AIM-NASH use in trials | AI-assisted evidence can be considered scientifically valid |
| Dec 8, 2025 | FDA | Qualifies AIM-NASH as the first AI drug development tool | AI moves into standardized trial operations |
| Jan 4, 2026 | Industry media | Rentosertib is positioned as nearing Phase 3 | AI-designed drugs enter the “prove it” stage |

The Hidden Economic Question: Speed To Clinic Or Probability Of Success

AI advocates often sell “speed.” Investors often buy “multiples.” Patients need “approved therapies.” Those are not the same metric.

If AI reduces the time and cost to nominate candidates, companies can take more shots on goal. That can matter even if per-asset success rates do not change. But it still leaves a pivotal question unanswered: Does AI improve the probability of approval, or does it just increase throughput into the same attrition machine?
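The difference between throughput and per-asset quality can be made concrete with a simple probability sketch. It assumes, purely for illustration, that each candidate succeeds independently with the same probability, and it uses the 14.3% average likelihood of first approval cited earlier as the baseline. Real pipelines violate both assumptions (assets share targets, teams, and biology), and the 25% figure below is a hypothetical improved rate, not a reported one.

```python
def prob_at_least_one_approval(p_success: float, n_candidates: int) -> float:
    """P(at least one approval) among n independent candidates with equal odds.

    Illustrative model only: independence and equal odds are assumptions.
    """
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must be between 0 and 1")
    if n_candidates < 0:
        raise ValueError("n_candidates must be non-negative")
    return 1.0 - (1.0 - p_success) ** n_candidates


BASELINE_RATE = 0.143      # average likelihood of first approval cited in the article
HYPOTHETICAL_RATE = 0.25   # assumed improved per-asset rate, for comparison only

# More shots at the same odds versus the same shots at better odds.
for label, rate, n in [
    ("baseline, 5 candidates", BASELINE_RATE, 5),
    ("baseline, 10 candidates", BASELINE_RATE, 10),
    ("hypothetical, 5 candidates", HYPOTHETICAL_RATE, 5),
]:
    print(f"{label}: {prob_at_least_one_approval(rate, n):.1%}")
```

Under these assumptions, five candidates at the baseline rate give roughly a 54% chance of at least one approval and ten give roughly 79%, while five candidates at the hypothetical 25% rate land at about 76%. More shots and better shots can look similar at the portfolio level, which is why the per-asset question still has to be answered in the clinic.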

This is where rentosertib’s Phase 3 story becomes more than a single asset. It becomes a proxy measure for the claim that AI can find “better” biology, not just faster chemistry.

And the cost context matters. Deloitte reports costs rising and Phase 3 timelines lengthening, even as returns improve due to specific asset classes like GLP-1s. In other words, productivity gains can be swamped by clinical complexity and commercial forecast risk.

At the same time, RAND’s argument that median development costs can be far lower than headline “billions” complicates the narrative that speed savings automatically justify high launch prices.

The analytical takeaway: AI will be judged less on whether it is clever and more on whether it changes the economics of late-stage decision-making.

What AI Seems To Be Good At Today, And Where It Still Struggles

A practical way to test “hype versus reality” is to separate the pipeline into components and ask where AI is already producing durable gains.

| R&D Stage | Where AI Is Delivering Value Now | Where It Still Hits Limits |
| --- | --- | --- |
| Target discovery | Pattern mining across omics and literature can surface candidates faster | Biology causality, confounding, and reproducibility remain hard |
| Molecule design | Generative models can propose novel chemistry quickly | Synthetic feasibility, ADME, tox, and off-target risks still dominate outcomes |
| Trial operations | Standardizing reads (e.g., digital pathology tools like AIM-NASH) reduces variability | Regulatory acceptance is context-specific and requires heavy validation |
| Regulatory submissions | Credibility frameworks can clarify expectations | Model documentation burden can be high, and “black box” claims are less tolerated |

Notice what is missing: nothing in this list eliminates the need for clinical truth. What AI can do is reduce waste before and during clinical testing, and make trial evidence more consistent.

That is exactly why tools like AIM-NASH matter even though they are not “AI-designed drugs.” They represent AI becoming part of the regulated machinery of development.

Expert Perspectives: The Case For Optimism And The Case For Caution

Optimists see rentosertib as the first credible demonstration, supported by publication in Nature Medicine, that generative AI can produce clinically relevant candidates. Editorialge frames it as a “real-world test” of whether AI can deliver safer and more effective drugs faster. Exscientia is cited as a further example of the broader trend: firms argue that AI can reduce the time from concept to clinic by narrowing the search space and the lab workload.

Cautious voices emphasize that many AI-designed artifacts still need extensive refinement to match commercial standards, and that “proof of principle” is not potency or durability. Nature’s reporting on AI-designed antibodies explicitly notes that early designs have lacked potency and other features of commercial antibody drugs, which is why clinical trials are the real proving ground.

A neutral synthesis looks like this:

- AI is moving from claims to clinical tests.

- Regulation is building pathways, not walls.

- But the decisive variable remains late-stage reproducibility and safety at scale.

What Comes Next: The Milestones That Will Decide Whether The Hype Breaks Or Holds

If you want to track whether AI in drug development becomes “real” in 2026 and beyond, watch for four concrete milestones:

- Phase 3 initiation and design quality: The “tell” will be endpoints, duration, stratification strategy, and whether the trial is global. Editorialge notes that Phase 3 IPF trials often require hundreds of patients and run for at least a year.

- Partnering and financing terms: If late-stage partners price risk lower (better economics, fewer contingencies), it suggests belief that AI is improving candidate quality, not just speed.

- Regulatory-grade AI documentation is becoming standardized: FDA’s credibility framework encourages sponsors to define the context of use and evaluate model credibility. Expect this to become routine in submissions where AI touches evidence generation.

- More “AIM-NASH-like” qualifications: The fastest way AI reshapes drug development may be through standardized trial measurements and decision support tools, not dramatic “AI designed it” headlines.

Predictions (Clearly Labeled)

- Analysts are likely to treat the first credible Phase 3 readout for an AI-designed asset as a valuation reset for AI-native pipelines, because it changes perceived technical risk.

- Market indicators point to a shift from “AI models” to “AI platforms + proprietary data”, with more partnerships like Lilly’s TuneLab structure.

- If Phase 3 disappoints, the field probably does not collapse, but narratives will pivot toward AI’s most reliable value: trial operations, endpoint consistency, and earlier-stage decision efficiency.

Final Thoughts: Why This Matters Right Now

Rentosertib’s Phase 3 push is not important because it is “AI.” It is important because it may become the first widely watched case where a generative pipeline meets the harshest validation stage. If it succeeds, AI in drug development stops being a productivity slogan and starts becoming an operating model, reinforced by regulators who are now building credibility frameworks and qualifying AI tools that standardize trial evidence.

If it fails, it still teaches the industry something valuable: the shortest path to real impact may run through governed, measurable improvements in trials and evidence quality, not through grand claims about “solving discovery.”

Either way, the hype era is ending. The measurement era has started.