You know that sinking feeling when you log into a game lobby and realize you’ve been shadowbanned? That’s exactly what opening Google Search Console feels like when your traffic drops off a cliff. One day, you’re ranking high, and the next, it’s like your website has been kicked from the server. I’ve seen this happen to solid sites, especially after the massive March 2024 Core Update.

Google has upgraded its anti-cheat systems. It now aggressively targets low-quality, scaled content, including the kind churned out by AI tools. If the search engine flags your pages, you could lose your rank or vanish from the index entirely. I’m going to show you how to spot AI-generated content penalties in Google Search Console before it’s too late.

We will look at the specific red flags, the difference between a manual ban and an algorithmic “nerf,” and the exact steps to get your traffic back online.

Understanding Google Penalties

Think of Google penalties as the admins stepping in to enforce the rules. They keep the search results clean and helpful for users. If you get hit, it’s not just bad luck; it’s usually because a specific rule was broken, often related to the “Spam Policies” updated in 2024.

What are Google penalties?

A Google penalty is a negative action taken against your site that lowers your visibility. It’s the search engine’s way of saying your content doesn’t meet the quality standards. Triggers include buying links, scraping text, or committing “Scaled Content Abuse,” the term Google uses for mass-producing low-value pages.

For example, in the US market, many affiliate sites saw a 90% traffic drop overnight because they prioritized volume over value. You face two main types of enforcement: Manual Actions and Algorithmic Penalties. Knowing the difference is critical for your recovery strategy.

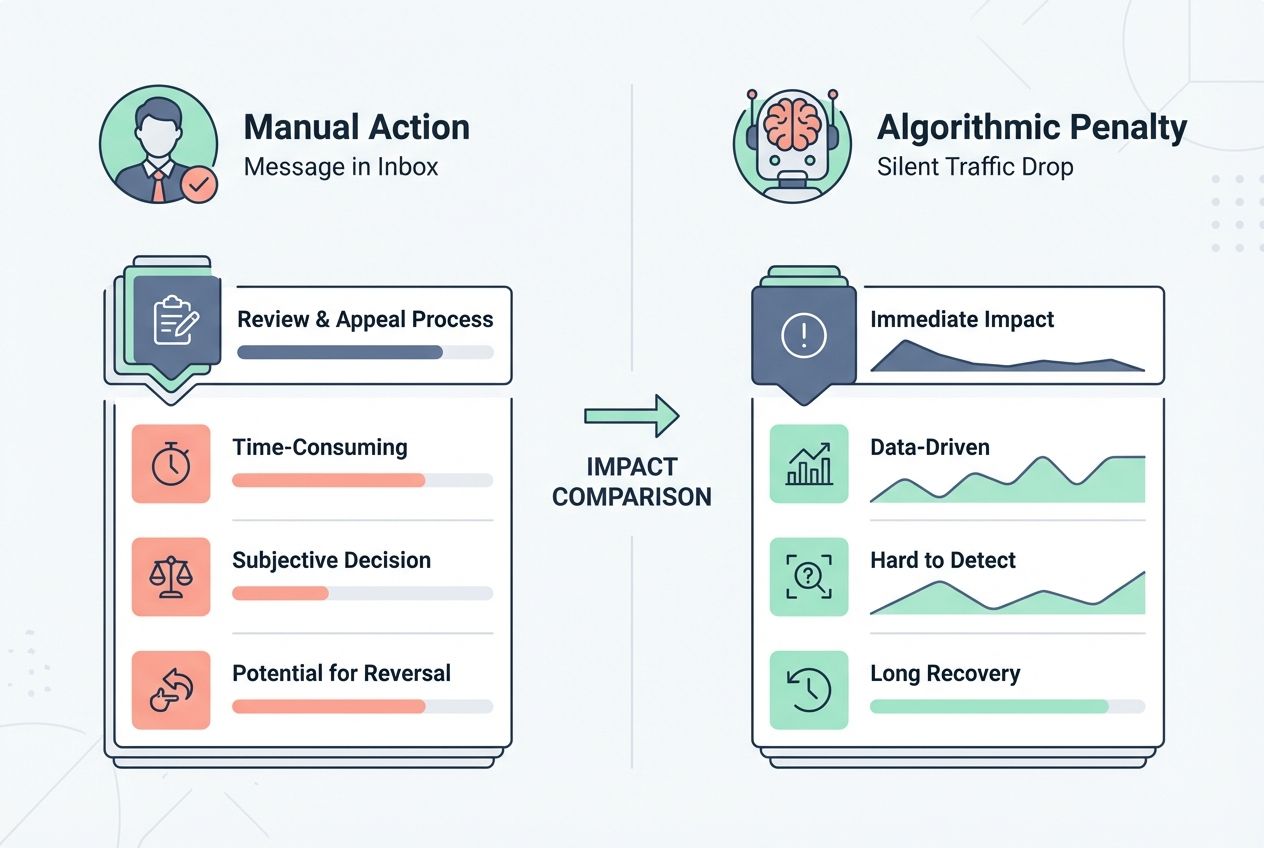

Manual vs. algorithmic penalties

A Manual Action is personal. A human reviewer at Google looked at your site, saw it violated guidelines, and pushed the “ban” button. You will see a notification in Search Console telling you exactly what went wrong, such as “Spammy automatically-generated content.”

An Algorithmic Penalty is silent. It’s like an automated anti-cheat system detecting a pattern it doesn’t like. No human checked your site, but the system lowered your rankings because your content signals (like user engagement or originality) were weak.

Here is a quick breakdown to help you spot the difference:

| Feature | Manual Action | Algorithmic Penalty |
|---|---|---|
| Cause | Human reviewer flags your site | Automated system (e.g., SpamBrain) |
| Notification | Message in “Manual Actions” tab | None (silent traffic drop) |
| Fix | Fix issue + file reconsideration request | Improve quality + wait for update |

Does Google Penalize AI-Generated Content?

Google doesn’t hate AI. It hates spam. There is a huge difference. The official stance is that they reward high-quality content, however it is produced. But let’s be real: if you use AI to generate 500 articles a week without editing, you are painting a target on your back.

Google’s stance on AI-generated content

Google focuses on the quality of the output, not the tool used to build it. Their “E-E-A-T” framework (Experience, Expertise, Authoritativeness, and Trustworthiness) is the gold standard.

In 2024, Elizabeth Tucker, Director of Product at Google, stated their goal was to reduce low-quality, unoriginal content in search results by 40%. They specifically target “Scaled Content Abuse,” which is when you generate many pages to manipulate search rankings. If your AI content helps the user, it stays. If it’s just filler to catch keywords, it goes.

“Automation, including generative AI, is spam if the primary purpose is manipulating ranking in Search results.” – Google Search Central

Common reasons for penalties on AI-generated content

When I analyze sites that got hit, I usually see the same patterns. It’s not just about using ChatGPT; it’s about how you use it.

- Keyword Stuffing: Forcing “best gaming laptop” into a sentence six times makes it unreadable. Algorithms spot this instantly.

- Zero New Information: If your article just summarizes the top 3 results without adding new data, it has no unique value.

- Hallucinations: AI often invents facts. A review claiming the “PS5 has a coffee maker” will destroy your trust score.

- Publishing Velocity: Posting 50 articles a day is a clear signal of automation. Humans don’t write that fast.

- Robotic Phrasing: Repetitive sentence structures (starting every paragraph with “In conclusion” or “Moreover”) trigger spam filters.
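The keyword stuffing pattern from the list above is easy to self-check before you publish. Here is a minimal sketch in Python, purely as a rough audit of your own drafts; it says nothing about how Google detects stuffing, and the function name `stuffed_sentences` and the `max_repeats` cutoff are assumptions made for this example.

```python
import re

def stuffed_sentences(text, phrase, max_repeats=2):
    """Return (count, sentence) pairs where `phrase` appears more than
    `max_repeats` times in a single sentence. The cutoff is an assumption."""
    # Naive sentence split: ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        count = sentence.lower().count(phrase.lower())
        if count > max_repeats:
            flagged.append((count, sentence))
    return flagged

# Hypothetical draft text for illustration.
draft = (
    "The best gaming laptop is the best gaming laptop for anyone who wants "
    "the best gaming laptop. This sentence is fine."
)
for count, sentence in stuffed_sentences(draft, "best gaming laptop"):
    print(f"{count}x: {sentence}")
```

Anything this flags deserves a human rewrite, not a synonym swap.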

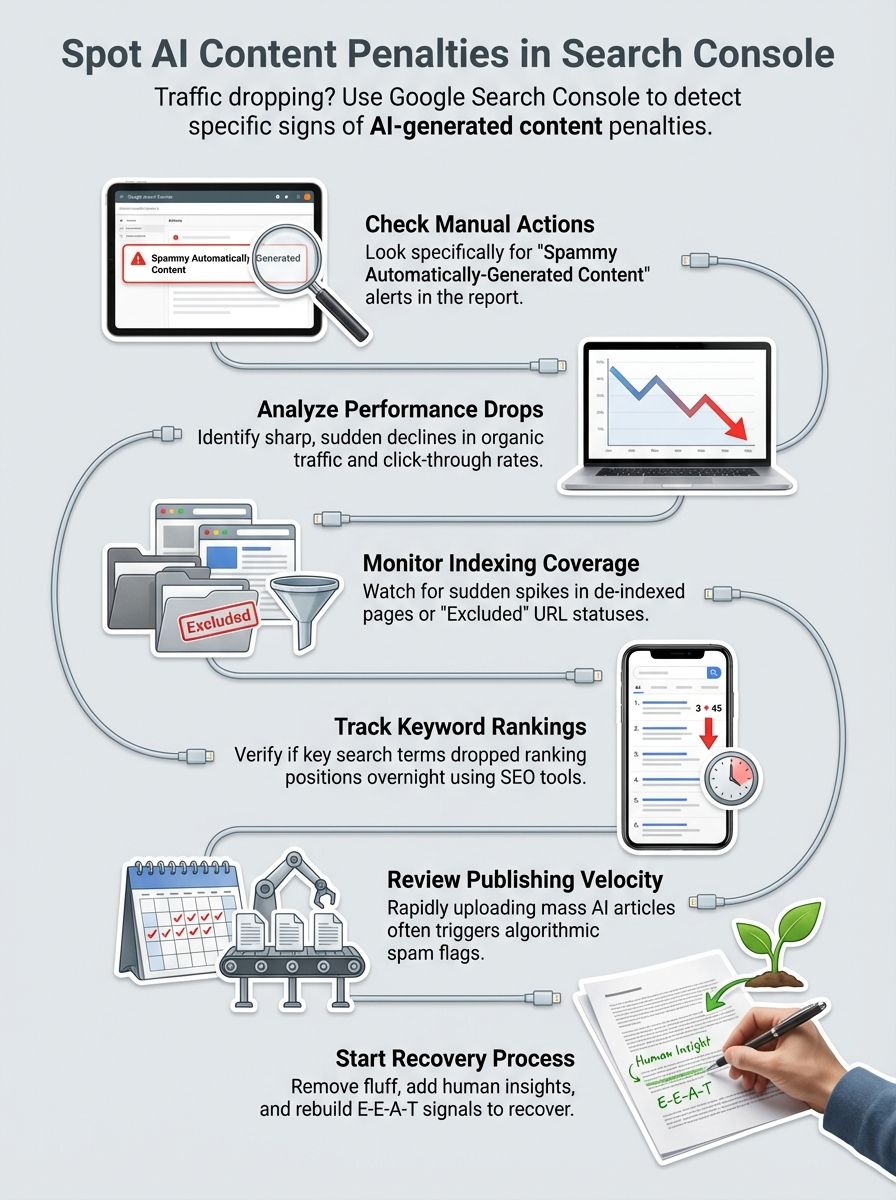

How to Spot AI-Generated Content Penalties in Google Search Console

You don’t need to guess if you’re in trouble. The data is right there in your dashboard if you know where to look. Let’s walk through the exact reports I use to diagnose a penalty.

Check the Manual Actions report

This is your first stop. Open Google Search Console, expand Security & Manual Actions in the left sidebar, and click Manual actions. If you see a green checkmark saying “No issues detected,” you are safe from human reviewers. But if you see a red warning saying “Spammy automatically-generated content,” you have a manual penalty. This means a Google employee reviewed your site and found it violated their spam policies.

This is a critical alert. You must fix the specific pages listed (or your whole site) and file a reconsideration request to get back in the game.

Analyze traffic drops in the Performance report

Most penalties are algorithmic, so they won’t show up in the Manual Actions tab. Instead, go to the Performance report.

Look for a “cliff edge” on the graph. This is where your impressions and clicks drop by 50% or more in a span of 48 hours. Compare the date of the drop with known Google updates, like the March 2024 Core Update or a Spam Update.

If your traffic flatlines while your “Average Position” (toggle this on the graph) slides to 50 or worse (a bigger number means a lower ranking), you’ve likely been filtered out by an algorithm.
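If you export the Performance report's daily numbers, you can scan for that cliff edge instead of eyeballing the graph. The sketch below assumes you have already loaded the export into a plain dict of date to clicks; the function name, the sample figures, and the exact thresholds (50% over 2 days, taken from the rule of thumb above) are illustrative, not an official definition of a penalty.

```python
from datetime import date, timedelta

def find_cliff_edges(daily_clicks, window_days=2, drop_threshold=0.5):
    """Return (day, drop_fraction) for each day whose clicks fell by at least
    `drop_threshold` compared with the value `window_days` earlier."""
    cliffs = []
    for day in sorted(daily_clicks):
        before = daily_clicks.get(day - timedelta(days=window_days))
        if not before:  # skip missing or zero baselines
            continue
        drop = (before - daily_clicks[day]) / before
        if drop >= drop_threshold:
            cliffs.append((day, round(drop, 2)))
    return cliffs

# Hypothetical export: clicks per day.
clicks = {
    date(2024, 3, 4): 1000,
    date(2024, 3, 5): 980,
    date(2024, 3, 6): 450,
    date(2024, 3, 7): 120,
    date(2024, 3, 8): 110,
}
for day, drop in find_cliff_edges(clicks):
    print(day, f"down {drop:.0%} vs two days earlier")
```

The first flagged date is the one to compare against the update timeline; later flags are usually the same crash still unfolding.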

Monitor Indexing Coverage for de-indexed pages

Go to the Pages report (under Indexing in the sidebar). This is the most underrated tool for spotting AI-generated content penalties. Look for a spike in the status “Crawled – currently not indexed.”

This status is brutal. It means Googlebot visited your page, read your content, and decided it wasn’t worth adding to the search index. It’s a direct quality signal. If you have thousands of AI pages and a high count in this category, Google is telling you your content quality is too low to rank.
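To put a number on "a high count," you can compute what share of your pages sits in that bucket. This is a minimal sketch that assumes you have a list with one status string per URL; the sample counts and the "Indexed" label are made up for illustration, and the status text is passed as a parameter because the label in your export may be punctuated differently.

```python
from collections import Counter

NOT_INDEXED = "Crawled – currently not indexed"

def status_share(statuses, status=NOT_INDEXED):
    """Fraction of pages whose status equals `status` (0.0 for an empty list)."""
    if not statuses:
        return 0.0
    return Counter(statuses)[status] / len(statuses)

# Hypothetical site: 2,000 URLs, most of them bulk-generated.
statuses = ["Indexed"] * 300 + [NOT_INDEXED] * 1700
print(f"{status_share(statuses):.0%} of pages were crawled but not indexed")
```

There is no official cutoff, so track this share over time; a number that keeps climbing after a bulk upload is the pattern to worry about.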

Track keyword ranking changes with SEO tools

Sometimes GSC data is delayed. Tools like Semrush or Ahrefs can show you shifts sooner. Watch your main keywords. If you drop from position #3 to #90 overnight, that points to a penalty, not normal churn. A natural decline is slow; a penalty is instant.

I also set up alerts for my brand name. If you stop ranking for your own website name, you might have been hit with a “Pure Spam” penalty, which is the hardest to recover from.
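If your rank tracker lets you export positions, a day-over-day comparison is simple to automate. The sketch below assumes two dicts of keyword to position, one per day; the function name, the `min_drop` cutoff of 50 positions, and the sample keywords are all hypothetical. A keyword that disappears entirely (like your brand name) is reported with `None` as its new position.

```python
def find_rank_crashes(yesterday, today, min_drop=50):
    """Map each crashed keyword to (old_position, new_position).
    new_position is None when the keyword no longer ranks at all."""
    crashes = {}
    for keyword, old_pos in yesterday.items():
        new_pos = today.get(keyword)
        if new_pos is None:
            crashes[keyword] = (old_pos, None)
        elif new_pos - old_pos >= min_drop:
            crashes[keyword] = (old_pos, new_pos)
    return crashes

# Hypothetical tracked keywords.
yesterday = {"best gaming laptop": 3, "example brand": 1, "gpu buying guide": 12}
today = {"best gaming laptop": 90, "gpu buying guide": 14}

for keyword, (old, new) in find_rank_crashes(yesterday, today).items():
    print(f"{keyword}: #{old} -> {'not ranking' if new is None else '#' + str(new)}")
```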

Signs of AI-Generated Content Penalties

You need to recognize the symptoms early. Saving your site is like saving a corrupted save file; the sooner you act, the better your chances.

Spammy Automatically-Generated Content alerts

This is the loudest alarm you can get. It appears directly in your GSC message center. The text will explicitly state that your site appears to have “content generated by automated processes.”

This notification confirms that Google classifies your pages as spam. It’s not a warning; it’s a notice of enforcement. Your pages are likely already de-indexed or suppressed until you take action.

Sharp declines in organic traffic

We aren’t talking about a slow bleed here. A penalty looks like a stock market crash. If your site goes from 1,000 visitors a day to 50 in a week, that is not a seasonal dip. That is an algorithmic filter kicking in. For US-based sites, check if the drop aligns with a holiday or a Google update. If it’s a Tuesday in October and you lost 90% of your traffic, start auditing your content immediately.
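Checking whether a drop lines up with an update is just a date-range lookup. In the sketch below, the rollout windows are the dates as I recall them and should be treated as assumptions; confirm them against Google's Search Status Dashboard before drawing conclusions. The function and variable names are illustrative.

```python
from datetime import date

# Rollout windows (start, end). These dates are assumptions to verify
# against Google's Search Status Dashboard.
UPDATE_WINDOWS = {
    "March 2024 core update": (date(2024, 3, 5), date(2024, 4, 19)),
    "March 2024 spam update": (date(2024, 3, 5), date(2024, 3, 20)),
}

def updates_active_on(drop_date, windows=UPDATE_WINDOWS):
    """Return the names of updates whose rollout window contains drop_date."""
    return [
        name
        for name, (start, end) in windows.items()
        if start <= drop_date <= end
    ]

print(updates_active_on(date(2024, 3, 10)))   # both windows cover this date
print(updates_active_on(date(2024, 10, 15)))  # no match in this table
```

An empty result does not clear you. It only means the table has no matching update, so keep the table current and audit your content anyway.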

High content publishing velocity followed by ranking drops

Did you recently upload 2,000 articles using a bulk AI tool? That is a major risk factor. Google’s “SpamBrain” AI is trained to spot unnatural publishing patterns. If a blog that usually posts twice a week suddenly posts 100 times a day, it triggers a review. If that content is also thin or repetitive, the algorithm will classify the entire batch as spam.

I’ve seen site owners try to “brute force” SEO with volume. It works for a month, and then the site gets crushed.
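You can audit your own publishing history for the same kind of spike. This is a rough sketch that assumes you can pull one publish date per article from your CMS; comparing each day against the median day with a 10x `multiplier` is my own arbitrary heuristic, not anything Google has documented about SpamBrain.

```python
from collections import Counter
from datetime import date
from statistics import median

def find_velocity_spikes(publish_dates, multiplier=10):
    """Return days whose post count is at least `multiplier` times the
    median count across all days that had any posts."""
    per_day = Counter(publish_dates)
    if not per_day:
        return []
    baseline = median(per_day.values())
    return sorted(day for day, n in per_day.items() if n >= baseline * multiplier)

# Hypothetical history: one post on four separate days, then a bulk upload.
history = [date(2024, 2, d) for d in (1, 5, 8, 12)] + [date(2024, 2, 15)] * 100
print(find_velocity_spikes(history))
```

If a spike date sits shortly before your traffic crash, that batch is the first place to start pruning.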

Steps to Recover from an AI-Generated Content Penalty

So you got hit. It sucks, but it’s not game over. I’ve helped teams recover from this, and it takes work. You can’t just install a plugin to fix it.

Improve content quality and relevance

You need to inject human value into your pages. This is the “Humanize” phase. Go through your top pages and add things AI can’t fake: personal anecdotes, specific data points, and contrarian opinions. If you are reviewing a product, include a photo of you holding it. If you are writing about code, share a mistake you made and how you fixed it.

Use the “Information Gain” concept. Does your article provide something the other top 10 results don’t? If not, rewrite it until it does.

Rebuild E-E-A-T [Experience, Expertise, Authoritativeness, Trustworthiness]

Google needs to trust the person behind the keyboard. AI lacks “Experience”—the first E in E-E-A-T.

- Update Author Bios: Link your bio to your active LinkedIn or X (Twitter) profile. Prove you are a real person with industry history.

- Cite Real Sources: Don’t just say “studies show.” Link to the specific 2024 report from Pew Research Center or Statista.

- Show Your Work: Explain your editorial process. Add a “Why Trust Us” section to your sidebar.

Remove or refine low-value AI-generated content

This is the “Prune and Polish” method. You have to be ruthless. Run your site through an audit tool (many SEOs use Originality.ai or Winston AI for detection) to identify the worst pages. If a page has no traffic and high AI scores, delete it. It’s dead weight dragging down your site’s quality score.

For pages that have some potential, rewrite them. Aim for a mix of 80% human editing and 20% AI assistance. The goal is to lower the ratio of low-quality pages on your domain.
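The prune-or-rewrite decision can be sketched as a simple triage pass. The version below assumes each page is a dict with `url`, `clicks`, and an `ai_score` between 0 and 1 from whatever detector you use; those field names and the 0.8 cutoff are hypothetical, and detector scores are noisy, so treat the output as a to-do list for human review and not a verdict.

```python
def triage_pages(pages, ai_score_cutoff=0.8):
    """Sort pages into delete / rewrite / keep buckets.
    delete:  high AI score and no traffic
    rewrite: high AI score but some traffic
    keep:    everything else"""
    plan = {"delete": [], "rewrite": [], "keep": []}
    for page in pages:
        high_ai = page["ai_score"] >= ai_score_cutoff
        if high_ai and page["clicks"] == 0:
            plan["delete"].append(page["url"])
        elif high_ai:
            plan["rewrite"].append(page["url"])
        else:
            plan["keep"].append(page["url"])
    return plan

# Hypothetical audit export.
pages = [
    {"url": "/filler-post", "clicks": 0, "ai_score": 0.95},
    {"url": "/laptop-roundup", "clicks": 40, "ai_score": 0.90},
    {"url": "/hands-on-review", "clicks": 300, "ai_score": 0.10},
]
plan = triage_pages(pages)
low_quality = len(plan["delete"]) + len(plan["rewrite"])
print(plan)
print(f"Low-quality ratio before cleanup: {low_quality / len(pages):.0%}")
```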

Submit a reconsideration request if needed

If you have a Manual Action, you must file a request. Be honest. Admit you used AI tools to scale content too quickly and explain the steps you took to fix it (e.g., “We deleted 500 thin articles and rewrote the remaining 200 with human editors”).

Do not sound like a lawyer. Sound like a webmaster who wants to build a good site. It can take weeks to get a reply, so be patient.

How to Prevent Future AI Content Penalties

The best defense is a good offense. You want to build a site that is “penalty-proof” by design.

Focus on creating helpful, high-quality content

Write for the user, not the bot. Before you publish, ask yourself: “Would I share this link with a friend?” If the answer is no, don’t publish it.

Focus on depth. Instead of 10 shallow articles, write one massive, detailed guide that covers everything. Google’s systems are rewarding “satisfying” content: articles that answer the user’s question so well they don’t need to click back to the search results.

Use AI responsibly and with human oversight

AI is a tool, like a game controller. It doesn’t play the game for you. Use it to brainstorm outlines, summarize data, or check grammar. Do not use it to write the final draft.

Always have a human editor review every piece of content. They catch the nuance, the tone, and the “soul” that machines miss. A human editor ensures your content doesn’t sound like a generic Wikipedia entry.

Strengthen trust signals and maintain topical authority

Stick to your lane. If you write about gaming hardware, don’t suddenly publish 50 AI articles about “best car insurance” just to get clicks. That dilutes your topical authority and confuses the algorithm.

Keep your “About Us” and “Contact” pages updated with real addresses and phone numbers. These are small trust signals that add up. A legitimate business has a physical footprint; a spam farm usually doesn’t.

The Bottom Line

Spotting AI-generated content penalties in Google Search Console is the first step to getting your site back on the leaderboard. We covered how to check for manual actions, interpret the “Crawled – currently not indexed” status, and spot the algorithmic drops that hurt the most.

It’s a grind to recover, but it’s possible. Focus on quality, prune the junk, and prove you are a real expert. I’ve seen sites bounce back stronger after a cleanup. So check your reports, make your edits, and keep your content honest. GGs.