GPT-5 Store leaks, whether real or not, point to a bigger shift: ChatGPT is evolving from a chatbot into a marketplace for paid agents. With OpenAI already launching an in-chat app directory and health features, the next battle is monetizing expertise without inheriting legal, medical, and privacy liability.

From GPTs To Apps To Agents: Why A “Store” Is The Logical Next Step

The phrase “GPT-5 Store” is not an official OpenAI product name, and the leak framing should be treated cautiously. But the direction it implies is not far-fetched. Over the past two years, OpenAI has steadily built the exact ingredients that make a “store” possible: discovery, governance, distribution inside chat, and an expanding developer surface that turns conversations into actions.

Start with the obvious. OpenAI created the GPT Store as a way to browse and share custom GPTs inside ChatGPT. Then it opened a formal app submission flow and launched an in-chat App Directory, with a review process to control what is published. In other words, the platform already has shelves, search, and moderation. If you can list an app that orders food, organizes playlists, or syncs data sources, you can also imagine listing an agent that helps prepare an insurance appeal or drafts a demand letter. The leap is not technical. It is governance.

Agents raise the stakes because they do not merely answer questions. They increasingly “do” things: pull data, structure documents, run multi-step workflows, and guide decisions. Once an agent becomes a workflow you trust, paying for it makes sense. The same economic logic that created app stores reappears: distribution is scarce, and whoever owns the distribution layer can monetize it.

The rumored “GPT-5” angle matters less as a version number and more as a signal that capability improvements are pushing the cost-benefit curve toward paid, specialized experiences. A general assistant is useful. A specialized agent that reliably completes a complex task with fewer follow-ups becomes a product.

What makes legal and medical agents especially tempting is that they sit on top of expensive, high-friction systems. Healthcare is paperwork-heavy and confusing. Legal help is often priced beyond the reach of ordinary consumers for routine needs. In both cases, even modest automation can feel like a breakthrough. But that is also why mistakes can harm people quickly, and why regulators and professional bodies care.

| Platform Milestone | Approx. Timing | What Changed | Why It Set Up A “Store” Moment |
| --- | --- | --- | --- |
| Custom GPT marketplace | Early 2024 | User-built GPTs became discoverable in ChatGPT | Discovery became native, not external |
| Policy boundary clarified | Late 2025 | OpenAI explicitly restricted tailored licensed advice without professional involvement | Legal and medical monetization now needs guardrails |
| In-chat App Directory + submissions | December 2025 | Developers can submit apps for review and publication | Governance shifted from theory to workflow |
| Health experiences in ChatGPT | January 2026 | Health becomes a dedicated space with record and wellness connections | Verticalization into regulated-adjacent territory is underway |

The strategic read is simple: OpenAI appears to be moving from “model provider” to “distribution platform.” If that is true, a paid agent marketplace is not a side project. It is the next business model.

Why Legal And Medical Agents Are The Highest-Value, Highest-Risk Category

If you want to predict what a paid agent store would prioritize, follow demand. OpenAI’s own reporting and product direction show that health questions are already a major share of usage. The January 2026 OpenAI healthcare report describes millions of weekly messages about health insurance alone, and widespread use of ChatGPT to interpret bills, coverage rules, and appeals. Separately, OpenAI’s rollout of Health in ChatGPT makes it easier to ground responses in personal data like medical records and wellness app signals. That combination creates a powerful magnet: high demand plus personalization.

Legal demand is less publicly quantified by OpenAI, but the economic pressure is obvious. Everyday legal needs include lease disputes, employment issues, small claims, consumer debt, immigration paperwork, family law logistics, and contract review. Many of these tasks are procedural, repetitive, and document-driven. That is exactly where agent workflows shine: intake, checklists, drafting, summarization, and preparation for a human professional.

The risk is not only “hallucinations.” It is also misplaced confidence. Medical and legal systems are full of edge cases. The same answer can be correct in one jurisdiction and wrong in another. Two patients with similar symptoms can require different actions because of medications, history, or contraindications. And in both domains, the consequences of being wrong can be severe.

That is why the most realistic near-term “paid agent” winners will not be agents that replace doctors or lawyers. They will be agents that reduce friction around the system, while carefully stopping short of individualized diagnosis or strategy. Think of them as translators and process coaches, not final decision-makers.

| What Users Want | What A Paid Agent Can Safely Offer | Where It Becomes Dangerous Fast |
| --- | --- | --- |
| Understanding test results | Plain-language explanation, questions to ask a clinician | Interpreting results as diagnosis, recommending treatment |
| Comparing insurance plans | Side-by-side summaries of terms and costs | Telling a user which plan to buy without human review |
| Drafting a contract | Templates, clause explanations, risk flags | Jurisdiction-specific legal advice for a specific dispute |
| Responding to a lawsuit notice | Procedural overview, deadlines checklist | Strategy on what to file, what to admit, what to claim |
| Managing chronic care routines | Reminders, wellness tracking, discussion prompts | Medical direction that substitutes for clinician supervision |

A key point is that “paid” can increase trust even when it should not. Many users implicitly assume a paid tool is vetted, endorsed, or “more correct.” That makes product labeling and friction design a safety feature. A store that wants to avoid catastrophe will need to teach users what the agent is and is not, repeatedly, and in plain language.

Medical risk also expands when tools can ingest real data. OpenAI’s Health feature positions itself as a dedicated space for health and wellness conversations, with medical record connections and supported wellness apps. That improves relevance, but it also raises the privacy temperature. A casual chat about a headache is one thing. A record-grounded conversation about diagnoses, prescriptions, or mental health is another. Paid agents will intensify this because monetization tends to encourage deeper engagement, and deeper engagement often means more sensitive information.

The same applies in law. Users often reveal details that matter to outcomes: timelines, confessions, financial constraints, immigration status, and family situations. A store that invites “legal agents” has to assume oversharing will happen.

So the “prize” is huge because demand is huge. The “trap” is that the store operator becomes a central node in some of the most sensitive decisions and data flows in a person’s life.

Guardrails As Product: Liability, Licensing, And Regulatory Pressure

A paid agent store for legal and medical “advice” collides with three rule systems at once: platform policy, professional ethics, and government regulation. This is where the leak narrative becomes most consequential, because the difference between “information” and “advice” is not just semantics. It determines who can sell what, under what supervision, and what liability follows.

OpenAI’s Usage Policies explicitly restrict the provision of tailored advice that requires a license, such as legal or medical advice, without appropriate involvement by a licensed professional. That language does not necessarily prevent health or legal agents from existing. It pushes them into one of two designs:

- General-information agents that avoid personalization beyond education and navigation.

- Professionally supervised agents where a licensed clinician or lawyer is involved in review, sign-off, or direct delivery.

The legal profession has also been formalizing its stance. The American Bar Association’s Formal Opinion 512 frames the duty lawyers carry when using generative AI: competence, confidentiality, communication, supervision, and responsibility for outcomes. That signals a likely market shape: “AI for lawyers” grows quickly, while “AI lawyer for consumers” faces tighter constraints.

In healthcare, regulators are increasingly focused on lifecycle oversight for AI-enabled systems. US FDA guidance on predetermined change control plans for AI-enabled device software functions underscores a central reality: once an AI system influences clinical outcomes, regulators care how it changes over time, how it is monitored, and how performance is validated. Even if a consumer “wellness” agent tries to stay outside medical device definitions, the boundary can blur if marketing implies diagnosis or treatment.

In parallel, the EU AI Act’s risk-based framework and phased compliance timeline increase the compliance cost for high-risk uses, including many health-adjacent and safety-critical applications. For a store operator, that creates a geography problem: a product that is easy to launch in one market may require significant controls and documentation in another. That is already visible in how health features roll out in limited regions and exclude parts of Europe and the UK at launch.

The enforcement side is also tightening. The US Federal Trade Commission has publicly signaled that “AI” does not create an exemption from deception law, and its Operation AI Comply has included cases involving claims like “AI Lawyer” services and other misleading AI marketing. A store full of paid “medical agents” and “legal agents” would be a natural target if claims outpace evidence.

| Constraint Layer | What It Demands | Practical Impact On A Paid Agent Store |
| --- | --- | --- |
| Platform policy | No unlicensed tailored advice without professional involvement | Forces “education” framing or verified professional workflows |
| Professional ethics | Human responsibility remains with clinicians and lawyers | Encourages enterprise and professional tiers, not pure consumer replacement |
| Consumer protection enforcement | No deceptive or unsupported claims | Pushes stores toward testing, auditing, and careful marketing language |
| Health regulation | Evidence, monitoring, and lifecycle controls for higher-stakes functions | Makes “diagnose and treat” offerings far costlier to ship |
| EU AI governance | Risk management, transparency, oversight in phased timeline | Creates uneven global availability and compliance engineering |

The key insight is that guardrails are no longer a policy page. They become product features. Verification badges, disclaimers that actually interrupt behavior, audit logs, escalation prompts, and human-in-the-loop routing become part of the store’s business model.
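To make the idea of guardrails as product features concrete, here is a minimal sketch of a routing gate that combines two of the features named above: human-in-the-loop routing and an audit log. Everything in it is hypothetical. The category names, the `is_tailored` flag (assumed to come from some upstream classifier), and the `Gate` class are illustrative assumptions, not a description of how OpenAI or any real store works.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    ANSWER = "answer"              # general information, no review needed
    HUMAN_REVIEW = "human_review"  # hand off to a licensed professional
    DECLINE = "decline"            # tailored advice, no professional available


# Hypothetical category names; a real store would define its own taxonomy.
REGULATED = {"legal", "medical"}


@dataclass
class Request:
    agent_id: str
    category: str
    is_tailored: bool            # assumed output of an upstream classifier
    has_licensed_reviewer: bool  # assumed to come from store verification


@dataclass
class Gate:
    audit_log: list = field(default_factory=list)

    def route(self, req: Request) -> Route:
        if req.category in REGULATED and req.is_tailored:
            # Tailored advice in a regulated category needs a professional.
            if req.has_licensed_reviewer:
                decision = Route.HUMAN_REVIEW
            else:
                decision = Route.DECLINE
        else:
            decision = Route.ANSWER
        # Record every decision so it can be audited later.
        self.audit_log.append((req.agent_id, req.category, decision.value))
        return decision
```

In this sketch the two agent designs from the platform-policy discussion fall out of one branch: general-information requests pass straight through, while tailored requests in regulated categories either reach a licensed reviewer or stop.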

This is also where “paid agents” may push the ecosystem toward a more institutional shape. A store full of anonymous hobbyist agents is hard enough to moderate for low-stakes categories. Moderating it for medical and legal workflows is a different order of difficulty. Past friction in marketplace moderation shows why. Early GPT marketplaces struggled with policy-violating content slipping through. If romance bots and other gray-zone GPTs could appear in the open, the bar for allowing “medical agents” will likely be much higher, and the store will need stricter entry requirements.

A mature store model may look less like a hobbyist bazaar and more like an “app pharmacy” where categories have compliance gates.

The Economics Of Paid Agents: Who Captures Value And Who Gets Squeezed?

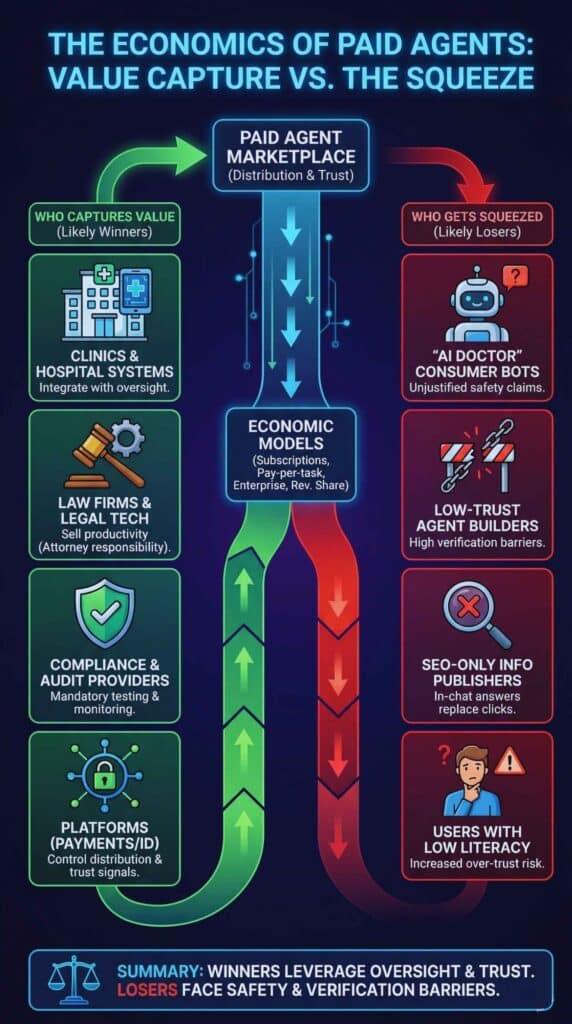

If you accept that a store for paid agents is a plausible next step, the next question is who benefits. The answer depends on two forces: distribution and trust.

Distribution is straightforward. ChatGPT has enormous reach, with leadership publicly citing roughly 800 million weekly active users in late 2025, and some market observers suggesting it may be closer to a billion in early 2026. That kind of installed base is the core asset of any store. When users already live inside the interface, discovery becomes frictionless, and switching costs rise.

Trust is trickier. In legal and medical categories, users do not just want convenience. They want assurance that the tool is safe, accurate enough, and accountable. That tends to concentrate the market around brands and institutions, not just clever prompts.

Now add competition. Similarweb-based analyses in early 2026 suggest ChatGPT’s share of generative AI chatbot site visits has declined over the last year, while Google Gemini’s share has risen meaningfully. Even if those trackers capture only parts of usage, the trend matters. A tightening competitive race pushes platforms to move beyond “best model” bragging rights and toward “best outcomes” in real workflows. That is exactly where paid agents fit.

So the economics likely tilt toward these monetization patterns:

- Subscription agents for ongoing needs (wellness management, chronic care navigation, contract drafting)

- Pay-per-task agents for episodic needs (appeal an insurance denial, prepare a small-claims filing packet)

- Enterprise agents sold to clinics, hospitals, and law firms with compliance controls

- Revenue share marketplaces where the platform takes a cut of agent revenue, plus placement incentives
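The revenue-share pattern in the last item reduces to simple arithmetic. The sketch below assumes a flat platform cut; the 30% rate, the price, and the subscriber count are made-up illustration values, since no actual terms have been announced.

```python
from decimal import Decimal


def builder_payout(gross: Decimal, platform_cut: Decimal) -> Decimal:
    """Return what the agent builder keeps after the platform's share."""
    if not Decimal("0") <= platform_cut <= Decimal("1"):
        raise ValueError("platform_cut must be between 0 and 1")
    return (gross * (Decimal("1") - platform_cut)).quantize(Decimal("0.01"))


# Hypothetical numbers for illustration only.
subscribers = 200
monthly_price = Decimal("10.00")
platform_cut = Decimal("0.30")  # assumed rate, not a published figure

payout = builder_payout(subscribers * monthly_price, platform_cut)
print(payout)  # 1400.00
```

Under these assumed numbers, the builder keeps 1,400.00 of 2,000.00 in monthly gross revenue. Placement incentives would change that split further, and they are not modeled here.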

But the store operator faces a classic marketplace dilemma: too permissive and risk rises, too strict and innovation slows. Legal and medical categories sharpen that dilemma because one scandal can trigger a broad crackdown.

| Likely Winners | Why They Win | Likely Losers | Why They Lose |
| --- | --- | --- | --- |
| Clinics and hospital systems | Can integrate agents into workflows with oversight | “AI doctor” consumer bots | Hard to justify safety claims without supervision |
| Law firms and legal tech vendors | Can sell productivity tools under attorney responsibility | Low-trust agent builders | Verification and audit expectations raise barriers |
| Compliance and audit providers | Testing and monitoring become mandatory to scale | SEO-only info publishers | Users get answers inside chat, not via search clicks |
| Platforms with payments and identity | They control distribution and trust signals | Users with low literacy | Over-trust risk rises when “paid” implies “safe” |

There is also a labor implication that deserves nuance. “Paid agents” do not automatically destroy professions. More often they unbundle them. Routine intake, first drafts, and administrative tasks become cheaper. The remaining human work shifts toward judgment, negotiation, and accountability. That can improve access for users who currently get no help at all, but it can also compress margins for commodity services.

A further implication is the rise of “agent bundling.” The most defensible products will likely bundle AI with humans, not AI alone. A legal agent that generates documents might include an optional review by a licensed attorney. A medical agent might produce a structured visit summary that a clinician can approve. In a store setting, that hybrid model is attractive because it aligns with platform policy and professional ethics while still offering automation.

Finally, there is a trust externality. If a store becomes the place where people “go” for answers, it can shape what expertise looks like culturally. Over time, that can influence everything from how patients negotiate care to how consumers handle disputes. A paid store is not just a business model. It is behavioral infrastructure.

What Comes Next In 2026: Likely Scenarios And Milestones To Watch

If the “GPT-5 Store leaks” narrative turns into a real product, 2026 is likely to be about governance and category design more than flashy launches. The most important milestones will be structural, and they will show up in how the store defines “approved” and “safe enough.”

Here are the most plausible scenarios, labeled clearly as forward-looking analysis rather than confirmed plans.

First, expect regulated categories to split into consumer and professional lanes. Consumer agents will emphasize education, preparation, and navigation, and they will repeatedly encourage professional consultation. Professional agents will emphasize efficiency, documentation, and integration, with explicit positioning as tools used under licensed oversight.

Second, expect verification to become a core store primitive. If a store lists “legal agents” or “medical agents,” it will need a way to indicate who built them, what credentials exist behind them, what data they use, and what evidence supports their claims. Verification may include institutions, licensed professionals, and audited vendors.
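One way to picture verification as a store primitive is a listing record that carries the four disclosures named above (who built the agent, what credentials stand behind it, what data it uses, and what evidence supports its claims) plus a check for gaps. This is a hypothetical sketch: `AgentListing` and its field names are assumptions for illustration, not a real store schema.

```python
from dataclasses import dataclass, field


@dataclass
class AgentListing:
    """Hypothetical listing record for a regulated-category agent."""
    name: str
    builder: str = ""                                       # who built it
    credentials: list[str] = field(default_factory=list)    # licenses, institutions
    data_sources: list[str] = field(default_factory=list)   # what data it uses
    evidence: list[str] = field(default_factory=list)       # evaluations, audits

    def missing_disclosures(self) -> list[str]:
        """Return the names of disclosures that are still empty."""
        checks = {
            "builder": bool(self.builder.strip()),
            "credentials": bool(self.credentials),
            "data_sources": bool(self.data_sources),
            "evidence": bool(self.evidence),
        }
        return [name for name, ok in checks.items() if not ok]


listing = AgentListing(
    name="Appeal Helper",
    builder="Example Clinic",
    data_sources=["user-uploaded denial letter"],
)
print(listing.missing_disclosures())  # ['credentials', 'evidence']
```

A store could refuse to publish a legal or medical listing until this list is empty. Checking that the disclosures are true, not just present, is the harder problem and sits outside this sketch.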

Third, expect testing and monitoring to become commercial differentiators. In 2024 and 2025, model benchmarks were a marketing tool. In 2026, category-specific evaluations and monitoring will matter more, because enforcement risk rises as soon as money changes hands and outcomes are high-stakes.

Fourth, watch for privacy segmentation. Health features already show region-by-region rollouts. As stores grow, we are likely to see paid tiers that promise stronger data protections, shorter retention, and clearer controls, especially for medical and legal workflows.

Fifth, anticipate a claims crackdown. The FTC’s posture suggests regulators will not hesitate to act when “AI doctor” or “AI lawyer” claims look deceptive or unsupported. A store that enables such claims without policing them invites enforcement and reputational damage.

| 2026 Milestone | What To Watch For | Why It Matters |
| --- | --- | --- |
| Clear monetization rules for apps and agents | Revenue share, subscriptions, paid upgrades | Determines whether builders treat it as a real business |
| Verified professional publishing | Credential badges, institutional onboarding | Enables legal and medical categories safely |
| Category-specific evaluation standards | Health and legal benchmarks, disclosure requirements | Anchors marketing claims in evidence |
| Regional compliance expansion | EEA and UK availability changes for sensitive features | Signals readiness for stricter privacy and AI regulation |
| Enforcement headlines | Actions against deceptive “AI advice” claims | Sets the boundary for how agents can be sold |

The reason this story matters is not the leak itself. It is the direction it implies. OpenAI is turning ChatGPT into a platform where third-party experiences can be discovered, reviewed, and eventually monetized. Once you have distribution, payments are the next frontier. Legal and medical agents are the most obvious value pools, and the most dangerous ones. The outcome in 2026 will hinge on whether platforms can sell “help” without selling false certainty.