China’s Cyberspace Administration has unveiled draft regulations targeting AI systems that mimic human personalities and emotions, signaling Beijing’s push to balance rapid tech innovation with stringent safety controls. These “Interim Measures for the Management of Artificial Intelligence Human-like Interactive Services” aim to oversee consumer-facing AI chatbots, virtual companions, and similar tools that engage users through text, voice, or visuals. Open for public comment until January 25, 2026, the rules reflect growing global concerns over AI’s psychological and societal impacts.

Core Provisions of the Draft Rules

The draft makes AI service providers bear full responsibility for safety across the entire product lifecycle, from design to deployment. This includes establishing robust systems for algorithm filing, data security audits, and personal information protection to prevent breaches or misuse. Providers must conduct security assessments before launching human-like features and file reports with provincial regulators once a service reaches thresholds such as one million registered users or 100,000 monthly active users.
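To make the reported thresholds concrete, here is a minimal Python sketch of the trigger check a provider might run. It assumes that “reaching” a threshold means meeting or exceeding it and that either threshold alone triggers the assessment. `ServiceMetrics`, `triggered_thresholds`, and `assessment_required` are hypothetical names, not part of any official tooling.

```python
from dataclasses import dataclass

# Thresholds as reported for the draft; assumed here, not read from any official API.
REGISTERED_USER_THRESHOLD = 1_000_000
MONTHLY_ACTIVE_USER_THRESHOLD = 100_000


@dataclass
class ServiceMetrics:
    """Hypothetical snapshot of a service's user counts."""
    registered_users: int
    monthly_active_users: int


def triggered_thresholds(metrics: ServiceMetrics) -> list[str]:
    """Return the names of thresholds reached (assumes >= counts as 'reaching')."""
    reasons = []
    if metrics.registered_users >= REGISTERED_USER_THRESHOLD:
        reasons.append("registered_users")
    if metrics.monthly_active_users >= MONTHLY_ACTIVE_USER_THRESHOLD:
        reasons.append("monthly_active_users")
    return reasons


def assessment_required(metrics: ServiceMetrics) -> bool:
    """Assume either threshold on its own triggers an assessment and report."""
    return bool(triggered_thresholds(metrics))


if __name__ == "__main__":
    # Few registrations but many active users: still triggers a filing.
    print(triggered_thresholds(ServiceMetrics(250_000, 120_000)))
```

Under these assumptions, `ServiceMetrics(250_000, 120_000)` yields `['monthly_active_users']`: the monthly-active figure alone is enough to cross the line.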

A standout feature targets psychological risks: companies must monitor users’ states, emotions, and dependency levels in real time. If extreme emotions or addictive patterns emerge, such as prolonged sessions that leave a user in distress, providers must intervene with warnings, session limits, or referrals to support services. Users must also receive notifications at login, every two hours, and whenever overdependence is detected, stating clearly that they are interacting with an AI, not a human.
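The disclosure schedule can be sketched the same way. The Python below computes when a single session would display the AI notice. It assumes the two-hour interval is counted from login and that overdependence flags arrive as timestamps from some separate detector; `reminder_times` and the notice wording are hypothetical.

```python
from datetime import datetime, timedelta

# Assumption: "every two hours" is measured from login, not from the last notice.
REMINDER_INTERVAL = timedelta(hours=2)
# Hypothetical wording; not the draft's official text.
AI_DISCLOSURE = "You are interacting with an AI, not a human."


def reminder_times(
    login: datetime,
    logout: datetime,
    overdependence_flags: list[datetime],
) -> list[datetime]:
    """Return the sorted moments in one session when AI_DISCLOSURE would be shown."""
    times = {login}  # notice at login
    t = login + REMINDER_INTERVAL
    while t <= logout:  # periodic notices while the session lasts
        times.add(t)
        t += REMINDER_INTERVAL
    # Extra notices whenever the (hypothetical) detector flags overdependence in-session.
    times.update(f for f in overdependence_flags if login <= f <= logout)
    return sorted(times)


if __name__ == "__main__":
    start = datetime(2026, 1, 5, 9, 0)
    end = datetime(2026, 1, 5, 13, 30)
    flag = datetime(2026, 1, 5, 10, 15)
    for moment in reminder_times(start, end, [flag]):
        print(moment.strftime("%H:%M"), AI_DISCLOSURE)
```

A 9:00 to 13:30 session with one overdependence flag at 10:15 yields notices at 09:00, 10:15, 11:00, and 13:00. Collecting times in a set avoids a double notice when a flag coincides with a scheduled reminder.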

Content generation faces strict “red lines”: no output may endanger national security, spread rumors, incite violence, contain obscenity, or violate “core socialist values.” Training data must align with national standards and exclude subversive or disruptive material, while algorithms undergo ethical reviews to ensure transparency and mitigate bias.

Scope and Applicability

These rules apply broadly to any public-facing AI service in China that simulates human traits, such as personality quirks, thought processes, or emotional responses, through text, voice, or visuals. This covers popular products such as virtual-girlfriend apps, mental health bots, and humanoid avatars already gaining traction among young users. Exemptions may exist for non-public or non-emotional tools, but the focus remains on consumer products that blur the boundary between human and machine.

Providers face filing obligations with the Cyberspace Administration of China (CAC), China’s top internet overseer, which leads enforcement alongside other agencies. Violations could trigger fines, service suspensions, or bans, building on prior penalties for non-compliant AI. The draft emphasizes lifecycle accountability, meaning even updates or expansions require reassessments.

China’s Broader AI Regulatory Evolution

This initiative caps a flurry of AI controls since 2023, when the CAC rolled out the “Interim Measures for the Management of Generative Artificial Intelligence Services,” mandating labeling, safety tests, and content filters for tools such as chatbots. Earlier, Beijing targeted deepfakes and recommendation algorithms, fining violators for spreading misinformation. The 2017 “New Generation AI Development Plan” positioned AI as a national priority, fueling investment while demanding ethical guardrails.

Recent moves include guidelines for AI in government affairs and special enforcement campaigns against improperly sourced training data and unlabeled AI-generated content. Unlike the EU’s risk-based AI Act, China’s approach prioritizes state security, social harmony, and ideological alignment, often through CAC-led drafts refined via public input. Experts note that the focus on human-like AI responds to domestic trends: surveys show millions of young Chinese forming emotional bonds with AI companions amid social pressures.

Psychological and Ethical Risks Addressed

Human-like AI thrives in China, with apps from companies such as ByteDance and Tencent offering empathetic chats that, for some users, stand in for therapy sessions. Yet the risks are real: users report addiction, blurred lines between fantasy and reality, and deepening isolation, echoing cases where virtual relationships supplanted real ones. The draft’s intervention protocols, which detect “extreme emotions” via sentiment analysis, aim to curb this by requiring pop-up warnings such as “Reduce usage to protect mental health.”
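The draft, as described here, names the interventions (warnings, session limits, referrals) but not how to choose among them. One plausible design is tiered escalation: a risk score, assumed to come from an upstream emotion or dependency detector that is not shown, is mapped to a single action. The cut-off values, the 0 to 1 score range, and the names below are hypothetical.

```python
from enum import Enum


class Intervention(Enum):
    NONE = "none"
    WARNING = "warning"
    SESSION_LIMIT = "session_limit"
    REFERRAL = "referral"


# Hypothetical cut-offs on a 0.0-1.0 risk score; the draft publishes no numbers.
WARNING_AT = 0.5
LIMIT_AT = 0.7
REFERRAL_AT = 0.9

# Example warning wording quoted in the article.
WARNING_TEXT = "Reduce usage to protect mental health."


def choose_intervention(risk_score: float) -> Intervention:
    """Map a risk score to the strongest intervention whose cut-off it meets."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be in [0, 1]")
    # Check the most severe tier first so a higher score never gets a milder response.
    if risk_score >= REFERRAL_AT:
        return Intervention.REFERRAL
    if risk_score >= LIMIT_AT:
        return Intervention.SESSION_LIMIT
    if risk_score >= WARNING_AT:
        return Intervention.WARNING
    return Intervention.NONE


if __name__ == "__main__":
    for score in (0.2, 0.55, 0.75, 0.95):
        action = choose_intervention(score)
        note = WARNING_TEXT if action is Intervention.WARNING else ""
        print(score, action.value, note)
```

Ordering the checks from most to least severe keeps the mapping monotonic; the hard part in practice, producing a trustworthy score, is deliberately left out of this sketch.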

Ethically, the “socialist values” mandate is meant to ensure that AI promotes patriotism and stability, and it bans outputs that challenge authority. Data protections guard against leaks of sensitive interactions, which matters because emotional disclosures could fuel blackmail or profiling. Critics abroad decry censorship baked into the technology, but proponents argue it prevents harms seen in unregulated Western bots that spread hate speech or disinformation.

Implications for AI Companies and Users

Domestic giants such as Alibaba and Baidu must retrofit their products, investing in monitoring technology that scans usage patterns without invading privacy, a difficult balance to strike under China’s data laws. Foreign firms eyeing China’s market face compliance hurdles, though adapting could lead them to export safer models globally. Startups could innovate around the rules, perhaps by emphasizing non-emotional utilities to avoid scrutiny.

Users gain protections: clearer disclosures combat deception, while addiction checks encourage healthier habits. However, overreach risks stifling companionship tools that help lonely groups such as the rural elderly or stressed urban workers. The public consultation may bring revisions, but the CAC’s track record suggests firm enforcement once the comment period closes in January.

Global Context and Comparisons

China leads in granular AI rules, in contrast to the U.S.’s lighter-touch approach, which relies on voluntary guidelines. The EU’s AI Act requires conformity assessments for high-risk AI but imposes no comparable duty to monitor users’ emotions. Singapore and South Korea are eyeing similar rules for emotional AI amid booms in companion bots. Beijing’s model also influences Belt and Road partners, exporting governance standards via tech aid.

Internationally, these rules spotlight an ethical dilemma: should AI act as a nanny for its users? China’s vast pool of user data positions it to pioneer scalable solutions. Yet tensions are rising: U.S. firms decry “unfair” barriers, while hawks fear dual-use risks in emotion-manipulating technology.

Potential Challenges and Criticisms

Implementation hurdles loom. Real-time emotion detection demands vast datasets, raising concerns about accuracy and bias: could algorithms misread cultural nuances? Providers complain about the costs, especially smaller ones that lack resources for constant reviews. Privacy advocates warn of surveillance creep, since continuous monitoring of interactions sits uneasily with data-minimization principles.

Critics label the ideological clauses as censorship tools that could mute dissent in chatbot conversations. Enforcement of past rules has been inconsistent, with big tech companies escaping penalties while smaller firms bear the brunt. Still, voluntary compliance pilots could smooth the rollout, as seen with generative AI filings.

Industry Reactions and Market Impact

Tech stocks dipped slightly after the announcement, with AI firms signaling quick adaptations. ByteDance pledged to comply, touting its existing safeguards. Analysts predict a compliance boom for vendors of monitoring software-as-a-service (SaaS), boosting cybersecurity niches. Globally, safer Chinese AI could undercut less regulated competitors, reshaping exports.

Future Outlook for Human-Like AI in China

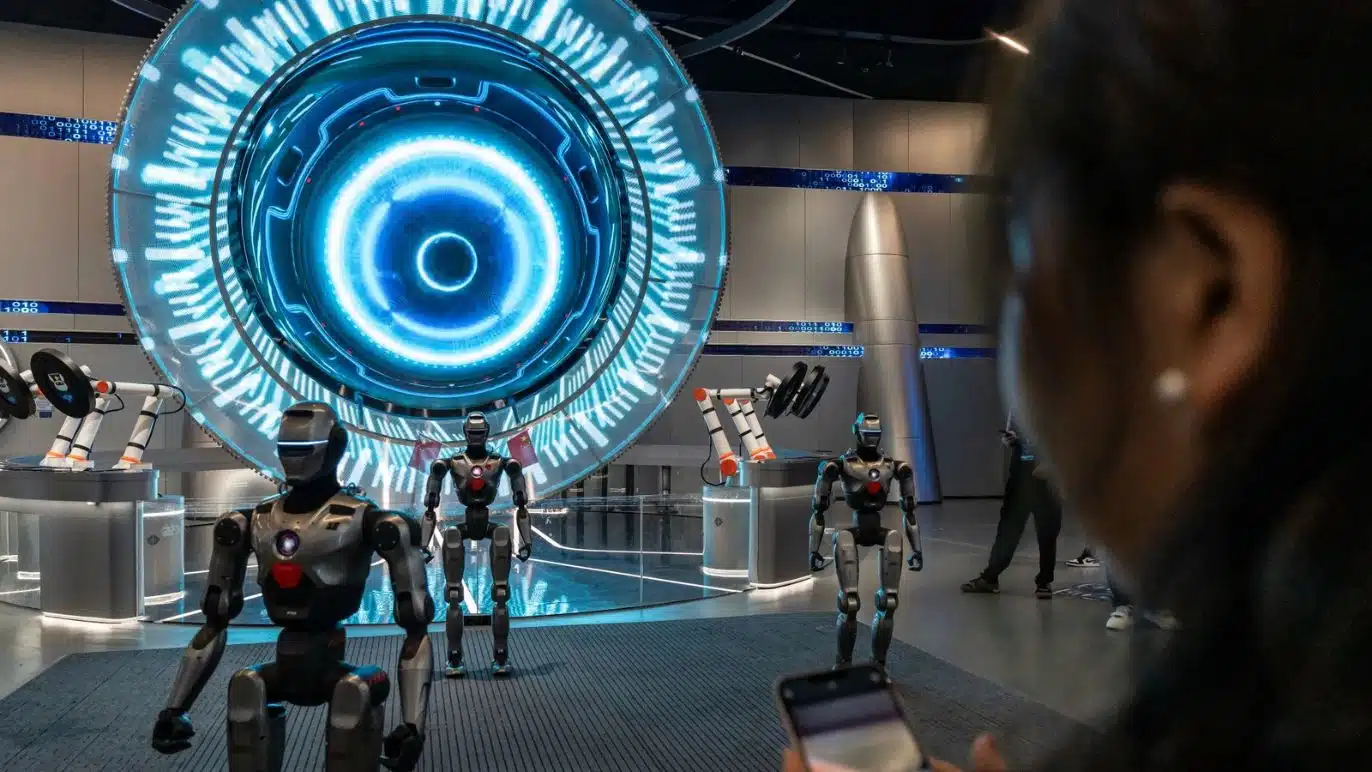

After the comment period, finalized rules could arrive by mid-2026, with phased enforcement that favors incumbents. This would cement China’s dual role as an AI superpower and an enforcer of “responsible innovation.” In the long term, it may spawn breakthroughs in ethical AI, such as verifiable empathy engines. As humanoid robots evolve and acquire these human-like traits, the rules could expand to blend digital and physical oversight.

Beijing’s strategy underscores a philosophy: unleash AI’s economic might while harnessing it for social harmony. With a population of 1.4 billion, China’s successes here could serve as a blueprint for global norms, and its missteps as cautionary tales. Stakeholders are watching closely as China scripts the next chapter of AI.