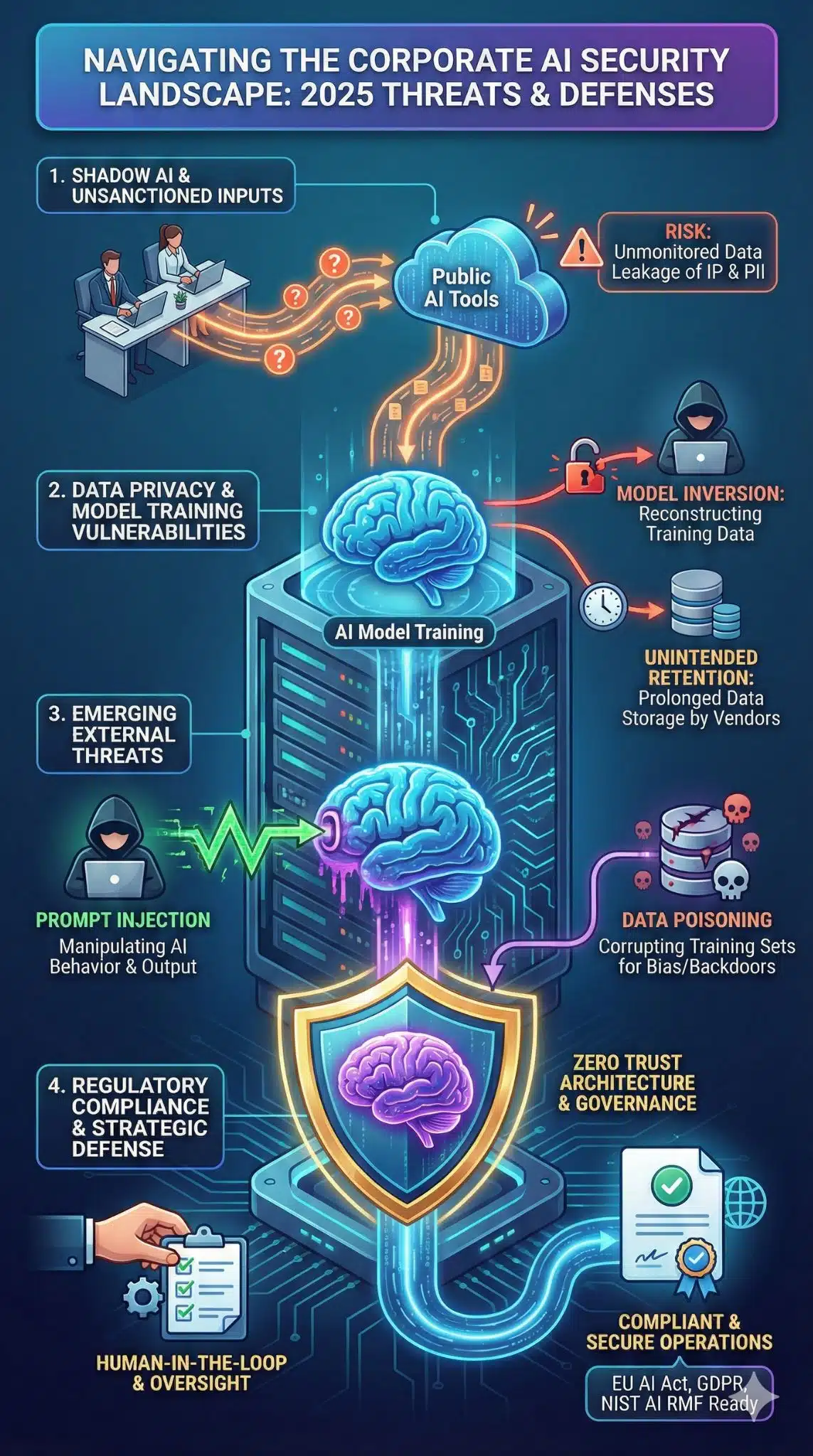

The rapid adoption of artificial intelligence in the corporate sector has fundamentally shifted operational landscapes. While these advancements promise unprecedented efficiency, the security implications of AI-integrated business tools have emerged as a primary concern for CISOs and business leaders alike. As organizations race to integrate Large Language Models (LLMs) and automated workflows, they often inadvertently expand their attack surface.

In 2025, the threat landscape is no longer hypothetical. From “Shadow AI” usage by employees to sophisticated prompt injection attacks, the vulnerabilities are as dynamic as the technology itself. Understanding these risks is not just about compliance; it is about business continuity. This analysis explores the hidden dangers of corporate AI adoption and provides a roadmap for securing your digital infrastructure against emerging threats.

The Rise of Shadow AI in the Workplace

One of the most insidious security implications of AI-integrated business tools is the phenomenon known as “Shadow AI.” This occurs when employees use unsanctioned generative AI tools to expedite tasks—such as drafting emails, debugging code, or summarizing meeting notes—without IT department approval or oversight.

When sensitive corporate data is pasted into public-facing LLMs, it often leaves the secure enterprise environment. Unlike traditional software, many AI models retain input data for training purposes, potentially exposing trade secrets or customer PII (Personally Identifiable Information) to the public domain. Organizations in 2025 are finding that their biggest security gap is not a firewall vulnerability, but well-meaning employees trying to be more productive.

Shadow AI Risk Profile

| Risk Factor | Description | Potential Business Impact |

| Data Leakage | Proprietary code or financial data entered into public chatbots. | Loss of IP; violation of NDAs. |

| Compliance Violation | Processing regulated data (e.g., HIPAA, GDPR) via unvetted tools. | Heavy regulatory fines; legal action. |

| Lack of Visibility | IT teams cannot patch or secure tools they don’t know exist. | Unnoticed breaches; delayed incident response. |

Data Privacy and Model Training Concerns

The integration of AI tools deeply into business workflows raises critical questions about data sovereignty and usage. When an enterprise connects its internal databases to a third-party AI service, the security implications of AI-integrated business tools extend to how that data is processed, stored, and potentially “memorized” by the model.

Model inversion attacks allow bad actors to query an AI system in a way that tricks it into revealing the data it was trained on. If a business tool was fine-tuned on unredacted sensitive documents, a competitor or hacker could theoretically extract that information through persistent prompting. Furthermore, the “black box” nature of many third-party AI solutions means businesses often lack clarity on whether their data is being used to train the vendor’s next-generation models.

Data Privacy Vulnerabilities

| Vulnerability Type | Mechanism | Prevention Strategy |

| Model Inversion | Reconstructing training data from model outputs. | Differential privacy techniques; strict output filtering. |

| Data Residency | AI vendors processing data in non-compliant jurisdictions. | Geo-fencing data; local LLM deployment. |

| Unintended Retention | Vendors storing API inputs for longer than necessary. | Zero-retention agreements; enterprise API keys. |

Emerging Threat Vectors: Prompt Injection and Poisoning

As businesses build applications on top of LLMs, they face novel attack vectors that traditional cybersecurity tools are ill-equipped to handle. Prompt injection involves a malicious user crafting an input that overrides the AI’s safety instructions, causing it to perform unauthorized actions, such as revealing hidden system prompts or executing backend commands.

Another growing threat is data poisoning, where attackers corrupt the training datasets used to fine-tune enterprise models. By subtly altering the data, attackers can embed “backdoors” that trigger specific, harmful behaviors only when a certain keyword or pattern is present. For a company relying on AI for fraud detection or hiring, a poisoned model could be manipulated to bypass security checks or introduce bias, undermining the integrity of the entire operation.

New AI-Specific Threats

| Threat Vector | Definition | Real-World Example |

| Prompt Injection | Manipulating AI inputs to bypass controls. | Tricking a customer service bot into refunding unauthorized purchases. |

| Data Poisoning | Corrupting training data to skew results. | Manipulating a spam filter’s learning set to let phishing emails through. |

| Supply Chain Attack | Compromising open-source models or libraries. | Injecting malicious code into a popular Hugging Face model repository. |

Regulatory Compliance and Governance

The regulatory environment surrounding the security implications of AI-integrated business tools is tightening globally. The full implementation of the EU AI Act in 2025 has set a new standard, categorizing AI systems by risk level and mandating strict transparency and human oversight for high-risk applications (e.g., AI in HR or credit scoring).

Non-compliance is no longer just a legal risk; it is a security risk. Regulatory frameworks often enforce “Privacy by Design,” requiring organizations to map data flows and implement rigid access controls. Failing to adhere to these standards not only invites massive fines—up to 7% of global turnover under the EU AI Act—but also signals a weak governance structure that cybercriminals are quick to exploit.

Compliance Checklist 2025

| Regulation | Key Requirement for AI | Action Item |

| EU AI Act | Classification of high-risk systems; human oversight. | Conduct an AI risk inventory; appoint an AI ethics officer. |

| GDPR | Right to explanation; data minimization. | Ensure AI decisions are explainable; limit data collection. |

| NIST AI RMF | Map, Measure, Manage, and Govern AI risks. | Adopt the NIST framework for internal AI audits. |

Final Thoughts

The security implications of AI-integrated business tools are complex, but they are manageable with a proactive and layered approach. As we move through 2025, the organizations that will succeed are not those that block AI, but those that wrap it in robust governance and security protocols.

By acknowledging the risks of Shadow AI, defending against prompt injection, and adhering to evolving regulations, businesses can harness the transformative power of AI without compromising their digital safety. The future belongs to the vigilant.