Goku AI Text-to-Video lands as the video-model arms race shifts from “wow” clips to workflow control. With OpenAI’s Sora 2 already on the market and platforms racing to automate ads, Goku’s benchmark strength and ad-oriented direction point to a near future where video supply explodes and trust rules tighten.

How We Got Here: From Diffusion Clips to Video Foundation Models

Text-to-video has followed a familiar arc: research novelty, then creator toys, then enterprise-grade pipelines. Early systems struggled with temporal coherence, camera motion, and “physics” that looked right for a second and then fell apart. What changed is not just incremental quality, but model ambition. The industry is treating video as a foundation modality, alongside text and images, which means longer context windows, better controllability, and integration with audio, editing tools, and distribution channels.

OpenAI’s Sora 2 is a good marker of that transition. It positions video generation as both a creative tool and a step toward richer world simulation, while explicitly flagging new risk surfaces like nonconsensual likeness and misleading content. OpenAI also describes a staged rollout with restrictions around photorealistic person uploads and stronger safeguards for content involving minors. That is a sign the frontier is no longer “can we generate this,” but “can we deploy this at scale without breaking social trust.”

Goku enters precisely at this inflection point. Developed by researchers affiliated with HKU and ByteDance, it argues that “industry-grade performance” comes from a full-stack approach: data curation, architecture choices, training infrastructure, and a newer generative formulation. Instead of competing only on viral demos, it competes on what production teams actually feel: consistency, controllability, cost, and throughput.

Why Goku Matters Technically

Rectified Flow Meets Transformer

Goku is framed as a family of rectified-flow Transformer models for joint image and video generation. The paper reports a curated dataset of about 36M video-text pairs and 160M image-text pairs, and model sizes of 2B and 8B parameters. That combination matters because it signals the “scale play” is no longer limited to closed labs. If a social platform can train (or fine-tune) very large video models, the competitive boundary shifts from model quality alone to who controls distribution and feedback loops.

Rectified flow is part of a broader trend: exploring alternatives and refinements to classic diffusion sampling that can improve stability and speed. The practical payoff is not academic elegance but product feasibility: faster or more stable generation changes what can be productized. If generation gets cheaper and more reliable, the center of gravity moves from “single masterpiece shot” to “millions of variations,” which is exactly how modern advertising and short-form platforms operate.
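To make the rectified-flow idea concrete, here is a minimal sketch in plain Python. It is not Goku's implementation. It assumes one common convention (noise at t=0, data at t=1), uses a toy 4-dimensional vector in place of a video latent, and swaps the trained network for a hypothetical oracle velocity function. All function names are illustrative.

```python
import random


def interpolate(x0, x1, t):
    """Point on the straight path between noise x0 and data x1 at time t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0, x1):
    """Regression target for the velocity field: constant along the straight path."""
    return [b - a for a, b in zip(x0, x1)]


def euler_sample(x0, velocity_fn, num_steps):
    """Integrate dx/dt = velocity_fn(x, t) from t=0 to t=1 with fixed-step Euler."""
    x = list(x0)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        v = velocity_fn(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]  # toy stand-in for a noise latent
data = [0.5, -1.0, 2.0, 0.0]                     # toy stand-in for a data latent


def oracle_velocity(x, t):
    # Assumption: a perfect "model" that returns the true straight-line velocity.
    return target_velocity(noise, data)


for steps in (1, 4, 32):
    sample = euler_sample(noise, oracle_velocity, steps)
    max_error = max(abs(s - d) for s, d in zip(sample, data))
    print(f"steps={steps:2d} max_error={max_error:.2e}")
```

With a perfectly straight path, even one Euler step lands on the target up to floating-point error. A trained model only approximates that straightness, so real samplers still take multiple steps, but straighter paths are the intuition behind cheaper sampling.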

Joint Image-Video Training as a Data Advantage

Goku emphasizes unified representation: a joint image-video latent space and full attention to support joint training. In a platform context, this is strategically aligned with how content is produced today. Most video workflows start with images: product shots, keyframes, thumbnails, catalog photography, creator selfies. A model that treats images and videos as a single continuum can be used as a conversion engine: image to motion, still to story, catalog to campaign.

That is also where data strategy becomes a moat. Platforms sit on oceans of short-form clips and engagement labels. Even if models are “open enough” to replicate techniques, the best training signals and the most relevant distribution feedback are concentrated in a few ecosystems.

Benchmark Signaling: What the Scores Really Say

Goku’s repo highlights strong results across common benchmarks: 0.76 on GenEval and 83.65 on DPG-Bench for text-to-image generation, and 84.85 on VBench for text-to-video. Its VBench snapshot positions it among top systems in a crowded field that includes commercial and open offerings.

But benchmarks are double-edged. They measure important proxies (prompt alignment, temporal stability), yet production value often depends on hard-to-score traits: editability, identity consistency, style-lock across a campaign, and “legal safety” of training data. Goku’s bigger signal is not that it tops a chart. It is that serious players are converging on a similar playbook: huge curated corpora, architecture upgrades, and end-to-end tooling.

Capability Timeline Snapshot

| Milestone | What Changed | Why It Mattered |
|---|---|---|
| Dec 9, 2024: Sora System Card | Safety framing for video generation | Signaled deployment constraints would shape the market |
| Feb 7, 2025: Goku appears on arXiv | Scale claims, joint image-video approach | Marked a “platform-scale” entrant into video generation |
| Sep 30, 2025: Sora 2 | Video + audio, realism, steerability | Raised expectations for cinematic fidelity and safety controls |
| Sep 2025 to Jan 2026: EU work on labeling | Drafting a code of practice for marking AI content | Points to enforcement-era transparency requirements |
| Aug 2026: Transparency obligations apply | AI-generated or manipulated content must be marked | Pushes provenance from “nice to have” to compliance |

Goku AI Text-to-Video and the Platform Advantage

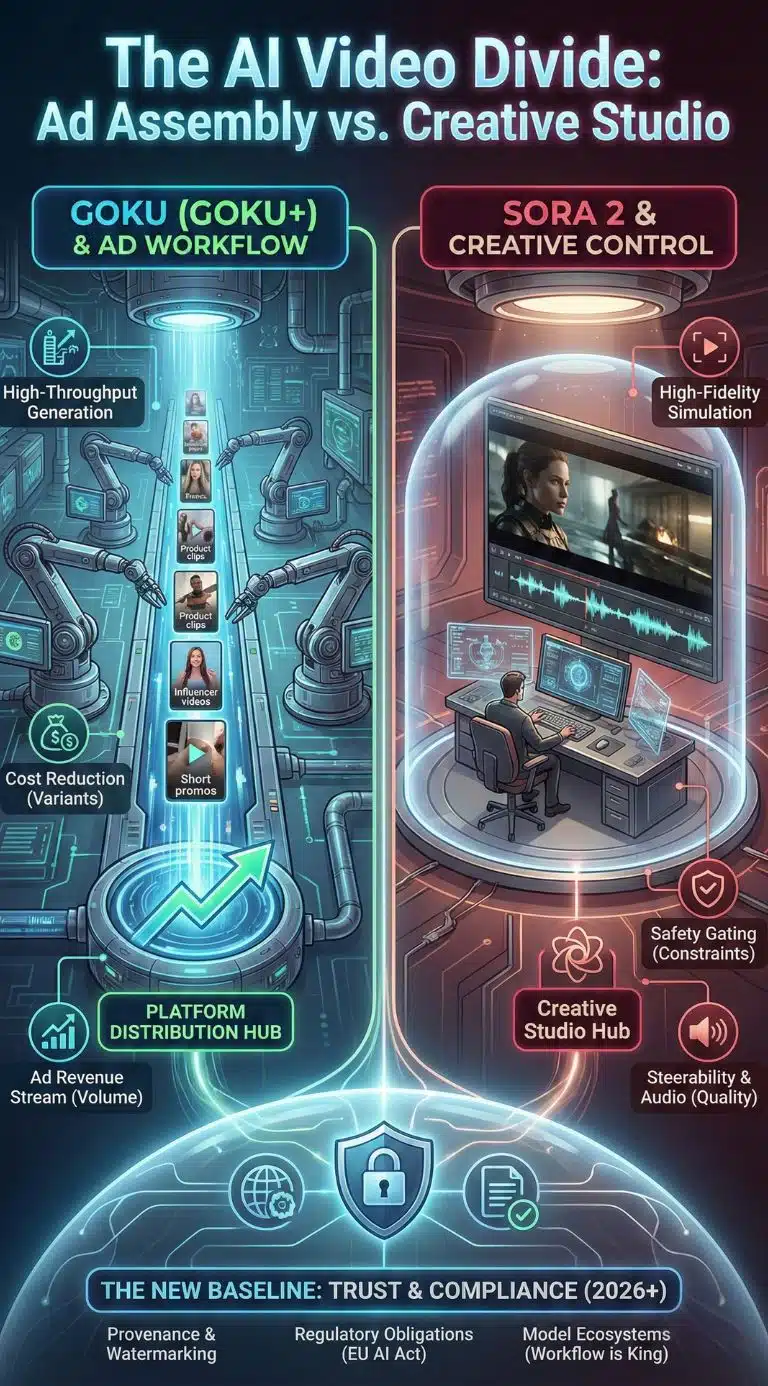

The most important context for Goku is not just “another model.” It is who sits behind it and what that implies for go-to-market. OpenAI’s strategy has leaned into high-end creative tooling and controlled access, with explicit safety gating and staged deployment.

ByteDance’s incentive structure is different. A platform business wins by increasing content supply, maximizing engagement, and lowering the cost of creative iteration for advertisers and creators. That is why coverage of Goku often foregrounds an ad-oriented variant, Goku+, and ByteDance’s claim that it could cut production costs dramatically compared to hiring creators for ad clips. Even treated as a directional claim rather than audited fact, it reveals the strategic target: not film studios first, but the advertising assembly line.

This lines up with the broader advertising automation wave. WPP projects global ad revenue at about $1.14 trillion in 2025, with further growth expected in 2026. If ad budgets keep growing while creative complexity rises (more formats, more variants, more personalization), generative video becomes less a novelty and more a structural input.

Advertising Economics Snapshot

| Indicator | Latest Figure | What It Implies For Generative Video |
|---|---|---|
| Global ad revenue (2025 projection) | ~$1.14T | A massive spend pool that rewards creative throughput |
| Global ad revenue growth (2026 projection) | ~7.1% | Pressure to scale creative production without scaling headcount |
| Claimed Goku+ cost reduction | “up to 99%” (company claim) | Aiming to make video ads as cheap and fast as image ads |
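The arithmetic behind these figures can be sketched in a few lines of Python. The function names are illustrative, and the outputs are derived values, not numbers quoted by WPP or ByteDance. The 99% figure is treated as the claimed upper bound, so the resulting multiplier is a best case.

```python
def implied_next_year(revenue: float, growth_rate: float) -> float:
    """Apply one year of growth to a revenue figure."""
    return revenue * (1.0 + growth_rate)


def variants_per_budget(cost_reduction: float) -> float:
    """How many clips a fixed budget buys after a fractional cost reduction."""
    if not 0.0 <= cost_reduction < 1.0:
        raise ValueError("cost_reduction must be in [0, 1)")
    return 1.0 / (1.0 - cost_reduction)


# Figures from the table above; revenue is in trillions of US dollars.
revenue_2025 = 1.14
growth_2026 = 0.071
claimed_reduction = 0.99  # "up to 99%" is a company claim, not an audited figure

print(f"Implied 2026 ad revenue: ~${implied_next_year(revenue_2025, growth_2026):.2f}T")
print(f"Clips per unit of budget at the claimed ceiling: ~{variants_per_budget(claimed_reduction):.0f}x")
```

Taken at face value, the claim means the budget for one ad clip would cover roughly a hundred, which is why the strategic target is variant volume rather than a single polished spot.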

The Competitive Map: The New Baseline Is Multimodal

The competitive set has split into three lanes:

- Premium cinematic systems that optimize for realism, long-horizon coherence, and higher-end creative control.
- Platform-native systems that optimize for ad variants, vertical formats, influencer-like “digital humans,” and one-click distribution.
- Ecosystem systems built around provenance and workflow tooling (editing, rights management, enterprise governance).

Sora 2 represents the first lane, emphasizing realism, synchronized audio, and steerability, while acknowledging risk and restricting certain inputs in early rollout phases.

Google’s Veo direction highlights the third lane: expanding video generation while coupling it to provenance tooling like watermarking and detection. The message is clear: future video models will ship with built-in trust layers because regulators, platforms, and brands will demand them.

Goku’s posture suggests a strong pull toward lane two: short-form, ad-grade, high-volume generation where the distribution edge matters. That is why “challenging Sora” is not only about who has the best model today. It is about whether the market’s center of profit is premium filmmaking or automated marketing.

Model Positioning Snapshot

| Player | Primary Product Gravity | Deployment Framing |
|---|---|---|
| OpenAI Sora 2 | High-fidelity creative video and audio | Limited invitations, safety gating, future API |
| Google Veo | Video generation tied to provenance | Emphasis on watermarking/detection approaches |
| Goku | Joint image-video generation | Research-to-product bridge, benchmark push |
| Goku+ | Ad-focused generation | Cost-reduction narrative for advertising |

Cost Curves, Market Growth, and the Coming Video Supply Shock

Two forces are colliding:

- Demand pull: Ads, e-commerce, and creator economies want more video, in more formats, with more personalization.
- Supply shock: Generative systems are about to flood the market with near-infinite variants.

Market sizing is messy because “AI video” spans analytics, editing, and generation, but multiple trackers show rapid growth. Fortune Business Insights estimates the AI video generator market at $614.8M in 2024, projecting $716.8M in 2025 and $2.56B by 2032. Grand View Research estimates a broader AI video market at $3.86B in 2024, projecting $42.29B by 2033. Even allowing for methodology differences, the direction is consistent: this category is scaling fast.

Separately, McKinsey’s widely cited work on generative AI points out that a large share of potential value sits in marketing and sales and related functions, which is where video generation fits most cleanly. The implication is that video will be one of the first modalities pushed from pilots into scaled enterprise usage, because the ROI story is easier to tell than for many “agentic” workflows.

The strategic consequence is uncomfortable: if video becomes cheap, then attention becomes the scarce resource. That tends to favor platforms with recommendation engines, ad-targeting infrastructure, and native measurement. In that world, the best model does not automatically win. The best distribution wins.

Market Growth Snapshot

| Category | 2024 | 2025 | Longer-Term Projection |
|---|---|---|---|
| AI video generator market (Fortune BI) | $614.8M | $716.8M | $2.56B by 2032 |
| AI video market (Grand View) | $3.86B | n/a | $42.29B by 2033 |
| GenAI annual economic potential (McKinsey) | n/a | n/a | $2.6T–$4.4T value range across use cases |
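One way to compare the two market trackers despite their different scopes is to convert each projection into an implied compound annual growth rate (CAGR). The Python sketch below does that with the start and end values from the table above. The rates it prints are derived here, not quoted from either firm, and published CAGRs may use different base years.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# (label, start value in USD, end value in USD, number of years between them)
projections = [
    ("AI video generator market (Fortune BI), 2025 to 2032", 716.8e6, 2.56e9, 2032 - 2025),
    ("AI video market (Grand View), 2024 to 2033", 3.86e9, 42.29e9, 2033 - 2024),
]

for label, start, end, years in projections:
    print(f"{label}: {cagr(start, end, years):.1%} per year")
```

The implied rates come out at roughly 20% and just over 30% per year. So the trackers disagree on the size of the category but agree that it compounds quickly.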

Trust, Regulation, and Provenance Move from Feature to Requirement

As generation quality improves, the policy environment tightens. The EU AI Act’s transparency obligations, summarized in official EU guidance, point toward a world where AI-generated or manipulated content must be clearly marked and detectable. Separate EU work on a code of practice for marking and labeling AI-generated content underscores that the practical details are being operationalized now, with applicability dates and milestones that push industry toward compliance-grade labeling.

In parallel, standards bodies are trying to create a machine-readable truth layer. C2PA’s specification for Content Credentials is explicitly designed to provide provenance and authenticity information for digital media in a scalable, opt-in way. Even if adoption is uneven, the direction is clear: provenance metadata will be part of the default expectations for newsrooms, advertisers, and platforms.
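The core idea behind machine-readable provenance, binding a claim about a file to that file's exact bytes, can be illustrated with Python's standard library. This is a deliberately simplified sketch. The record layout and field names are hypothetical and are not the C2PA manifest format, and the cryptographic signing step that real Content Credentials rely on is omitted.

```python
import hashlib
import json


def build_record(content: bytes, generator: str) -> dict:
    """Create a hypothetical provenance record bound to the content by its SHA-256 hash."""
    return {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "ai_generated": True,
        "generator": generator,  # illustrative field, not a C2PA assertion name
    }


def matches(content: bytes, record: dict) -> bool:
    """Check that the content is byte-identical to what the record describes."""
    return hashlib.sha256(content).hexdigest() == record["content_sha256"]


clip = b"placeholder bytes standing in for an encoded video file"
record = build_record(clip, "hypothetical-video-model")

print(json.dumps(record, indent=2))
print("original matches:", matches(clip, record))
print("edited copy matches:", matches(clip + b"!", record))
```

A hash alone only detects changes. It does not prove who issued the record, which is why production provenance systems add signatures and a trust model on top.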

This matters for Goku-versus-Sora narratives because “best pixels” is not the only axis that buyers will care about. Brands will increasingly ask:

- Can we prove what this clip is and where it came from?
- Can we show a compliance story across markets?
- Can we avoid likeness violations and reputational blowback?

OpenAI’s Sora 2 system card is essentially an admission that safety constraints shape product design. It describes mitigations, staged access, and restrictions that prioritize avoiding harm over maximal capability exposure. Platform-driven models will face the same reality, especially as enforcement dates approach.

Governance Toolkit Snapshot

| Mechanism | What It Does | Who Is Pushing It |
|---|---|---|
| Transparency obligations | Require disclosure/marking of synthetic content | EU AI Act guidance and service desk |
| Industry provenance metadata | Cryptographic provenance and edit history | C2PA specs and ecosystem |
| Deployment safety gating | Limits risky inputs and enforces safeguards | Sora 2 rollout approach |

Expert Perspectives: Two Competing Readings of What Comes Next

The Optimistic Reading: A Productivity Flywheel for Creative Work

The pro-automation view is that we are finally making video production “software-like.” Marketing teams can iterate quickly, localize campaigns, and test variants without turning every experiment into a full shoot. Market projections and enterprise research generally support this direction, arguing that content generation in customer operations and marketing is among the most immediate value pools for generative AI.

Under this view, Goku’s significance is that it pushes video generation toward high-throughput, real-world usage, not just cinematic showcases. If cost drops enough, businesses that previously could not afford video ads will enter the market, expanding total demand.

The Skeptical Reading: Attention Pollution and a Trust Tax

The counterargument is that cheap video can create a flood of low-quality content, raising the cost of moderation, brand safety, and user trust. In 2026, even mainstream business commentary is increasingly framing AI as entering a “show me the money” phase where scaling usage is harder than scaling demos. That skepticism is not a rejection of the tech. It is a warning that distribution and governance will be decisive bottlenecks.

In this reading, the “trust tax” rises: platforms and brands must spend more on provenance, detection, moderation, and legal risk management. The more realistic the content, the higher the stakes.

What Creators, Agencies, and Studios Should Do Now

For practitioners, the question is not whether Goku beats Sora on a chart. It is what changes in the operating model of content.

- Shift from single assets to asset systems. Design campaigns as prompt libraries plus style guides plus approval gates.
- Invest in provenance defaults. Treat content credentials, labeling, and audit trails as part of production, not compliance afterthoughts.
- Build “human-in-the-loop” where it matters. Keep humans focused on brand voice, truth claims, and creative direction, while automation handles variants.
- Prepare for likeness and consent constraints. Sora 2’s restrictions are a preview of what regulators and platforms will require across the market.
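The first and third recommendations in the list above can be sketched as a small data pipeline: a prompt library expanded into variants, a style guide applied uniformly, and an approval gate that routes risky prompts to a person. Everything in this Python example is hypothetical, including the `Variant` type, the `build_variants` helper, and the review terms. It generates prompts only and calls no video model.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Variant:
    prompt: str
    aspect_ratio: str
    needs_human_review: bool


def build_variants(templates, products, aspect_ratios, style_suffix, review_terms):
    """Expand a prompt library into variants and flag those that need human approval."""
    variants = []
    for template, item, ratio in product(templates, products, aspect_ratios):
        # Style guide: the same suffix is appended to every prompt in the campaign.
        prompt = f"{template.format(product=item)} {style_suffix}"
        # Approval gate: prompts containing claim-like terms go to a human reviewer.
        flagged = any(term in prompt.lower() for term in review_terms)
        variants.append(Variant(prompt, ratio, flagged))
    return variants


variants = build_variants(
    templates=["{product} on a beach at sunrise,", "The best {product} for runners,"],
    products=["trail sneaker", "water bottle"],
    aspect_ratios=["9:16", "16:9"],
    style_suffix="warm palette, handheld camera",
    review_terms=["best", "guaranteed"],
)

for v in variants:
    status = "REVIEW" if v.needs_human_review else "auto"
    print(f"[{status}] {v.aspect_ratio} {v.prompt}")
```

Keeping the gate as a simple, auditable rule reflects the point about human attention: automation handles the combinatorics, while people review only the variants that make claims.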

Future Outlook: The Milestones That Will Decide the Winner

Looking ahead, several concrete milestones are likely to shape the next phase:

- Compliance deadlines become product deadlines. As EU transparency obligations become applicable in August 2026, model providers and deployers will be pressured to ship reliable marking, disclosure, and detection support.
- Ad-tech integration becomes the main battleground. The biggest budgets are in marketing, and WPP’s projections suggest that ad spend remains resilient. If Goku-like systems plug directly into campaign creation and platform delivery, they may outcompete “better” models that live outside the ad stack.
- Audio and controllability become the baseline expectation. Sora 2 is explicitly positioned as video plus audio with improved realism and steerability, raising what users consider “normal.” Competitors will have to match this standard, not just in demos but in reliable editing.
- Benchmarks matter less than workflows. VBench-style scores can signal maturity, but procurement will increasingly revolve around brand safety, provenance, latency, and unit economics. Goku’s real “challenge” to Sora is that it points to a future where video generation is judged like ad infrastructure, not like film craft.

Prediction, clearly labeled: Analysts should expect 2026 to be less about a single “best model” and more about model ecosystems where generation, editing, provenance, and distribution are bundled. In that environment, Goku AI Text-to-Video’s most disruptive potential is not cinematic supremacy. It is accelerating the shift to automated, variant-driven video at platform scale.

Final Thoughts

Goku AI Text-to-Video matters because it reinforces a deeper industry pivot: generative video is moving from spectacle to infrastructure. Sora 2 shows what premium, safety-conscious deployment can look like. Goku signals what happens when platform incentives and ad economics drive the roadmap. The next phase will be decided by trust tooling, compliance timelines, and distribution leverage as much as by raw generation quality.