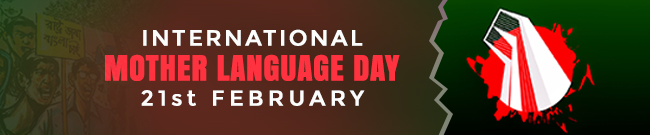

Google Gemini 3.0 and the “Ultra Voice” era represent a fundamental shift in human-computer interaction, signaling the potential transition from a text-dominant search culture to one defined by seamless, ambient conversation. As multimodal AI achieves PhD-level reasoning and real-time audio latency below the threshold of human perception, the traditional search box faces its first true existential threat.

The evolution of digital search has followed a predictable trajectory: from the keyword-stuffed queries of the late 1990s to the natural language processing (NLP) breakthroughs of the 2010s. However, the arrival of Google Gemini 3.0 in late 2025 and its full deployment in 2026 mark the “Cambrian Explosion” of voice-first interfaces. For decades, typing was the tax we paid for accessing the world’s information. We adapted our thoughts into fragmented “search-ese” to help machines understand us. With Gemini 3.0, the machine finally adapts to us. This transition is not merely a feature update; it is a structural redesign of the internet’s front door.

The Technological Architecture of Ambient Intelligence

At the heart of Gemini 3.0 lies a native multimodal architecture that treats audio, video, and text as a single, fluid data stream. Earlier iterations relied on “stitching,” in which a speech-to-text model transcribed audio for a text model to process; Gemini 3.0 processes sound directly. Removing that intermediate hop cuts latency and allows the AI to perceive non-textual cues like intonation, sarcasm, and emotional urgency.
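To make the latency argument concrete, here is a minimal sketch that models a stitched pipeline as a chain of sequential stages and compares it with a single native stage. The stage names and millisecond figures are illustrative assumptions, not measurements of Gemini or any other system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One processing step in a voice pipeline (hypothetical)."""
    name: str
    latency_ms: float


def total_latency_ms(stages: list[Stage]) -> float:
    """Sequential stages add up: each must finish before the next starts."""
    return sum(stage.latency_ms for stage in stages)


# Illustrative numbers only; none of these are measured values.
STITCHED = [
    Stage("speech_to_text", 300.0),
    Stage("text_model", 400.0),
    Stage("text_to_speech", 250.0),
]
NATIVE = [Stage("audio_in_audio_out_model", 450.0)]

if __name__ == "__main__":
    print(f"stitched: {total_latency_ms(STITCHED):.0f} ms")
    print(f"native:   {total_latency_ms(NATIVE):.0f} ms")
```

The point of the sketch is structural rather than numerical: every extra hop in a stitched design adds its own delay, and a transcription step also discards tone and emphasis before the reasoning model ever sees the input.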

The “Ultra Voice” capability, specifically reserved for the highest-tier Gemini models, utilizes a new “Deep Think” reasoning layer. This allows the assistant to handle multi-step, complex queries without the user ever touching a screen. For example, a user can say, “Hey Gemini, look at my recent bank statements and find out why my subscription costs went up, then suggest which ones I should cancel based on my usage in Gmail.” This requires cross-app reasoning, financial analysis, and conversational memory—all executed through a voice interface that feels like talking to a human expert.

The Death of the Keyboard: A Behavioral Paradigm Shift

The psychological barrier to voice search has historically been twofold: inaccuracy and social friction. In 2026, both barriers have effectively collapsed. According to recent market data, voice-first interactions now account for over 55% of all mobile search queries, driven by the ubiquity of wearable tech like AI-integrated glasses and high-fidelity earbuds.

Typing is inherently high-friction. It requires focused attention, physical movement, and a screen. Gemini 3.0 facilitates “Zero-Click, Zero-Touch” outcomes. When the search engine can reason across your entire digital life—from your calendar to your cloud-stored documents—the need to “type a query” is replaced by “delegating a task.” We are moving from a world of Search to a world of Resolution.

| Feature | Traditional Search (2020-2024) | Gemini 3.0 “Ultra Voice” (2026) |
| --- | --- | --- |
| Input Method | Keyboard-centric / Fragmented text | Voice-native / Continuous dialogue |
| Processing | Keyword matching / Latent indexing | Real-time PhD-level reasoning |
| Context | Single-session memory | 1M+ token multi-app context window |
| Output | List of links (SERP) | Actionable, synthesized voice/UI |
| Interaction | One-way query | Bi-directional, multi-turn negotiation |

SEO in the Age of Conversational Authority

For digital marketers and the Editorialge community, Gemini 3.0 changes the rules of the game. Traditional SEO focused on “Position 1” on a page of ten blue links. In the Ultra Voice era, there is only “Position 0”: the single spoken answer. If your content isn’t the primary source for the AI’s synthesized response, you are effectively invisible to the voice-first user.

The focus has shifted from “Keyword Density” to “Information Density and Entity Authority.” Gemini 3.0 prefers content that answers “The Why” and “The How” rather than just “The How-To.” Because the AI can now reason through complex PDFs and videos, it rewards depth. A 2,000-word deep-dive analysis is now more valuable than a dozen 500-word listicles because it provides the “Thinking Mode” of Gemini 3.0 with enough substance to form a coherent, authoritative voice response.

Comparative Market Landscape: The Battle for the Ear

Google is not alone in this race. The 2026 landscape is a tripolar struggle between Google Gemini 3.0, OpenAI’s GPT-5.2, and Anthropic’s Claude 4.5. While GPT-5.2 excels in creative nuances and “vibe-based” interaction, Gemini’s advantage lies in its “Full Stack” integration.

| Metric | Google Gemini 3.0 Ultra | OpenAI GPT-5.2 | Claude 4.5 (Anthropic) |
| --- | --- | --- | --- |
| Primary Edge | Ecosystem Integration (Android/Workspace) | Logic & Reasoning Prowess | Constitutional Safety & Ethics |
| Voice Latency | < 150ms (Native) | ~200ms | ~300ms |
| Context Window | 1M to 2M Tokens | 500K Tokens | 1M Tokens |
| Multimodality | Native Audio/Video/Text | Native Audio/Image/Text | Text/Image/Code |

Google’s “Antigravity” platform, launched alongside Gemini 3.0, allows the voice assistant to act as an agent within the operating system. This means the AI doesn’t just tell you the answer; it can perform the action, such as booking a flight or refactoring a block of code, via voice command. This agentic capability is what sets the Ultra tier apart from its predecessors.

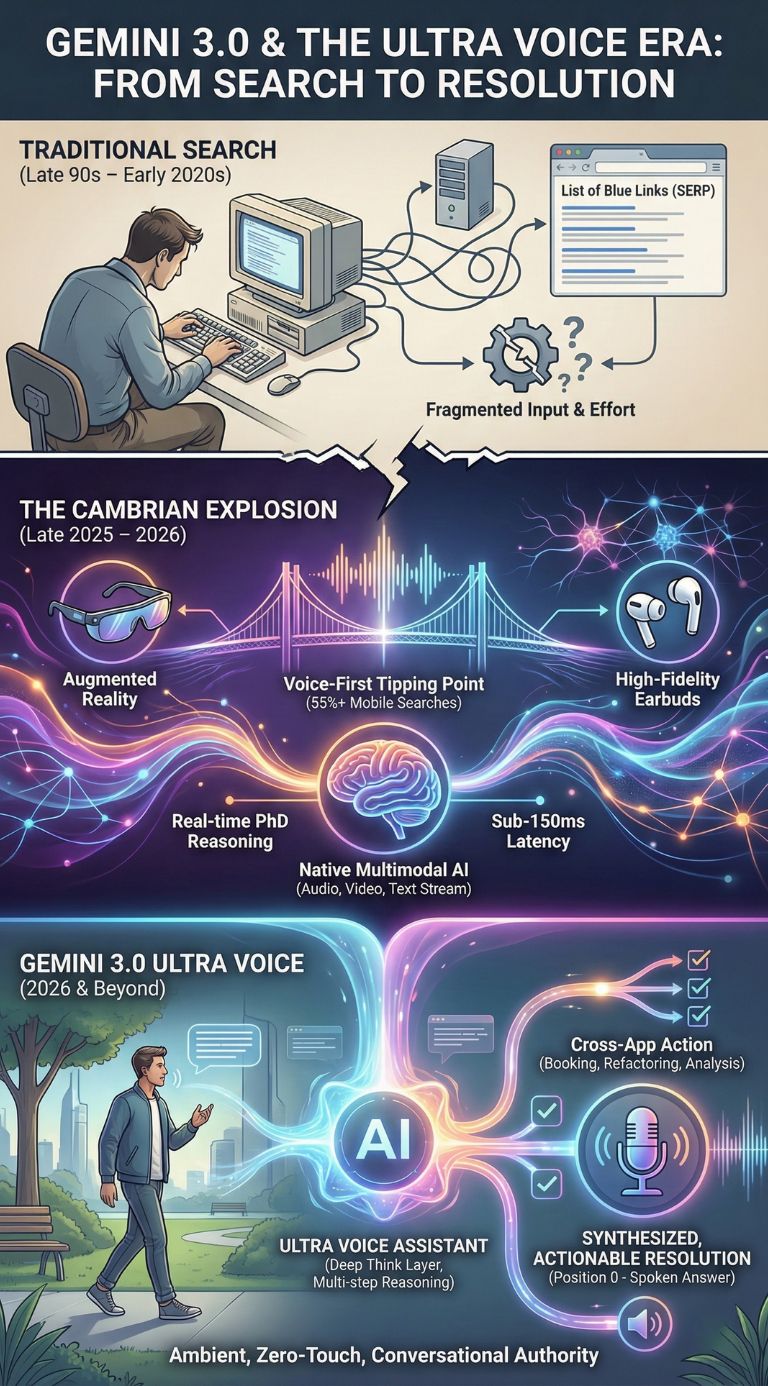

Ethical Implications and the Privacy Trade-off

The move to an “Always-On” voice interface brings significant privacy concerns to the forefront. For Gemini 3.0 to be truly effective, it requires access to a “Personal Knowledge Graph.” This includes your emails, your location history, and your real-time audio environment.

Experts suggest that 2026 will be the year of “Privacy-First AI,” where on-device processing (via Gemini Nano 3.0) handles sensitive data, while the cloud-based “Ultra” model handles high-level reasoning. However, the risk of “Acoustic Footprinting”—where AI can identify users by their voice patterns or even their background environment—remains a hot topic for regulators in the EU and North America.
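The on-device versus cloud split described here can be sketched as a simple routing rule. This is a minimal illustration under stated assumptions: the `Request` type, the `route` function, and the list of sensitive sources are all hypothetical and do not describe how Gemini Nano or any Google product actually decides where to run a query.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


# Hypothetical labels for data the user would not want to leave the device.
SENSITIVE_SOURCES = frozenset(
    {"email", "location_history", "ambient_audio", "medical_records"}
)


@dataclass(frozen=True)
class Request:
    """A voice request plus the personal data sources it needs to read."""
    text: str
    sources: frozenset[str]


def route(request: Request) -> Target:
    """Keep any request that touches sensitive data on the device."""
    if request.sources & SENSITIVE_SOURCES:
        return Target.ON_DEVICE
    return Target.CLOUD


if __name__ == "__main__":
    private = Request("Why did my subscription costs go up?", frozenset({"email"}))
    public = Request("Who designed this building?", frozenset())
    print(route(private).value)
    print(route(public).value)
```

A real system would face the harder question this rule glosses over: what to do when a request needs both sensitive data and reasoning that only the larger cloud model can provide.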

Economic and Enterprise Impact

In the corporate world, Ultra Voice is revolutionizing the “Meeting Economy.” Gemini 3.0 can listen to a three-hour multilingual board meeting, identify every speaker by voice signature, and provide a real-time summary of sentiment, action items, and financial implications.

- Productivity Gains: Estimates suggest a 35% reduction in time spent on administrative “search and find” tasks.
- Cost Shift: Businesses are moving budgets from “Search Ad Spend” to “AI Optimization,” ensuring their data is “digestible” for AI crawlers.
- New Job Roles: The rise of “Conversation Architects” who design how brands “sound” and “interact” in a voice-only world.

Expert Perspectives: Neutralizing the Hype

While the “End of Typing” makes for a compelling headline, many analysts remain skeptical. Dr. Elena Vance of the Global AI Institute argues that “Voice is high-bandwidth for output but low-bandwidth for complex input.” While it’s great for asking for the weather or a summary, complex tasks like data visualization or legal editing still require a “Glass and Keys” interface.

The counter-argument is that we are in a hybrid era. We aren’t throwing away keyboards; we are liberating them. Typing will become a specialized tool for creators, while “Search” becomes a casual, ambient background activity for everyone else.

Future Outlook: What Happens Next?

Looking ahead to late 2026 and 2027, we expect the “Visual-Voice Link” to strengthen. This involves the integration of Gemini 3.0 with Augmented Reality (AR) glasses. In this scenario, search is no longer a destination; it is a layer over reality. You will look at a building and ask, “Who designed this?” or look at a menu in a foreign country and hear a real-time translation and health warning based on your medical records.

Upcoming Milestones to Watch:

- Q2 2026: Full integration of Gemini 3.0 Ultra Voice into Android Auto, eliminating touchscreens for navigation.
- Q3 2026: Launch of “Gemini Offline,” allowing for sophisticated reasoning on edge devices without an internet connection.
- Q4 2026: The first “Voice-Only” e-commerce holiday season, where 30% of purchases are expected to be initiated and completed via conversational AI.

Data & Statistics Summary

- Accuracy Leap: Gemini 3.0 Pro shows a 50% improvement over Gemini 2.5 in handling multi-step reasoning tasks.
- Reasoning Benchmarks: Scored 100% on the AIME 2025 mathematics benchmark with code execution tools.
- Market Reach: AI Overviews now reach over 2 billion users monthly, with voice interactions growing at 40% YoY.
- Developer Adoption: Over 13 million developers are now building on the Gemini API ecosystem as of early 2026.

Final Thoughts

The “End of Typing” is less about the disappearance of the keyboard and more about the disappearance of the effort required to find information. Google Gemini 3.0 “Ultra Voice” marks the moment when the internet becomes truly conversational. For users, it offers unparalleled convenience; for businesses, it demands a radical rethink of digital presence. As we move into the second half of 2026, the question is no longer “How do I rank on Google?” but “How does Google represent my brand in a conversation?”