We spent three years debating whether AI would replace the programmer. In 2026, that debate is dead. With Claude Code crossing the $1B ARR threshold and OpenAI’s Codex landing with a macOS “command center,” we are not watching a normal product cycle. We are watching the moment software development flips from writing code to commanding systems that write it for you.

Codex vs Claude Code is the line in the sand. The contest is not about who generates cleaner functions or smarter one-liners. It is about who owns the brain that coordinates agents, governs risk, and turns intent into shipped software at industrial speed.

If you still think this is an IDE plugin story, you are already behind. The winning platform will not live in a chat window. It will live where work gets orchestrated, reviewed, audited, and merged.

The Hook: The Debate Is Over, The Architecture War Begins

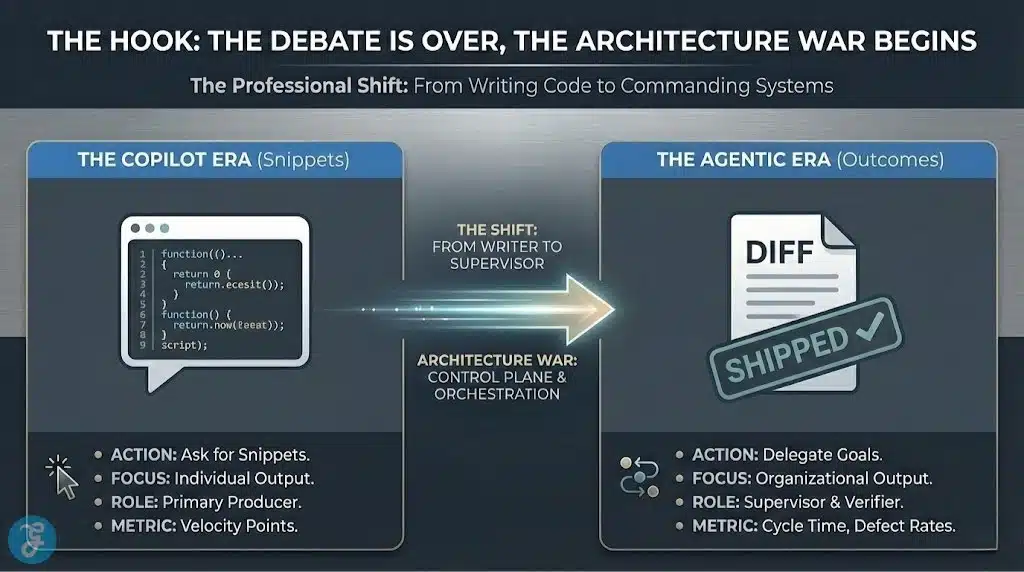

The copilot era trained people to ask for snippets. The agentic era trains people to delegate outcomes. That difference sounds subtle until you see what it does to teams, budgets, and power.

A copilot waits for your cursor. An agent takes a goal, breaks it into tasks, runs tools, writes code, tests it, and hands you a diff. The first boosts individual output. The second rewires how organizations build software.

That is why the real shift is professional, not technical. The software engineer stops being the primary producer and becomes the supervisor of autonomous production. The job becomes orchestration, verification, and accountability.

If that sounds extreme, look at what teams already measure. Many engineering orgs now track cycle time, review latency, and defect escape rates as intensely as they once tracked story-point velocity. Agents attack those metrics directly, and leadership notices.

The Great Surrender: Why OpenAI Is Running Scared

OpenAI did not stumble because it lacked intelligence. It stumbled because it assumed its gravity would hold. For years, “GPT-everything” felt like an unbreakable default, and the market tolerated half-finished developer workflows because the model was the headline.

Then Claude Code changed the story. Anthropic did not just ship autocomplete with better prose. It shipped something that behaves like a colleague, and it proved people would pay for it at scale.

A $1B revenue run-rate is not a vibe. It is a mandate. When that milestone hit in late 2025, it told every buyer that agentic coding was not experimental. It told every competitor that developer habits can move fast when value feels immediate.

OpenAI’s response was revealing in its urgency. The Codex macOS app launch on February 2, 2026 signals a pivot away from “chat as the interface” toward “command center as the interface.” In plain terms, OpenAI is acknowledging that the race is real, and it does not want to watch someone else become the default operating layer for software work.

OpenAI also framed Codex around parallelism, worktrees, and longer-horizon reasoning. That positioning matters because it admits what developers actually want. They do not want another assistant who talks. They want a system that finishes.

Codex vs Claude Code: The Fight For The Control Plane

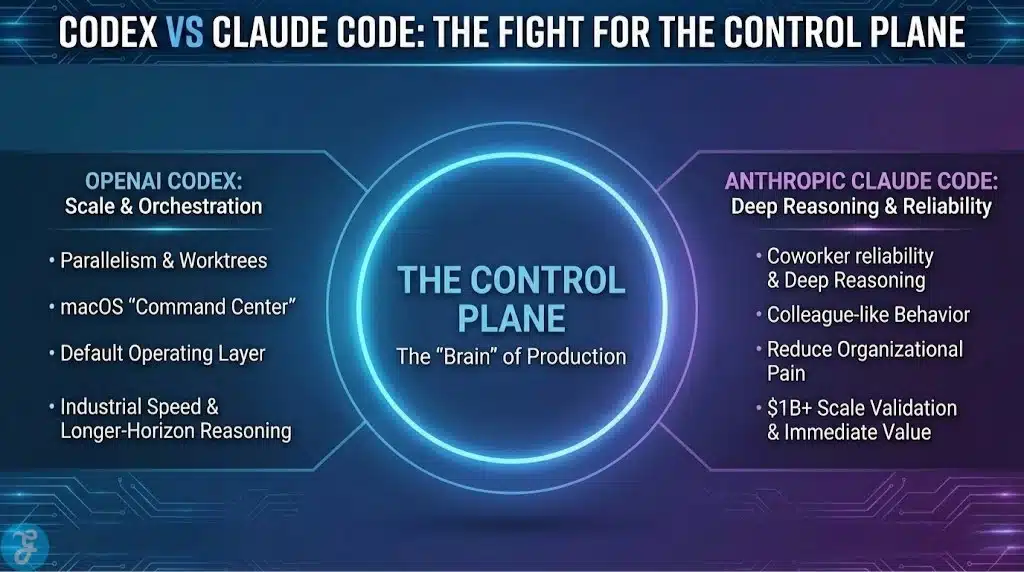

Codex vs Claude Code is a platform war disguised as a model comparison. The winner will not be the system that writes the cleverest algorithm. The winner will be the system that owns the control plane for software production.

Control plane means task intake, decomposition, permissions, sandboxing, test execution, review packaging, and merge policies. It also means telemetry, audit logs, and governance hooks that satisfy security teams and regulators. Whoever owns that plane does not just sell “AI coding.” They define how coding happens.

This is why a native desktop command center matters. It is a distribution play and a workflow play at the same time. If the agent lives where you start your day, it becomes the default place to delegate work, check progress, and approve changes.

Once a team gets used to assigning tasks to agents, switching stops feeling like changing tools. It feels like changing the factory line. That is the kind of lock-in that beats any single benchmark.

Both OpenAI and Anthropic understand this. OpenAI is leaning into scale and orchestration. Anthropic is leaning into deep reasoning and coworker reliability. The market will decide based on which philosophy reduces real organizational pain.

The 2026 Reality: We Are No Longer Coders

In 2026, the most valuable skill is not typing. It is directing. You can call that shift “agentic architecture” or “orchestration,” but the outcome is the same. The person who wins is the person who can run a fleet of machines safely.

Industry audits now suggest that a meaningful share of production pull requests are bot-generated end to end. One widely circulated 2026 figure puts the share of PRs generated entirely by automation at 13.4%. That is not “help.” That is labor substitution inside the pipeline.

You see it in the way teams talk. They no longer say, “Write me a function.” They say, “Refactor this module without breaking tests,” or “Reduce p95 latency by 20%,” or “Kill this class of vulnerabilities across the repo.” Those are outcomes, and outcomes invite delegation.

The uncomfortable truth is that many developers will resist until the market forces them. That resistance will not stop the shift. It will only decide who gets promoted and who gets managed.

The winner in this era will look less like a coder and more like a production editor. They will shape intent, constrain risk, and review outputs with ruthless clarity.

What The Orchestrator Actually Does

Orchestrators do not stop coding. They stop wasting time on low-leverage keystrokes. They spend their attention where humans still matter.

They define the outcome precisely. They set constraints like performance budgets, backward compatibility, and security requirements. They attach context, examples, and test expectations so the agent cannot pretend ambiguity is permission.
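One way to stop an agent from treating ambiguity as permission is to refuse any task that arrives without explicit constraints and acceptance tests. The sketch below assumes a hypothetical `TaskSpec` format; the field names and the crude word-count vagueness check are illustrative, not a schema used by Codex or Claude Code.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class TaskSpec:
    """A delegated outcome plus the guardrails the agent must respect (hypothetical schema)."""
    outcome: str                                                # what "done" means, in plain language
    constraints: Dict[str, str] = field(default_factory=dict)   # performance, compatibility, security
    acceptance_tests: List[str] = field(default_factory=list)   # tests that must pass before review
    context_files: List[str] = field(default_factory=list)      # examples and files attached for context

def validate(spec: TaskSpec) -> List[str]:
    """Return the problems found; an empty list means the spec is ready to delegate."""
    problems = []
    if len(spec.outcome.split()) < 3:  # crude heuristic for a vague outcome
        problems.append("outcome is too vague")
    if not spec.constraints:
        problems.append("no constraints (performance, compatibility, security)")
    if not spec.acceptance_tests:
        problems.append("no acceptance tests: the agent cannot prove it is done")
    return problems

spec = TaskSpec(
    outcome="Reduce p95 latency of /search by 20%",
    constraints={"api": "backward compatible", "security": "no new dependencies"},
    acceptance_tests=["tests/test_search.py"],
)
print(validate(spec))
```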

They also split work into parallel tracks. One agent explores, another implements, another writes tests, and another reviews for security regressions. The human then acts like an editor-in-chief, accepting what is right and rejecting what is wrong.
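The fan-out described above can be sketched with Python's standard thread pool. The four track functions are hypothetical stand-ins for real agent calls, and for simplicity the sketch treats the tracks as independent; in practice the test and review tracks would consume the implementation's output.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Hypothetical stand-ins for agent calls; each returns a short report.
def explore(goal: str) -> str:
    return f"explore: candidate approaches for '{goal}'"

def implement(goal: str) -> str:
    return f"implement: draft diff for '{goal}'"

def write_tests(goal: str) -> str:
    return f"tests: new cases covering '{goal}'"

def security_review(goal: str) -> str:
    return f"security: regression scan for '{goal}'"

TRACKS: Dict[str, Callable[[str], str]] = {
    "explore": explore,
    "implement": implement,
    "tests": write_tests,
    "security": security_review,
}

def run_tracks(goal: str) -> Dict[str, str]:
    """Send the same goal to every track in parallel and collect the reports."""
    with ThreadPoolExecutor(max_workers=len(TRACKS)) as pool:
        futures = {name: pool.submit(fn, goal) for name, fn in TRACKS.items()}
        return {name: fut.result() for name, fut in futures.items()}

def editor_in_chief(reports: Dict[str, str],
                    accept: Callable[[str, str], bool]) -> Dict[str, str]:
    """The human gate: keep only the reports the reviewer accepts."""
    return {name: text for name, text in reports.items() if accept(name, text)}

reports = run_tracks("Refactor billing module")
kept = editor_in_chief(reports, lambda name, text: name != "explore")
```

The `accept` callback is where human judgment plugs in; the machine supplies throughput, the person supplies the final yes or no.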

The most important skill becomes verification. If you cannot read a diff critically, you cannot lead. If you cannot spot a subtle failure mode, you will ship faster into a wall.

Winners, Losers, And The New Economics Of Software

Agentic systems change the economic unit of software. Traditional teams priced effort, headcount, and time. Agentic teams price outcomes, throughput, and verified task completion.

That shift will create a brutal sorting function. Some companies will double output with the same staff. Others will spend the same budget to ship less, because they insist on human-only throughput in a world that no longer rewards it.

Here is the pivot in a simple lens.

| Category | The Status Quo Loser (Manual) | The Agentic Winner (Orchestrated) |
|---|---|---|
| Talent Strategy | Senior devs who resist AI “on principle” | Junior architect-orchestrators using Codex and Claude |
| Release Velocity | Two-week sprints with manual QA | Continuous deployment with agent-run checks |
| Revenue Model | Per-seat SaaS licensing | Performance-based task completion credits |
| Security | Manual PR reviews with human fatigue | Sandbox testing and verifiable logic gates |
| Tech Debt | “We’ll fix it next quarter” | Refactoring as a default step in every PR |

This is not about replacing senior engineers with juniors. It is about replacing wasted human time with machine throughput, while keeping human judgment as the final gate. Teams that learn that balance will outcompete teams that refuse it.

The cost of inaction is not theoretical. Legacy code behaves like the dark matter of the internet, invisible until it breaks. Humans move too slowly to repair it at the scale modern infrastructure demands.

The biggest winners will be the ones who treat technical debt as an always-on agent task. If an agent can refactor while you sleep, “we’ll do it later” stops being a strategy. It becomes a self-inflicted tax.

Reliability Beats Brilliance: Trust, Sandboxes, And Reviewer Agents

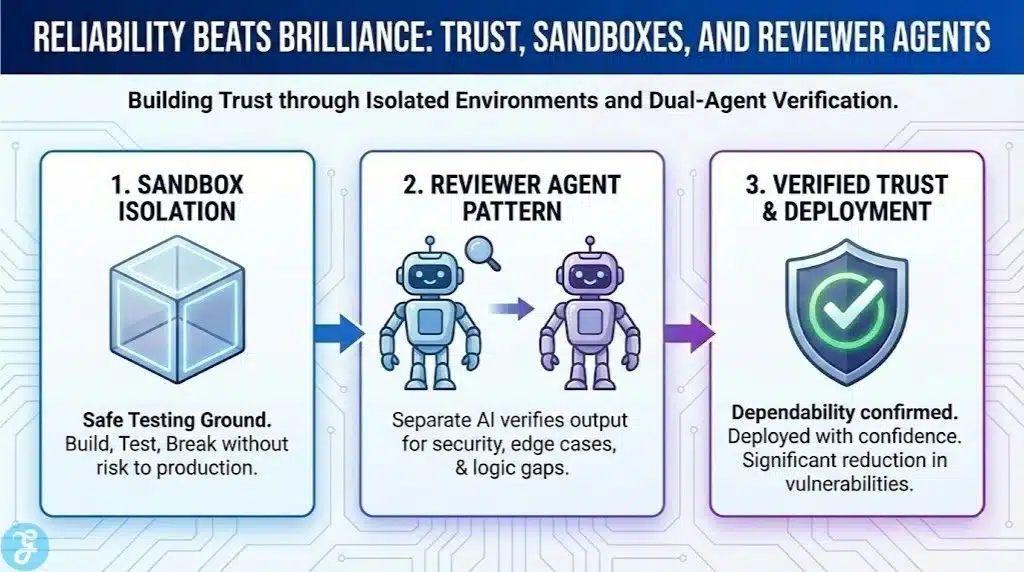

Agentic coding will not win because models are poetic. It will win because models become dependable. Dependability comes from systems, not vibes.

The core problem is simple. An agent that can change hundreds of files can also break hundreds of things. That is why sandboxes, test harnesses, and reviewer agents become the new baseline.

Many teams already run a two-agent pattern. One agent proposes a change. Another agent reviews it with a different perspective, looking for edge cases, security regressions, and missing tests. Advocates of this approach claim large reductions in vulnerability rates, with some internal reports citing around 40% fewer vulnerabilities when reviewer agents are used consistently.

You do not have to worship the exact number to see the direction. Machines do not get tired at 3 AM. They do not rush because the sprint ends tomorrow. They can check the same class of mistake across the entire repo without complaining.

This is where the “digital slop” fear collapses. Slop comes from unverified output shipped under pressure. Verification is a process problem, and agentic stacks are getting better at process than most humans ever were.
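The proposer/reviewer pattern can be sketched as a bounded loop: the reviewer's findings go back to the proposer until the reviewer has nothing left to flag or the round budget runs out. The toy agents below are hypothetical, and the sketch says nothing about the roughly 40% figure; it only shows the control flow.

```python
from typing import Callable, List, Optional, Tuple

# A proposer turns (task, prior findings) into a candidate change.
Proposer = Callable[[str, List[str]], str]
# A reviewer returns findings; an empty list means "approve".
Reviewer = Callable[[str], List[str]]

def propose_and_review(task: str, propose: Proposer, review: Reviewer,
                       max_rounds: int = 3) -> Tuple[Optional[str], List[str]]:
    """Iterate until the reviewer approves or the budget is exhausted.

    Returns (approved_change, history). approved_change is None when the
    budget runs out, which should escalate to a human instead of merging.
    """
    feedback: List[str] = []
    history: List[str] = []
    for round_no in range(1, max_rounds + 1):
        change = propose(task, feedback)
        findings = review(change)
        history.append(f"round {round_no}: {len(findings)} finding(s)")
        if not findings:
            return change, history
        feedback = findings  # the reviewer's findings become the proposer's next input
    return None, history

# Hypothetical toy agents: the proposer adds validation only after being asked.
def toy_propose(task: str, feedback: List[str]) -> str:
    return task + (" + input validation" if feedback else "")

def toy_review(change: str) -> List[str]:
    return [] if "input validation" in change else ["missing input validation"]

change, history = propose_and_review("parse upload", toy_propose, toy_review)
```

Bounding the loop matters: an unbounded proposer/reviewer pair can argue forever, while a budget forces disagreement to surface to a human.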

The Geopolitical Stakes: Sovereign Engineering Agents

This shift is not contained to Silicon Valley. The country that scales agentic software production will scale digital infrastructure faster. That advantage will leak into defense, industry, and critical systems.

The phrase “sovereign engineering agent” sounds dramatic, but it maps to a real objective. Nations want AI systems that can maintain and modernize their software infrastructure without depending on foreign vendors or scarce human labor. That objective will shape policy, procurement, and platform alliances.

You can already see different stances emerging. Each stance shapes how quickly organizations can deploy agentic workflows at scale.

| Entity | Regulatory Or Strategic Stance (2026) | Primary Objective |
|---|---|---|
| United States | Deregulated innovation and “AI first” momentum | Maintain dominance in agentic R&D and platforms |
| European Union | Human-in-the-loop mandates and strict oversight | Ensure ethical control, even at the cost of speed |
| China | State-integrated engineering agents | Rapid industrial automation and self-sufficient stacks |
| India | Pivot from code farms to agent hubs | Retrain millions of devs as agent managers |

This geopolitical lens changes how you read Codex vs Claude Code. It is no longer only a product choice. It is also a strategic dependency choice. If your national infrastructure depends on an agent stack you do not control, your modernization timeline depends on someone else’s roadmap.

Even inside a company, the logic is similar. The “brain” that manages your software production becomes a strategic asset, not a nice-to-have tool. That asset will attract regulation, procurement scrutiny, and national interest.

The Counter-Punch: The Human Soul Argument Fails In Practice

Critics argue that agentic coding will turn software into brittle junk. They claim that without a human “feeling” the code, systems will degrade into unreadable sludge.

That critique confuses authorship with accountability. The problem with software has never been that humans lack soul. The problem has been that humans ship under pressure, forget edge cases, and stop refactoring when priorities shift.

Agentic systems can be worse than humans when you let them run without gates. They can also be better than humans when you force them through verification. The difference is governance.

Modern evaluation suites increasingly measure more than correctness. They test regression risk, multi-file reasoning, and tool-driven debugging behavior. In that environment, the agent that explains its plan, runs tests, and shows its work wins, even if a human could have written the same change more “artistically.”

If you want to preserve the human soul of engineering, keep humans in the role they are best at. Humans set intent, values, and tradeoffs. Machines execute, verify, and iterate until the result matches the intent.

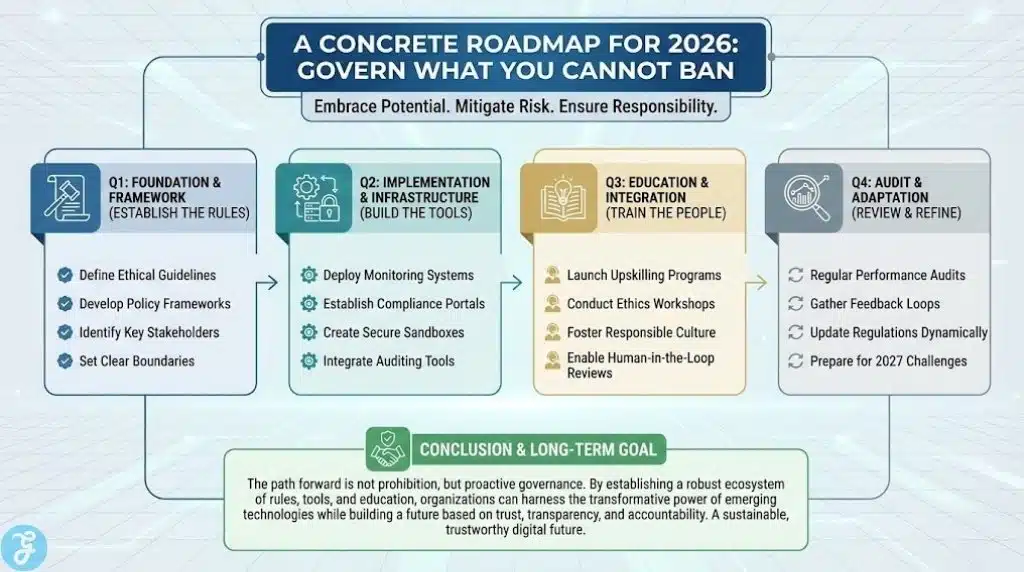

A Concrete Roadmap For 2026: Govern What You Cannot Ban

You cannot ban agentic coding without banning competitiveness. So the sane move is governance. If you want the upside without the blowups, you need a concrete operating model.

Start with three shifts that every serious organization should make in 2026. They are not optional if you ship software that matters. They are the price of admission.

First, move from writing to verifying. Universities and hiring loops should test architecture, auditing, and failure-mode thinking. If a candidate cannot review an agent’s diff, they cannot safely use AI.

Second, build a digital sandbox. Every agent needs a safe environment where it can build, test, and break things in isolation. No sandbox, no autonomy.

Third, adopt performance-based governance. Regulate the outcomes and the accountability, not the keystrokes. If an agent writes a bug that takes down a critical service, the organization is liable, and the process must change.

Here is what a practical sandbox checklist looks like.

- Isolated environments per task, with reproducible builds
- Mandatory test runs before a diff is surfaced for approval
- Policy gates for secrets, credentials, and data access
- Automatic rollback plans for high-risk changes
- Traceable logs of tool calls, file edits, and decision steps
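Several checklist items can be read as one pre-approval gate. The sketch below assumes a hypothetical `Candidate` record and covers a secret scan, a rollback-plan requirement for high-risk changes, and a mandatory test run, with every step traced. A temporary directory stands in for real isolation; a production sandbox would check the repository out into a container or VM and pin dependencies for reproducible builds. The regex patterns are illustrative only.

```python
import re
import subprocess
import sys
import tempfile
from dataclasses import dataclass, field
from typing import List

# Illustrative patterns only; dedicated secret scanners ship far larger rule sets.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                                  # shape of an AWS access key ID
    re.compile(r"-----BEGIN (RSA |EC )?PRIVATE KEY-----"),            # PEM private key header
    re.compile(r"(?i)(password|api_key)\s*=\s*['\"][^'\"]+['\"]"),    # hard-coded credential
]

@dataclass
class Candidate:
    """A hypothetical agent-produced change waiting to be surfaced for approval."""
    diff: str
    test_command: List[str]
    high_risk: bool = False
    rollback_plan: str = ""
    trace: List[str] = field(default_factory=list)  # traceable log of gate decisions

def surface_for_approval(c: Candidate, timeout_s: int = 600) -> bool:
    """Return True only if the candidate clears every gate, tracing each step."""
    # Gate 1: policy check for secrets and credentials in the diff.
    hits = [p.pattern for p in SECRET_PATTERNS if p.search(c.diff)]
    c.trace.append(f"secret scan: {len(hits)} hit(s)")
    if hits:
        return False
    # Gate 2: high-risk changes must arrive with a rollback plan.
    if c.high_risk and not c.rollback_plan.strip():
        c.trace.append("rollback plan: missing for high-risk change")
        return False
    # Gate 3: mandatory test run in a throwaway directory (stand-in for a real sandbox).
    with tempfile.TemporaryDirectory() as workdir:
        try:
            result = subprocess.run(c.test_command, cwd=workdir, capture_output=True,
                                    text=True, timeout=timeout_s)
        except subprocess.TimeoutExpired:
            c.trace.append("tests: timed out")
            return False
    c.trace.append(f"tests: exit code {result.returncode}")
    return result.returncode == 0

# Usage: a trivially passing command stands in for a real test suite.
ok = Candidate(diff="+ def add(a, b): return a + b",
               test_command=[sys.executable, "-c", "pass"])
print(surface_for_approval(ok), ok.trace)
```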

And here are governance principles that scale.

- Assign ownership for every agent-enabled change, even if no human wrote the code
- Require reviewer-agent passes for security-sensitive modules
- Use risk tiers that decide how much autonomy an agent gets
- Measure defect escape rates and rollbacks as primary KPIs
- Kill autonomy quickly when metrics degrade, then re-enable after fixes
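Risk tiers and the kill switch combine naturally. The sketch below assumes a hypothetical `TIER_POLICY` table with placeholder thresholds and uses the common definition of defect escape rate: defects found in production divided by all defects found, before and after release. When a tier's rate exceeds its ceiling, autonomy drops to `OFF` until a human re-enables it.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Tuple

class Autonomy(Enum):
    FULL = "agent may merge after automated gates pass"
    REVIEWED = "agent proposes, a named human owner approves"
    OFF = "agent disabled for this tier until fixes land"

# Hypothetical policy: default autonomy and maximum tolerated escape rate per tier.
TIER_POLICY: Dict[str, Tuple[Autonomy, float]] = {
    "low": (Autonomy.FULL, 0.20),        # e.g. docs, internal scripts
    "medium": (Autonomy.REVIEWED, 0.05),
    "high": (Autonomy.REVIEWED, 0.0),    # security-sensitive: any escape trips the switch
}

@dataclass
class TierMetrics:
    caught_defects: int   # found by tests, CI, or review before release
    escaped_defects: int  # found in production after release

    @property
    def defect_escape_rate(self) -> float:
        total = self.caught_defects + self.escaped_defects
        return self.escaped_defects / total if total else 0.0

def autonomy_for(tier: str, metrics: TierMetrics) -> Autonomy:
    """Grant the tier's default autonomy unless its escape rate exceeds the ceiling."""
    default, ceiling = TIER_POLICY[tier]
    if metrics.defect_escape_rate > ceiling:
        return Autonomy.OFF  # kill autonomy; a human re-enables it after fixes
    return default
```

The same metrics (2 escapes out of 20 defects) keep full autonomy in the low tier but shut it off in the medium tier, which is the point of tiering: tolerance for failure should track the blast radius.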

This is the point where Codex vs Claude Code stops being a philosophical debate. It becomes an operating system question. The best platform will make these safeguards easy, default, and visible.

The Cost Of Inaction: A Simple Projection

Late adopters often tell themselves they are “waiting for safety.” In practice, they are waiting to be outpaced. The gap compounds because agentic teams refactor faster, test more often, and ship improvements continuously.

You can express the fear in numbers. Even a rough projection clarifies how quickly the gap compounds.

| Metric | 2026 (Early Adopter) | 2028 (Late Majority) |
|---|---|---|
| Avg. Time To Market | Three months | 48 hours |
| Bug Density | 12 per 1k lines | Under 1 per 1k lines |
| Dev To Agent Ratio | 1:1 (copilot) | 1:10 (orchestration) |
| Maintenance Cost | 60% of budget | 10% of budget |

Treat these as directional, not prophetic. The exact values will vary by org. The trend will not.

When agents do the boring work, humans spend time on leverage. When humans do the boring work, they run out of time for leverage. That is how companies lose.

Tooling Comparison: What Matters In Q1 2026

Most teams will not choose a winner based on marketing. They will choose based on fit. Fit means context capacity, reliability under long tasks, and how easily the system fits into existing workflows.

A clean way to think about the landscape is to ask what each platform optimizes for. The details will change, but the selection logic will not.

| Feature | OpenAI Codex (macOS) | Anthropic Claude Code | GitHub Copilot (Next) |
|---|---|---|---|
| Model | GPT-5.2-Codex | Claude 4 and 4.5 | Mixture of Experts |
| Best For | Parallel tasks and macOS workflows | Deep reasoning across large repos | Enterprise integration and IDE ease |
| Key Innovation | Agentic worktrees and task threads | Model Context Protocol (MCP) tool integration | Commit-to-deploy automation |
| Context Window | 128K (enhanced) | 200K+ | 64K (tiered) |

Do not over-index on raw context numbers. The practical question is whether the tool can keep a plan coherent across hundreds of files and multiple test runs. The tool that stays coherent will feel like magic. The tool that loses the plot will feel like expensive autocomplete.

This is also where user experience matters. A command center that makes parallel work understandable will beat a smarter model trapped in a confusing interface. Developers do not adopt intelligence. They adopt clarity.

What Happens Next: The Leash Or The Layoff

By this time next year, the phrase “software engineer” may sound as dated as “switchboard operator.” The role will not vanish. It will mutate into system design, orchestration, and audit.

The fastest teams will look weird to today’s managers. They will run more experiments, open more PRs, and revert more quickly when something fails. They will ship by directing swarms of agents and approving only what passes hard gates.

The slow teams will look familiar. They will hold meetings about the process while their competitors refactor entire subsystems in an afternoon. They will call it responsibility. The market will call it lag.

Codex vs Claude Code is not a scoreboard for two labs. It is a referendum on whether you will own the leash. If you refuse to command agents, you will compete against people who do, and you will lose on time.

You do not need to worship the machines. You need to govern them, measure them, and force them to show their work. If you can do that, you will ship faster with fewer defects, and you will spend your human attention where it matters.

Codex vs Claude Code will decide who controls the future of software because it will decide who controls the workflow of building it. The next generation of winners will not be the best coders. They will be the best commanders.