AI is moving from the radiology suite into the lab, where most clinical decisions begin. As Labcorp and Quest embed generative tools into ordering and interpretation, diagnostics is becoming a software platform. As of early 2026, that shift is reshaping costs, liability, workforce needs, and patient equity debates worldwide.

How The Lab Became Medicine’s Operating System

Clinical labs used to sit “downstream” of care. A clinician suspected a problem, ordered a test, and the lab returned a number. Over time, that workflow turned into something more central, because modern medicine runs on repeat testing, chronic disease monitoring, and high-volume screening that touches almost every patient journey.

Two forces made the lab strategically important long before generative AI arrived.

First, digitization. Electronic health records made ordering easier, but also made ordering noisier. More clicks created more tests, more duplicates, and more “just in case” panels. That raised costs and created new patient-safety risks from false positives, incidental findings, and follow-on procedures.

Second, specialization. Molecular diagnostics, high-sensitivity assays, and complex pathology expanded the lab’s menu faster than most clinicians could keep up. The lab’s limiting factor shifted from specimen processing to interpretation at the point of care. In other words, the bottleneck became decision-making, not measurement.

Now AI pushes labs into a third identity: a clinical decision partner. That is why “AI-integrated labs” matter. They turn diagnostics into guidance, not just results.

| Inflection Point | What Changed In Practice | Why It Matters Now |
| --- | --- | --- |
| Digitized ordering | More tests, easier repeats | Cost pressure and overuse become visible |
| Advanced diagnostics | More complex menus | Clinicians need help choosing correctly |
| AI integration | Ordering and interpretation become assisted | The lab starts shaping care pathways |

Key Statistics Clinicians And Executives Keep Citing

- Global clinical laboratory services: valued at $224.35B in 2025, projected to $308.24B by 2033 (Grand View Research estimate).

- Global IVD market: $77.73B in 2025 to $117.60B by 2032 (Fortune Business Insights estimate).

- U.S. lab staffing: average vacancy rates of 7%–11% in a major ASCP survey, reaching 25% in some areas; BLS projections, measured from 2020 levels, put the field at 372,000 roles by 2030 (both as summarized in an ADLM whitepaper).
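
As a quick sanity check on the market estimates above, the implied compound annual growth rate can be computed from each pair of figures. The sketch below assumes simple annual compounding between the two quoted years; the function name `implied_cagr` is illustrative, and the result is not necessarily the growth rate either research firm publishes. Under that assumption, the figures work out to roughly 4% a year for clinical laboratory services and roughly 6% a year for IVD.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two point estimates."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the list above, in billions of U.S. dollars.
lab_services = implied_cagr(224.35, 308.24, 2033 - 2025)
ivd = implied_cagr(77.73, 117.60, 2032 - 2025)

print(f"Clinical lab services: {lab_services:.1%} per year")
print(f"IVD market: {ivd:.1%} per year")
```

Both rates are modest next to the attention AI receives, which fits the article's framing: the shift is less about market size than about who controls ordering and interpretation.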

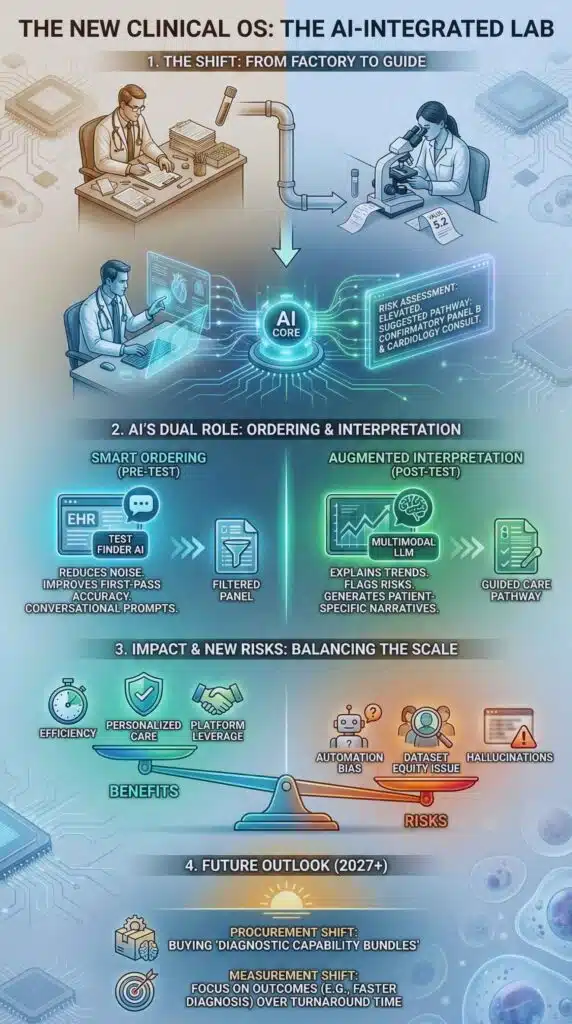

Ordering Becomes A Conversation: AI-Integrated Labs Inside The EHR

The most immediate shift is not flashy image recognition. It is test selection. That sounds mundane until you remember how much care flows from the first set of labs ordered in an ED, clinic, or inpatient unit.

Labcorp’s provider-facing rollout of its AI-powered Test Finder is a signal of where the market is going. The product is positioned to help clinicians identify appropriate tests using conversational prompts, and it is designed to work inside clinical workflows through standards-based integration rather than living as a separate website tool.

This matters because the economics of diagnostics often turn on the first decision: order the right test, at the right time, for the right patient. If AI reduces low-value tests and improves first-pass accuracy, labs gain leverage in value-based care conversations. If AI nudges clinicians toward higher-margin assays without strong clinical justification, payers and regulators will push back.

AHRQ-backed work like ML-ROVER points to the same trend from a public-interest angle. It aims to use machine-learning-based decision support to reduce lab test overutilization in an ICU setting, with project dates running through mid-2026. The strategic message is simple: stewardship is becoming algorithmic.

| Before AI-Integrated Ordering | What AI Changes | New Risks To Manage |
| --- | --- | --- |
| Clinician relies on memory, habit, or local “favorite panels” | Suggests tests based on indication, prior results, and guidelines | Automation bias, “default ordering,” alert fatigue |
| Duplicate tests happen because prior data is hard to locate | Detects recent results and prompts reconsideration | Missed nuance in rapidly changing clinical status |
| Complex menus overwhelm non-specialists | Translates symptoms into appropriate diagnostics pathways | Hidden incentives and inconsistent suggestions |
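
The duplicate-detection row in the table above can be illustrated with a simple rule-based check, the kind of logic an AI ordering assistant might sit on top of. This is a minimal sketch, not any vendor's implementation: the test codes, the retest intervals, and the names `LabResult` and `find_recent_duplicate` are all hypothetical, and real intervals would come from local stewardship policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Optional

# Hypothetical minimum retest intervals, for illustration only.
MIN_RETEST_INTERVAL = {
    "HBA1C": timedelta(days=90),
    "LIPID_PANEL": timedelta(days=30),
    "TSH": timedelta(days=42),
}


@dataclass(frozen=True)
class LabResult:
    test_code: str
    resulted_at: datetime


def find_recent_duplicate(
    test_code: str,
    ordered_at: datetime,
    prior_results: Iterable[LabResult],
) -> Optional[LabResult]:
    """Return the newest prior result inside the retest interval, if any."""
    interval = MIN_RETEST_INTERVAL.get(test_code)
    if interval is None:
        return None  # No rule for this test, so nothing to flag.
    matches = [
        r
        for r in prior_results
        if r.test_code == test_code
        and timedelta(0) <= ordered_at - r.resulted_at < interval
    ]
    return max(matches, key=lambda r: r.resulted_at, default=None)


now = datetime(2026, 1, 15, 9, 0)
history = [
    LabResult("HBA1C", now - timedelta(days=20)),
    LabResult("TSH", now - timedelta(days=60)),
]
duplicate = find_recent_duplicate("HBA1C", now, history)
if duplicate is not None:
    print(f"HBA1C resulted on {duplicate.resulted_at:%Y-%m-%d}; reconsider repeating it?")
```

The check returns a prompt rather than blocking the order, which leaves room for the risk named in the same row: a patient whose status is changing quickly may legitimately need the repeat.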

Interpretation At Scale: From Numerical Results To Narrative Risk

The second wave is interpretation. Labs are moving from “here is the result” to “here is what this pattern could mean, and what to do next.” This is where large language models and multimodal models become tempting, because they can generate patient-specific explanations quickly.

The clinical promise is real. A well-designed interpretive layer can:

- explain borderline values in context

- flag critical trends across time

- recommend confirmatory testing when indicated

- reduce the cognitive load on clinicians who do not live in lab medicine

But interpretation is also where risk concentrates. If an algorithm explains a result incorrectly, it can mislead clinical reasoning even when the underlying lab value is accurate. That creates a different kind of diagnostic error: not a wrong measurement, but a wrong meaning.

Digital pathology shows how quickly interpretation is becoming computational. Mayo Clinic’s launch of Mayo Clinic Digital Pathology is framed around unlocking value from large slide archives and building models that support faster and more accurate diagnoses, including partnerships aimed at scaling generative AI in pathology. The broader message is that “the lab” now includes images, tissue, and multimodal records, not just chemistry analyzers.

| Traditional Lab Output | AI-Augmented Lab Output | What Changes Clinically |
| --- | --- | --- |
| Discrete result plus reference range | Result plus trend analysis plus patient-specific narrative | Clinicians get guidance, not just data |
| Manual reflex rules | Adaptive reflex suggestions based on prior history | More personalized pathways, higher governance needs |
| Specialist interpretation limited by time | Scalable interpretation across many sites | Standardization improves, but errors scale too |

Operations, Workforce, And The New Lab Control Tower

AI integration is also a response to capacity constraints.

Lab staffing shortages are not new, and professional groups have documented them for decades. The ADLM staffing whitepaper summarizes vacancy rates that remain persistently high in many settings, plus long-term structural issues like declining training pipelines and retirement waves. When a sector lives with chronic shortages, automation stops being optional and becomes a survival strategy.

In that environment, AI is being applied to:

- specimen routing and prioritization.

- analyzer maintenance prediction.

- quality control anomaly detection.

- staffing optimization.

- report drafting and call-center support.

The interesting twist is that not all “automation” reduces labor. Some of it changes labor. The lab starts needing more informatics talent, more data governance, and more clinical oversight for algorithmic outputs. The headcount mix shifts from bench-heavy to hybrid bench-plus-digital.

| Operational Pressure | How AI-Integrated Labs Respond | Workforce Implication |
| --- | --- | --- |
| High vacancy and burnout | Automate repetitive interpretation and workflow triage | Demand rises for informatics and QA roles |
| Growing test volume and complexity | Predict bottlenecks and allocate instruments dynamically | Labs need systems thinkers, not only technologists |
| Quality and turnaround expectations | Continuous monitoring and exception-based review | Oversight shifts from manual checks to audits |

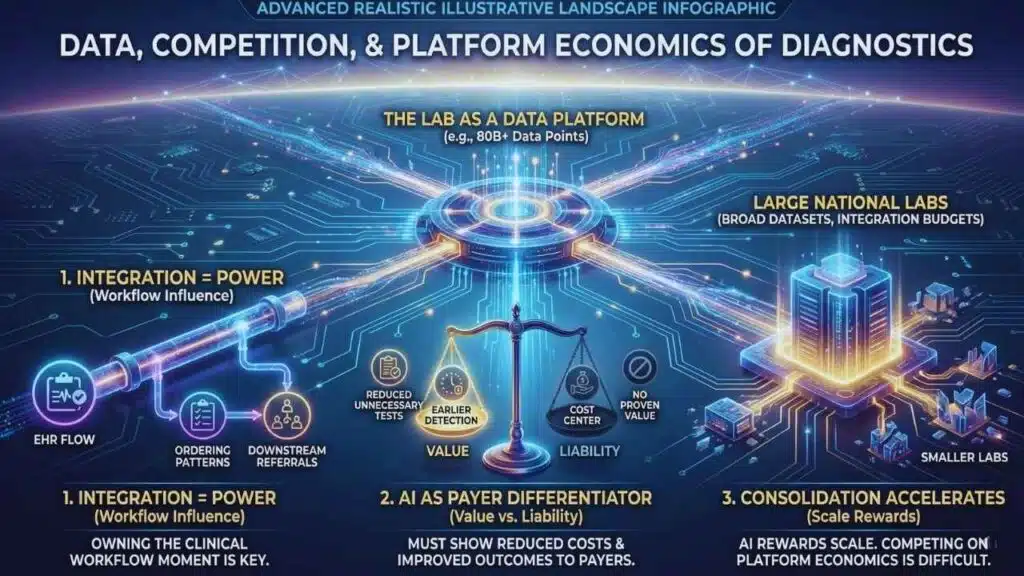

Data, Competition, And The Platform Economics Of Diagnostics

The competitive landscape is changing because AI makes lab data more valuable when it is connected and queryable.

Quest’s expanded relationship with Google Cloud highlights the scale: the company cites 200 million test requisitions and 80 billion clinical data points in its data assets. Whether every data point is equally useful is a separate question, but the direction is unmistakable. Labs are positioning themselves as data platforms.

Once labs operate like platforms, three business dynamics follow.

First, integration becomes power. Labs that sit inside EHR workflows influence ordering patterns, care pathways, and downstream referrals. The battle shifts from “who has the biggest network of draw sites” to “who owns the clinical workflow moment.”

Second, AI becomes a differentiator in payer negotiations. If a lab can credibly show reduced unnecessary testing, fewer readmissions from earlier detection, or improved pathway compliance, it has a story payers will listen to. If it cannot, AI becomes a cost center and a liability.

Third, consolidation accelerates. AI rewards scale because model performance often improves with broad, high-quality datasets and because integration work is expensive. Smaller labs can compete by specializing, partnering, or focusing on service quality, but competing head-to-head on AI platform economics is difficult.

| Likely “Winners” | Why They Benefit | Likely “Losers” | Why They Struggle |
| --- | --- | --- | --- |
| Large national labs | Scale, integration budgets, data breadth | Small generalist labs | Thin margins and limited digital investment |
| Health systems with strong informatics | Can govern AI and deploy safely | Systems with fragmented IT | Harder to integrate and monitor performance |
| Specialized reference labs | Clear niches, high-value interpretation | Commodity testing providers | Hard to differentiate without workflow ownership |

Regulation Catches Up: What Governance Looks Like When AI Touches A Diagnosis

The governance problem is not abstract. The moment AI influences test selection or interpretation, it becomes clinically consequential.

Regulators and standards bodies are signaling that they know this, even if policy remains uneven.

In the U.S., the FDA maintains a public list of AI-enabled medical devices and, importantly, has begun discussing approaches for identifying or “tagging” foundation models, including large language models, as part of its evolving oversight posture. That is a key signal: regulators are starting to treat general-purpose models differently from narrow, task-specific ones.

At the same time, enforcement boundaries can shift. Reporting in early January 2026 described the FDA signaling a lighter-touch stance toward some digital health and clinical decision support, which could expand the space for AI tools that influence care while also increasing pressure on health systems to self-govern responsibly.

Labs also operate in a regulatory patchwork that includes lab-developed testing debates. The FDA’s LDT approach has faced legal and political whiplash, and labs are watching closely because compliance obligations can reshape what they build in-house versus buy from vendors.

In Europe, the EU AI Act creates a structured timeline toward requirements for high-risk systems, with a general date of application in August 2026 and a multi-year glide path to full effect. Even non-European labs should pay attention because EU rules often influence global vendor behavior.

Finally, voluntary frameworks are becoming practical necessities. NIST’s Generative AI Profile provides a risk-management lens that health systems can adapt for procurement, testing, and monitoring. WHO guidance on large multimodal models frames risks like bias, privacy, and accountability as governance issues, not technical footnotes.

| Policy Milestone | Date | What It Signals For AI-Integrated Labs |
| --- | --- | --- |
| EU AI Act published in Official Journal | July 2024 | Risk-based regulation becomes operational, including GPAI concepts |
| EU AI Act general application | August 2, 2026 | Compliance pressure rises for high-risk clinical uses |
| NIST GenAI Profile published | July 26, 2024 | Practical risk controls become easier to standardize |
| FDA AI-enabled device list updated (content current) | Dec 5, 2025 | Visibility into FDA thinking on AI devices grows |
| LDT policy volatility continues | 2025–2026 | Labs hedge build-versus-buy decisions |

Safety, Bias, And Trust: The Hard Problems AI-Integrated Labs Cannot Outsource

If AI-integrated labs are the future, trust is the bottleneck.

ECRI has repeatedly warned about overreliance on AI, including the risk of clinicians placing too much trust in AI outputs. That warning applies directly to labs because lab results often feel “objective” to clinicians and patients. If AI adds an interpretive layer, users may assume it carries the same objectivity as the underlying assay.

Bias is another predictable fault line. Research and reporting in 2025 highlighted how generative AI systems can shift recommendations based on socioeconomic or demographic details even when symptoms are held constant. That matters in labs because ordering and interpretation can influence who gets confirmatory testing, who gets advanced imaging, and who gets specialist referral.
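
One way to test for the pattern described above is a counterfactual audit: hold each clinical vignette constant, vary a single demographic or socioeconomic field, and count how often the recommendation changes. The sketch below is illustrative only. The function `counterfactual_flip_rate`, the field names, and the deliberately biased `biased_stub` are all hypothetical stand-ins; a real audit would call the deployed model and use clinically reviewed vignettes.

```python
from typing import Callable, Iterable, Mapping, Sequence

Patient = Mapping[str, str]


def counterfactual_flip_rate(
    recommend: Callable[[Patient], str],
    vignettes: Iterable[Patient],
    attribute: str,
    values: Sequence[str],
) -> float:
    """Share of vignettes whose output changes when only `attribute` varies."""
    flipped = 0
    total = 0
    for vignette in vignettes:
        # Same symptoms every time; only the audited attribute differs.
        outputs = {recommend({**vignette, attribute: value}) for value in values}
        total += 1
        if len(outputs) > 1:
            flipped += 1
    return flipped / total if total else 0.0


def biased_stub(patient: Patient) -> str:
    """Stand-in for a model, written to be biased so the audit has something to find."""
    if patient["symptom"] == "chest pain":
        if patient.get("insurance") == "uninsured":
            return "observe"
        return "troponin"
    return "cbc"


vignettes = [{"symptom": "chest pain"}, {"symptom": "fatigue"}]
rate = counterfactual_flip_rate(
    biased_stub, vignettes, "insurance", ["private", "uninsured"]
)
print(f"Recommendations changed in {rate:.0%} of vignettes")
```

A nonzero flip rate does not prove harm on its own, since some attributes are clinically relevant, but it tells reviewers exactly which cases to examine.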

Civil rights organizations are now directly entering the governance conversation. The NAACP’s push for an equity-first framework and calls for bias audits and transparency measures illustrate how social legitimacy is becoming part of AI deployment, not something handled after the fact.

The practical takeaway for lab leaders is that “AI governance” must include:

- model validation on local populations.

- monitoring for drift and failure modes.

- explainability that is meaningful to clinicians.

- documentation of training data and limitations.

- clear accountability when outputs are wrong.

| Risk Category | What It Looks Like In Diagnostics | Control That Actually Works |
| --- | --- | --- |
| Automation bias | Clinician follows AI suggestion over judgment | Training, forced second-check for high-risk calls |
| Dataset bias | Different recommendations across demographics | Bias audits, representative evaluation sets |
| Model drift | Performance changes as populations and practice change | Continuous monitoring and recalibration |
| Hallucination or overreach | Confident narrative not supported by evidence | Guardrails, citations to guidelines inside tools, escalation rules |
| Privacy and secondary use | Lab data reused beyond patient expectations | Strong governance, consent policies, vendor controls |

What Happens Next: Milestones To Watch Through 2027

The next phase will look less like “AI features” and more like a restructuring of diagnostic workflows.

Expect procurement to change. Health systems will increasingly buy “diagnostic capability bundles” that include assays, integration, and interpretive support rather than buying tests alone. Labs that cannot integrate will be treated as commodity suppliers.

Expect measurement to change. Leaders will ask for outcomes like reduced repeat testing, fewer unnecessary panels, and faster time-to-diagnosis, not just turnaround times. A lab’s AI layer will need to prove its value in metrics that finance and quality teams both respect.

Expect governance to harden. EU timelines, evolving U.S. posture, and pressure from patient-safety groups will make “pilot and pray” deployments harder to justify. Health systems will need model registries, monitoring dashboards, and incident response processes just like they have for medication safety.

Expect a new clinical role to emerge. Many systems will need a visible owner for algorithmic diagnostics. Not a vendor manager, but a clinical leader accountable for how AI influences test ordering, interpretation, and follow-up pathways.

Milestones worth watching

- By August 2026, EU AI Act obligations become more operational, shaping vendor compliance behaviors.

- Through 2026, more labs will push AI tools into EHR ordering flows, not just patient portals.

- By 2027, governance standards for general-purpose and foundation models in health will likely become more formal, either via regulation, payer requirements, or accreditation pressure.

The big question is not whether labs will adopt AI. Many already have. The question is whether health systems can build enough governance and clinical accountability to harness AI’s interpretive power without scaling bias, error, and misplaced certainty.