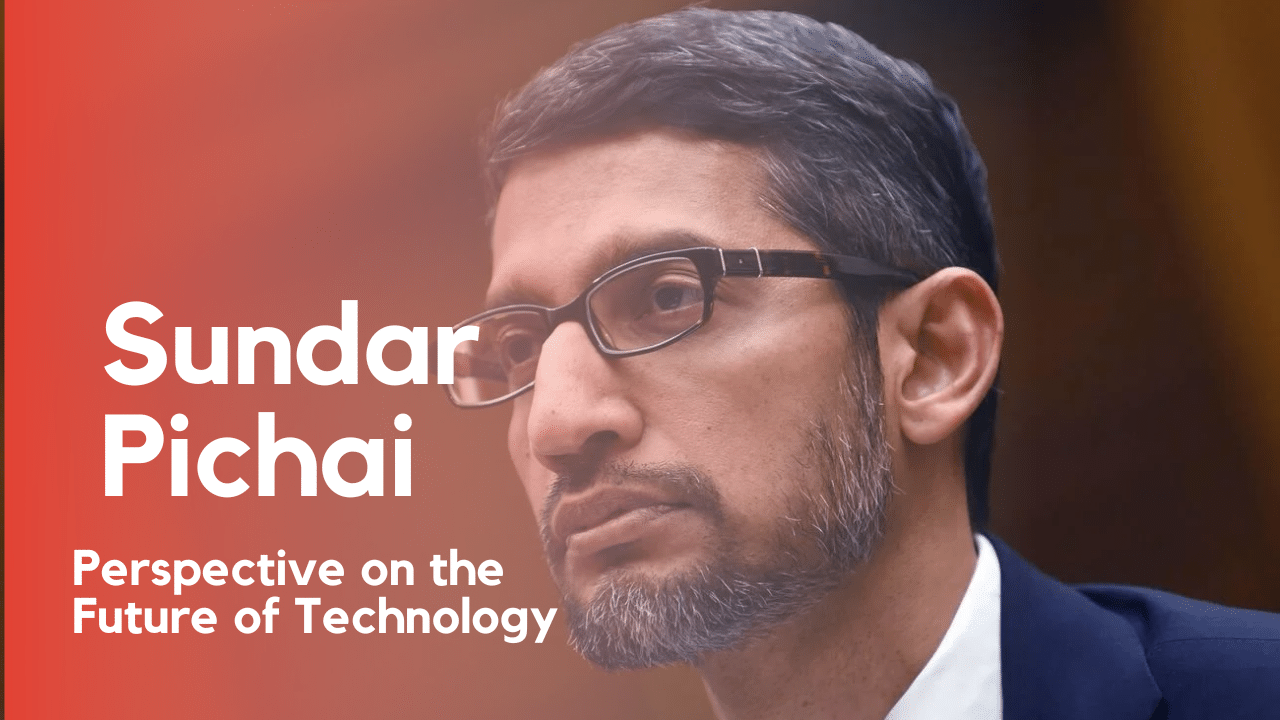

Microsoft President Brad Smith has raised a significant warning about the need for human control in the realm of artificial intelligence (AI). He emphasized that AI has the potential to pose risks similar to those of nuclear war, potentially even leading to human extinction.

Smith stressed the importance of having individuals ready to promptly shut down AI tools as a preventive measure against catastrophic consequences. Alternatively, he proposed a more measured approach by slowing down AI development to allow humans to better keep pace.

Smith also highlighted that artificial intelligence can serve as both a powerful tool and a potential weapon. Navigating this landscape requires a clear understanding of how to manage the technology in ways that maximize its benefits while minimizing its risks. It is therefore imperative to turn insights from experts like Smith into actionable solutions that work in real-world scenarios.

This article examines the Microsoft president’s views on artificial intelligence. It also explores the approaches the global community is taking to control the expanding influence of AI.

What does the Microsoft President say about AI?

Companies creating #AI have a responsibility to ensure that it is safe, secure, and under human control. This initiative is a vital step to bring the tech sector together in advancing AI responsibly and tackling challenges so that it benefits all humanity. https://t.co/OoOaArcEIt

— Brad Smith (@BradSmi) July 26, 2023

On August 28, 2023, Brad Smith acknowledged that every technology ever created has the potential to be both a valuable tool and a harmful weapon. He emphasized that humanity must keep control over artificial intelligence to prevent it from becoming destructive.

Smith emphasized that AI is a tool designed to enhance human thinking, enabling individuals to think more efficiently and quickly. He cautioned against the misconception that AI might replace the need for human thinking altogether, stating that such a perspective would be a significant misjudgment.

This sentiment resonates with educators around the world, who are witnessing a growing trend of students using AI tools like ChatGPT for dishonest purposes, such as cheating. Some educators have gone so far as to prohibit these technologies due to concerns that students may become overly reliant on them.

The issue of overreliance is profound: if students begin depending on AI to do their thinking for them, they risk missing out on essential skills and knowledge. Additionally, experts like Smith have warned that artificial intelligence could lead to the downfall of humanity.

One of the alarming concerns is the possibility of someone misusing AI systems to create threats comparable to the devastating impact of nuclear warfare. Hence, Smith, in his role as the president of Microsoft, underscored the dire need for safeguards against such a catastrophic scenario.

He emphasized that this necessity extends beyond individual companies making ethical choices; it requires the establishment of new laws and regulations that ensure the inclusion of safety measures. Smith highlighted that similar needs for regulatory measures have arisen in other contexts, indicating the global significance of addressing AI’s potential risks.

“Just consider this: electricity relies on circuit breakers. When you put your children on a school bus, you have the reassurance of an emergency brake. We’ve implemented safety measures like this for other technologies in the past. Now, it’s crucial that we do the same for AI,” emphasized Smith during a recent discussion.

Smith’s concerns echoed warnings he had made the previous week. He drew parallels between the rapid development of AI and the tech industry’s earlier missteps with social media.

The Microsoft president expressed his view that developers had been overly optimistic about the potential of social networks. He remarked that there was a certain degree of excessive enthusiasm for the positive impacts of social media, without adequately considering the accompanying risks.

In essence, Smith’s perspective underscores the importance of maintaining a balanced approach when embracing new technologies, particularly AI, by taking into account both the benefits and potential pitfalls.

How are we combating dangers from AI?

Several countries are increasingly recognizing the significant impact of artificial intelligence (AI) and have taken steps to regulate its growth through legislative measures. A recent example of this is the Philippines, which introduced an AI Bill aimed at establishing comprehensive controls.

Representative Robert Ace Barbers of Surigao del Norte’s Second District proposed House Bill No. 7396, outlining the creation of the Artificial Intelligence Development Authority (AIDA). The primary objective of AIDA would be to develop and implement a national AI strategy.

Under this proposed legislation, AIDA would carry out thorough risk assessments and impact analyses to ensure that AI technologies adhere to ethical guidelines and safeguard individual well-being. Additionally, the authority would work towards establishing cybersecurity standards for AI to prevent potential hacking and other forms of cyberattacks.

The introduction of this law highlights the Philippines’ understanding of the significance of AI in national development. However, it also acknowledges the potential risks and challenges associated with the rapid advancements in AI technology. By proposing such legislation, the Philippines aims to strike a balance between harnessing the benefits of AI and addressing potential concerns.

The United States has also taken steps to address the implications of AI, recognizing the need to maximize its advantages while minimizing potential drawbacks. This was evident in the country’s inaugural AI Senate hearing held in May.

The hearing featured discussions with Sam Altman, the CEO of OpenAI, the company behind the creation of ChatGPT. During the hearing, Altman and several lawmakers concurred on the necessity to establish new regulations for AI technology.

Furthermore, efforts are being made by the US Copyright Office to address the impact of AI on intellectual property rights. To shape future AI copyright laws, the office has initiated a public comment period, allowing individuals to contribute their insights and guide the development of appropriate measures.

Conclusion

Raising a warning flag about the potential risks of unregulated artificial intelligence, Microsoft’s President Brad Smith emphasizes the importance of maintaining stringent human oversight over this technology to avert catastrophic consequences.

In response, companies have been working to align their AI programs with the goals and values of humanity. One such example is Anthropic’s Claude chatbot, which adheres to the principles of “constitutional AI” to mitigate negative responses.

It’s evident that we are firmly in the era of AI. As individuals, it’s crucial to equip ourselves with the knowledge and skills needed to navigate it effectively. A valuable starting point is to stay informed about the latest digital tips and trends through resources like Inquirer Tech.