Have you ever hit “submit” on a loan application and felt a knot in your stomach? You are not the only one. It feels like your financial future is in the hands of a mysterious machine. We often assume computers are neutral math wizards. But what if the digital judge deciding your mortgage rate has the same biases as a human?

I have spent years exploring how financial health connects to personal well-being. I have learned that “fairness” in banking isn’t just about numbers. It is about your peace of mind and your right to a fair shot. Did you know that in 2025, AI was involved in over 70% of loan underwriting decisions at top U.S. banks? That means algorithms are likely deciding your next car loan or mortgage.

This guide will walk you through the ethics of algorithmic lending. I will show you how these systems work, where they fail, and the specific rights you have today.

Understanding Algorithmic Lending

Banks have moved far beyond the days of a loan officer looking you in the eye and shaking your hand. Today, smart software does the heavy lifting. These systems are designed to process information at lightning speed.

How AI is Used in Lending Decisions

Lenders use Artificial Intelligence (AI) to predict if you can pay back a loan. The system analyzes thousands of data points in seconds. It looks at your credit score, income, and debt-to-income ratio.
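Of those three inputs, the debt-to-income ratio is the easiest one to check yourself. Here is a minimal Python sketch of the usual calculation: monthly debt payments divided by gross monthly income. The function name and the sample figures are my own illustration, not any lender's actual formula or cutoff.

```python
def debt_to_income(monthly_debt_payments: float, gross_monthly_income: float) -> float:
    """Return the debt-to-income ratio as a fraction (0.30 means 30%)."""
    if gross_monthly_income <= 0:
        raise ValueError("gross monthly income must be positive")
    return monthly_debt_payments / gross_monthly_income


# Illustrative numbers only: $1,800 in monthly debt payments, $6,000 gross income.
ratio = debt_to_income(1800, 6000)
print(f"DTI: {ratio:.0%}")
```

A lower ratio generally looks better to a lender, because it means less of your income is already committed to debt.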

But it goes deeper. Some modern models consider “alternative data” like your rent payments or utility bills. A 2025 report from CoinLaw noted that digital-only banks can now process loan decisions in under six minutes thanks to these tools.

For you, this means faster answers. You don’t have to wait weeks to know if you can buy that new home. But speed comes with a trade-off. We need to ask if the machine is making the right call, or just the fast one.

“Smart algorithms have changed the face of lending, making some banks smarter but also raising tough questions on fairness.”

Potential Benefits of AI in Banking

When done right, AI is a powerful tool for inclusion. Traditional credit scores often leave out young people or recent immigrants. AI can look at a broader picture.

Recent data support this positive shift. In 2025, AI-enhanced scoring models helped increase loan approval rates for “underbanked” individuals by 22%. That is a huge win for people who were previously invisible to the system.

These tools also protect your money. They spot fraud patterns instantly, keeping your accounts safer than any human team could. The goal is a system that is efficient, safe, and open to more people.

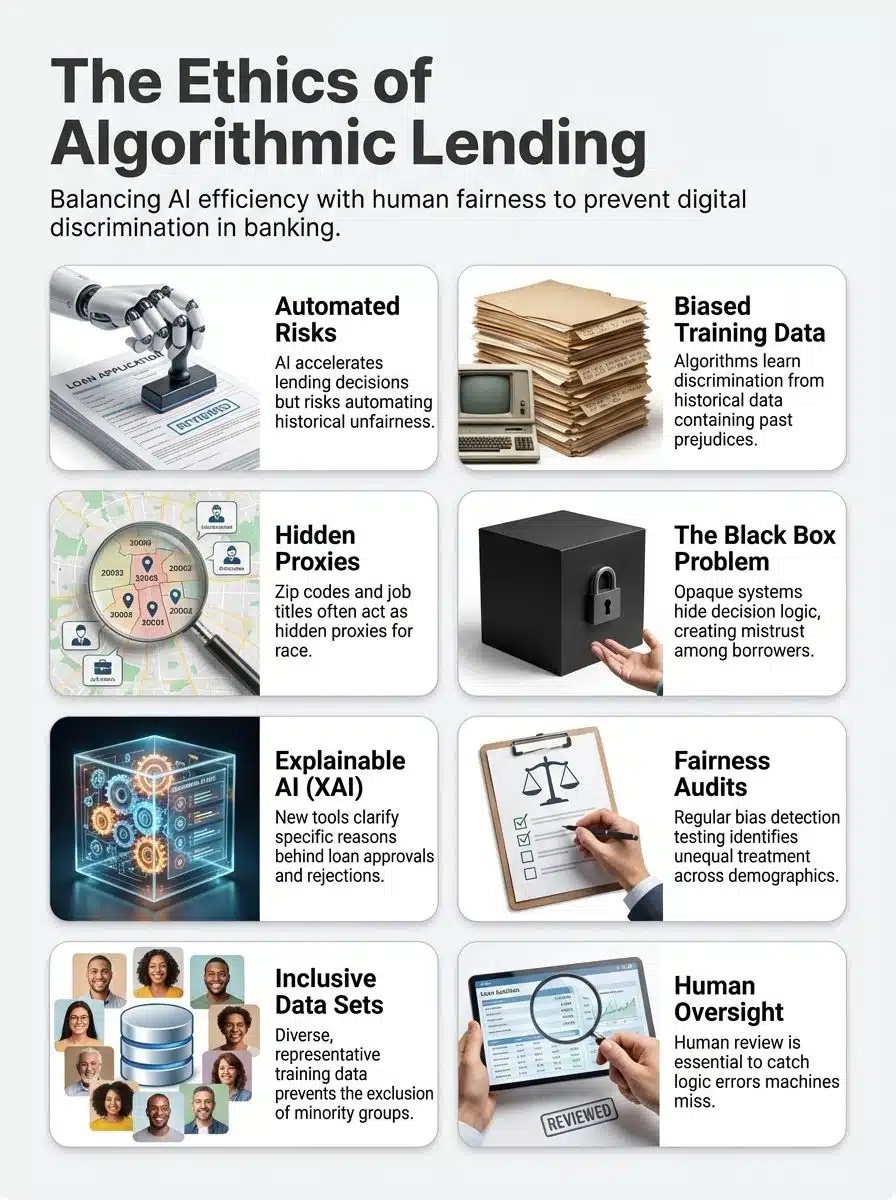

The Ethical Dilemma of Algorithmic Lending

We want to believe that math is always fair. But algorithms are built by humans, and they learn from human history. This creates a risk of “digital redlining,” where old biases get baked into new code.

Can Algorithms Be Truly Fair?

An algorithm is like a student. It learns from the textbooks you give it. If a bank trains its AI on loan data from the last 50 years, the computer sees a pattern. It notices that certain groups were denied loans more often.

The machine doesn’t know why this happened. It just thinks, “This group is high risk.” It then repeats that discrimination today.

Experts call this “algorithmic bias.” A 2024 analysis by the Urban Institute highlighted this danger. They found that in some markets, Black and Brown borrowers were still more likely to be denied loans compared to white borrowers with similar profiles. Fairness requires active correction, not just passive data processing.
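One simple way to start that active correction is to measure the gap. The sketch below compares approval rates between two groups and reports their ratio. The group labels and decisions are made up for illustration. The 0.8 cutoff is the “four-fifths” rule of thumb borrowed from U.S. employment guidelines; I use it here only as an example threshold, not as a legal test for lending.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def adverse_impact_ratio(decisions, protected, reference):
    """Approval rate of the protected group divided by the reference group's."""
    rates = approval_rates(decisions)
    if rates[reference] == 0:
        raise ValueError("reference group has no approvals to compare against")
    return rates[protected] / rates[reference]


# Made-up data: group A is approved 80 times out of 100, group B 50 out of 100.
sample = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)

ratio = adverse_impact_ratio(sample, protected="B", reference="A")
needs_review = ratio < 0.8  # illustrative threshold, see the note above
print(round(ratio, 3), needs_review)
```

A low ratio does not prove discrimination on its own. It is a signal that someone should look closer at why the gap exists.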

The Risk of Reinforcing Historical Biases

History has a way of sneaking into the present. In the past, discriminatory laws prevented many families from building wealth or buying homes in certain neighborhoods.

If an AI uses “zip code” as a factor, it might accidentally block loans for those same neighborhoods today. The computer isn’t trying to be mean. It is just following a statistical path that leads to an unfair result.

This creates a cycle. If you can’t get a loan, you can’t build credit. If you can’t build credit, the AI rates you as high risk. Breaking this cycle is the core ethical challenge for modern banking.

Sources of Bias in AI Banking

You might wonder how bias gets in if the computer is just looking at numbers. It usually enters through two side doors: the data itself and “proxy variables.”

Bias in Training Data

Imagine teaching a child to recognize “successful professionals” by showing them pictures of CEOs from the 1950s. The child would learn that only men in suits can be successful. AI works the same way.

If the training data is full of past decisions where women or minorities were unfairly rejected, the AI copies that behavior. It treats those old mistakes as the “correct” rule.

We saw this with the Apple Card launch in 2019. Tech-savvy couples noticed that husbands were getting much higher credit limits than their wives, even when they shared assets. The model appeared to have learned a gender bias that no one explicitly programmed.

Proxy Variables and Hidden Discrimination

Laws strictly forbid banks from using race, gender, or religion to decide on a loan. So, the algorithm doesn’t ask those questions. Instead, it finds “proxies.”

A proxy is a piece of data that correlates with a protected trait. Here are common examples:

- Zip Code: This often aligns with racial demographics.

- College Attended: This can signal gender or background.

- Shopping Habits: Where you buy groceries can reveal your income class.

A recent high-profile case involved Navy Federal Credit Union. In late 2023, reports showed significant disparities in their mortgage approval rates. Even when applicants had similar incomes and debt ratios, Black applicants faced higher denial rates. The system wasn’t “racist” on purpose, but the proxies led to a discriminatory outcome.
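How would an auditor spot a proxy? One rough check is to ask how well a single field lets you guess the protected trait. The sketch below compares two numbers: how often you would guess the group correctly with no information, and how often you would guess correctly once you know the field's value. The records, field names, and function name are hypothetical, and real audits use far richer statistics than this.

```python
from collections import Counter, defaultdict


def proxy_strength(records, feature, protected):
    """Return (baseline_accuracy, accuracy_when_feature_is_known).

    Each accuracy comes from always guessing the most common group,
    first across all records and then within each feature value.
    """
    by_value = defaultdict(Counter)
    overall = Counter()
    for row in records:
        by_value[row[feature]][row[protected]] += 1
        overall[row[protected]] += 1
    total = sum(overall.values())
    baseline = max(overall.values()) / total
    with_feature = sum(max(c.values()) for c in by_value.values()) / total
    return baseline, with_feature


# Made-up records: two zip codes with different group mixes.
sample_rows = (
    [{"zip_code": "11111", "group": "A"}] * 3
    + [{"zip_code": "11111", "group": "B"}] * 1
    + [{"zip_code": "22222", "group": "B"}] * 3
    + [{"zip_code": "22222", "group": "A"}] * 1
)

baseline, with_zip = proxy_strength(sample_rows, "zip_code", "group")
print(baseline, with_zip)
```

The bigger the jump between the two numbers, the more that field is standing in for the protected trait, even though the trait itself never appears in the loan model.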

Transparency and Explainability in AI Systems

When a human denies your loan, they have to tell you why. When a “Black Box” AI does it, things get murky. You deserve to know exactly what hurt your chances.

Opening the Black Box of AI

The “Black Box” problem refers to AI models that are so complex, even their creators can’t trace exactly how they made a decision. It’s like a chef who makes a stew but can’t tell you the ingredients.

This is dangerous in banking. If you don’t know why you were rejected, you can’t fix it. Was it your credit score? Your debt? Or was it a weird correlation, like the fact that you shop at a discount store?

This is where Explainable AI (XAI) comes in. These are new tools designed to translate complex math into plain English. They help both banks and borrowers understand the “why” behind the “no.”

The Role of Explainable AI (XAI)

XAI is your advocate inside the machine. It forces the system to show its work. For example, instead of just saying “Score: 450,” an XAI tool might say, “Score lowered by 50 points due to high credit card utilization.”

This leads to a critical consumer right: the Adverse Action Notice. In the U.S., if you are denied credit, the lender must send you this notice.

In September 2023, the Consumer Financial Protection Bureau (CFPB) issued strict guidance on this. They warned lenders that they cannot just use a generic checklist of reasons if an AI was involved. They must provide the specific reason the algorithm rejected you.
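To make “specific reason” concrete, here is a minimal sketch of how reasons can be pulled from a simple additive scoring model. It measures how far each factor moved the score compared with a typical applicant and reports the biggest negative movers. The weights, baseline values, and reason wording are invented for illustration. Real lenders use more complex models and explanation methods, and this is not how any particular bank or the CFPB defines a reason.

```python
def top_reasons(applicant, baseline, weights, reasons, k=2):
    """Return the k factors that lowered the score most, with point impact."""
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    # Sort ascending so the most negative contribution comes first.
    negative = sorted((points, name) for name, points in contributions.items() if points < 0)
    return [(reasons[name], round(points, 1)) for points, name in negative[:k]]


# Hypothetical model: points added or removed per unit of each factor.
weights = {"utilization": -100.0, "late_payments": -30.0, "years_of_history": 5.0}
baseline = {"utilization": 0.30, "late_payments": 0, "years_of_history": 8}
reasons = {
    "utilization": "High credit card utilization",
    "late_payments": "Recent late payments",
    "years_of_history": "Short credit history",
}

applicant = {"utilization": 0.80, "late_payments": 1, "years_of_history": 10}
result = top_reasons(applicant, baseline, weights, reasons)
for text, points in result:
    print(f"{text}: {points} points")
```

With these made-up numbers, the first line mirrors the earlier example: the score dropped by 50 points because of high credit card utilization.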

This transparency puts power back in your hands. It forces banks to prove their decisions are based on your financial reality, not a hidden bias.

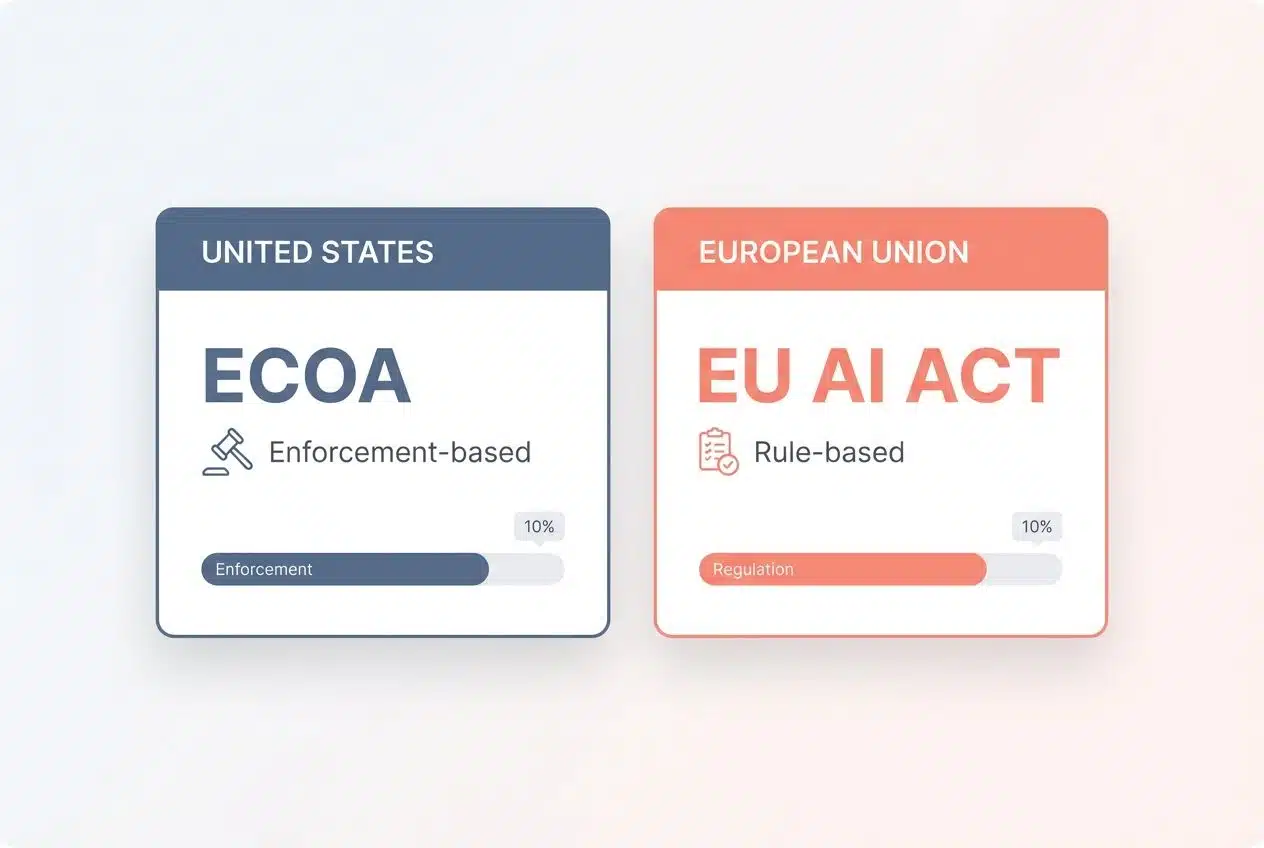

Regulation and Accountability

Governments are waking up to these risks. New “sheriffs” are in town to ensure that digital lending stays fair. The rules are getting stricter in both the U.S. and Europe.

Current Legal Frameworks for AI in Banking

In the United States, the primary shield for borrowers is the Equal Credit Opportunity Act (ECOA). It makes discrimination illegal, whether it’s done by a person or a PC.

The CFPB is actively enforcing this. They have made it clear that “the computer did it” is not a valid excuse for breaking the law. Lenders are fully responsible for their algorithms.

Across the ocean, the European Union is taking a different approach. The EU AI Act, which entered its second phase in August 2025, specifically classifies AI used for credit scoring as “High Risk.” This means European banks must pass rigorous conformity assessments before they can even switch their systems on.

The Importance of Regulatory Oversight

Regulation is what turns “good intentions” into “required actions.” Without these laws, companies might cut corners to save money. Strong oversight ensures that fairness is a requirement, not a bonus feature.

Let’s compare how the two major regions handle this:

| Region | Key Regulation | Approach |
|---|---|---|
| United States | Equal Credit Opportunity Act (ECOA) | Enforcement-based: Regulators like the CFPB punish unfair outcomes after they happen. |
| European Union | EU AI Act (2025) | Rule-based: Strict pre-launch testing and certification are required for “High Risk” systems. |

This pressure works. Because of these laws, banks are now investing millions in “fairness audits.” They know that if their AI slips up, the fines will be massive.

Solutions for Avoiding Bias in Algorithmic Lending

We don’t have to scrap AI to fix these problems. Smart engineers and ethical leaders are building solutions that keep the speed of AI while removing bias.

Fairness Audits and Bias Detection Tools

Banks are now hiring “AI Auditors” whose only job is to try to break the system. They throw thousands of test cases at the algorithm to see if it treats different groups unfairly.

They use advanced software to do this. Here are some of the top tools leading the charge:

- IBM AI Fairness 360: An open-source toolkit that helps developers check for unwanted bias in their code.

- FairPlay: A “bias bounty” system that rigorously tests lending models to ensure they comply with fair lending laws.

- Google What-If Tool: Allows teams to test hypothetical situations, like “What if this applicant were a different gender?” to see if the result changes.
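The “what if this applicant were a different gender?” idea can be sketched in a few lines of plain Python. The test below changes only one attribute, reruns the model, and flags any applicant whose outcome changes. The `toy_model` is deliberately biased so the test has something to catch. It is my own illustration and does not use the API of any of the tools listed above.

```python
def flip_test(model, applicants, attribute, values):
    """Flag applicants whose decision changes when only `attribute` changes."""
    flagged = []
    for applicant in applicants:
        outcomes = {value: model({**applicant, attribute: value}) for value in values}
        if len(set(outcomes.values())) > 1:
            flagged.append((applicant, outcomes))
    return flagged


def toy_model(applicant):
    """Hypothetical, intentionally unfair model used only for this demo."""
    if applicant["income"] >= 50_000:
        return "approve"
    if applicant["income"] >= 40_000 and applicant["gender"] == "M":
        return "approve"
    return "deny"


applicants = [
    {"income": 60_000, "gender": "F"},
    {"income": 45_000, "gender": "F"},
    {"income": 30_000, "gender": "M"},
]

flagged = flip_test(toy_model, applicants, "gender", ["F", "M"])
for applicant, outcomes in flagged:
    print(applicant["income"], outcomes)
```

Passing this kind of test is not the end of the story. A model that never sees gender at all would pass it and could still discriminate through proxies, which is why auditors also compare outcomes across groups.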

Inclusive and Representative Data Sets

The best way to fix a biased student is to give them better textbooks. Banks are working to build “inclusive data sets” that reflect the real world.

This means actively including data from underrepresented groups. It also means using “alternative data” responsibly. For example, including rental payment history can help millions of people who don’t have a mortgage but pay their rent on time every month.

The Federal Reserve has highlighted that diverse sampling is key. When the data looks like the real population, the AI makes decisions that serve the real population.

Human Oversight in AI Decision-Making

At the end of the day, you need a human in the loop. This is often called “Human-in-the-Loop” (HITL) design. It acts as a safety valve for the system.

If an AI rejects a loan, a trained human reviewer should often take a second look. They can spot context that a machine misses. Maybe you missed a bill payment because of a hospital stay, but you’ve been perfect ever since. A human understands that story. A spreadsheet does not.
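In software terms, this can be as simple as a routing rule: the model may approve on its own, but it never issues a final “no” without a person. The sketch below is one possible design under that assumption. The threshold, names, and messages are hypothetical, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str  # "approved" or "needs_human_review"
    note: str


def route(repayment_probability: float, approve_at: float = 0.80) -> Decision:
    """Auto-approve confident cases and send everything else to a reviewer."""
    if not 0.0 <= repayment_probability <= 1.0:
        raise ValueError("repayment_probability must be between 0 and 1")
    if repayment_probability >= approve_at:
        return Decision("approved", "Model score met the approval threshold.")
    # The model alone never denies: a person sees every would-be rejection.
    return Decision("needs_human_review", "Model score below threshold; reviewer decides.")


print(route(0.91).outcome)
print(route(0.55).outcome)
```

The trade-off is speed. Every case sent to a person takes longer, so a bank has to decide how much delay it accepts in exchange for that second look.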

Trust grows when you know that a person is accountable. It ensures that empathy remains part of the financial process.

Global Approaches to Ethical AI in Banking

Different cultures solve problems in different ways. While the U.S. and EU are the big players, other nations are innovating rapidly to solve the puzzle of ethical AI.

Regional Differences in Addressing Bias

In Asia, Singapore has launched the Veritas Initiative. This is a framework led by the Monetary Authority of Singapore. It gives financial institutions a clear set of principles to validate their AI regarding fairness, ethics, and transparency.

Meanwhile, the UK is focusing heavily on “explainability.” Their regulators want to ensure that if a computer says “no,” the customer gets a clear, plain-language reason why.

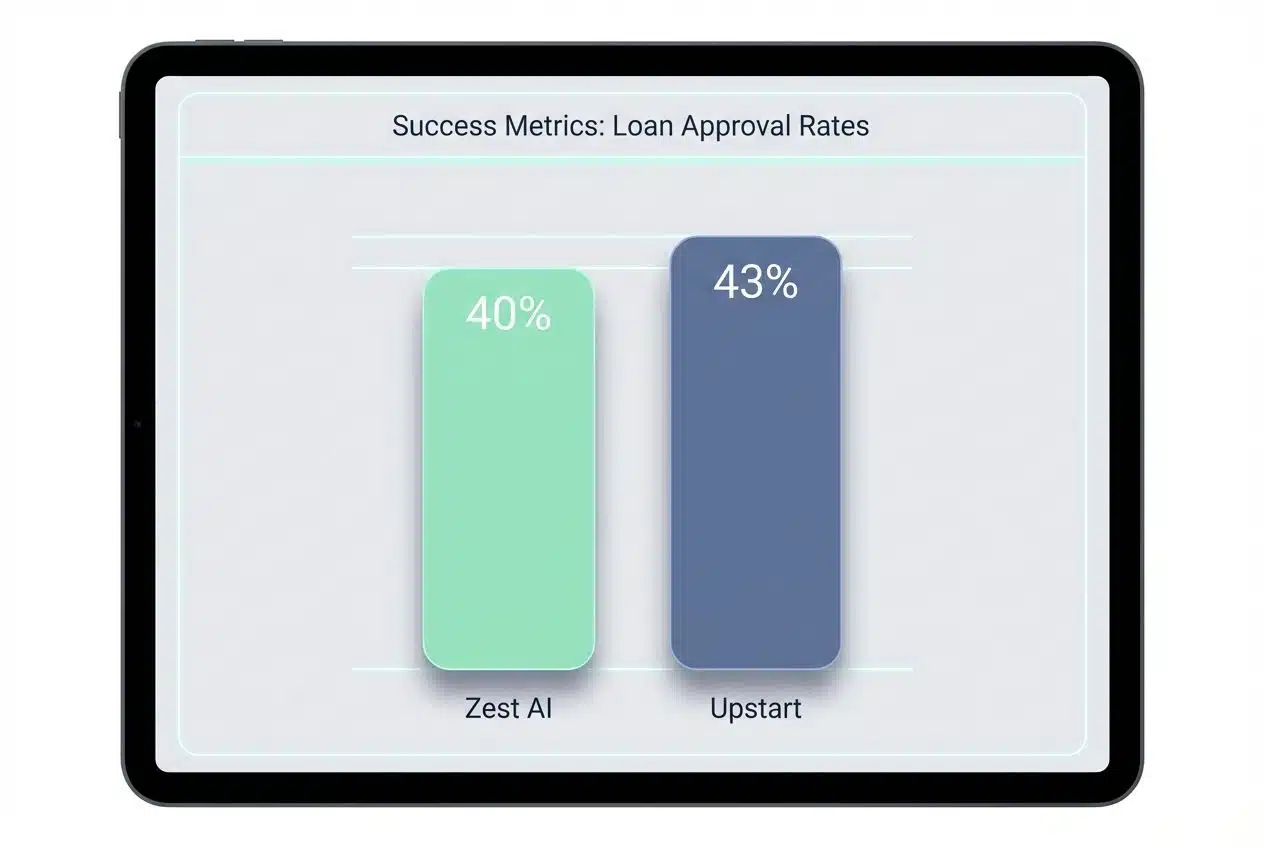

Examples of Successful Ethical AI Models

Success stories are popping up everywhere. These companies are proving that you can be profitable and fair.

- Zest AI: This company uses a technique called “Adversarial Debiasing.” Their tool, Zest FairBoost, helps lenders optimize their models to be fairer. They claim this has increased approvals for protected classes by 40% without increasing risk.

- Upstart: This lending platform uses AI to look beyond FICO scores. They consider education and employment history. Their data shows this approach approves 43% more minority borrowers than traditional models.

- Citibank: Since 2022, they have published annual reports specifically tracking their progress on ethical AI and bias reduction.

- Monzo Bank (UK): Known for its transparent approach, giving users detailed insights into their financial health and decision factors.

- Microsoft’s Responsible AI: Microsoft is not a bank, but financial partners around the world are adopting its Responsible AI principles as a standard for ethical code.

Real-World Cases of Algorithmic Bias

We learn the most from our mistakes. Several high-profile failures have forced the industry to look in the mirror and do better.

Notable Failures in Automated Lending

These stories made headlines and sparked real change.

- Navy Federal Credit Union (2023): As mentioned earlier, CNN found that the largest credit union in the U.S. had a massive approval gap between White and Black applicants. This sparked lawsuits and a nationwide conversation about hidden bias.

- Apple Card (2019): The launch was marred by accusations that the algorithm favored men. It was a wake-up call that even the biggest tech companies can have blind spots in their data.

- Amazon (2014-2017): While this was a hiring tool, the lesson applies to banking. Amazon built an AI to review resumes, but it learned to penalize the word “women’s” (as in “women’s chess club”). They scrapped the tool, proving that sometimes you have to pull the plug to do the right thing.

Lessons Learned from Past Mistakes

The biggest lesson is that “blindness” doesn’t work. You can’t just hide race or gender from the data and hope for the best. The AI will find proxies.

Instead, banks are learning to be “fairness aware.” This means actively testing for bias during the design phase, not just after the product launches. It also means listening to customers.

When users speak up about unfair treatment, banks now know they have to listen and investigate quickly. Transparency isn’t just nice to have; it is a survival strategy for modern banks.

Final Thoughts

We have covered a lot of ground, from “black box” algorithms to the laws protecting your rights. It is clear that while AI can make banking faster, it requires a human touch to keep it fair.

As we navigate this new era and the ethics of algorithmic lending, remember that you have power. You have the right to ask why. You have the right to a specific explanation if you are denied credit. And you have the power to choose lenders who prioritize ethics.

I believe that when we demand transparency, we build a system that works for everyone. Financial wellness is about more than just money; it is about trust. By staying informed and vocal, we can ensure that the future of banking is not just smart, but kind.

Here is to a future where fairness is the default setting!