In a major move to combat growing music fraud, Deezer, the Paris-based music streaming platform, announced on Friday that it will begin labeling albums that contain AI-generated songs. This step is part of a broader effort by the company to crack down on the increasing misuse of artificial intelligence by scammers attempting to exploit the platform’s royalty system.

What’s Changing on Deezer?

Starting immediately, Deezer users will begin seeing on-screen labels warning when certain songs on an album were created with the help of AI music generation tools. The tag will specifically read “AI-generated content,” alerting listeners that not all the tracks were made by human musicians.

This is the latest tactic in Deezer’s ongoing strategy to protect the integrity of its streaming ecosystem, which is being threatened by individuals and bots using generative AI to produce and mass-upload music solely for the purpose of harvesting royalties.

Surge in AI-Generated Uploads

The company shared some startling statistics: as of mid-2025, approximately 18% of all songs uploaded to Deezer each day, or about 20,000 tracks, are fully AI-generated. That is a steep increase from just three months earlier, when the figure stood at 10%, according to Deezer CEO Alexis Lanternier.
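For a rough sense of scale, the figures quoted above can be turned into a back-of-the-envelope estimate. The sketch below assumes that the 20,000 daily tracks correspond exactly to the 18% share; the variable names are illustrative, and the derived total is an implication of those two numbers, not a figure Deezer has published.

```python
# Figures quoted in the article.
ai_share_now = 0.18         # share of daily uploads that are fully AI-generated
ai_tracks_per_day = 20_000  # approximate number of such tracks per day
ai_share_before = 0.10      # share reported three months earlier

# Assumption: 20,000 tracks is exactly 18% of daily uploads.
implied_total_uploads = ai_tracks_per_day / ai_share_now

# Change in share, in percentage points and in relative terms.
growth_points = (ai_share_now - ai_share_before) * 100
relative_growth = ai_share_now / ai_share_before - 1

print(f"Implied total uploads per day: about {round(implied_total_uploads):,}")
print(f"Increase in AI share: {round(growth_points)} percentage points")
print(f"Relative increase in AI share: about {round(relative_growth * 100)}%")
```

Under that assumption, the numbers imply a little over 110,000 uploads per day in total, with the AI share rising by 8 percentage points in three months.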

Deezer believes this rapid growth is being driven not by artists exploring new tools, but by bad actors attempting to game the system.

How AI Music Is Abused for Profit

Streaming fraud is not new, but the involvement of AI adds a dangerous twist. Music fraudsters can now use generative tools to rapidly churn out thousands of tracks that mimic real music but are often of poor quality. These tracks are then uploaded to streaming platforms like Deezer and, using streaming farms (networks of bots or paid accounts), are artificially played millions or even billions of times.

This manipulation allows scammers to collect royalties meant for legitimate musicians.

One shocking example emerged in the United States in 2024, when prosecutors filed what they described as the first criminal case involving artificially inflated music streams. A man was charged with wire fraud conspiracy after generating hundreds of thousands of AI-produced songs and programming bots to stream them repeatedly. Authorities estimated he made over $10 million through this scheme.

Deezer’s AI Detection Tools

In response to the threat, Deezer has developed its own AI-powered song detection system. This proprietary technology can scan uploads and detect whether songs were made using AI, even if the uploader doesn’t disclose it.

By identifying AI-generated content early, the platform can:

- Flag it with labels for user transparency.
- Exclude it from editorial playlists and algorithmic recommendations.
- Withhold royalty payments if the content is found to be involved in fraudulent activity.
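The three actions above can be pictured as a simple decision rule. The following is a minimal sketch only: the `Track` and `Handling` types, their fields, and the `decide_handling` function are hypothetical names invented for illustration, and nothing here reflects how Deezer's proprietary system is actually built. Tracks not detected as AI-generated are left untouched because the article does not describe their handling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Track:
    title: str
    ai_generated: bool     # hypothetical output of an AI-detection step
    fraud_suspected: bool  # hypothetical output of a fraud-detection step


@dataclass
class Handling:
    label: Optional[str]  # on-screen tag shown to listeners, if any
    recommendable: bool   # eligible for playlists and recommendations
    pays_royalties: bool  # whether royalties are paid out


def decide_handling(track: Track) -> Handling:
    """Apply the three actions described in the article to one track."""
    if not track.ai_generated:
        # Out of scope for this sketch: no special handling.
        return Handling(label=None, recommendable=True, pays_royalties=True)
    return Handling(
        label="AI-generated content",  # tag text quoted in the article
        recommendable=False,           # excluded from playlists and recommendations
        pays_royalties=not track.fraud_suspected,  # withheld only on fraud
    )


if __name__ == "__main__":
    example = Track("Untitled 0412", ai_generated=True, fraud_suspected=True)
    print(decide_handling(example))
```

Note that in this sketch labeling and exclusion depend only on detection, while withholding royalties additionally requires a fraud finding, which matches the wording of the list.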

Deezer estimates that up to 70% of the plays on AI-generated tracks come from non-human sources such as bots or automated streaming farms.

The Bigger Picture: Industry-Wide Challenge

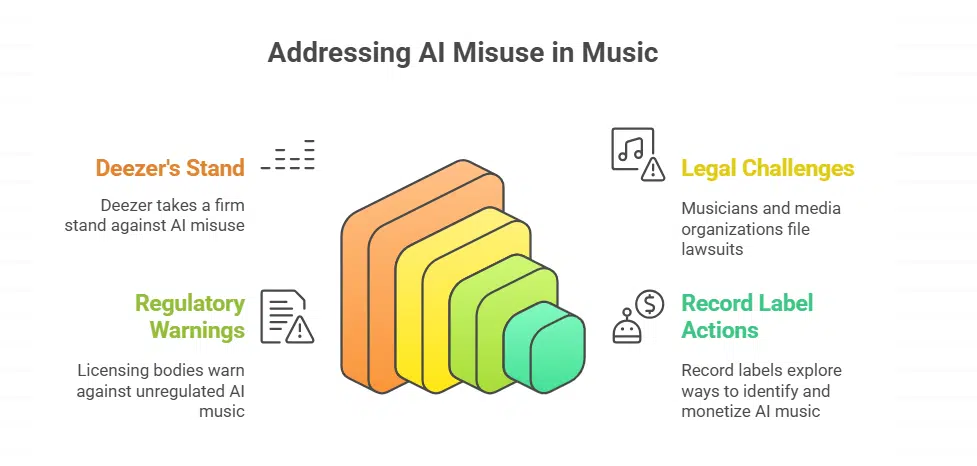

Although Deezer is smaller than giants like Spotify, Apple Music, and Amazon Music, it is emerging as one of the first major services to take a firm stand against AI misuse in music.

The move reflects growing concerns across the entire entertainment industry. With generative AI tools advancing rapidly, companies face immense pressure to balance innovation with protection of intellectual property and revenue streams.

Many major tech firms, including OpenAI and Meta, are also facing lawsuits from musicians, artists, and media organizations over the unauthorized scraping of content from the internet to train their AI models. Critics argue that these tools absorb copyrighted materials—including audio, text, and images—without proper licensing or compensation.

In Germany, performance rights organization GEMA and other European music licensing bodies have warned against unregulated AI music. Meanwhile, major record labels like Universal Music Group are also exploring ways to identify and monetize—or block—AI-generated imitations of real artists’ voices.

Deezer’s Policy: Transparency and Monetization Control

Deezer’s new content labeling isn’t just cosmetic. The company clarified that songs labeled as AI-generated may also be subject to different monetization policies. If a song is found to be part of a bot-driven fraud scheme, Deezer may refuse to pay royalties altogether. These songs can also be removed from search results or hidden from curated playlists to reduce exposure.

This approach helps preserve royalties for real musicians and rights holders, especially in an industry where streaming payouts are already small and competition for them is intense.

Why It Matters for Listeners and Artists

While fully AI-generated music currently accounts for only 0.5% of total streams on Deezer, its disproportionate connection to fraud is alarming. Deezer emphasizes that fraud—not creativity—is the main driver behind most AI music uploads.

This trend affects genuine artists in several ways:

- It dilutes revenue pools, making it harder for real musicians to earn a fair share.
- It clutters playlists and discovery algorithms, reducing visibility for legitimate songs.
- It creates legal and ethical uncertainties, especially around ownership and copyright.
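The first point, revenue dilution, is easiest to see with numbers. The sketch below assumes a simplified pro-rata model in which a fixed royalty pool is split in proportion to stream counts. That model, the `pro_rata_payout` function, and every figure in it are illustrative assumptions, not a description of Deezer's actual payout rules.

```python
def pro_rata_payout(pool: float, streams: dict[str, int]) -> dict[str, float]:
    """Split a fixed royalty pool in proportion to each party's streams."""
    total = sum(streams.values())
    return {name: pool * count / total for name, count in streams.items()}


# Hypothetical numbers: a pool of 1,000 currency units and two real artists.
pool = 1000.0
honest = {"artist_a": 600, "artist_b": 400}

# The same period with 250 bot-driven streams added by a fraudulent uploader.
with_fraud = {**honest, "bot_farm": 250}

before = pro_rata_payout(pool, honest)
after = pro_rata_payout(pool, with_fraud)

for name in honest:
    print(f"{name}: {before[name]:.2f} -> {after[name]:.2f}")
print(f"bot_farm: {after['bot_farm']:.2f}")
```

Because the pool is fixed in this model, every fake stream lowers the value of a genuine one: the real artists' listeners did not change, yet both payouts fall by a fifth.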

By flagging AI-generated songs, Deezer hopes to protect its users and creators from being manipulated by anonymous actors exploiting the system.

Deezer’s decision to label AI-generated songs is a strong, proactive effort to bring transparency and ethical control to music streaming. While artificial intelligence holds promise for creative exploration, its unchecked use risks flooding platforms with content that exists only to harvest royalties. As the music industry adapts to these new tools, Deezer’s model may serve as a blueprint for how platforms can embrace AI responsibly without sacrificing trust, talent, or fairness.