In 2026, “AI at work” is no longer a pilot. It is a daily layer on top of email, docs, meetings, and dashboards. The speed of that shift is colliding with trust, status, and fairness at work, forcing leaders to manage a new question: who gets credit, control, and security in an AI-augmented office?

How We Got Here: From Automation To Co-Authorship

The office has lived through waves of “productivity tech” before. Spreadsheets reshaped finance. Email flattened hierarchies by making everyone reachable. Search made information cheap. Each wave changed output, but it did not deeply challenge identity because it mostly changed how fast people did the same work.

Generative AI is different for one reason: it does not just accelerate tasks. It produces judgment-like outputs (drafts, summaries, analysis, code, slide narratives) that used to signal competence. That makes it feel less like a tool and more like a silent colleague, or even a rival.

By late 2024 and throughout 2025, multiple surveys converged on the same reality: adoption is widespread, and it is increasingly informal. Employees are using AI even when organizations have not fully communicated a strategy, or when governance is unclear. The “AI-augmented office” arrives not as a clean transformation project, but as a messy cultural retrofit.

That is why identity is the sleeper issue of 2026. The firms that treat AI as “just another software rollout” will keep tripping over hidden human variables: pride, fear, moral ownership, and status.

Status, Craft, And The New Definition Of “Good Work”

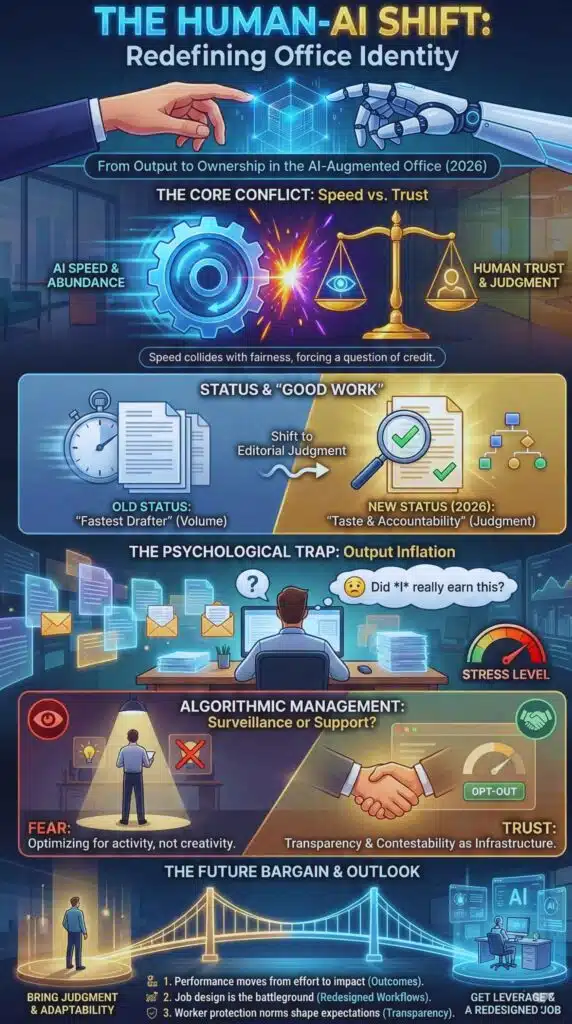

In the classic office ladder, status came from scarce capabilities: writing clearly, analyzing quickly, producing polished deliverables under time pressure, and being the person who “just gets it.” Generative AI makes first drafts abundant. When abundance rises, status shifts to what remains scarce.

What stays scarce is taste and accountability: deciding what matters, what is true, what is ethical, and what will actually work in the real world. In other words, the new prestige is moving from production to editorial judgment.

A useful way to understand the identity shift is to separate work into two layers:

- Output layer: drafts, summaries, slides, code snippets, routine decisions

- Ownership layer: goals, constraints, verification, trade-offs, and accountability

In 2026, employees who can convincingly occupy the ownership layer will feel amplified. Employees stuck in the output layer risk feeling replaceable, even if they are still valuable.

| Old Office Status Signal | What AI Changes | New Status Signal In 2026 | Practical Implication |
| --- | --- | --- | --- |
| “Fastest drafter” | Draft speed is commoditized | “Best editor and verifier” | Reward review quality, not just volume |
| “Slide hero” | Polished slides are easier | “Narrative architect” | Promote people who can frame decisions |
| “Knows everything” | AI can retrieve and summarize | “Knows what to ask” | Train prompt design and problem framing |
| “Always available” | AI increases throughput expectations | “Sets boundaries and priorities” | Normalize scope control and triage |
| “Senior = answers” | AI produces plausible answers | “Senior = standards” | Seniors define guardrails and quality bars |

What it means next: performance systems will quietly rewrite themselves. Teams will need to define what “good” looks like when outputs are easy to generate. If they do not, employees will default to the only visible metric: volume. That usually produces burnout and a culture of noise.

Output Inflation And The Psychology Of Being “Less Real” At Work

The AI-augmented office creates a unique psychological trap: output inflation. When everyone can produce more, the baseline rises, and people feel pressure to keep up. But unlike past productivity tools, generative AI also blurs authorship. That can trigger a specific kind of identity stress: “If AI helped, is this still my work?”

This is not philosophical. It shows up as day-to-day anxiety:

- Reluctance to share how much AI is used, especially in high-status roles

- Fear that using AI signals lower competence, even when it improves outcomes

- Fear that not using AI signals being behind

- Quiet guilt about “shortcutting” craft, even when the shortcut is rational

This is why identity management becomes a leadership task. Employees do not just need tool training. They need permission and clarity about what is acceptable, what must be disclosed, and how credit is assigned.

| Identity Stressor In AI Workflows | What Employees Often Do | What Good Governance Looks Like | What Leaders Should Measure |
| --- | --- | --- | --- |
| “Did I really earn this?” | Hide AI use | Define disclosure norms by risk level | Error rates, rework, client escalations |
| Fear of being judged | Underuse AI even when helpful | Manager messaging that rewards learning | Adoption by role, not just overall |
| Output inflation | Work longer hours | Reset expectations, redesign workflows | Meeting load, cycle time, burnout signals |
| “AI is taking my voice” | Over-edit to sound “human” | Style guides and voice ownership | Satisfaction, brand consistency |
| Skill atrophy worries | Avoid AI for growth tasks | “Training mode” projects without AI | Skill progression and internal mobility |

What it means next: 2026 will be the year organizations split into two cultures. One culture treats AI as a shameful shortcut. The other treats it as a standardized assistant with clear rules. The second culture will recruit better, move faster, and reduce covert risk.

The Rise Of The AI Manager: Surveillance, Scoring, And Trust

AI in the office is not only about copilots writing drafts. It is also about algorithmic management: tools that instruct, monitor, and evaluate work at scale. This is where identity issues become political because monitoring changes the psychological contract.

When workers believe they are being scored continuously, they shift behavior:

- They optimize for measurable activity, not meaningful outcomes

- They take fewer creative risks

- They become less honest about problems, because problems look like failures

In 2026, the tension is sharper because many firms are experimenting with analytics that go beyond productivity, including monitoring communication patterns, sentiment-like signals, and behavioral metadata. Even if the intent is quality or safety, the lived experience can feel like surveillance.

Trust becomes a design problem. The question is not “should monitoring exist” but “what is proportional, transparent, and contestable.”

| Algorithmic Management Use Case | Claimed Benefit | Main Identity Risk | Minimum Safeguard In 2026 |
| --- | --- | --- | --- |
| Task allocation and scheduling | Efficiency | Loss of autonomy | Human override and appeal path |
| Activity and speed tracking | Throughput | “Always on” pressure | Limits on metrics used for discipline |
| Monitoring emails/calls for “tone” | Quality | Chilling effect, self-censorship | Clear scope, opt-outs where possible |
| Automated performance rankings | Fairness | Dehumanization, gaming | Explainability and manager accountability |
| Automated sanctions | Consistency | Moral injury, fear culture | Ban fully automated disciplinary action |

What it means next: the AI-augmented office will reward firms that treat trust as infrastructure. In practical terms, that means transparency, worker consultation, documented limits, and real recourse when the system is wrong.

Rules Are Catching Up: What Regulation Signals About The 2026 Office

Regulators are increasingly focused on employer misuse of AI, not just consumer harms. That matters because it legitimizes a worker-centered lens: consent, proportionality, and fundamental rights in the workplace.

Europe is the clearest example of this direction. The policy signal is simple: some workplace AI uses are considered too intrusive to normalize, especially those that infer emotions or use biometric-like inference in coercive contexts. Even outside Europe, regulators and watchdogs are scrutinizing biometric monitoring and AI in recruitment.

For employers, regulation changes identity management in two ways:

- It forces clearer boundaries on what is allowed

- It changes employee expectations of what is “reasonable” at work

| Regulatory Signal | What It Targets | Why It Matters For Identity | What Compliance Looks Like Operationally |
| --- | --- | --- | --- |
| Limits on emotion inference at work | “Mind-reading” AI | Protects dignity and psychological safety | Ban or tightly restrict these tools |
| Higher scrutiny of biometrics | Attendance and access control | Reduces coerced consent | Alternatives, opt-outs, necessity tests |
| Recruitment tool auditing | Hiring and screening | Prevents opaque gatekeeping | Documentation, bias testing, DPIAs |
| Transparency expectations | AI use disclosure | Makes hidden AI use harder | Policies, training, internal reporting |
| Enforcement risk | Revenue-based fines | Puts AI governance on the board agenda | Vendor due diligence and controls |

What it means next: the regulatory arc strengthens employees’ ability to question AI systems at work. Even where the law is lighter, cultural expectations travel. Multinationals often adopt a “highest standard” approach to reduce operational complexity, which makes stricter regions shape global norms.

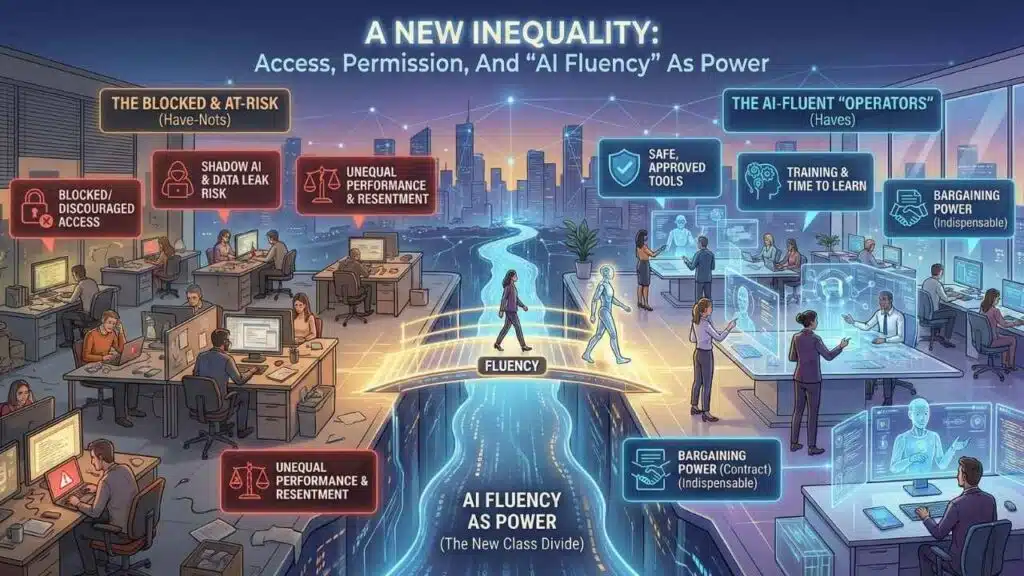

A New Inequality: Access, Permission, And “AI Fluency” As Power

The AI-augmented office creates a new class divide inside companies. It is not only between “technical” and “non-technical” roles. It is between employees who have:

- Access to safe, approved tools

- Training and time to learn them

- Permission to use them without stigma

- Managers who reward experimentation

And those who do not.

In 2026, informal adoption is a red flag. If employees use personal tools because the company tools are slow, restricted, or unclear, risk rises. Sensitive information can leak. Quality becomes inconsistent. And employees who take the most initiative become both the most productive and the most exposed.

AI fluency also changes bargaining power. Employees who can translate messy business problems into AI-assisted workflows become indispensable “operators” even without formal authority.

| AI Maturity Level Inside A Team | What It Looks Like | Who Benefits Most | Hidden Risk |
| --- | --- | --- | --- |
| No access | AI is blocked or discouraged | Few | Shadow AI usage grows |
| Limited pilots | Only select teams can use it | Managers and early adopters | Resentment and unequal performance |
| “Bring your own AI” | Personal tools fill gaps | Power users | Data leakage and policy drift |
| Standardized copilots | Approved tools, baseline training | Broad workforce | Output inflation if goals not reset |
| Workflow redesign | AI changes processes, not just tasks | Whole org | Over-automation without accountability |

What it means next: fairness in 2026 is not only pay equity. It is capability equity. The organizations that treat AI training as a universal benefit, not a perk, will reduce internal inequality and improve retention.

Accountability In Shared Authorship: Who Owns The Work Now?

Identity and accountability are inseparable. Employees will not feel secure using AI until the organization answers basic questions:

- If AI makes a mistake, who is responsible?

- If AI helps create value, who gets credit?

- When must AI assistance be disclosed?

- What kinds of work require human verification?

The 2026 standard will likely resemble aviation-style thinking: you can use automation, but you must know when it fails, and you must have a defined human-in-the-loop standard for high-risk decisions.

This is not only legal. It is cultural. If people fear blame for AI errors they did not understand, they will avoid the tools or hide their use. If people can claim credit for AI output without ownership, trust collapses.

| Work Type | AI Can Help With | Minimum Human Responsibility | Best Practice Quality Check |
| --- | --- | --- | --- |
| Internal summaries and notes | Speed and recall | Confirm key decisions and owners | Random audits and feedback loops |
| Client-facing writing | Drafting and tone options | Final voice and factual accuracy | Verification checklist and versioning |
| Financial or legal content | Drafting and analysis | Compliance and approvals | Mandatory review and documentation |
| Hiring and performance decisions | Pattern detection | Fairness and contestability | Bias testing, audit logs, appeals |
| Strategy and planning | Scenarios and options | Final judgment | Pre-mortems and assumptions review |

What it means next: “AI accountability” will become a core competency like cybersecurity hygiene. Organizations will need simple rules employees can remember, not legal documents nobody reads.
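For teams that want those rules to live somewhere other than a policy PDF, one option is to store the accountability table above as plain data that internal tools can look up. The sketch below is illustrative only: the `Rule` class, the `RULES` keys, and the `rule_for` helper are hypothetical names, and the row contents are copied from the table rather than drawn from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One row of the accountability table (hypothetical structure)."""
    human_responsibility: str
    quality_check: str


# Rows copied from the table above; the dictionary keys are made-up identifiers.
RULES = {
    "internal_summary": Rule("Confirm key decisions and owners",
                             "Random audits and feedback loops"),
    "client_writing": Rule("Final voice and factual accuracy",
                           "Verification checklist and versioning"),
    "financial_legal": Rule("Compliance and approvals",
                            "Mandatory review and documentation"),
    "hiring_performance": Rule("Fairness and contestability",
                               "Bias testing, audit logs, appeals"),
    "strategy_planning": Rule("Final judgment",
                              "Pre-mortems and assumptions review"),
}


def rule_for(work_type: str) -> Rule:
    """Return the rule for a work type.

    Unknown work types raise an error instead of silently
    defaulting to "no review required".
    """
    try:
        return RULES[work_type]
    except KeyError:
        raise ValueError(
            f"No AI accountability rule defined for {work_type!r}"
        ) from None


if __name__ == "__main__":
    rule = rule_for("client_writing")
    print(rule.human_responsibility)
    print(rule.quality_check)
```

The design choice worth noting is the failure mode: a work type nobody has classified yet is treated as an open question for a human, not as permission to skip verification.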

Future Outlook: The Identity Bargain Employers Must Offer In 2026

The AI-augmented office is pushing toward a new bargain between employers and employees.

- The old bargain was: bring your skill, your time, your loyalty, and you get stability, growth, and recognition.

- The emerging bargain is: bring your judgment, your adaptability, and your willingness to collaborate with AI, and you get leverage, learning, and a redesigned job that keeps the human parts meaningful.

Three forward-looking implications stand out.

First, performance will move from effort to impact. AI makes effort harder to see and less correlated with output. That forces a shift toward outcomes, but only if organizations define outcomes well. Otherwise, they will fall back to proxy metrics and surveillance.

Second, job design becomes the real battleground. The biggest productivity gains will come from redesigning workflows, not from asking employees to “use AI more.” That redesign will also determine identity outcomes. If employees only get the leftovers after automation, morale drops. If employees get more ownership, growth follows.

Third, expect a new wave of worker protection norms. Even outside strict regulatory regions, cases involving biometrics, recruitment tools, and intrusive monitoring are shaping expectations. Employees are increasingly likely to demand transparency and boundaries as part of workplace trust.

What To Watch Next

- Policy milestones: expanding guidance on prohibited or high-risk workplace AI uses, and more enforcement actions around biometrics and recruitment tools

- Management redesign: more “AI enablement” roles inside HR and operations, not just IT

- A new skills baseline: organizations formalizing AI literacy as core training, aligned to the reality that a large share of core skills are expected to change by 2030

- A cultural split: firms that normalize transparent AI use will outcompete firms where AI is covert and shame-driven

In 2026, the winners will not be the companies with the flashiest model. They will be the companies that solve the human problem: identity, trust, fairness, and accountability in a workplace where intelligence is suddenly cheap, but responsibility is not.