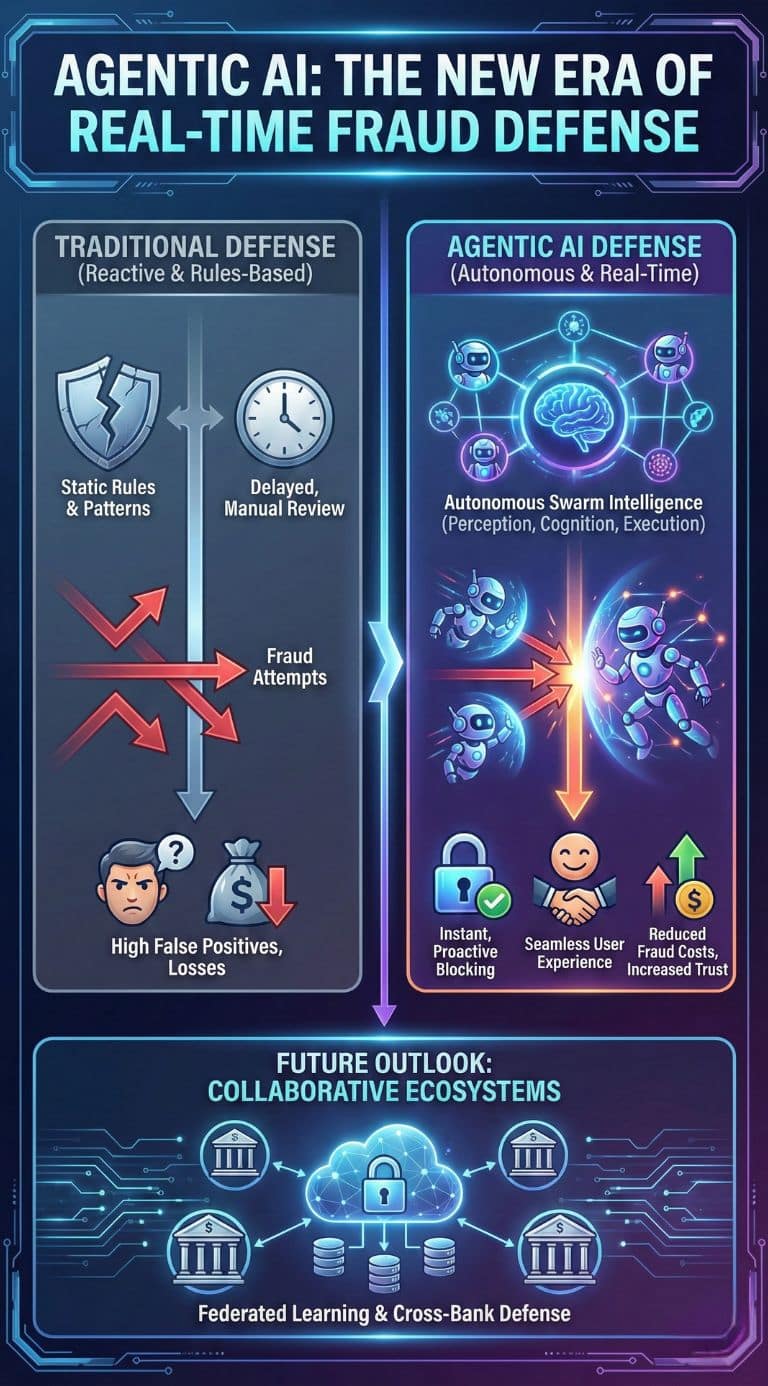

The banking sector faces a pivotal transition as agentic AI shifts fraud prevention from reactive detection to autonomous, real-time neutralization. This evolution isn’t just a technical upgrade; it’s a strategic necessity to counter “hyper-personalized” cyberattacks and synthetic identity fraud, redefining the boundary between human oversight and machine autonomy in 2026.

The evolution of financial security has reached a critical juncture. For decades, the industry operated on a “catch-up” basis, relying on static, rule-based systems that used simple “if-then” logic. These legacy frameworks were notorious for high false-positive rates, often frustrating legitimate customers while failing to stop sophisticated criminals who could easily bypass rigid thresholds. The mid-2010s saw the rise of Predictive AI and Machine Learning, which allowed banks to identify patterns in historical data and move toward probabilistic scoring. However, even these advanced models remained essentially passive; they could flag a suspicion, but they required human intervention or pre-set lockdowns to take action.

Entering 2026, the narrative has fundamentally shifted toward Agentic AI. Unlike its predecessors, Agentic AI does not just predict; it plans, reasons, and executes. It operates with a level of “agency” that allows it to gather context from disparate systems—such as geolocation, device biometrics, and historical spending—and autonomously decide whether to approve, challenge, or block a transaction in milliseconds. This transition marks the “New Frontier,” where the speed of the attacker is finally matched by the speed of the defender through autonomous, goal-oriented digital workforces.

The Autonomy Leap: Moving Beyond Predictive Analytics

The core differentiator of Agentic AI in 2026 is its ability to handle multi-step workflows without constant human prompts. Traditional AI might flag a suspicious $5,000 transfer, but an Agentic system takes the initiative to investigate. It may simultaneously query the customer’s mobile GPS, check if their device has recently downloaded a remote-access tool, and initiate a “soft-check” via a secure push notification.
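
The investigation steps described above can be sketched as a small decision routine. This is only an illustration: the `Signals` fields, the `investigate` function, the $5,000 review threshold, and the specific escalation rules are all assumptions made for the example, not a description of any bank's production logic.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    CHALLENGE = "challenge"
    BLOCK = "block"


@dataclass
class Signals:
    """Context gathered for one transfer (all field names are hypothetical)."""
    amount_usd: float
    gps_matches_home_region: bool
    remote_access_tool_installed: bool
    push_confirmed: Optional[bool]  # None = soft-check not sent or unanswered


def investigate(s: Signals, review_threshold_usd: float = 5_000.0) -> Decision:
    """Combine the gathered signals into approve / challenge / block."""
    flag_count = int(not s.gps_matches_home_region) + int(s.remote_access_tool_installed)

    # Small transfers with no risk flags pass without further checks.
    if s.amount_usd < review_threshold_usd and flag_count == 0:
        return Decision.APPROVE
    # An explicit denial of the push notification is treated as decisive.
    if s.push_confirmed is False:
        return Decision.BLOCK
    # Both risk flags together are treated as too risky to continue.
    if flag_count == 2:
        return Decision.BLOCK
    # A confirmed soft-check with no flags clears the transfer.
    if s.push_confirmed is True and flag_count == 0:
        return Decision.APPROVE
    # Anything else (one flag, or no answer yet) gets a step-up challenge.
    return Decision.CHALLENGE


print(investigate(Signals(5_000.0, True, True, True)))
```

Note that a confirmed push notification does not clear a transfer when a remote-access tool is present, since in this sketch the device itself is assumed to be possibly compromised.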

This shift is driven by three architectural layers: Perception (ingesting real-time streams), Cognition (reasoning through Large Language Models), and Execution (interacting with core banking systems). Leading institutions are no longer just deploying algorithms; they are deploying “digital employees” capable of making independent decisions within set boundaries. This next-generation capability is what separates agentic systems from traditional generative models that only produce text or summaries.

| Feature | Traditional Rules (Pre-2015) | Predictive AI (2015-2024) | Agentic AI (2026) |
| --- | --- | --- | --- |
| Logic Core | Static If/Then rules | Pattern matching & statistics | Goal-oriented reasoning |
| Reaction Speed | Seconds to Minutes | Milliseconds (Flag only) | Milliseconds (Action taken) |
| User Impact | High false positives | Moderate friction | Hyper-personalized/Low friction |
| Independence | Zero | Low (Requires human review) | High (Autonomous workflows) |
| Adaptability | Manual updates required | Retraining on old data | Real-time learning from events |

The “AI vs. AI” Arms Race: Defending Against Generative Crime

The urgency behind adopting Agentic AI in banking stems from the weaponization of the same technology by criminal syndicates. In 2026, the primary threat is no longer simple phishing, but “hyper-realistic” deepfakes and Synthetic Identity Fraud. Criminals use Agentic AI to create “Frankenstein” identities, blending real, stolen, and AI-generated data into digital personas that can build credit and operate for months before exfiltrating funds.

Banks are responding by deploying specialized “Defense Squads” of agents. For instance, a Critic Agent might be tasked specifically with identifying inconsistencies in a video call used for account opening, while a Validation Agent cross-references the metadata of submitted documents against global databases in real time. This “fire with fire” strategy is the only viable path, because the volume of AI-generated fraud attempts has reached a scale that manual processes cannot manage. According to 2026 data, 86% of financial executives now recognize agentic AI as both a primary defense and a significant new risk vector.

| Threat Type | How Fraudsters Use AI | How Agentic AI Defends |
| --- | --- | --- |
| Synthetic Identity | Creating fake personas with history | Deep behavioral & metadata cross-linking |
| Deepfakes | Mimicking customer voice/face | Real-time biometric liveness detection |
| Social Engineering | Autonomous AI-driven “scam bots” | Detecting “victim duress” in typing/swiping |
| Velocity Attacks | Mass small-value siphoning | Global “swarm” monitoring across accounts |
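
To make the velocity-attack row more concrete, here is a minimal sketch of cross-account monitoring using a sliding time window. The `Transfer` record, the `find_velocity_targets` function, and every threshold (10-minute window, $50 ceiling, three distinct sources) are hypothetical values chosen for illustration; a real system would tune them and use far richer signals.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Set, Tuple


@dataclass(frozen=True)
class Transfer:
    timestamp: float      # seconds since some epoch
    source: str           # paying account
    destination: str      # receiving account
    amount_usd: float


def find_velocity_targets(
    transfers: Iterable[Transfer],
    window_seconds: float = 600.0,
    small_value_usd: float = 50.0,
    min_distinct_sources: int = 3,
) -> Set[str]:
    """Flag destinations that receive small transfers from many accounts in a short window."""
    recent: Dict[str, Deque[Tuple[float, str]]] = defaultdict(deque)
    flagged: Set[str] = set()

    for t in sorted(transfers, key=lambda tr: tr.timestamp):
        if t.amount_usd > small_value_usd:
            continue  # only small-value siphoning is in scope here
        window = recent[t.destination]
        window.append((t.timestamp, t.source))
        # Drop entries that have fallen out of the time window.
        while window and t.timestamp - window[0][0] > window_seconds:
            window.popleft()
        distinct_sources = {source for _, source in window}
        if len(distinct_sources) >= min_distinct_sources:
            flagged.add(t.destination)
    return flagged


sample = [
    Transfer(0.0, "acct-a", "mule-1", 20.0),
    Transfer(60.0, "acct-b", "mule-1", 25.0),
    Transfer(120.0, "acct-c", "mule-1", 15.0),
    Transfer(0.0, "acct-a", "shop", 30.0),
    Transfer(1000.0, "acct-b", "shop", 30.0),
    Transfer(2000.0, "acct-c", "shop", 30.0),
]
print(find_velocity_targets(sample))
```

The point of looking across accounts is that each individual transfer is unremarkable; only the pattern at the shared destination stands out.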

Swarm Intelligence: Multi-Agent Orchestration in 2026

In leading financial institutions, fraud prevention is no longer a single software package; it is a decentralized ecosystem of collaborating agents known as a “Swarm.” This orchestration allows for granularity that was previously impossible. One agent focuses on Retrieval-Augmented Generation (RAG) to pull a customer’s entire history, another focuses on Behavioral Biometrics, and a third acts as an Orchestrator to delegate tasks and compile a final risk assessment.

This multi-agent approach eliminates the “silo” problem where different departments (AML, Credit, Fraud) held fragmented views of the same customer. By 2026, these agents communicate via standardized protocols, allowing them to share intelligence across the bank’s infrastructure instantly. Investigations that once took a human analyst two hours of data gathering now finish in less than five minutes, with the AI agent providing a full audit trail of its reasoning.

| Agent Role | Primary Responsibility | Key Metric Tracked |
| --- | --- | --- |
| RAG Agent | Contextual data retrieval | Data accuracy & recall |
| Behavioral Agent | Swiping/Typing/Pressure analysis | Anomaly score vs. baseline |
| Research Agent | External OSINT & Watchlist checks | Entity resolution speed |
| Orchestrator | Planning the investigation steps | End-to-end latency |
| Critic Agent | Identifying bias or errors | False positive reduction rate |
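
The orchestration pattern in this section can be sketched as an orchestrator that calls each specialist agent, combines their scores, and records an audit trail of its reasoning. Everything here is an assumption for illustration: the stub agents, the case fields, the weights, and the weighted-average combination rule are hypothetical and stand in for whatever models and protocols a real deployment would use.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Each agent takes a case record and returns (risk score in [0, 1], short note).
AgentFn = Callable[[dict], Tuple[float, str]]


@dataclass
class Assessment:
    risk_score: float
    audit_trail: List[str] = field(default_factory=list)


def rag_agent(case: dict) -> Tuple[float, str]:
    # Hypothetical: history lookup reduced to a prior-chargeback count.
    chargebacks = case.get("prior_chargebacks", 0)
    return min(1.0, chargebacks / 4), f"{chargebacks} prior chargebacks"


def behavioral_agent(case: dict) -> Tuple[float, str]:
    # Hypothetical: anomaly score already computed against the user's baseline.
    score = case.get("typing_anomaly", 0.0)
    return score, "typing anomaly vs. baseline"


def research_agent(case: dict) -> Tuple[float, str]:
    # Hypothetical: watchlist lookup reduced to a boolean flag.
    hit = case.get("on_watchlist", False)
    return (1.0 if hit else 0.0), ("watchlist hit" if hit else "no watchlist hit")


def orchestrate(case: dict, agents: Dict[str, AgentFn], weights: Dict[str, float]) -> Assessment:
    """Delegate to each agent, then compile a weighted risk score with an audit trail."""
    trail: List[str] = []
    weighted_sum = 0.0
    total_weight = 0.0
    for name, agent in agents.items():
        score, note = agent(case)
        weight = weights.get(name, 1.0)
        weighted_sum += weight * score
        total_weight += weight
        trail.append(f"{name}: score={score:.2f} weight={weight:.1f} ({note})")
    risk = weighted_sum / total_weight if total_weight else 0.0
    trail.append(f"orchestrator: combined risk={risk:.2f}")
    return Assessment(risk, trail)


agents = {"rag": rag_agent, "behavioral": behavioral_agent, "research": research_agent}
case = {"prior_chargebacks": 2, "typing_anomaly": 0.8, "on_watchlist": False}
result = orchestrate(case, agents, weights={"behavioral": 2.0})
print("\n".join(result.audit_trail))
```

Keeping the trail as plain strings is a simplification; the idea is only that every agent's contribution to the final score is recorded where a reviewer can read it.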

Economic Shifts: ROI and the Productivity Frontier

The financial stakes of this transition are staggering. According to 2026 market projections, global market spend on Agentic AI has reached an estimated $50 billion. The return on investment (ROI) is no longer theoretical; banks are seeing an average 2.3x return within 13 months of deployment. By automating the “low-level” fraud alerts that previously required thousands of human hours, institutions are reallocating their human capital to complex, high-value investigations.

The efficiency gains are particularly visible in the cost per investigation. In 2024, a manual Anti-Money Laundering (AML) investigation cost a bank roughly $45. By 2026, the use of agentic “digital factories” has dropped that cost to less than $0.20 per alert. Furthermore, the top 5% of banks globally report that Agentic AI has freed up nearly 30% of their total workforce capacity, allowing teams to focus on dismantling organized crime syndicates rather than clearing false alarms.

| Performance Metric | 2024 (Manual/Predictive) | 2026 (Agentic Era) | Improvement |
| --- | --- | --- | --- |
| Investigation Time | 2 – 4 Hours | < 2 Minutes | ~98% Reduction |
| Cost Per Fraud Alert | $45.00 | $0.15 | ~99% Reduction |
| False Positive Rate | 12.5% | < 1.8% | 85% Improvement |
| Revenue Saved | Baseline | +22% Fraud detection | N/A |
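
The improvement column can be checked with simple arithmetic. The short script below recomputes the percentage reductions from the table's own before and after values; the helper name `percent_reduction` is just for this example. Because the table gives the 2026 investigation time and false positive rate as upper bounds (“<”), the computed figures for those rows are minimum reductions.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage."""
    return (before - after) / before * 100.0


# Investigation time: 2 to 4 hours (120 to 240 minutes) down to under 2 minutes.
time_low = percent_reduction(120.0, 2.0)
time_high = percent_reduction(240.0, 2.0)

# Cost per fraud alert: $45.00 down to $0.15.
cost = percent_reduction(45.00, 0.15)

# False positive rate: 12.5% down to under 1.8%.
false_positives = percent_reduction(12.5, 1.8)

print(f"Investigation time: {time_low:.1f}% to {time_high:.1f}%")  # 98.3% to 99.2%
print(f"Cost per alert:     {cost:.1f}%")                          # 99.7%
print(f"False positives:    {false_positives:.1f}%")               # 85.6%
```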

Governance, Ethics, and the “TRUST” Framework

As agents gain more autonomy, the risk of “algorithmic runaway” increases. If a bank’s agents are given the goal of “minimizing fraud” without proper guardrails, they might theoretically decide to block all transactions from a specific demographic or region, leading to financial exclusion. To counter this, the industry has adopted the TRUST (Transparent, Robust, Unbiased, Secure, and Tested) framework.

In 2026, regulators in the EU (under the AI Act) and the UK (the FCA) require “Agentic Transparency.” Every autonomous decision must leave a “reasoning trail” that human auditors can inspect. This has led to the rise of “Auditor Agents,” AI systems whose only job is to watch other agents for signs of bias or logic failures. This Human-in-the-Loop 2.0 model ensures that while the machine executes the task, the human remains the ultimate architect of the strategy and ethics.

| Framework Pillar | Implementation Method | Objective |
| --- | --- | --- |
| Transparent | Explainable AI (XAI) logs | Defending against “Black Box” decisions |
| Robust | Adversarial “Red Team” testing | Preventing AI-on-AI manipulation |
| Unbiased | Demographic parity checks | Ensuring fair lending & access |
| Secure | Quantum-resistant encryption | Protecting the agents’ memory |
| Tested | Real-time regulatory sandboxes | Proving safety before deployment |
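
As an illustration of the “Unbiased” pillar, a demographic parity check can be as simple as comparing block rates across groups. The sketch below assumes decisions are available as `(group, was_blocked)` pairs and uses a hypothetical 5-percentage-point tolerance; the function names and the threshold are illustrative, and real fairness audits typically look at several metrics, not just this one.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def block_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of transactions blocked, per group."""
    totals: Dict[str, int] = defaultdict(int)
    blocked: Dict[str, int] = defaultdict(int)
    for group, was_blocked in decisions:
        totals[group] += 1
        blocked[group] += int(was_blocked)
    return {group: blocked[group] / totals[group] for group in totals}


def parity_gap(decisions: Iterable[Tuple[str, bool]]) -> float:
    """Largest difference in block rate between any two groups."""
    rates = block_rates(decisions)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


def violates_parity(decisions: Iterable[Tuple[str, bool]], max_gap: float = 0.05) -> bool:
    """True when the block-rate gap exceeds the (hypothetical) tolerance."""
    return parity_gap(decisions) > max_gap


# Group A: 1 of 10 blocked. Group B: 4 of 10 blocked.
log = (
    [("A", True)] * 1 + [("A", False)] * 9
    + [("B", True)] * 4 + [("B", False)] * 6
)
print(block_rates(log), violates_parity(log))
```

A check like this is the kind of test an “Auditor Agent” could run continuously against the decisions of other agents, escalating to a human when the gap exceeds tolerance.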

Expert Perspectives: A Divided Outlook on Autonomy

The industry is currently divided into “Frontier Firms” and “Conservative Incumbents.” Analysts from Gartner predict that by the end of 2026, 40% of all enterprise applications will include task-specific AI agents. Frontier Firms argue that the productivity gains (estimated at $3 trillion globally) make the transition inevitable. They point to case studies like JPMorgan Chase and Bradesco, where agents handle everything from payment validation to customer concierge services, as evidence of the “Autonomous Enterprise.”

Conversely, skeptics warn of Systemic Algorithmic Risk. If most major banks use similar agentic models trained on similar datasets, a single “poisoned” data point could trigger a synchronized failure across the global financial network. There is also the “Innovation Gap”: smaller banks and credit unions often lack the infrastructure to build these systems, potentially leading to a massive consolidation where only the tech-richest institutions can survive the modern fraud landscape.

Future Outlook: The Rise of Collaborative Agentic Networks

As we look toward 2027 and beyond, the “Frontier” will move toward Cross-Bank Agent Collaboration. Currently, agents operate within the silos of individual institutions. The next major milestone is the development of secure, privacy-preserving networks (utilizing Federated Learning) where a “Defense Agent” at Bank A can instantly share a fraud signature with Bank B without compromising customer privacy.
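
The core idea of Federated Learning mentioned above is that banks exchange model parameters rather than customer records. A minimal sketch of the standard federated averaging step follows; the `federated_average` function, the two-parameter model, and the sample counts are assumptions for illustration, and a production network would add protections such as secure aggregation that are not shown here.

```python
from typing import List, Sequence, Tuple


def federated_average(updates: Sequence[Tuple[Sequence[float], int]]) -> List[float]:
    """Average local model weights, weighted by each bank's number of training samples.

    `updates` holds one (weights, n_samples) pair per participating bank.
    Only these weights leave each bank; raw transactions never do.
    """
    if not updates:
        raise ValueError("at least one update is required")
    total_samples = sum(n for _, n in updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, n_samples in updates:
        if len(weights) != dim:
            raise ValueError("all weight vectors must have the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n_samples / total_samples
    return averaged


# Hypothetical local fraud-model weights from two banks.
bank_a = ([1.0, 0.0], 100)
bank_b = ([0.0, 1.0], 300)
print(federated_average([bank_a, bank_b]))  # [0.25, 0.75]
```

Bank B contributes three times as many samples, so its weights count three times as much in the shared model.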

Furthermore, we are witnessing the rise of Personal Defense Agents (or “Agentic Twins”) for the consumer. In the near future, individuals will have their own sovereign AI agents on their smartphones that negotiate with the bank’s agents. If a bank agent asks for verification, the user’s personal agent will verify the bank’s identity first, creating a double-sided autonomous security layer that could finally end the era of social engineering.
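
One way a personal agent could verify the bank's identity first is a challenge-response exchange. The sketch below uses an HMAC over a random challenge with a key assumed to have been shared securely at enrollment. That shared-key setup and the function names are assumptions made to keep the example within Python's standard library; a real deployment would more likely rely on public-key signatures and certificates.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Personal agent: generate a fresh random challenge for the bank to answer."""
    return secrets.token_bytes(32)


def bank_respond(shared_key: bytes, challenge: bytes) -> bytes:
    """Bank agent: prove knowledge of the enrollment key by MACing the challenge."""
    return hmac.new(shared_key, challenge, hashlib.sha256).digest()


def personal_agent_verify(shared_key: bytes, challenge: bytes, response: bytes) -> bool:
    """Personal agent: accept the bank only if the response matches the expected MAC."""
    expected = hmac.new(shared_key, challenge, hashlib.sha256).digest()
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, response)


enrollment_key = secrets.token_bytes(32)   # hypothetical key set up at enrollment
challenge = new_challenge()

genuine = bank_respond(enrollment_key, challenge)
impostor = bank_respond(secrets.token_bytes(32), challenge)

print(personal_agent_verify(enrollment_key, challenge, genuine))   # True
print(personal_agent_verify(enrollment_key, challenge, impostor))  # False
```

Because the challenge is fresh for every request, a scammer who recorded an earlier exchange cannot replay it to impersonate the bank.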

Key Milestones to Watch (2026-2027):

- Agent-to-Agent (A2A) Protocols: Standardizing how different banks’ agents talk to one another to stop cross-border “velocity attacks.”

- Quantum Transition: The integration of post-quantum cryptography into agent memory to prevent “harvest now, decrypt later” attacks.

- Regulatory Harmonization: The shift from prescriptive local rules to global standards for autonomous AI accountability.