The rumored integration of an “Anxiety Algorithm” into the upcoming Apple Watch X would mark a shift from physical fitness tracking to proactive mental health surveillance. Combined with the Apple-Google Gemini partnership announced in January 2026, the convergence of biometric data and generative AI raises urgent questions about user privacy, data sovereignty, and the medicalization of human emotion.

Key Takeaways

- The Shift: Apple is moving from logging health data (reactive) to predicting emotional states (proactive).

- The Engine: The newly announced collaboration with Google Gemini provides the LLM (Large Language Model) reasoning power needed to interpret complex physiological stress signals.

- The Risk: Privacy experts warn of a “data sovereignty crisis” where mental health metrics could be weaponized by insurers or advertisers in a regulatory gray zone.

- The Psychology: There is a growing fear of the “Nocebo Effect”—where the device creates anxiety simply by measuring it.

From Pedometer to Psychiatrist: How We Got Here

To understand the magnitude of the “Anxiety Algorithm,” one must view it not as a standalone feature but as the culmination of a decade-long strategy. The Apple Watch has moved steadily from a notification companion to a platform for FDA-cleared features such as the ECG app, which is regulated as a Class II medical device.

- 2015 (Original Apple Watch): The “Quantified Self” era begins. Focus is on steps, calories, and closing rings.

- 2018 (Series 4): The pivot to medical diagnostics with FDA-cleared ECG.

- 2020 (Series 6): Blood Oxygen monitoring during the pandemic era.

- 2025 (Series 11): The introduction of hypertension notifications and Sleep Score, following sleep apnea notifications on the Series 10 in 2024.

- 2026 (The “Watch X” Era): The leap from physical to mental.

In 2025, reports surfaced of “Project Mulberry,” an internal Apple initiative designed to turn the Health app into an AI-powered “Health Coach.” By January 2026, with the integration of Google’s Gemini models into the Apple ecosystem, the AI capability finally caught up to the ambition. If the rumors hold, the Apple Watch X will no longer just count heartbeats; it will attempt to interpret why the heart is racing.

1. The Mechanism: How the “Anxiety Algorithm” Works

The “Anxiety Algorithm” is not a single sensor; it is a synthesis engine. Unlike a heart attack, which is a singular physiological event, anxiety is a complex constellation of markers. The Watch X is rumored to utilize a “sensor fusion” approach, combining established metrics with new AI reasoning.

The “Stress Stack”

The algorithm likely relies on three primary data streams:

- Heart Rate Variability (HRV): A well-established proxy for the autonomic nervous system. Low HRV often correlates with high stress or fight-or-flight responses.

- Electrodermal Activity (EDA): The Watch X is rumored to track micro-fluctuations in skin conductance (sweat) that occur during emotional arousal, a sensing approach already used in some competing wearables.

- Contextual Metadata: This is the game-changer. By leveraging the Gemini-powered “Apple Foundation Models,” the watch can cross-reference physiological spikes with your calendar (e.g., “Meeting with CEO”), location (e.g., “Hospital”), and sleep history.
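How the three streams might be combined can be illustrated with a minimal sketch. Nothing here reflects Apple's actual method: the function names, the weights, the baselines, and the `Context` flags are all assumptions made up for illustration. The only standard piece is RMSSD, a widely used HRV measure computed from the intervals between successive heartbeats.

```python
from dataclasses import dataclass
from math import sqrt


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between beat intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    if not diffs:
        raise ValueError("need at least two RR intervals")
    return sqrt(sum(d * d for d in diffs) / len(diffs))


def clamp01(x):
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


@dataclass
class Context:
    # Hypothetical flags; a real system would have to derive these
    # from calendar, location, and sleep data.
    high_stakes_event: bool = False
    short_sleep: bool = False


def stress_score(rr_intervals_ms, eda_microsiemens,
                 baseline_rmssd, baseline_eda, context):
    """Return an illustrative 0-100 score. All weights are assumptions."""
    # HRV term: 0 when RMSSD is at or above the personal baseline,
    # approaching 1 as variability is suppressed.
    hrv_term = clamp01(1.0 - rmssd(rr_intervals_ms) / baseline_rmssd)

    # EDA term: relative rise of mean skin conductance over baseline,
    # capped at a 100% rise.
    mean_eda = sum(eda_microsiemens) / len(eda_microsiemens)
    eda_term = clamp01(mean_eda / baseline_eda - 1.0)

    # Context term: each flag contributes half of the term.
    ctx_term = 0.5 * context.high_stakes_event + 0.5 * context.short_sleep

    return round(100 * (0.45 * hrv_term + 0.35 * eda_term + 0.20 * ctx_term))


if __name__ == "__main__":
    score = stress_score(
        rr_intervals_ms=[800, 810, 790, 805, 795],
        eda_microsiemens=[6.0, 6.0],
        baseline_rmssd=40.0,
        baseline_eda=4.0,
        context=Context(high_stakes_event=True),
    )
    print(score)
```

Even in this toy form, the privacy point is visible: the context term only works if the scoring code can read calendar, location, and sleep data alongside the biometric signals.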

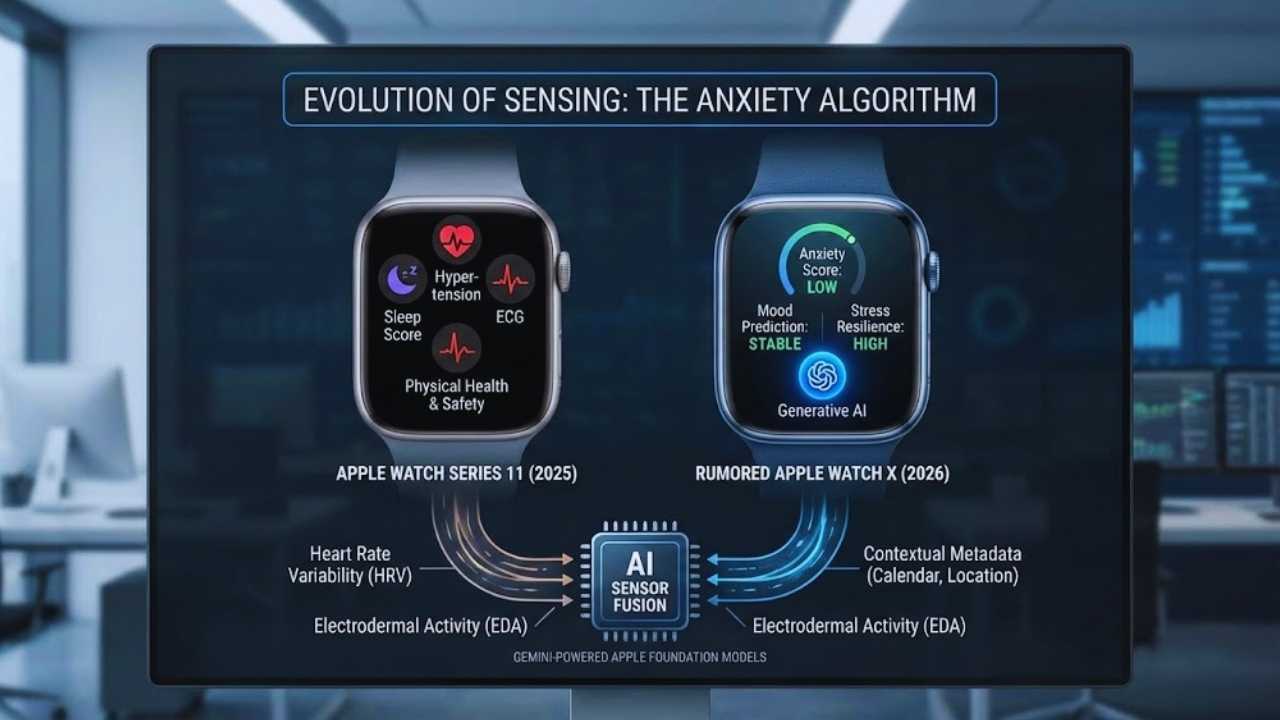

The Evolution of Sensing (Series 11 vs. Rumored Watch X)

| Feature | Apple Watch Series 11 (2025) | Rumored Apple Watch X (2026) |
| --- | --- | --- |
| Primary Focus | Physical Health & Safety | Mental Health & Predictive AI |
| Key Metrics | Hypertension, Sleep Score, ECG | “Anxiety Score,” Mood Prediction, Stress Resilience |
| Data Processing | On-device algorithms (Standard) | Generative AI (Gemini-powered Contextual Awareness) |
| User Action | User initiates check (e.g., takes ECG) | Device nudges user (Pre-emptive “Breathe” alerts) |
| Sensors | Optical Heart, Temp, Accelerometer | + Advanced EDA (Continuous), Cortisol Proxy Estimation |

2. The Privacy Nightmare: Who Owns Your Panic?

The most critical concern surrounding this technology is the nature of the data being collected. Physical health data is sensitive; mental health data is existential.

The HIPAA Gap

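While Apple frames itself as a privacy fortress, consumer wearables exist in a regulatory gap. In the US, HIPAA covers data held by healthcare providers, insurers, and their business associates. Data collected by a consumer watch generally falls outside it and is governed mainly by the vendor's terms of service and privacy policy.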

- Inference vs. Raw Data: Apple may encrypt the raw heart rate data, but the inference (the “Anxiety Score”) is a derived metric, and it is unclear whether protections written for raw readings extend to it.

- The Gemini Variable: The partnership with Google complicates the “what happens on iPhone, stays on iPhone” mantra. While Apple insists on “Private Cloud Compute,” the necessity of using Gemini for complex reasoning means some data processing may leave the device.

Privacy Risk Assessment Matrix

| Risk Category | Description | Potential Consequence | Severity |
| --- | --- | --- | --- |
| Data Inference | AI infers mental state without explicit user input. | Targeted advertising during moments of vulnerability (e.g., “Comfort Food” ads). | High |
| Third-Party Leak | Health data shared with “Partner Apps.” | “Anxiety history” potentially used in credit scoring or employment vetting. | Critical |
| Legal Discovery | Subpoenas for device data in custody battles or criminal cases. | Emotional state used as evidence of instability in legal proceedings. | Medium |
| Cloud Processing | Complex AI queries sent to Private Cloud. | Potential (though low) risk of interception or de-anonymization. | Low |

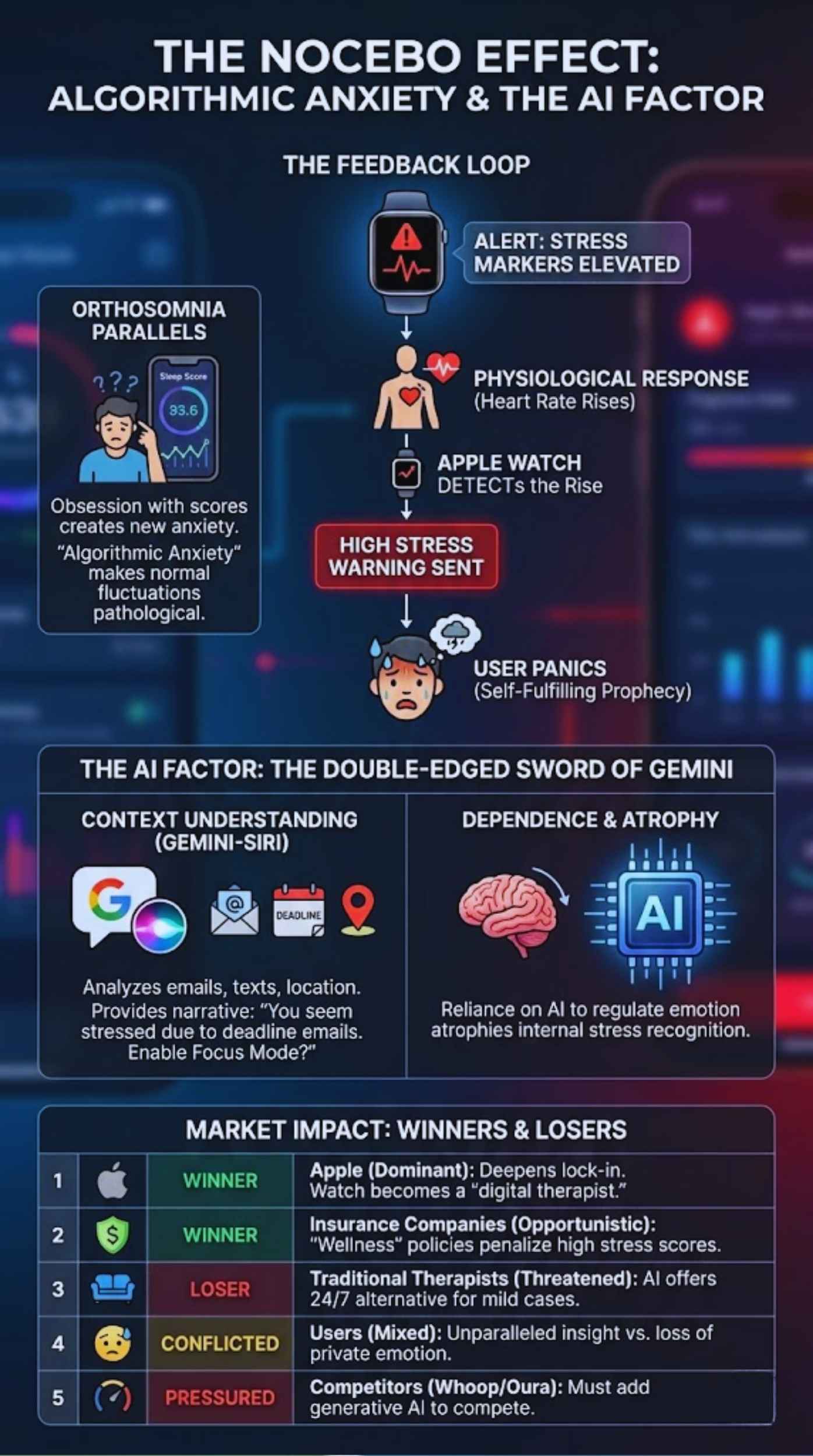

3. The “Nocebo” Effect: Creating Anxiety by Measuring It

There is a profound psychological risk known as the nocebo effect (the opposite of placebo). If a user receives a notification saying, “Your stress markers are elevated,” the notification itself can induce anxiety, creating a self-fulfilling prophecy.

- The Feedback Loop: A user sees the alert -> Heart rate rises -> Watch detects rise -> Watch sends “High Stress” warning -> User panics.

- Orthosomnia Parallels: We have already seen “orthosomnia,” where users develop insomnia because they are so obsessed with perfecting their sleep scores. “Algorithmic Anxiety” is the next iteration—users becoming hyper-aware of every minor physiological fluctuation, interpreting normal excitement as pathological stress.
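The feedback loop described above can be made concrete with a toy simulation. This is a sketch under loud assumptions, not a physiological model: the recovery rate, the alert threshold, and the size of the heart rate bump caused by an alert are invented numbers chosen only to show the dynamic.

```python
def simulate(steps, alerts_enabled, start_bpm=95.0, resting_bpm=70.0,
             recovery_rate=0.2, alert_threshold_bpm=85.0, alert_bump_bpm=6.0):
    """Toy model: heart rate drifts back toward rest unless alerts re-excite it.

    All parameters are illustrative assumptions, not measured values.
    Returns the heart rate history and the number of alerts sent.
    """
    hr = start_bpm
    alerts = 0
    history = []
    for _ in range(steps):
        # Natural recovery: close a fixed fraction of the gap to resting rate.
        hr -= recovery_rate * (hr - resting_bpm)
        # The watch sees an elevated reading and warns the user,
        # and the warning itself pushes the heart rate back up.
        if alerts_enabled and hr > alert_threshold_bpm:
            alerts += 1
            hr += alert_bump_bpm
        history.append(hr)
    return history, alerts


if __name__ == "__main__":
    with_alerts, n_alerts = simulate(steps=20, alerts_enabled=True)
    without_alerts, _ = simulate(steps=20, alerts_enabled=False)
    print(f"with alerts:    final {with_alerts[-1]:.1f} bpm after {n_alerts} alerts")
    print(f"without alerts: final {without_alerts[-1]:.1f} bpm")
```

With these made-up parameters, the user who is never alerted settles back toward the resting rate, while the alerted user stays above the threshold indefinitely because each warning outweighs the recovery between readings. A real product would presumably add cooldowns or alert budgets to break the cycle.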

4. The AI Factor: The Double-Edged Sword of Gemini

The January 2026 announcement that Google Gemini will power the next generation of Siri is central to this feature.

- Context Understanding: Old Siri could tell you that your heart rate is up. A Gemini-powered Siri could attempt to tell you why. It could analyze your emails, texts, and location to provide a narrative: “You seem stressed. It might be because of the three deadline emails you just received. Would you like to enable Focus Mode?”

- Dependence: This creates a dependency on the AI as a regulator of emotion, potentially atrophying our own internal ability to recognize and manage stress.

Market Winners & Losers (The Impact of Anxiety Tech)

| Entity | Status | Why? |
| --- | --- | --- |
| Apple (Winner) | Dominant | Deepens lock-in. Once the watch “knows” your feelings, switching to Android feels like losing a therapist. |
| Insurance Companies (Winner) | Opportunistic | New “Wellness” policies will offer discounts for low “Anxiety Scores,” effectively penalizing those with high stress. |
| Traditional Therapists (Loser) | Threatened | “AI Coaching” offers a free, 24/7 alternative to CBT (Cognitive Behavioral Therapy) for mild cases. |
| Users (Mixed) | Conflicted | Gain unparalleled insight but lose the ability to have a “private” emotional moment. |
| Competitors (Whoop/Oura) | Pressured | Must scramble to add generative AI features to their hardware-focused trackers. |

Expert Perspectives

The Privacy Advocate:

“The danger isn’t just that the data exists, but that it creates a digital profile of human vulnerability. If an algorithm knows you are anxious before you do, it creates an unprecedented window for manipulation. We are moving from the ‘Attention Economy’ to the ‘Emotion Economy’.”

— Dr. Elena Rosas, Digital Ethics Fellow, Institute for Algorithmic Justice

The Tech Optimist:

“For millions suffering in silence, this is a lifeline. A nudge to ‘breathe’ or ‘take a walk’ precisely when cortisol spikes can prevent panic attacks before they spiral. The privacy trade-off is real, but the preventative health benefit—saving the healthcare system billions in stress-related ailments—is worth it.”

— Marcus Thorne, Senior Analyst at Consumer Tech Insights

The Medical Skeptic:

“Anxiety is not just a high heart rate. It is a subjective, cognitive experience. Reducing it to a ‘score’ on a wrist is reductionist medicine. We risk pathologizing normal human emotions like excitement or grief.”

— Dr. Sarah Jenkins, Clinical Psychologist

Future Outlook: What Comes Next?

As we look toward the latter half of 2026, several trends will crystallize:

- iOS 27 & The “Health Agent”: Apple moved to year-based version names with iOS 26 in 2025, so the next major update would be iOS 27. Expect it to introduce a “Health Agent,” an AI persona that acts as a dedicated medical concierge, synthesizing data from the Watch, medical records, and daily activity.

- Corporate Wellness 2.0: By 2027, corporate wellness programs will likely subsidize these devices. However, this comes with a dark side: “Productivity Monitoring” disguised as “Stress Management.” Bosses may not see your heart rate, but they might see your aggregate “Resilience Score.”

- The Rise of “Bio-Privacy” Laws: The European Union is already debating extensions to GDPR that specifically cover “inferred biometric data.” The US may follow suit if insurance companies begin using anxiety data for underwriting.

Final Verdict

The Apple Watch X “Anxiety Algorithm” is an inevitable innovation. It fulfills the promise of the “Quantified Self.” But it also crosses a Rubicon. Until now, our devices observed our actions. Now, they are beginning to observe our feelings. The question is no longer “Can they do it?” but “Do we want them to?”